Generative Adversarial Networks (GANs) excel at producing highly realistic images by training two neural networks in opposition, enhancing output quality through continuous refinement. Variational Autoencoders (VAEs) offer a probabilistic approach, efficiently learning latent representations to generate new data with robust control over variations and smooth interpolation. Both models serve distinct purposes in AI, with GANs favoring sharper outputs and VAEs providing better latent space structures for generative tasks.

Table of Comparison

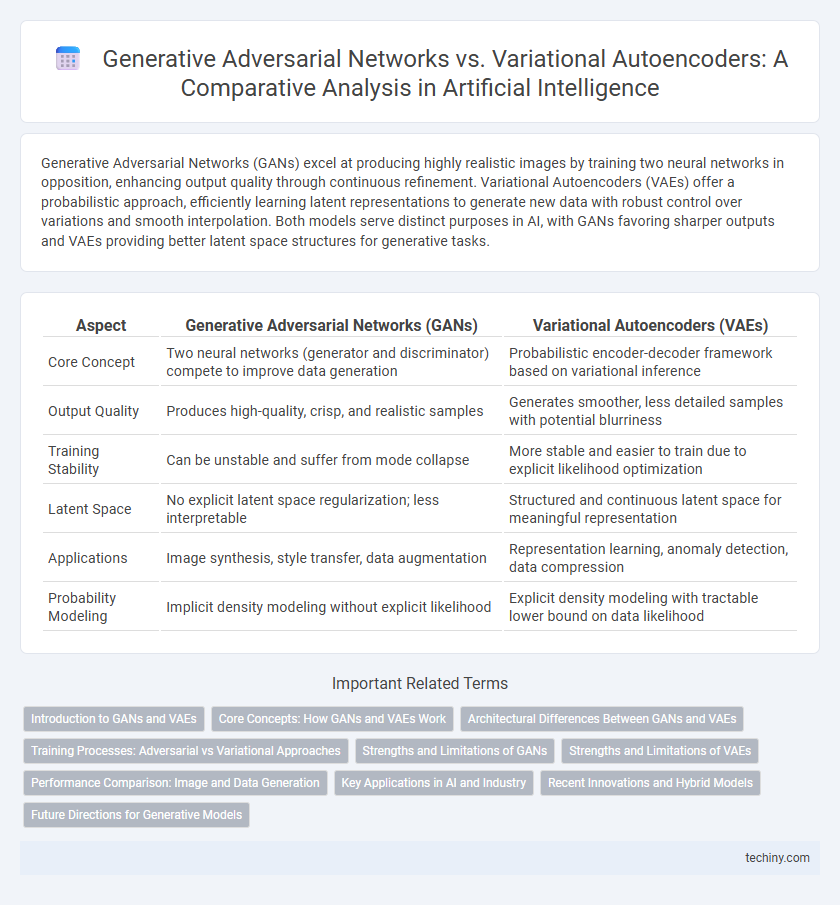

| Aspect | Generative Adversarial Networks (GANs) | Variational Autoencoders (VAEs) |

|---|---|---|

| Core Concept | Two neural networks (generator and discriminator) compete to improve data generation | Probabilistic encoder-decoder framework based on variational inference |

| Output Quality | Produces high-quality, crisp, and realistic samples | Generates smoother, less detailed samples with potential blurriness |

| Training Stability | Can be unstable and suffer from mode collapse | More stable and easier to train due to explicit likelihood optimization |

| Latent Space | No explicit latent space regularization; less interpretable | Structured and continuous latent space for meaningful representation |

| Applications | Image synthesis, style transfer, data augmentation | Representation learning, anomaly detection, data compression |

| Probability Modeling | Implicit density modeling without explicit likelihood | Explicit density modeling with tractable lower bound on data likelihood |

Introduction to GANs and VAEs

Generative Adversarial Networks (GANs) consist of two neural networks, the generator and the discriminator, competing to create realistic synthetic data by optimizing a minimax game. Variational Autoencoders (VAEs) use probabilistic inference to encode input data into a latent space and then decode it back to generate new samples with controlled variability. Both models excel in unsupervised learning scenarios but differ fundamentally in architecture and training objectives, with GANs focusing on adversarial training and VAEs on maximizing data likelihood.

Core Concepts: How GANs and VAEs Work

Generative Adversarial Networks (GANs) operate through a dual neural network system where a generator creates synthetic data and a discriminator evaluates its authenticity, engaging in a continuous adversarial process to improve generation quality. Variational Autoencoders (VAEs) encode input data into a probabilistic latent space and decode samples to reconstruct data, optimizing the balance between reconstruction accuracy and latent space regularization via a variational inference framework. The core difference lies in GANs' adversarial training yielding sharp, high-fidelity outputs, while VAEs emphasize structured, interpretable latent representations essential for probabilistic reasoning and controlled data generation.

Architectural Differences Between GANs and VAEs

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, engaged in a competitive training process where the generator creates realistic data while the discriminator evaluates authenticity, enabling high-quality synthetic data generation. Variational Autoencoders (VAEs) use a single encoder-decoder architecture, with the encoder mapping input data to a probabilistic latent space and the decoder reconstructing data from this latent distribution, emphasizing smooth interpolation and latent space representation. GANs typically produce sharper images due to adversarial loss, whereas VAEs offer better latent variable interpretability and stability during training because of their probabilistic framework.

Training Processes: Adversarial vs Variational Approaches

Generative Adversarial Networks (GANs) train through a competitive process where a generator creates data samples and a discriminator evaluates their authenticity, driving the generator to improve iteratively. Variational Autoencoders (VAEs) optimize a probabilistic lower bound on data likelihood by encoding inputs into a latent space and decoding them with a reconstruction loss combined with a regularization term. The adversarial process in GANs often achieves sharper outputs, while VAEs provide stable training through explicit variational inference, balancing reconstruction fidelity and latent space smoothness.

Strengths and Limitations of GANs

Generative Adversarial Networks (GANs) excel at producing highly realistic and high-resolution images by leveraging a competitive training process between the generator and discriminator. Their major limitation lies in training instability, mode collapse, and sensitivity to hyperparameter tuning, which can hinder convergence and model robustness. Despite these challenges, GANs remain a powerful tool for tasks demanding sharp and detailed synthetic data generation.

Strengths and Limitations of VAEs

Variational Autoencoders (VAEs) excel in learning smooth latent representations that facilitate interpolations and generate diverse outputs through probabilistic encoding. They provide efficient training and scalability, making them suitable for high-dimensional data such as images and speech. However, VAEs often produce blurrier outputs compared to Generative Adversarial Networks (GANs) due to their reliance on reconstruction loss and variational approximations, limiting the sharpness and detail in generated samples.

Performance Comparison: Image and Data Generation

Generative Adversarial Networks (GANs) generally produce higher-quality and more realistic images due to their adversarial training mechanism, which sharpens generated data through a discriminator's feedback. Variational Autoencoders (VAEs) offer smoother latent space interpolation and better representation learning but often result in blurrier outputs, making them less effective for photorealistic image generation. Performance benchmarks indicate GANs excel in image fidelity and fine details, while VAEs perform better in generating diverse data samples with meaningful latent encodings.

Key Applications in AI and Industry

Generative Adversarial Networks (GANs) excel in generating high-quality synthetic images, video synthesis, and data augmentation for training robust AI models, widely adopted in entertainment, fashion, and healthcare for realistic content creation. Variational Autoencoders (VAEs) are key in anomaly detection, drug discovery, and latent space representation, enabling industrial applications such as material design and predictive maintenance where interpretability and smooth data generation are crucial. Both models drive innovation in AI by enabling unsupervised learning and enhancing generative tasks across industries with distinct advantages in fidelity and representation.

Recent Innovations and Hybrid Models

Recent innovations in Generative Adversarial Networks (GANs) include improved architectures like StyleGAN3 and GANs with attention mechanisms, enhancing image synthesis quality and diversity. Variational Autoencoders (VAEs) have seen advancements in hierarchical models and discrete latent space adaptations, boosting their representational power and sample coherence. Hybrid models combining GANs and VAEs leverage the strengths of both frameworks, achieving better generative performance by balancing high-fidelity generation with structured latent representations.

Future Directions for Generative Models

Future directions for generative models emphasize enhancing the scalability and interpretability of Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) to better capture complex data distributions. Research focuses on hybrid architectures combining GANs' sharp sample generation with VAEs' latent space regularization to improve robustness and training stability. Incorporating advances in self-supervised learning and multimodal synthesis aims to expand applications in realistic image generation, natural language processing, and drug discovery.

Generative Adversarial Networks vs Variational Autoencoders Infographic

techiny.com

techiny.com