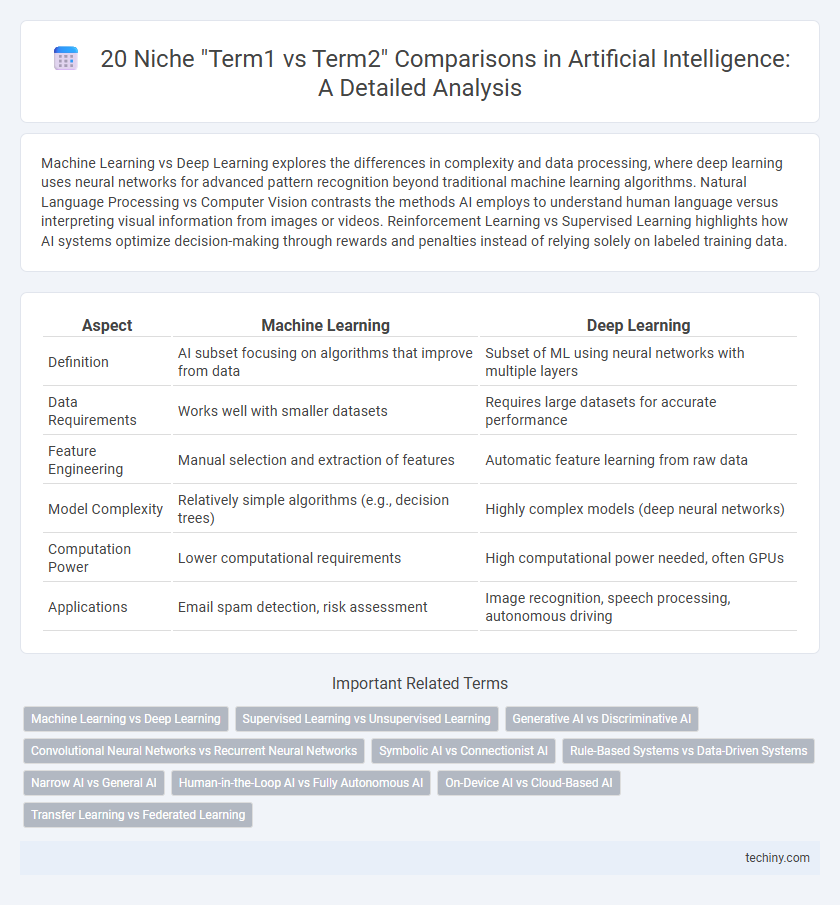

Machine learning and deep learning differ in model complexity and in how they process data: deep learning uses multi-layer neural networks to recognize patterns that traditional machine learning algorithms handle less well. Natural language processing and computer vision differ in what they interpret: the former works with human language, the latter with visual information from images or videos. Reinforcement learning and supervised learning differ in their training signal: reinforcement learning optimizes decisions through rewards and penalties, while supervised learning relies on labeled training data.

Table of Comparison

| Aspect | Machine Learning | Deep Learning |
|---|---|---|
| Definition | AI subset focusing on algorithms that improve from data | Subset of ML using neural networks with multiple layers |
| Data Requirements | Works well with smaller datasets | Requires large datasets for accurate performance |
| Feature Engineering | Manual selection and extraction of features | Automatic feature learning from raw data |
| Model Complexity | Relatively simple algorithms (e.g., decision trees) | Highly complex models (deep neural networks) |
| Computation Power | Lower computational requirements | High computational power needed, often GPUs |
| Applications | Email spam detection, risk assessment | Image recognition, speech processing, autonomous driving |

Machine Learning vs Deep Learning

Machine Learning involves algorithms that parse data, learn from it, and make decisions with minimal human intervention, while Deep Learning is a subset of Machine Learning utilizing neural networks with multiple layers to model complex patterns in large datasets. Deep Learning excels in handling unstructured data such as images, audio, and text through architectures like convolutional neural networks and recurrent neural networks. Machine Learning methods include decision trees, support vector machines, and clustering, which are typically less computationally intensive than Deep Learning models but may require more manual feature engineering.

Supervised Learning vs Unsupervised Learning

Supervised learning involves training AI models on labeled datasets, enabling accurate predictions by learning input-output mappings, whereas unsupervised learning identifies patterns and structures in unlabeled data without predefined categories. Common supervised algorithms include support vector machines and neural networks, while unsupervised methods feature clustering techniques like k-means and hierarchical clustering. Understanding the differences between these learning paradigms is crucial for selecting appropriate AI models based on data availability and problem requirements.
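The contrast can be sketched on a tiny one-dimensional dataset. The sketch below is illustrative only: `fit_centroids`, `predict`, and `kmeans_1d` are hypothetical helper names, the data is made up, and a nearest-centroid classifier stands in for the supervised algorithms named above. The supervised half is handed the labels; the k-means half sees only the raw points and has to find the two groups on its own.

```python
# Toy data (made up for illustration): two groups of 1-D measurements.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["low", "low", "low", "high", "high", "high"]


def fit_centroids(points, labels):
    """Supervised: labels are given, so learn one centroid per known class."""
    sums, counts = {}, {}
    for x, y in zip(points, labels):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def kmeans_1d(points, k, iterations=10):
    """Unsupervised: no labels, so k-means must discover the groups (k >= 2)."""
    lo, hi = min(points), max(points)
    # Start with k centers spread evenly between the smallest and largest point.
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for x in points:
            nearest = min(range(k), key=lambda i: abs(x - centers[i]))
            clusters[nearest].append(x)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers


centroids = fit_centroids(points, labels)   # uses the labels
centers = kmeans_1d(points, k=2)            # never sees the labels
```

Both halves end up with centers near 1 and 5, but only the supervised model knows that those groups are called "low" and "high".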

Generative AI vs Discriminative AI

Generative AI models, such as GANs and VAEs, learn the underlying data distribution so they can create new samples, while discriminative models such as logistic regression and SVMs classify data by modeling the decision boundary between classes. Generative models suit applications that need data synthesis or augmentation, producing realistic images, text, or audio. Discriminative models usually perform better on classification because they model the label given the input directly (logistic regression, for example, estimates the conditional probability p(y | x)) rather than modeling how the inputs themselves are distributed.
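A minimal sketch of the two modeling styles, using much simpler stand-ins than GANs or VAEs: a per-class Gaussian as the generative model and logistic regression as the discriminative one. The function names, the toy data, and the hyperparameters are all assumptions made for illustration. The point to notice is that only the generative model can produce new samples, because only it describes how the inputs are distributed.

```python
import math
import random


def fit_gaussians(xs, ys):
    """Generative: estimate p(x | y) as one Gaussian per class, plus the prior p(y)."""
    params = {}
    for label in set(ys):
        values = [x for x, y in zip(xs, ys) if y == label]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        params[label] = (mean, math.sqrt(var), len(values) / len(xs))
    return params


def sample(params, label, rng):
    """Draw a new synthetic x for the given class from its fitted Gaussian."""
    mean, std, _ = params[label]
    return rng.gauss(mean, std)


def generative_predict(params, x):
    """Classify with Bayes' rule: pick the class maximizing log p(x | y) + log p(y)."""
    def log_joint(label):
        mean, std, prior = params[label]
        return math.log(prior) - math.log(std) - 0.5 * ((x - mean) / std) ** 2
    return max(params, key=log_joint)


def fit_logistic(xs, ys, lr=0.1, epochs=500):
    """Discriminative: model p(y = 1 | x) directly with a sigmoid, via gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / len(xs)
        b -= lr * grad_b / len(xs)
    return w, b


def logistic_predict(w, b, x):
    """Predict class 1 when the linear score is positive (p > 0.5)."""
    return 1 if w * x + b > 0 else 0


# Toy, centered 1-D data (made up for illustration).
xs = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 1, 1, 1]

params = fit_gaussians(xs, ys)
new_point = sample(params, 1, random.Random(0))  # only the generative model can do this
w, b = fit_logistic(xs, ys)
```

Both models classify these points the same way; the difference is in what was learned along the way.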

Convolutional Neural Networks vs Recurrent Neural Networks

Convolutional Neural Networks (CNNs) excel at processing spatial data like images, using stacked convolutional layers to extract features hierarchically. Recurrent Neural Networks (RNNs) are designed for sequential data such as time series or natural language: recurrent connections carry a hidden state from one step to the next, which lets the network capture temporal dependencies. CNNs are preferred for tasks like image recognition and object detection, while RNNs are a common choice for speech recognition, language modeling, and sequence prediction.
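The core operation of each architecture fits in a few lines. This is a deliberately stripped-down sketch with scalar weights and no training; `conv1d` and `rnn_states` are hypothetical names, and the kernel and weights are picked by hand. The convolution applies one shared kernel at every position, while the recurrence feeds its own previous state back in.

```python
import math


def conv1d(signal, kernel):
    """Slide one shared kernel over the input (no padding, no kernel flipping)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def rnn_states(sequence, w_in, w_rec):
    """Carry a hidden state forward: h_t = tanh(w_in * x_t + w_rec * h_(t-1))."""
    h, states = 0.0, []
    for x in sequence:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


# A hand-picked edge-detecting kernel responds wherever the signal changes.
edges = conv1d([0, 0, 1, 1, 0, 0], [-1, 1])   # -> [0, 1, 0, -1, 0]

# A single early input keeps influencing later states through the recurrence.
states = rnn_states([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
```

In `edges`, the same two weights detect the rising and the falling edge regardless of position. In `states`, the later entries stay above zero even though the later inputs are zero, which is the "memory" that makes recurrence suited to sequences.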

Symbolic AI vs Connectionist AI

Symbolic AI emphasizes rule-based logic and explicit knowledge representation, relying on human-readable symbols and reasoning processes. Connectionist AI, embodied by neural networks, models learning through distributed processing and pattern recognition across interconnected nodes. While Symbolic AI excels in interpretable decision-making, Connectionist AI thrives in handling ambiguous data and adapting through training with large datasets.

Rule-Based Systems vs Data-Driven Systems

Rule-Based Systems in artificial intelligence rely on predefined logical rules and expert knowledge to make decisions, ensuring transparency and interpretability in outcomes. Data-Driven Systems utilize machine learning algorithms that learn patterns from large datasets, enabling adaptability and improved accuracy over time but often lacking explainability. The contrast highlights trade-offs between predictability and flexibility, with Rule-Based Systems excelling in controlled environments and Data-Driven Systems dominating in complex, data-rich scenarios.
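As a small illustration of that trade-off, consider flagging transactions by amount. Everything here is hypothetical: the `limit` of 1000, the toy data, and both function names. The rule-based version is fully transparent but only as good as the expert's chosen limit; the data-driven version derives its cut-off from labeled examples.

```python
def rule_based_flag(amount, limit=1000.0):
    """Hand-written rule: the limit is chosen by an expert, not learned."""
    return amount > limit


def learn_threshold(amounts, flagged):
    """Data-driven: pick the cut-off that misclassifies the fewest labeled examples."""
    best_cut, best_errors = None, len(amounts) + 1
    for cut in sorted(amounts):
        errors = sum((a > cut) != f for a, f in zip(amounts, flagged))
        if errors < best_errors:
            best_cut, best_errors = cut, errors
    return best_cut


# Toy labeled history (made up for illustration).
amounts = [120.0, 300.0, 450.0, 700.0, 900.0, 1500.0]
flagged = [False, False, False, True, True, True]

cut = learn_threshold(amounts, flagged)   # -> 450.0
```

On this data the fixed rule misses the 700 and 900 cases, while the learned cut-off of 450 separates the examples cleanly. The learned value will shift as the data shifts, which is both the adaptability and the reduced predictability described above.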

Narrow AI vs General AI

Narrow AI refers to artificial intelligence systems designed to perform specific tasks with high efficiency, such as image recognition or natural language processing, while General AI aims for human-level cognitive abilities across a wide range of activities. Narrow AI operates within predefined parameters and lacks true understanding or consciousness, whereas General AI seeks adaptability, reasoning, and problem-solving in unfamiliar scenarios. The distinction highlights current technological limitations, with Narrow AI dominating today's applications and General AI representing a long-term research goal for achieving versatile machine intelligence.

Human-in-the-Loop AI vs Fully Autonomous AI

Human-in-the-Loop AI integrates continuous human oversight and intervention to enhance decision accuracy and ethical compliance, especially in complex or high-stakes environments. Fully Autonomous AI operates independently without human input, relying on advanced machine learning algorithms for real-time decision-making, prioritizing speed and scalability. The trade-off between these approaches centers on balancing control and automation to optimize performance and accountability in AI-driven systems.
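One common way to implement this balance is confidence-based routing: the system acts on its own only when the model is confident enough, and otherwise defers to a person. The sketch below assumes a model that reports a confidence score between 0 and 1; `route_decision` and the 0.9 threshold are hypothetical choices, not a standard API.

```python
def route_decision(label, confidence, threshold=0.9):
    """Return the model's label when confident, otherwise defer to a reviewer."""
    if confidence >= threshold:
        return ("auto", label)
    return ("human_review", label)


confident = route_decision("approve", 0.95)                  # handled automatically
uncertain = route_decision("approve", 0.60)                  # sent to a person
autonomous = route_decision("approve", 0.60, threshold=0.0)  # never defers
```

Setting the threshold to 0.0 turns the same pipeline into a fully autonomous one, so the threshold is effectively the dial between control and automation.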

On-Device AI vs Cloud-Based AI

On-Device AI processes data locally on hardware like smartphones or IoT devices, which reduces latency and improves privacy because data does not have to travel over the network, and it keeps working without an internet connection. Cloud-Based AI leverages remote servers to provide scalable computing resources and extensive data storage, enabling complex model training and real-time updates across distributed systems. The choice between On-Device and Cloud-Based AI comes down to trade-offs among latency, data security, and computational power.
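A rough sketch of how such a choice might be made per request, assuming latency estimates in milliseconds are available. The function name, parameters, and the numbers in the examples are all hypothetical.

```python
def choose_backend(local_ms, remote_ms, round_trip_ms, online, data_is_sensitive):
    """Pick where to run inference from a latency estimate and two constraints."""
    # Offline devices and sensitive data both force local processing.
    if not online or data_is_sensitive:
        return "on_device"
    # Cloud inference pays for the network round trip on top of server compute.
    cloud_total = round_trip_ms + remote_ms
    return "on_device" if local_ms <= cloud_total else "cloud"


small_model = choose_backend(40, 5, 80, online=True, data_is_sensitive=False)
large_model = choose_backend(900, 30, 80, online=True, data_is_sensitive=False)
```

With these made-up numbers, the small model runs locally because the network round trip alone costs more than local compute, while the large model is faster in the cloud despite that round trip.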

Transfer Learning vs Federated Learning

Transfer Learning reuses models pre-trained on large datasets so they can adapt quickly to new, related tasks, improving efficiency and reducing data requirements. Federated Learning enables decentralized model training across multiple devices while preserving data privacy: local data stays on the device and only model updates are shared. The two approaches address different constraints: Transfer Learning tackles data scarcity by carrying knowledge from one task to another, whereas Federated Learning tackles privacy by training across distributed data sources without centralizing the data.
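Both ideas can be sketched with a one-parameter model. This is a toy under stated assumptions, not a production recipe: the federated half follows the general shape of federated averaging (clients train locally, the server averages weights by dataset size), the transfer half freezes a stand-in "pretrained" feature function and fits only a new output weight, and all names and data are made up.

```python
def local_update(w, data, lr=0.01, steps=20):
    """One client's gradient descent on its private (x, y) pairs for y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global, client_datasets):
    """Clients train locally; only their weights (not their data) reach the server."""
    updates = [(local_update(w_global, d), len(d)) for d in client_datasets]
    total = sum(n for _, n in updates)
    # Average the client weights, weighted by how many examples each client holds.
    return sum(w * n for w, n in updates) / total


def fine_tune_head(pretrained_features, data, lr=0.01, steps=200):
    """Transfer learning: keep the feature function frozen, fit only a new head."""
    head = 0.0
    for _ in range(steps):
        grad = sum(
            2 * (head * pretrained_features(x) - y) * pretrained_features(x)
            for x, y in data
        ) / len(data)
        head -= lr * grad
    return head


# Federated: two clients hold private samples of y = 3 * x.
clients = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]]
w = 0.0
for _ in range(10):
    w = federated_round(w, clients)       # w approaches 3.0

# Transfer: a frozen stand-in feature extractor, plus two examples of y = 2 * x**2.
head = fine_tune_head(lambda x: x * x, [(1.0, 2.0), (2.0, 8.0)])   # approaches 2.0
```

In the federated loop the raw `(x, y)` pairs never leave `local_update`; in the transfer example only `head` is trained, which is why two data points are enough.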


techiny.com
