Autoencoders compress data by learning a deterministic function mapping inputs to a lower-dimensional latent space and reconstructing the input with minimal error. Variational Autoencoders (VAEs) introduce a probabilistic approach, encoding inputs as distributions rather than fixed points, which enables generating new data samples by sampling from the latent space. The key distinction lies in VAEs optimizing a variational lower bound, fostering smooth latent space representations ideal for generative modeling, unlike traditional autoencoders that focus solely on reconstruction accuracy.

Table of Comparison

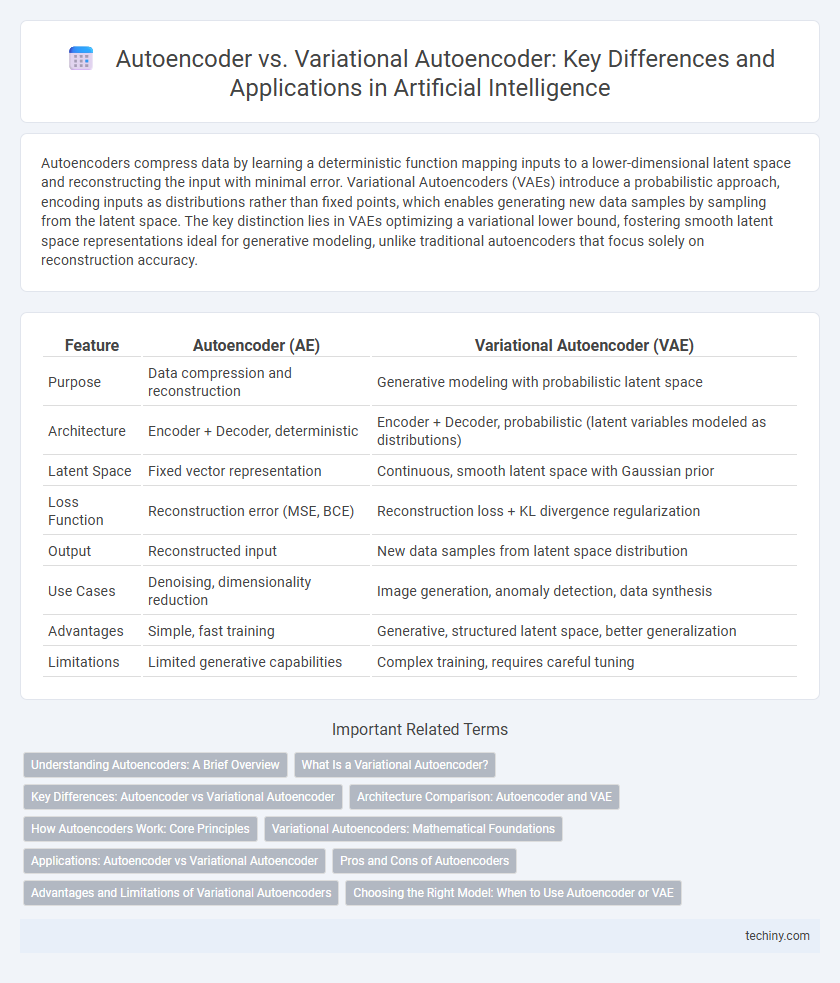

| Feature | Autoencoder (AE) | Variational Autoencoder (VAE) |

|---|---|---|

| Purpose | Data compression and reconstruction | Generative modeling with probabilistic latent space |

| Architecture | Encoder + Decoder, deterministic | Encoder + Decoder, probabilistic (latent variables modeled as distributions) |

| Latent Space | Fixed vector representation | Continuous, smooth latent space with Gaussian prior |

| Loss Function | Reconstruction error (MSE, BCE) | Reconstruction loss + KL divergence regularization |

| Output | Reconstructed input | New data samples from latent space distribution |

| Use Cases | Denoising, dimensionality reduction | Image generation, anomaly detection, data synthesis |

| Advantages | Simple, fast training | Generative, structured latent space, better generalization |

| Limitations | Limited generative capabilities | Complex training, requires careful tuning |

Understanding Autoencoders: A Brief Overview

Autoencoders are neural networks designed for unsupervised learning that compress input data into a latent space representation and then reconstruct the output with minimal loss. Variational Autoencoders (VAEs) extend this concept by learning probabilistic latent variables, enabling more efficient data generation and smooth interpolation between data points. Understanding the deterministic compression of standard autoencoders is essential before exploring the probabilistic framework of VAEs.

What Is a Variational Autoencoder?

A Variational Autoencoder (VAE) is a generative model that builds on traditional autoencoders by learning a probabilistic latent space, enabling it to generate new data samples by sampling from this space. Unlike standard autoencoders that compress data into fixed points, VAEs encode inputs as distributions, typically Gaussian, capturing uncertainty and variability in the latent representation. This probabilistic approach allows VAEs to perform tasks such as image generation, denoising, and complex data synthesis with improved generalization and robustness.

Key Differences: Autoencoder vs Variational Autoencoder

Autoencoders compress data by learning a deterministic latent space that minimizes reconstruction error, while Variational Autoencoders (VAEs) generate probabilistic latent representations, enabling sampling and generative modeling. VAEs optimize a loss function combining reconstruction loss with a Kullback-Leibler divergence term, promoting a structured latent space following a prior distribution. This key difference allows VAEs to produce diverse outputs and better generalize in generative tasks compared to traditional autoencoders.

Architecture Comparison: Autoencoder and VAE

Autoencoders consist of encoder and decoder networks that compress input data into a latent space and reconstruct it with minimal loss, relying on deterministic mappings. Variational Autoencoders employ probabilistic encodings by modeling the latent space as distributions, typically Gaussian, enabling generative capabilities and regularized latent representations through a KL divergence loss term. The architecture of VAEs differs by incorporating stochastic sampling layers and a reparameterization trick, enhancing data generation and smooth latent space interpolation compared to traditional autoencoders.

How Autoencoders Work: Core Principles

Autoencoders operate by encoding input data into a compressed latent representation and then decoding it to reconstruct the original input, minimizing reconstruction error through backpropagation. They consist of an encoder that captures essential features in a lower-dimensional space and a decoder that aims to recreate the input from this compact encoding. The training process optimizes neural network weights to ensure the output closely matches the input, enabling efficient dimensionality reduction and feature learning.

Variational Autoencoders: Mathematical Foundations

Variational Autoencoders (VAEs) leverage probabilistic graphical models and Bayesian inference to encode input data into a latent space characterized by a distribution rather than fixed points. The core mathematical foundation involves optimizing the Evidence Lower Bound (ELBO), balancing reconstruction loss and the Kullback-Leibler divergence between the approximate posterior and the prior distribution. This framework allows VAEs to generate new data samples by sampling from the learned latent distribution, providing a principled approach to unsupervised learning and generative modeling.

Applications: Autoencoder vs Variational Autoencoder

Autoencoders excel in applications such as dimensionality reduction, noise reduction, and feature extraction due to their ability to learn compressed representations of input data. Variational Autoencoders (VAEs) are preferred for generative tasks like image synthesis, anomaly detection, and semi-supervised learning because they model data distributions and generate new samples. Both techniques are crucial in AI-driven fields, but VAEs offer enhanced capabilities in probabilistic modeling and data generation compared to traditional autoencoders.

Pros and Cons of Autoencoders

Autoencoders excel at compact data representation and noise reduction by learning efficient latent embeddings through deterministic encoding-decoding processes. They lack probabilistic interpretation, limiting their ability to generate diverse outputs or model data uncertainty compared to Variational Autoencoders (VAEs). Autoencoders often face overfitting and poor generalization, making them less suitable for tasks requiring structured latent space or generative modeling.

Advantages and Limitations of Variational Autoencoders

Variational Autoencoders (VAEs) offer advantages such as generating diverse and smooth latent representations, enabling effective data synthesis and interpolation, which traditional autoencoders lack due to their deterministic nature. VAEs provide a probabilistic framework that captures data distributions more accurately, enhancing model generalization and robustness in tasks like image generation and anomaly detection. Limitations include challenges in balancing reconstruction quality and latent space regularization, often resulting in blurry outputs compared to GANs, and difficulties in scaling to complex, high-dimensional datasets without extensive architecture tuning.

Choosing the Right Model: When to Use Autoencoder or VAE

Autoencoders excel in deterministic data compression and feature extraction tasks where precise reconstruction is essential, such as image denoising or dimensionality reduction. Variational Autoencoders (VAEs) provide probabilistic latent representations, making them ideal for generative modeling, anomaly detection, and scenarios requiring data variability and interpolation. Selecting between Autoencoder and VAE depends on whether the goal prioritizes exact data reconstruction or capturing underlying data distributions for generation and uncertainty quantification.

Autoencoder vs Variational Autoencoder Infographic

techiny.com

techiny.com