Sentence embeddings capture the semantic meaning of individual sentences, enabling precise, fine-grained comparison. Document embeddings aggregate broader context, representing an entire document as one compact vector for tasks like topic modeling and document classification. The choice depends on the use case: sentence embeddings excel at fine-grained analysis, while document embeddings are better suited to representing content at scale.

Table of Comparison

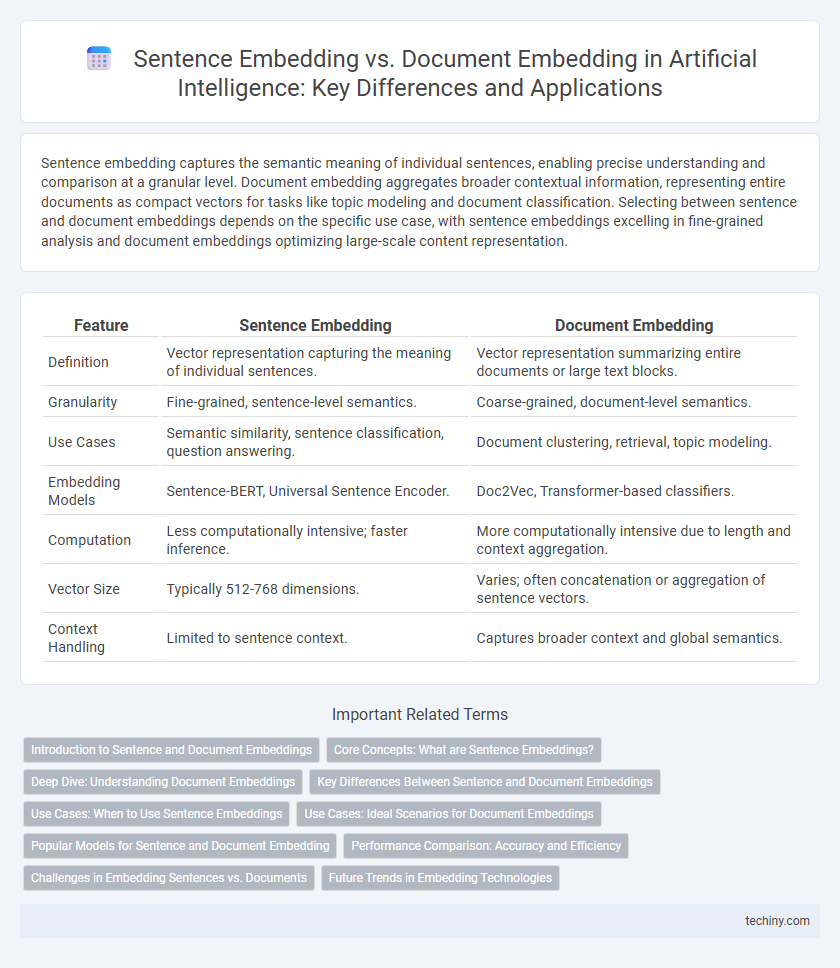

| Feature | Sentence Embedding | Document Embedding |
|---|---|---|
| Definition | Vector representation capturing the meaning of an individual sentence. | Vector representation summarizing an entire document or large block of text. |
| Granularity | Fine-grained, sentence-level semantics. | Coarse-grained, document-level semantics. |
| Use Cases | Semantic similarity, sentence classification, question answering. | Document clustering, retrieval, topic modeling. |
| Embedding Models | Sentence-BERT, Universal Sentence Encoder. | Doc2Vec, long-context transformers (e.g., Longformer), pooled sentence or chunk embeddings. |
| Computation | Less computationally intensive per input; faster inference. | More computationally intensive because of longer inputs and context aggregation. |
| Vector Size | Fixed per model; commonly 384 to 1,024 dimensions (e.g., 512 for Universal Sentence Encoder, 768 for BERT-base models). | Fixed per model; Doc2Vec's size is a training hyperparameter, and pooled sentence vectors keep the sentence model's dimension. |
| Context Handling | Limited to the context within a single sentence. | Captures broader context and global semantics. |

Introduction to Sentence and Document Embeddings

Sentence embeddings transform individual sentences into dense vectors that capture semantic meaning, for tasks like sentiment analysis and semantic search. Document embeddings extend this idea by representing entire documents as fixed-length vectors, enabling efficient comparison and retrieval of longer texts. Both are typically produced by neural models, ranging from shallow word2vec-style networks (Doc2Vec extends word2vec to whole documents) to transformers, which encode contextual information and semantic relationships within text.

Core Concepts: What are Sentence Embeddings?

Sentence embeddings are dense, fixed-length vectors that capture the semantic meaning of individual sentences by encoding contextual information and relationships between words. They let machines work with sentence-level nuances, supporting tasks like semantic similarity, sentiment analysis, and mining parallel sentences for machine translation. Unlike document embeddings, which summarize entire texts, sentence embeddings focus on fine-grained meaning at the sentence scale, which makes them the better fit for sentence-centric natural language processing applications.
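To make the idea concrete, here is a minimal Python sketch of one classic baseline: averaging word vectors into a sentence vector and comparing sentences with cosine similarity. The three-dimensional `WORD_VECS` table and the helper names `embed_sentence` and `cosine` are hypothetical illustrations; models such as Sentence-BERT learn far richer vectors with hundreds of dimensions, so treat this as a toy under those assumptions rather than how any particular library works.

```python
import math

# Hypothetical 3-dimensional word vectors (axes loosely: animals, finance, weather).
# Real encoders learn hundreds of dimensions from data; these numbers are made up.
WORD_VECS = {
    "cat": [1.0, 0.0, 0.0], "dog": [0.9, 0.1, 0.0], "pet": [0.8, 0.0, 0.1],
    "stock": [0.0, 1.0, 0.0], "market": [0.1, 0.9, 0.0], "price": [0.0, 0.8, 0.1],
    "rain": [0.0, 0.0, 1.0], "storm": [0.0, 0.1, 0.9],
}
DIM = 3


def embed_sentence(sentence: str) -> list[float]:
    """Toy sentence embedding: mean of the known word vectors (unknown words are skipped)."""
    vecs = [WORD_VECS[w] for w in sentence.lower().split() if w in WORD_VECS]
    if not vecs:
        return [0.0] * DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


s1 = embed_sentence("my cat chased the dog")
s2 = embed_sentence("a pet dog sleeps")
s3 = embed_sentence("the stock market fell")
print(f"{cosine(s1, s2):.3f}")  # close in meaning: high score
print(f"{cosine(s1, s3):.3f}")  # different topic: low score
```

Averaging ignores word order, which is one reason learned encoders such as Sentence-BERT generally do better than this baseline.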

Deep Dive: Understanding Document Embeddings

Document embeddings capture the semantic meaning of an entire text. Some approaches build them by pooling sentence or token embeddings from a transformer such as BERT; others, such as Doc2Vec, learn a dedicated vector for each document during training. Either way, the result supports tasks such as document classification and information retrieval. Unlike sentence embeddings, which represent individual sentences, document embeddings encapsulate the broader themes, topics, and relationships within a full document. More advanced techniques use transformers with attention mechanisms, sometimes arranged hierarchically (sentences first, then the document), to preserve contextual nuance and document structure in the resulting representation.
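The pooling route can be sketched in a few lines. Assuming a hypothetical word-vector table as a toy sentence encoder, the sketch below splits a document on periods, embeds each sentence, and mean-pools the results. Real systems would use a proper sentence splitter and a learned encoder, and Doc2Vec works differently, learning document vectors directly during training.

```python
# Hypothetical 3-dimensional word vectors (axes loosely: animals, finance, weather).
WORD_VECS = {
    "cat": [1.0, 0.0, 0.0], "dog": [0.9, 0.1, 0.0], "pet": [0.8, 0.0, 0.1],
    "stock": [0.0, 1.0, 0.0], "market": [0.1, 0.9, 0.0], "price": [0.0, 0.8, 0.1],
    "rain": [0.0, 0.0, 1.0], "storm": [0.0, 0.1, 0.9],
}
DIM = 3


def mean(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean; a zero vector if the list is empty."""
    if not vectors:
        return [0.0] * DIM
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def embed_sentence(sentence: str) -> list[float]:
    """Toy sentence embedding: mean of the known word vectors."""
    return mean([WORD_VECS[w] for w in sentence.lower().split() if w in WORD_VECS])


def embed_document(text: str) -> list[float]:
    """Toy document embedding: embed each sentence (naive split on periods), then mean-pool.

    A sentence with no known words contributes a zero vector and pulls the mean toward 0.
    """
    return mean([embed_sentence(s) for s in text.split(".") if s.strip()])


doc = "The cat slept. Rain and storm outside. The dog barked."
doc_vec = embed_document(doc)
print(len(doc_vec), [round(x, 3) for x in doc_vec])  # 3 [0.633, 0.05, 0.317]
```

The document vector has the same dimension as the sentence vectors, and each axis reflects how strongly that topic appears across the whole text.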

Key Differences Between Sentence and Document Embeddings

Sentence embeddings capture the semantic meaning of individual sentences by encoding fine-grained contextual information, while document embeddings aggregate broader context, capturing themes and topics across multiple sentences or paragraphs. Sentence embeddings typically come from models such as Sentence-BERT or the Universal Sentence Encoder, which are optimized for short texts, whereas document embeddings rely on methods such as Doc2Vec or hierarchical transformers that can represent longer text structures. The key difference lies in granularity and scope: sentence embeddings excel at tasks requiring precise semantic similarity, whereas document embeddings are better suited to topic modeling and document classification.

Use Cases: When to Use Sentence Embeddings

Sentence embeddings excel at capturing the semantic meaning of short text fragments, making them a good fit for tasks like sentiment analysis, intent detection, and question answering, where precise understanding of individual sentences is crucial. They enable efficient retrieval in semantic search engines and help chatbots interpret user inputs at the sentence level. Use sentence embeddings when the application demands fine-grained analysis of brief textual units rather than comprehension of a full document.
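A sentence-level semantic search can be sketched as: embed the query, embed each candidate sentence, and rank by cosine similarity. The word vectors, helper names, and the tiny `corpus` below are hypothetical; a production system would precompute and index the corpus embeddings rather than re-encoding them on every query.

```python
import math

# Hypothetical 3-dimensional word vectors (axes loosely: animals, finance, weather).
WORD_VECS = {
    "cat": [1.0, 0.0, 0.0], "dog": [0.9, 0.1, 0.0], "pet": [0.8, 0.0, 0.1],
    "stock": [0.0, 1.0, 0.0], "market": [0.1, 0.9, 0.0], "price": [0.0, 0.8, 0.1],
    "rain": [0.0, 0.0, 1.0], "storm": [0.0, 0.1, 0.9],
}
DIM = 3


def embed_sentence(sentence: str) -> list[float]:
    """Toy sentence embedding: mean of the known word vectors."""
    vecs = [WORD_VECS[w] for w in sentence.lower().split() if w in WORD_VECS]
    if not vecs:
        return [0.0] * DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: str, sentences: list[str], top_k: int = 1) -> list[tuple[float, str]]:
    """Rank candidate sentences by cosine similarity to the query embedding."""
    q = embed_sentence(query)
    scored = [(cosine(q, embed_sentence(s)), s) for s in sentences]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


corpus = ["the dog needs a walk", "stock price rose again", "heavy rain expected tonight"]
for score, sentence in search("market price", corpus, top_k=2):
    print(f"{score:.3f}  {sentence}")
```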

Use Cases: Ideal Scenarios for Document Embeddings

Document embeddings excel in scenarios that require understanding entire texts, such as legal document analysis, scientific research summarization, and customer feedback aggregation. They capture contextual and thematic elements across multiple sentences, improving performance in tasks like document classification, topic modeling, and information retrieval. Compared with sentence embeddings, they provide a richer representation of long-form content for complex natural language processing applications.
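Document classification with embeddings can be as simple as a nearest-centroid rule: average the document vectors for each label, then assign a new document to the label whose centroid is most similar. The sketch below assumes toy word vectors, mean-pooled document vectors, and a hypothetical two-label training set. Nearest-centroid is just one simple choice; in practice a trained classifier (for example, logistic regression) is often placed on top of the embeddings instead.

```python
import math

# Hypothetical 3-dimensional word vectors (axes loosely: animals, finance, weather).
WORD_VECS = {
    "cat": [1.0, 0.0, 0.0], "dog": [0.9, 0.1, 0.0], "pet": [0.8, 0.0, 0.1],
    "stock": [0.0, 1.0, 0.0], "market": [0.1, 0.9, 0.0], "price": [0.0, 0.8, 0.1],
    "rain": [0.0, 0.0, 1.0], "storm": [0.0, 0.1, 0.9],
}
DIM = 3


def mean(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean; a zero vector if the list is empty."""
    if not vectors:
        return [0.0] * DIM
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def embed_sentence(sentence: str) -> list[float]:
    """Toy sentence embedding: mean of the known word vectors."""
    return mean([WORD_VECS[w] for w in sentence.lower().split() if w in WORD_VECS])


def embed_document(text: str) -> list[float]:
    """Toy document embedding: mean-pool the sentence vectors (naive split on periods)."""
    return mean([embed_sentence(s) for s in text.split(".") if s.strip()])


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical labelled training documents.
TRAINING = {
    "pets": ["The cat slept. The dog barked.", "Our pet dog is friendly."],
    "finance": ["The stock market fell. Price targets were cut.", "Market price data."],
}
# One centroid (mean document vector) per label.
CENTROIDS = {label: mean([embed_document(d) for d in docs]) for label, docs in TRAINING.items()}


def classify(text: str) -> str:
    """Return the label whose centroid is most cosine-similar to the document vector."""
    doc_vec = embed_document(text)
    return max(CENTROIDS, key=lambda label: cosine(doc_vec, CENTROIDS[label]))


print(classify("A dog chased the cat. Then the cat hid."))  # pets
print(classify("Stock price news. The market rallied."))    # finance
```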

Popular Models for Sentence and Document Embedding

Popular sentence embedding models include Sentence-BERT (SBERT), which fine-tunes BERT-style transformers to produce sentence vectors, and the Universal Sentence Encoder (USE), which is available in transformer and deep averaging network (DAN) variants. For longer texts, Doc2Vec learns a vector per document during training, while Longformer uses sparse attention (a sliding window plus global attention) to handle extended context in a single pass. Transformer-based encoders such as SBERT and Longformer build on pretrained language models such as BERT and RoBERTa, and all of these models support tasks like semantic search, text classification, and summarization.
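Encoders with a fixed input limit (BERT-base, for example, accepts at most 512 tokens) are commonly applied to long documents by splitting them into overlapping windows, encoding each window, and pooling the results. This sketch assumes whitespace-style tokens and a caller-supplied `encode` function; `chunk_tokens`, `embed_long_document`, and the `toy_encode` stand-in are hypothetical names, and here `step` is the distance between window starts (not the overlap).

```python
from typing import Callable, Sequence


def chunk_tokens(tokens: Sequence[str], max_len: int = 512, step: int = 384) -> list[list[str]]:
    """Split tokens into windows of at most max_len tokens.

    Window starts are `step` tokens apart, so neighbours overlap by max_len - step tokens.
    """
    if max_len <= 0 or not 0 < step <= max_len:
        raise ValueError("need max_len > 0 and 0 < step <= max_len")
    chunks = []
    start = 0
    while start < len(tokens):
        chunks.append(list(tokens[start:start + max_len]))
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
        start += step
    return chunks


def embed_long_document(
    tokens: Sequence[str],
    encode: Callable[[list[str]], list[float]],
    max_len: int = 512,
    step: int = 384,
) -> list[float]:
    """Encode each window separately, then mean-pool the window vectors."""
    vecs = [encode(chunk) for chunk in chunk_tokens(tokens, max_len, step)]
    if not vecs:
        raise ValueError("empty document")
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def toy_encode(chunk: list[str]) -> list[float]:
    """Hypothetical stand-in for a real encoder: share of 'cat' and 'stock' tokens."""
    return [chunk.count("cat") / len(chunk), chunk.count("stock") / len(chunk)]


tokens = ["cat"] * 600 + ["stock"] * 400  # a 1,000-token toy "document"
print([len(c) for c in chunk_tokens(tokens)])  # [512, 512, 232]
print(embed_long_document(tokens, toy_encode))
```

Tokens in overlapping regions appear in two windows and therefore carry slightly more weight in the pooled vector; long-context models such as Longformer can avoid chunking for documents that fit within their larger input limit.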

Performance Comparison: Accuracy and Efficiency

Sentence embeddings capture fine-grained semantic nuance, which tends to yield higher precision when a query targets a specific statement, as in question answering or sentence-level sentiment analysis. Document embeddings are more efficient at scale: storing one vector per document instead of one per sentence shrinks the index and reduces the number of comparisons per query in large-scale information retrieval. The exact trade-off depends on the model, task, and benchmark, but the general pattern is higher precision for sentence-level indexes and lower processing and storage cost for document-level ones.
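The efficiency side of this trade-off is simple arithmetic. Assuming a hypothetical corpus of one million documents averaging 30 sentences each and 768-dimensional float32 vectors, a flat (uncompressed, brute-force) index compares as follows; `index_bytes` is a hypothetical helper, and all figures are illustrative rather than measured.

```python
def index_bytes(num_vectors: int, dim: int, bytes_per_value: int = 4) -> int:
    """Raw storage for a flat index of float32 vectors (no compression or metadata)."""
    return num_vectors * dim * bytes_per_value


NUM_DOCS = 1_000_000       # hypothetical corpus size
SENTENCES_PER_DOC = 30     # hypothetical average
DIM = 768                  # e.g. a BERT-base-sized encoder

levels = {
    "sentence-level": NUM_DOCS * SENTENCES_PER_DOC,
    "document-level": NUM_DOCS,
}
for name, n in levels.items():
    # A brute-force search computes one similarity per stored vector.
    print(f"{name}: {n:,} vectors, {index_bytes(n, DIM) / 1e9:.2f} GB, {n:,} comparisons per query")
# sentence-level: 30,000,000 vectors, 92.16 GB, 30,000,000 comparisons per query
# document-level: 1,000,000 vectors, 3.07 GB, 1,000,000 comparisons per query
```

Approximate nearest-neighbor indexes and vector compression reduce both figures in practice, but storage still scales with the number of vectors, so the sentence-level index remains roughly 30 times larger here.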

Challenges in Embedding Sentences vs. Documents

Sentence embedding presents challenges in capturing nuanced context and polysemy due to limited textual information, making semantic representation less robust compared to document embedding. Document embedding benefits from extensive contextual data, enabling more comprehensive semantic understanding, but it struggles with increased computational complexity and the risk of diluting key information through noise. Balancing granularity and context remains critical for enhancing performance in tasks like semantic search and natural language understanding.
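The dilution risk can be illustrated with toy numbers. In the sketch below (hypothetical word vectors, naive period-based sentence splitting, mean pooling), a query matches one sentence of a three-topic document almost perfectly, but its similarity to the mean-pooled document vector is much lower because the unrelated sentences pull the average away.

```python
import math

# Hypothetical 3-dimensional word vectors (axes loosely: animals, finance, weather).
WORD_VECS = {
    "cat": [1.0, 0.0, 0.0], "dog": [0.9, 0.1, 0.0], "pet": [0.8, 0.0, 0.1],
    "stock": [0.0, 1.0, 0.0], "market": [0.1, 0.9, 0.0], "price": [0.0, 0.8, 0.1],
    "rain": [0.0, 0.0, 1.0], "storm": [0.0, 0.1, 0.9],
}
DIM = 3


def mean(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean; a zero vector if the list is empty."""
    if not vectors:
        return [0.0] * DIM
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def embed_sentence(sentence: str) -> list[float]:
    """Toy sentence embedding: mean of the known word vectors."""
    return mean([WORD_VECS[w] for w in sentence.lower().split() if w in WORD_VECS])


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


doc = "The stock market fell. The cat slept. Rain and storm outside."
sent_vecs = [embed_sentence(s) for s in doc.split(".") if s.strip()]
doc_vec = mean(sent_vecs)  # mean pooling mixes all three topics

query = embed_sentence("stock price")
best_sentence = max(cosine(query, v) for v in sent_vecs)
whole_doc = cosine(query, doc_vec)
print(f"best single sentence: {best_sentence:.3f}")
print(f"mean-pooled document: {whole_doc:.3f}")
```

Scoring a query against each sentence and taking the maximum, or splitting documents into smaller chunks before embedding, is one common way to balance granularity against context.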

Future Trends in Embedding Technologies

Future trends in embedding technologies emphasize the integration of multimodal data to enhance context understanding, enabling more accurate sentence and document embeddings. Advances in transformer architectures and self-supervised learning are driving improvements in embedding quality, scalability, and efficiency for diverse AI applications. Emerging models aim to dynamically adapt embeddings based on user intent and domain specificity, pushing the boundaries of semantic representation in natural language processing.

Sentence Embedding vs Document Embedding Infographic

techiny.com