Convolutional Neural Networks (CNNs) excel at processing spatial data such as images by capturing local patterns through convolutional layers, making them ideal for tasks like image recognition and classification. Recurrent Neural Networks (RNNs) specialize in sequential data analysis by maintaining temporal dependencies through looping connections, which is essential for language modeling and time-series prediction. While CNNs prioritize spatial feature extraction, RNNs handle sequence information, and choosing between them depends on the nature of the input data and the specific application requirements.

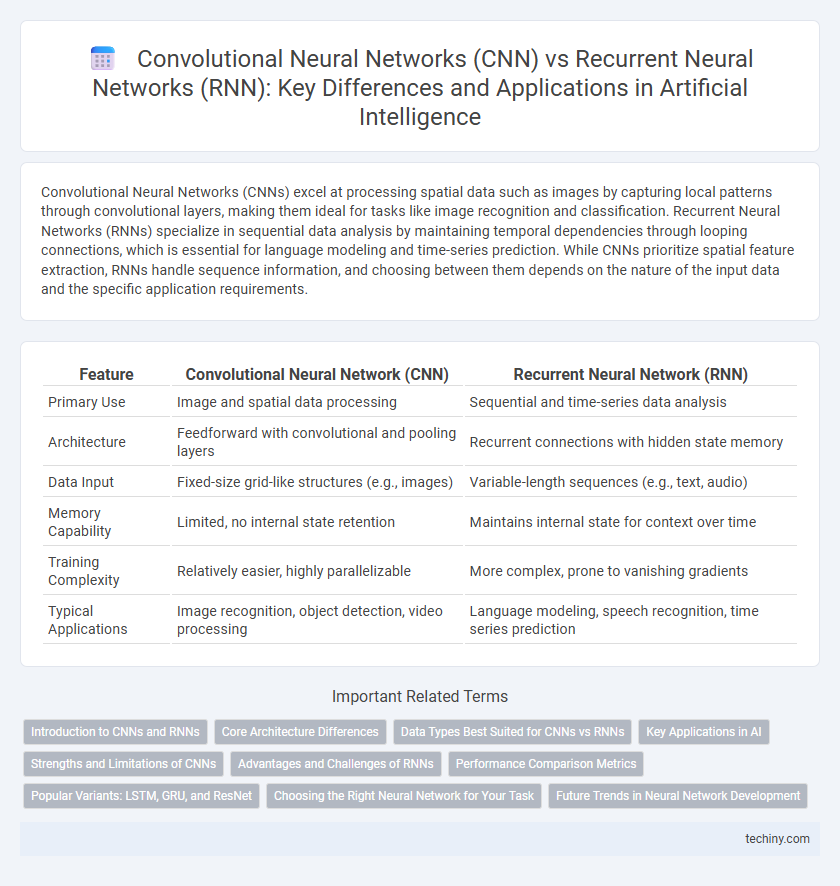

Table of Comparison

| Feature | Convolutional Neural Network (CNN) | Recurrent Neural Network (RNN) |
|---|---|---|
| Primary Use | Image and spatial data processing | Sequential and time-series data analysis |
| Architecture | Feedforward with convolutional and pooling layers | Recurrent connections with hidden state memory |
| Data Input | Fixed-size grid-like structures (e.g., images) | Variable-length sequences (e.g., text, audio) |
| Memory Capability | Limited, no internal state retention | Maintains internal state for context over time |
| Training Complexity | Relatively easier, highly parallelizable | More complex, prone to vanishing gradients |
| Typical Applications | Image recognition, object detection, video processing | Language modeling, speech recognition, time series prediction |

Introduction to CNNs and RNNs

Convolutional Neural Networks (CNNs) excel at processing grid-like data structures such as images by utilizing convolutional layers to capture spatial hierarchies and patterns. Recurrent Neural Networks (RNNs) specialize in sequential data analysis by maintaining hidden states that carry information across time steps, making them ideal for tasks like language modeling and time series prediction. Both CNNs and RNNs are fundamental architectures in deep learning, enabling advances in computer vision and natural language processing respectively.

Core Architecture Differences

Convolutional Neural Networks (CNNs) build spatial hierarchies of features: each convolutional layer slides fixed-size filters over its input to detect local patterns, and deeper layers combine those patterns into larger structures, which suits image and spatial data processing. Recurrent Neural Networks (RNNs) incorporate sequential information by updating a hidden state at every time step and feeding it back into the next step, making them suitable for time series, language, and sequence modeling tasks. CNNs rely on layer-wise feature extraction with spatial locality, while RNNs emphasize temporal dependency through recurrent connections, so the two core architectures are optimized for distinct data structures.
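
The contrast can be illustrated with a minimal sketch in plain Python. It is a one-dimensional, scalar toy rather than a real layer; the function names `conv1d_valid` and `rnn_forward`, the weights, and the sample inputs are all assumptions made for illustration.

```python
import math

def conv1d_valid(signal, kernel):
    """Slide one fixed-size filter across the input (no padding).

    The same kernel weights are reused at every position, which is the
    weight sharing that gives convolution its spatial locality.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def rnn_forward(inputs, w_in, w_rec, h0=0.0):
    """Scalar recurrent cell: h_t = tanh(w_in * x_t + w_rec * h_{t-1})."""
    h = h0
    states = []
    for x in inputs:
        # The previous hidden state feeds back into the current update.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

# Hypothetical inputs: a step pattern for the filter, an impulse for the cell.
edges = conv1d_valid([0, 0, 1, 1, 1, 0], [-1, 1])
states = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
```

In this toy, the filter responds only where the signal changes, regardless of position, while the recurrent state stays nonzero after the input has returned to zero because it still carries the earlier impulse.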

Data Types Best Suited for CNNs vs RNNs

Convolutional Neural Networks (CNNs) excel in processing spatial data such as images and videos by capturing local patterns through convolutional layers. Recurrent Neural Networks (RNNs) are tailored for sequential data like time series, natural language, and speech, leveraging their internal memory to model temporal dependencies. CNNs are optimal for structured grid-like data, while RNNs are best suited for ordered sequences with temporal context.

Key Applications in AI

Convolutional Neural Networks (CNNs) excel in image recognition, object detection, and other computer vision tasks by effectively capturing spatial hierarchies in visual data. Recurrent Neural Networks (RNNs), particularly those built from Long Short-Term Memory (LSTM) units, are well suited to sequence tasks such as natural language processing, speech recognition, and time-series prediction. Both architectures serve crucial roles in AI, with CNNs dominating visual data interpretation and RNNs powering language understanding and temporal sequence modeling.

Strengths and Limitations of CNNs

Convolutional Neural Networks (CNNs) excel in spatial data processing, making them ideal for image recognition and classification due to their ability to detect hierarchical patterns through convolutional layers. Their strengths include translation equivariance (a shifted input produces a correspondingly shifted feature map, and pooling adds approximate invariance to small shifts) and a reduced parameter count via weight sharing, which enhances computational efficiency for grid-like data. However, CNNs have no internal state that carries across time steps, which limits their handling of sequential or temporal dependencies and makes them less suitable than Recurrent Neural Networks (RNNs) for tasks involving time-series data or natural language processing.
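
The effect of weight sharing on parameter count can be checked with simple arithmetic. The sketch below assumes a hypothetical 32x32 RGB input mapped to 16 feature maps of the same spatial size; the helper names `dense_params` and `conv2d_params` and the layer sizes are illustrative assumptions, not figures from any particular model.

```python
def dense_params(n_in, n_out):
    """Fully connected layer: one weight per input-output pair, plus biases."""
    return n_in * n_out + n_out

def conv2d_params(kernel_h, kernel_w, c_in, c_out):
    """Convolutional layer: one small filter per (input, output) channel pair,
    reused at every spatial position, plus one bias per output channel."""
    return kernel_h * kernel_w * c_in * c_out + c_out

# Hypothetical sizes: 32x32 RGB input, 16 output maps of 32x32 each.
dense = dense_params(32 * 32 * 3, 32 * 32 * 16)
conv = conv2d_params(3, 3, 3, 16)

print(f"dense layer: {dense:,} parameters")
print(f"3x3 conv layer: {conv:,} parameters")
```

Under these assumed sizes the dense layer needs about 50 million parameters while the 3x3 convolution needs a few hundred, because the convolution's parameter count does not depend on the image width or height.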

Advantages and Challenges of RNNs

Recurrent Neural Networks (RNNs) excel at processing sequential data such as time series, natural language, and speech due to their ability to maintain temporal dependencies through internal memory states. However, RNNs face vanishing and exploding gradient problems during training: the gradient is multiplied by a similar factor at every time step, so over long sequences it can shrink toward zero or grow without bound, which limits how well long-range dependencies are learned. Advanced variants like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) mitigate the vanishing gradient issue through gating, improving performance in tasks like language modeling and sequence prediction.
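
A rough numeric sketch shows why repeated multiplication is the problem. It assumes the simplest possible case, a linear scalar recurrence h_t = w_rec * h_{t-1}, for which the gradient of the final state with respect to the initial state is w_rec raised to the number of steps; the function name and the weights 0.5 and 1.5 are chosen only for illustration.

```python
def gradient_through_time(w_rec, steps):
    """Magnitude of d h_T / d h_0 for the linear recurrence h_t = w_rec * h_{t-1}.

    Each time step contributes one factor of w_rec to the chain rule product.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w_rec
    return abs(grad)

# Assumed weights: one below 1 (gradient vanishes), one above 1 (gradient explodes).
vanishing = gradient_through_time(0.5, 50)
exploding = gradient_through_time(1.5, 50)

print(f"|w| = 0.5 over 50 steps: {vanishing:.3e}")
print(f"|w| = 1.5 over 50 steps: {exploding:.3e}")
```

Real RNNs use weight matrices and nonlinearities, so the behavior depends on more than a single number, but the same compounding over time steps is at work.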

Performance Comparison Metrics

Convolutional Neural Networks (CNNs) excel in image and spatial data processing and often reach higher accuracy on visual tasks than Recurrent Neural Networks (RNNs). They also tend to train faster, because convolutions can be computed in parallel across positions whereas an RNN must process its time steps in order. RNNs, designed for sequential data, perform better in tasks requiring context retention over time, such as language modeling or time series prediction, but vanishing gradients can impair their learning of long-term dependencies. Performance metrics like accuracy, F1-score, computational complexity, and training time vary significantly based on the data type and task, with CNNs generally outperforming RNNs in static pattern recognition and RNNs being superior for temporal sequence analysis.
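
For reference, accuracy and F1-score can be computed from predictions as in the sketch below. The helpers `accuracy` and `f1_score` are written here for illustration and are not taken from any library, and the label lists are made-up data rather than results from a real model.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # No true positives: precision or recall is zero or undefined.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Made-up binary labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]

acc = accuracy(y_true, y_pred)
f1 = f1_score(y_true, y_pred)
```

Accuracy counts all correct predictions equally, while F1 focuses on the positive class, so the two can diverge sharply on imbalanced data.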

Popular Variants: LSTM, GRU, and ResNet

Convolutional Neural Networks (CNNs) excel in spatial data processing, with ResNet as a popular variant known for its deep residual learning: skip connections add each block's input to its output, which mitigates vanishing gradient issues in very deep networks. Recurrent Neural Networks (RNNs) are designed for sequential data, with Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) being prominent variants that address the vanishing gradient problem through gating mechanisms that control how much of the previous state is kept at each step. These variants are widely used, ResNet in image recognition and LSTM and GRU in natural language processing.
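
Both ideas reduce to small formulas, sketched below for scalars. This is a simplification under stated assumptions: `gated_update` shows only a GRU-style update gate using the convention h = (1 - z) * h_prev + z * candidate (some references and libraries swap the roles of z and 1 - z), and `residual_block` shows only the skip connection, not a full ResNet block. All names and values are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gated_update(h_prev, candidate, gate_logit):
    """Blend the old state and a candidate state with a gate z in (0, 1).

    z near 0 keeps the old state almost unchanged, so information (and
    gradient) can pass through many steps; z near 1 overwrites it.
    """
    z = sigmoid(gate_logit)
    return (1.0 - z) * h_prev + z * candidate

def residual_block(x, f):
    """Skip connection: the input is added back to the transformed output."""
    return x + f(x)

# Assumed values: a nearly closed gate, a nearly open gate, a small transform.
kept = gated_update(h_prev=0.8, candidate=-0.3, gate_logit=-10.0)
overwritten = gated_update(h_prev=0.8, candidate=-0.3, gate_logit=10.0)
out = residual_block(2.0, lambda v: 0.1 * v)
```

In both cases there is a path along which the signal passes through nearly unchanged, which is what keeps gradients from shrinking at every step or layer.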

Choosing the Right Neural Network for Your Task

Convolutional Neural Networks (CNNs) excel at processing spatial data such as images by capturing local patterns through convolutional layers, making them ideal for image recognition and classification tasks. Recurrent Neural Networks (RNNs), including variants like LSTM and GRU, are designed to handle sequential data by maintaining temporal dependencies, which suits tasks such as natural language processing and time series analysis. Selecting the right neural network depends on the data structure and task requirements: choose CNNs for spatial feature extraction and RNNs for modeling temporal or sequential information.

Future Trends in Neural Network Development

Convolutional Neural Networks (CNNs) are evolving with advancements in spatial feature extraction, enabling improved performance in image recognition and video analysis. Recurrent Neural Networks (RNNs) advance through innovations like gated architectures and attention mechanisms, enhancing sequential data processing in natural language understanding and time-series prediction. Future trends in neural network development emphasize hybrid models combining CNNs and RNNs to leverage both spatial and temporal data, driving breakthroughs in applications such as autonomous systems and real-time analytics. Emerging research in neural architecture search (NAS) and efficient training algorithms promises to optimize model scalability and adaptability across diverse AI tasks.
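
One common way to picture a hybrid model is a convolutional stage that summarizes each frame, followed by a recurrent stage that tracks those summaries over time. The toy below is a sketch under that assumption, using one-dimensional "frames" and scalar weights; `conv_feature`, `hybrid_forward`, and the sample data are hypothetical and not drawn from any specific published architecture.

```python
import math

def conv_feature(frame, kernel):
    """Spatial stage: slide a filter over one frame and keep the strongest response."""
    k = len(kernel)
    responses = [
        sum(frame[i + j] * kernel[j] for j in range(k))
        for i in range(len(frame) - k + 1)
    ]
    return max(responses)  # Global max pooling over positions.

def hybrid_forward(frames, kernel, w_in, w_rec):
    """Temporal stage: feed each frame's feature into a scalar recurrent state."""
    h = 0.0
    for frame in frames:
        h = math.tanh(w_in * conv_feature(frame, kernel) + w_rec * h)
    return h

# Made-up sequence of three tiny frames in which an edge moves left and exits.
frames = [[0, 0, 1, 1], [0, 1, 1, 0], [1, 1, 0, 0]]
summary = hybrid_forward(frames, kernel=[-1, 1], w_in=1.0, w_rec=0.5)
```

The final state depends on both what the filter detected in each frame and the order in which the frames arrived, which is the combination of spatial and temporal information that hybrid models aim for.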

Convolutional Neural Network (CNN) vs Recurrent Neural Network (RNN) Infographic

techiny.com
