A Markov Process models state transitions with probabilistic outcomes based solely on the current state, lacking any control or decision-making actions. In contrast, a Markov Decision Process (MDP) incorporates actions that influence state transitions, optimizing decisions to maximize cumulative rewards over time. MDPs are fundamental for reinforcement learning, enabling agents to learn optimal policies in uncertain environments.

Table of Comparison

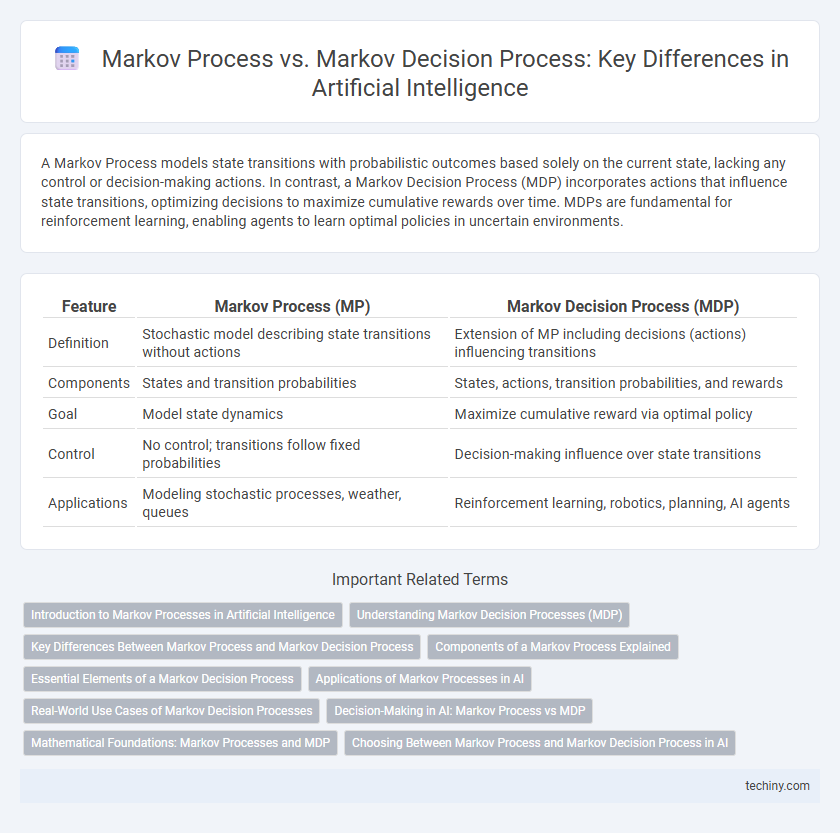

| Feature | Markov Process (MP) | Markov Decision Process (MDP) |

|---|---|---|

| Definition | Stochastic model describing state transitions without actions | Extension of MP including decisions (actions) influencing transitions |

| Components | States and transition probabilities | States, actions, transition probabilities, and rewards |

| Goal | Model state dynamics | Maximize cumulative reward via optimal policy |

| Control | No control; transitions follow fixed probabilities | Decision-making influence over state transitions |

| Applications | Modeling stochastic processes, weather, queues | Reinforcement learning, robotics, planning, AI agents |

Introduction to Markov Processes in Artificial Intelligence

Markov Processes model stochastic systems where future states depend solely on the current state, making them essential for probabilistic reasoning in Artificial Intelligence. These processes underpin algorithms in areas like speech recognition, natural language processing, and robotic navigation by providing a mathematical framework for predicting state transitions. Markov Decision Processes extend this concept by incorporating actions and rewards, enabling AI agents to make optimal decisions under uncertainty.

Understanding Markov Decision Processes (MDP)

Markov Decision Processes (MDPs) extend Markov Processes by incorporating actions and rewards, enabling decision-making in stochastic environments. MDPs model sequential decision problems through states, actions, transition probabilities, and reward functions, optimizing policies for long-term objectives. Understanding MDPs is essential for reinforcement learning, as they provide a mathematical framework for agents to learn optimal strategies in uncertain, dynamic settings.

Key Differences Between Markov Process and Markov Decision Process

Markov Process (MP) models systems where outcomes are solely determined by current states and transition probabilities without control actions, while Markov Decision Process (MDP) incorporates decision-making by including actions that influence transitions and rewards. In MDPs, agents optimize policies based on reward functions to maximize expected returns, contrasting MPs which lack policy-driven decision frameworks. The critical difference lies in MDPs' explicit inclusion of actions and rewards, enabling strategic planning versus MPs' passive predictive modeling.

Components of a Markov Process Explained

A Markov Process consists of states, transition probabilities, and a Markov property that ensures the future state depends only on the current state, not the prior history. States represent the possible conditions of the system, while transition probabilities define the likelihood of moving from one state to another. This framework provides a foundation for modeling stochastic processes without decision-making elements, distinguishing it from the Markov Decision Process (MDP) by the absence of actions and rewards.

Essential Elements of a Markov Decision Process

A Markov Decision Process (MDP) extends a Markov Process by incorporating actions, rewards, and policies, essential for modeling decision-making in stochastic environments. Key elements include a set of states, a set of possible actions per state, transition probabilities that capture the dynamics of moving between states given an action, and a reward function that quantifies the immediate benefit of state-action pairs. The policy, a mapping from states to actions, guides decision-making to maximize cumulative rewards over time, distinguishing MDPs from simpler Markov Processes without control inputs.

Applications of Markov Processes in AI

Markov Processes are widely applied in AI for modeling stochastic systems where outcomes depend solely on the current state, such as in natural language processing for sequence prediction and speech recognition. Reinforcement learning algorithms leverage Markov Decision Processes (MDPs) to optimize decision-making policies in dynamic environments by incorporating actions and rewards. In contrast, pure Markov Processes are primarily used for probabilistic state transitions without control inputs, useful in areas like hidden Markov models for pattern recognition and time series analysis.

Real-World Use Cases of Markov Decision Processes

Markov Decision Processes (MDPs) are widely used in robotics for path planning and autonomous navigation, enabling systems to make optimal decisions under uncertainty. In finance, MDPs assist in portfolio optimization by modeling sequential decisions and probabilistic outcomes to maximize returns. Additionally, healthcare applications leverage MDPs for personalized treatment planning, optimizing patient outcomes through adaptive decision-making frameworks.

Decision-Making in AI: Markov Process vs MDP

Markov Processes (MP) model stochastic state transitions without decision-making elements, essential for predicting future states in AI. Markov Decision Processes (MDP) extend MPs by incorporating actions and rewards, enabling AI systems to make optimal decisions under uncertainty. MDPs are fundamental for reinforcement learning, where agents learn policies that maximize cumulative rewards through sequential decision-making.

Mathematical Foundations: Markov Processes and MDP

Markov Processes are mathematical frameworks characterized by state transitions with probabilities depending solely on the current state, enabling the modeling of stochastic systems without decision-making elements. Markov Decision Processes (MDPs) extend this framework by incorporating actions and rewards, allowing for optimal decision policies in stochastic environments through the Bellman equation. The mathematical foundations of MDPs rely on value functions and transition dynamics that balance immediate rewards and future state values to determine policy strategies.

Choosing Between Markov Process and Markov Decision Process in AI

Choosing between a Markov Process and a Markov Decision Process in AI depends on the presence of decision-making elements and control over state transitions. Markov Processes model stochastic state transitions without actions, suitable for passive environment modeling. In contrast, Markov Decision Processes incorporate actions and rewards, enabling AI systems to optimize decision policies under uncertainty for tasks like reinforcement learning.

Markov Process vs Markov Decision Process Infographic

techiny.com

techiny.com