Natural Language Inference (NLI) and Textual Entailment both involve determining the relationship between a premise and a hypothesis, but NLI encompasses a broader range of inference types including contradiction and neutrality, while Textual Entailment specifically focuses on whether the hypothesis logically follows from the premise. Advances in deep learning have enhanced the accuracy of models distinguishing entailment from neutral or contradictory statements, improving applications such as question answering and information retrieval. Understanding the subtle differences between NLI and Textual Entailment is crucial for developing more sophisticated AI systems capable of nuanced language understanding.

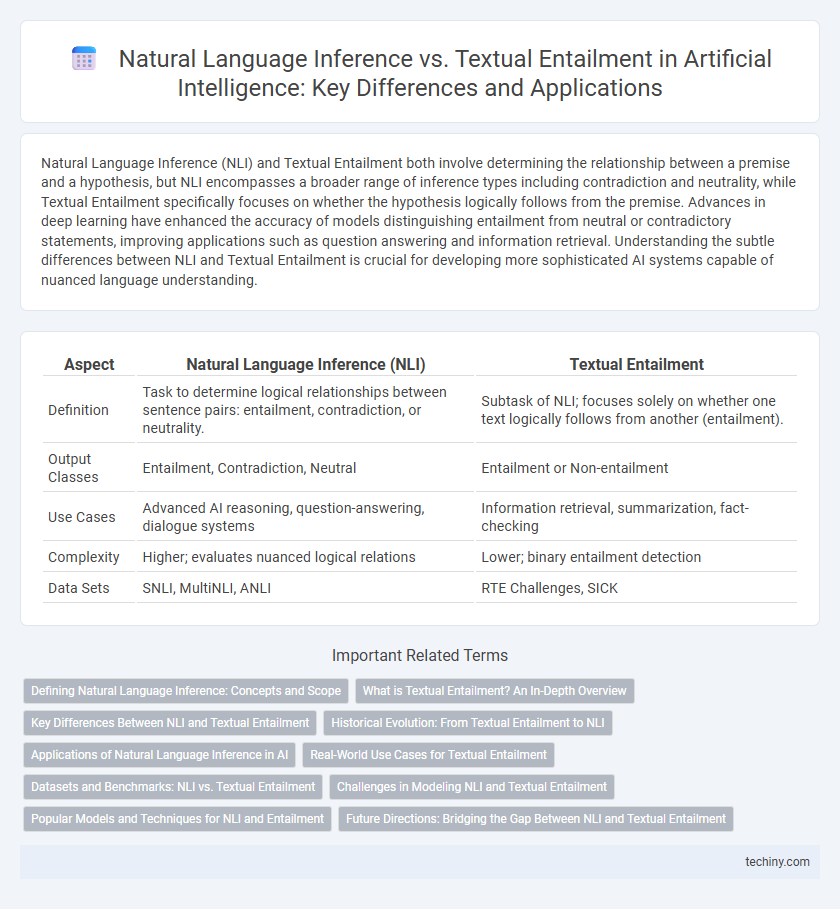

Table of Comparison

| Aspect | Natural Language Inference (NLI) | Textual Entailment |

|---|---|---|

| Definition | Task to determine logical relationships between sentence pairs: entailment, contradiction, or neutrality. | Subtask of NLI; focuses solely on whether one text logically follows from another (entailment). |

| Output Classes | Entailment, Contradiction, Neutral | Entailment or Non-entailment |

| Use Cases | Advanced AI reasoning, question-answering, dialogue systems | Information retrieval, summarization, fact-checking |

| Complexity | Higher; evaluates nuanced logical relations | Lower; binary entailment detection |

| Data Sets | SNLI, MultiNLI, ANLI | RTE Challenges, SICK |

Defining Natural Language Inference: Concepts and Scope

Natural Language Inference (NLI) involves determining the logical relationship between a premise and a hypothesis, categorizing outcomes as entailment, contradiction, or neutrality. NLI expands the scope of Textual Entailment by encompassing a broader range of semantic relationships and reasoning patterns beyond straightforward entailment recognition. Its applications include enhancing question answering, information retrieval, and machine reading comprehension, making it a critical task in understanding and modeling human language semantics.

What is Textual Entailment? An In-Depth Overview

Textual entailment is a fundamental task in natural language processing where the goal is to determine if a given text logically follows or is implied by another text, often referred to as the premise and hypothesis. It involves understanding semantic relationships and inferential reasoning between sentences to assess whether the hypothesis can be inferred from the premise with high confidence. This concept plays a critical role in applications such as question answering, information retrieval, and machine comprehension by enabling machines to infer implicit knowledge from explicit statements.

Key Differences Between NLI and Textual Entailment

Natural Language Inference (NLI) encompasses a broader task of determining the logical relationship between a premise and a hypothesis, categorizing outcomes into entailment, contradiction, or neutrality, whereas Textual Entailment specifically focuses on identifying whether the hypothesis can be logically inferred from the premise. NLI models are designed to handle multiple inference classes and often require deeper reasoning and context understanding beyond simple entailment detection. Textual Entailment serves as a foundational component within the NLI framework, typically representing one class of inference among others in more complex semantic evaluations.

Historical Evolution: From Textual Entailment to NLI

Textual Entailment emerged in the early 2000s as a key task in Natural Language Processing, focusing on determining if one text logically follows from another. Natural Language Inference (NLI) expanded on this foundation by introducing more nuanced reasoning capabilities and diverse linguistic phenomena, evolving into a standard benchmark for understanding language relationships. The progression from Textual Entailment to NLI reflects advancements in machine learning and datasets, enabling models to capture deeper semantic inference beyond binary entailment judgments.

Applications of Natural Language Inference in AI

Natural Language Inference (NLI) powers AI applications by enabling machines to understand relationships between sentences, such as entailment, contradiction, or neutrality. NLI enhances question answering, conversational agents, and information retrieval by improving context comprehension and reasoning. These capabilities allow AI systems to perform complex language understanding tasks crucial for natural language processing advancements.

Real-World Use Cases for Textual Entailment

Textual entailment plays a crucial role in real-world applications such as automated customer support, where systems verify if user queries imply certain responses, enhancing accuracy and efficiency. In information retrieval, entailment helps determine whether retrieved documents logically support a given hypothesis or question, improving relevance. Textual entailment also aids in plagiarism detection by checking if one text semantically follows from another, ensuring content originality and integrity.

Datasets and Benchmarks: NLI vs. Textual Entailment

Natural Language Inference (NLI) datasets like SNLI and MultiNLI are designed to test models on hypothesis-premise pairs with rich linguistic phenomena and varied domains, emphasizing reasoning about entailment, contradiction, and neutrality. Textual Entailment benchmarks, such as RTE and the GLUE benchmark, focus more narrowly on determining if a hypothesis logically follows from a premise, offering smaller but more diverse datasets that challenge models on linguistic variability and real-world texts. Both types of datasets drive advancements in AI understanding by providing comprehensive evaluation frameworks for semantic inference, yet NLI datasets typically encompass broader inferential categories and larger-scale data.

Challenges in Modeling NLI and Textual Entailment

Modeling Natural Language Inference (NLI) and Textual Entailment faces challenges in capturing nuanced semantic relationships, such as implicit knowledge and linguistic variability. Ambiguities inherent in human language, including polysemy and context-dependent meanings, complicate accurate inference generation. Effective models require advanced contextual embeddings and reasoning capabilities to handle complex inference tasks and reduce errors in entailment detection.

Popular Models and Techniques for NLI and Entailment

Popular models for Natural Language Inference (NLI) and Textual Entailment include transformer-based architectures such as BERT, RoBERTa, and DeBERTa, which leverage pre-training on large corpora followed by fine-tuning on specific NLI datasets like SNLI and MNLI. Techniques often incorporate attention mechanisms and sentence encoding strategies to capture semantic relationships and infer entailment, contradiction, or neutrality between text pairs. Recent advancements employ multi-task learning and contrastive learning approaches to enhance model robustness and generalization in diverse inference scenarios.

Future Directions: Bridging the Gap Between NLI and Textual Entailment

Future directions in Artificial Intelligence emphasize bridging the gap between Natural Language Inference (NLI) and Textual Entailment by developing unified models that leverage deep contextual embeddings such as BERT and GPT. Advances in transfer learning and cross-lingual understanding aim to enhance model generalization across diverse datasets and languages. Integrating commonsense reasoning and multimodal data promises to improve inference accuracy, enabling AI systems to better comprehend nuanced semantic relationships.

Natural Language Inference vs Textual Entailment Infographic

techiny.com

techiny.com