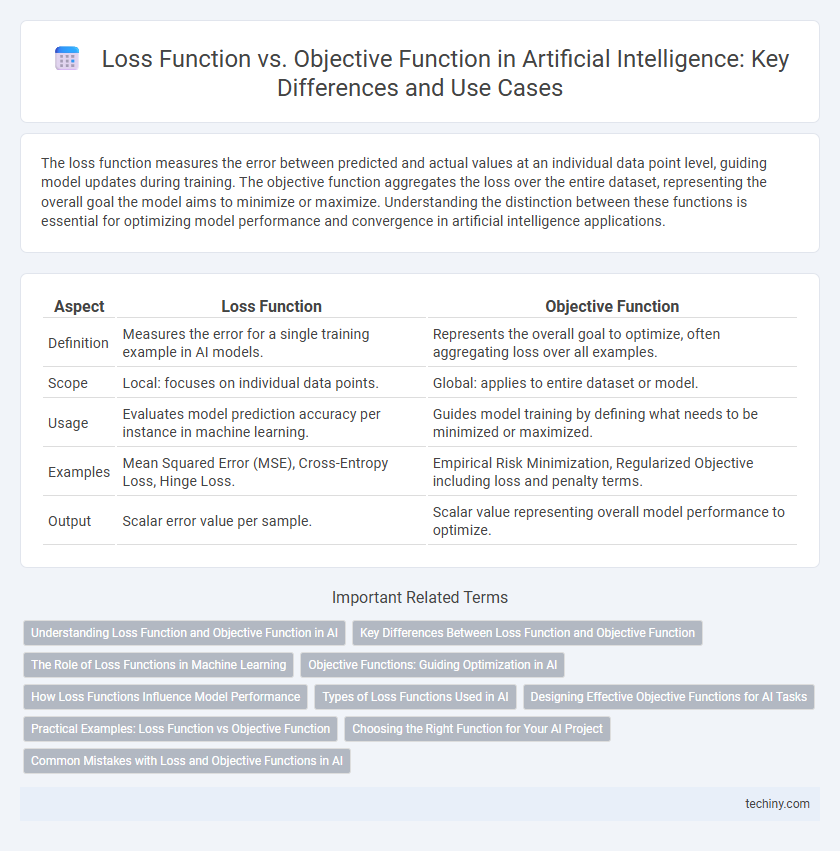

The loss function measures the error between the predicted and actual value for an individual data point, and its gradient drives model updates during training. The objective function is the quantity the training algorithm actually minimizes or maximizes; it typically aggregates the loss over the entire dataset and may add further terms such as regularization. Keeping the two apart makes it easier to reason about what a model is being trained to do and why it converges, or fails to.

Table of Comparison

| Aspect | Loss Function | Objective Function |
|---|---|---|
| Definition | Measures the error for a single training example in AI models. | Represents the overall goal to optimize, often aggregating loss over all examples. |
| Scope | Local: focuses on individual data points. | Global: applies to entire dataset or model. |
| Usage | Evaluates model prediction accuracy per instance in machine learning. | Guides model training by defining what needs to be minimized or maximized. |
| Examples | Mean Squared Error (MSE), Cross-Entropy Loss, Hinge Loss. | Empirical Risk Minimization, Regularized Objective including loss and penalty terms. |
| Output | Scalar error value per sample. | Scalar value representing overall model performance to optimize. |

Understanding Loss Function and Objective Function in AI

Loss functions in AI quantify the difference between predicted outputs and actual targets, serving as a measure of model error during training. Objective functions represent the overall goal optimized by algorithms, often encompassing loss functions along with regularization terms to guide model improvements. Understanding how loss functions contribute to minimizing errors within the broader objective function framework is crucial for developing effective machine learning models.
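One common way to write this relationship is sketched below. The notation is an assumption introduced here for illustration, not taken from the article: \ell is the per-sample loss, f_\theta the model with parameters \theta, N the number of training examples, R a regularization term, and \lambda \ge 0 its weight.

```latex
J(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell\bigl(f_\theta(x_i),\, y_i\bigr) + \lambda\, R(\theta)
```

With \lambda = 0 the objective reduces to the average loss over the dataset.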

Key Differences Between Loss Function and Objective Function

Loss functions measure the error between predicted and actual values on individual data points. Objective functions represent the overall goal of optimization: they aggregate the loss across the entire dataset and often add regularization terms to improve generalization. The two differ in granularity and in role. A loss function scores a single sample, while the objective function is the single scalar that the optimization algorithm minimizes when searching for model parameters. In everyday usage the terms are often used interchangeably, so it is worth checking which meaning a given text intends.
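The difference in granularity can be illustrated with a minimal NumPy sketch. The function names, the toy data, and the choice of an L2 penalty with weight `lam` are assumptions made for this example only.

```python
import numpy as np

def squared_error(y_pred, y_true):
    """Per-sample loss: returns one value for each data point."""
    return (y_pred - y_true) ** 2

def objective(weights, X, y, lam=0.1):
    """Mean per-sample loss plus an L2 penalty on the weights (assumed form)."""
    per_sample = squared_error(X @ weights, y)
    return per_sample.mean() + lam * np.sum(weights ** 2)

# Toy data: three samples, two features (illustrative values only).
X = np.array([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]])
y = np.array([5.0, 3.0, 4.0])
w = np.array([1.0, 2.0])

losses = squared_error(X @ w, y)  # array with one entry per sample
total = objective(w, X, y)        # single scalar for the whole dataset
```

Here `losses` has one entry per sample, whereas `total` is the one number an optimizer would try to reduce.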

The Role of Loss Functions in Machine Learning

Loss functions play a critical role in machine learning by quantifying the error between predicted outputs and actual target values, guiding the model's optimization process. They serve as a specific measure of model performance, enabling algorithms to minimize prediction errors through gradient descent or other optimization techniques. Objective functions often encompass loss functions but may also include regularization terms to improve model generalization and prevent overfitting.
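As a rough sketch of how a loss guides optimization, the following runs plain gradient descent on the mean squared error of a linear model. The learning rate, step count, and synthetic noiseless data are assumptions chosen so the example converges quickly; they are not recommendations.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return np.mean((X @ w - y) ** 2)

def mse_gradient(w, X, y):
    """Gradient of mean((Xw - y)^2) with respect to w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)

def gradient_descent(X, y, lr=0.1, steps=200):
    """Repeatedly step against the gradient, recording the loss at each step."""
    w = np.zeros(X.shape[1])
    history = []
    for _ in range(steps):
        history.append(mse(w, X, y))
        w = w - lr * mse_gradient(w, X, y)
    return w, history

# Synthetic, noiseless data generated from known weights (assumed for the demo).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 2))
true_w = np.array([2.0, -1.0])
y = X @ true_w

w_fit, history = gradient_descent(X, y)
```

The recorded `history` should fall as training proceeds, and `w_fit` should end up close to `true_w` because the targets contain no noise.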

Objective Functions: Guiding Optimization in AI

Objective functions serve as the core guiding metric in AI optimization, directing algorithms toward desired outcomes by quantifying how well a model performs given specific parameters. Distinct from loss functions, which typically measure prediction errors on training data, objective functions encompass broader optimization goals, including constraints and performance metrics tailored to the task. Effective design of objective functions significantly influences convergence speed and model accuracy in machine learning and deep learning applications.

How Loss Functions Influence Model Performance

Loss functions quantify the error between predicted outputs and actual targets, and their gradients determine the model's parameter updates during training. Selecting an appropriate loss function, such as mean squared error for regression or cross-entropy for classification, can significantly affect convergence speed and accuracy. A loss function that is poorly matched to the task can lead to suboptimal model performance and longer training time.

Types of Loss Functions Used in AI

Common loss functions in artificial intelligence include mean squared error (MSE) for regression tasks, cross-entropy loss for classification problems, and hinge loss, which is used in support vector machines. Each measures the discrepancy between predicted outputs and actual target values, and training proceeds by reducing that discrepancy. The choice of loss function affects model performance, convergence speed, and generalization ability.
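The three losses named above can be sketched in a few lines of NumPy. These are simplified versions written for illustration: the cross-entropy here is the binary form with labels in {0, 1}, the hinge loss assumes labels in {-1, +1}, and the `eps` clipping value is an arbitrary choice to avoid taking the logarithm of zero.

```python
import numpy as np

def mean_squared_error(y_pred, y_true):
    """Average squared difference, typical for regression."""
    return np.mean((y_pred - y_true) ** 2)

def binary_cross_entropy(p_pred, y_true, eps=1e-12):
    """Binary cross-entropy; p_pred is the predicted probability of class 1."""
    p = np.clip(p_pred, eps, 1.0 - eps)  # keep log() finite
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))

def hinge_loss(scores, y_true):
    """Hinge loss; y_true in {-1, +1}, scores are raw (unsquashed) outputs."""
    return np.mean(np.maximum(0.0, 1.0 - y_true * scores))

mse_value = mean_squared_error(np.array([1.0, 2.0]), np.array([1.0, 4.0]))
bce_value = binary_cross_entropy(np.array([0.5, 0.5]), np.array([1.0, 0.0]))
hinge_value = hinge_loss(np.array([2.0, -0.5]), np.array([1.0, -1.0]))
```

Each function averages over the samples it is given, so passing a single sample yields that sample's individual loss.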

Designing Effective Objective Functions for AI Tasks

Designing effective objective functions for AI tasks starts from the relationship between the two concepts: loss functions measure prediction errors on individual data points, and objective functions aggregate these losses to guide model optimization. Objective functions often include regularization terms to prevent overfitting and improve generalization, which makes them important for robust model performance. Tailoring the objective function to the task, such as classification or reinforcement learning, affects convergence speed and accuracy, and in some cases (for example, sparsity-inducing penalties) how easy the resulting model is to interpret.

Practical Examples: Loss Function vs Objective Function

Loss functions quantify the difference between predicted and actual values in machine learning models, with mean squared error (MSE) being a common example for regression tasks. Objective functions represent the overall goal of optimization, often combining the loss function with regularization terms to balance model accuracy and complexity; for instance, the regularized least squares objective minimizes the sum of the MSE and a penalty on the size of the weights. In practice, a model trained on the regularized objective usually ends up with a slightly higher training loss than one trained on the loss alone, in exchange for simpler parameters that tend to generalize better.
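The regularized least squares case can be sketched as follows. The closed-form solver, the penalty weight `lam`, and the synthetic data are assumptions for illustration; the objective is taken to be the MSE plus `lam` times the squared L2 norm of the weights.

```python
import numpy as np

def mse(w, X, y):
    """Data loss only: mean squared error."""
    return np.mean((X @ w - y) ** 2)

def ridge_objective(w, X, y, lam):
    """Objective: data loss plus an L2 penalty on the weights."""
    return mse(w, X, y) + lam * np.sum(w ** 2)

def fit_ridge(X, y, lam):
    """Closed-form minimiser of ridge_objective (lam=0 gives least squares)."""
    n, d = X.shape
    return np.linalg.solve(X.T @ X / n + lam * np.eye(d), X.T @ y / n)

# Synthetic regression data with a little noise (illustrative only).
rng = np.random.default_rng(1)
X = rng.normal(size=(30, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=30)

w_plain = fit_ridge(X, y, lam=0.0)  # minimises the loss alone
w_ridge = fit_ridge(X, y, lam=1.0)  # minimises loss + penalty
```

Comparing `mse(w_plain, X, y)` with `mse(w_ridge, X, y)` shows that the regularized fit gives up some training loss, while `np.linalg.norm(w_ridge)` is smaller than `np.linalg.norm(w_plain)`.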

Choosing the Right Function for Your AI Project

Selecting an appropriate loss function is crucial for training neural networks because it quantifies the difference between predicted and actual outcomes and so determines what the optimizer corrects. The objective function encompasses the loss function and may also include regularization terms that encourage generalization and reduce overfitting. Tailoring the loss and objective functions to the problem type, such as classification or regression, improves model performance and keeps training aligned with project goals.

Common Mistakes with Loss and Objective Functions in AI

Confusing loss functions with objective functions can lead to optimization errors, because loss functions measure model error on individual examples while objective functions aggregate those losses, plus any penalty terms, into the quantity used for parameter updates. A typical slip is to monitor only the data loss while the optimizer is actually minimizing a regularized objective, or the reverse. Selecting an ill-suited loss function, such as mean squared error for a classification task, can also degrade model performance: with a sigmoid output, for example, it produces very small gradients for confidently wrong predictions, which slows convergence.
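The effect of pairing mean squared error with a classifier can be seen in the gradients. The sketch below assumes a single sigmoid output and compares the derivative of each loss with respect to the pre-activation value (`z`, a name introduced here) for a confidently wrong prediction.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_mse_wrt_logit(z, y):
    """d/dz of (sigmoid(z) - y)^2; includes the sigmoid's own derivative."""
    p = sigmoid(z)
    return 2.0 * (p - y) * p * (1.0 - p)

def grad_cross_entropy_wrt_logit(z, y):
    """d/dz of binary cross-entropy with a sigmoid output; simplifies to p - y."""
    return sigmoid(z) - y

# True label is 1, but the model is confident the answer is 0.
z, y = -10.0, 1.0
g_mse = grad_mse_wrt_logit(z, y)
g_ce = grad_cross_entropy_wrt_logit(z, y)
```

In this setting `g_mse` is close to zero because the sigmoid is saturated, while `g_ce` stays close to -1, so cross-entropy still provides a strong corrective signal.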

Loss Function vs Objective Function Infographic

techiny.com