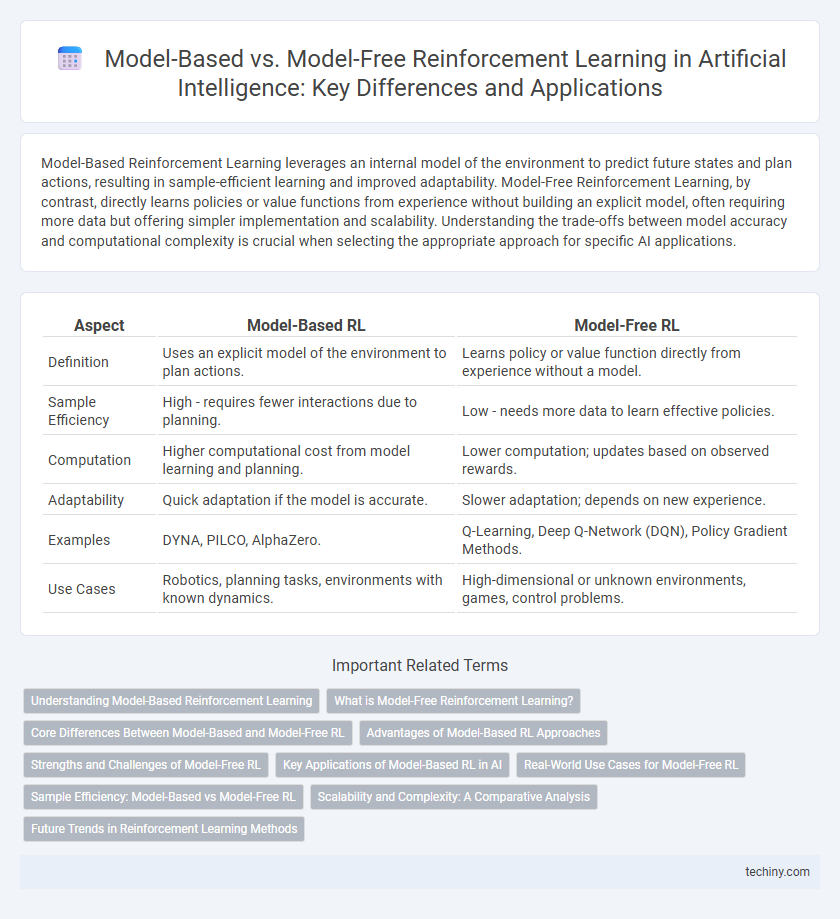

Model-Based Reinforcement Learning uses an internal model of the environment to predict future states and plan actions, which often makes learning more sample-efficient and adaptable. Model-Free Reinforcement Learning, by contrast, learns policies or value functions directly from experience without building an explicit model; it usually needs more data but is simpler to implement and scales more easily. Choosing between them for a specific AI application comes down to the trade-off between model accuracy and computational complexity.

Table of Comparison

| Aspect | Model-Based RL | Model-Free RL |
|---|---|---|
| Definition | Uses an explicit model of the environment to plan actions. | Learns a policy or value function directly from experience, without a model. |
| Sample Efficiency | High: planning with the model reduces the number of real interactions needed. | Low: needs more data to learn effective policies. |
| Computation | Higher, because the model must be learned and then used for planning. | Lower per step; updates use only observed transitions and rewards. |
| Adaptability | Adapts quickly when the model is accurate. | Adapts more slowly; depends on collecting new experience. |
| Examples | Dyna, PILCO, AlphaZero. | Q-Learning, Deep Q-Network (DQN), Policy Gradient Methods. |
| Use Cases | Robotics, planning tasks, environments with known dynamics. | High-dimensional or unknown environments, games, control problems. |

Understanding Model-Based Reinforcement Learning

Model-Based Reinforcement Learning (MB-RL) speeds up policy optimization by using a learned or given model of the environment's dynamics to simulate future states and rewards. This contrasts with Model-Free Reinforcement Learning, which estimates value functions or policies directly through trial-and-error interaction, without an explicit model of the environment. The main appeal of MB-RL is lower sample complexity and more efficient planning: the agent predicts the outcomes of candidate actions and adjusts its strategy before executing anything in the real environment.
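To make the simulate-then-plan idea concrete, here is a minimal Python sketch under stated assumptions: a hypothetical 5-state chain environment (`env_step`, `GOAL`, and every other name here is illustrative, not from the article), a tabular model fitted from randomly probed transitions, and value iteration run entirely against that learned model.

```python
import random

# Hypothetical toy environment (not from the article): a 5-state chain.
# States 0..4, state 4 is terminal. Action 0 moves left, action 1 moves right.
N_STATES, GOAL, GAMMA = 5, 4, 0.9

def env_step(state, action):
    """True dynamics; the planner below never calls this directly."""
    nxt = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return reward, nxt

def learn_model(num_samples=200, seed=0):
    """Fit a tabular model from randomly probed transitions.
    The chain is deterministic, so remembering the last outcome is enough."""
    rng = random.Random(seed)
    model = {}
    for _ in range(num_samples):
        s, a = rng.randrange(GOAL), rng.randrange(2)
        model[(s, a)] = env_step(s, a)
    return model

def plan_with_model(model, iterations=100):
    """Value iteration that queries only the learned model."""
    V = [0.0] * N_STATES  # V[GOAL] stays 0 because the episode ends there

    def q(s, a):
        r, s2 = model[(s, a)]
        return r + GAMMA * V[s2]

    for _ in range(iterations):
        for s in range(GOAL):
            V[s] = max(q(s, a) for a in range(2))
    # Greedy policy with respect to the planned values.
    return [max(range(2), key=lambda a: q(s, a)) for s in range(GOAL)]

model = learn_model()
policy = plan_with_model(model)
print(policy)
```

Once the model covers every state-action pair, planning needs no further environment calls. For a stochastic environment the model would have to store outcome frequencies rather than a single remembered transition.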

What is Model-Free Reinforcement Learning?

Model-Free Reinforcement Learning (RL) is a type of RL in which an agent learns a policy directly from interaction with the environment, without building an explicit model of the environment's dynamics. It relies on value functions or direct policy approximations, estimated from past experience, to predict expected returns (cumulative discounted rewards) and to make decisions under uncertainty. Q-Learning and Policy Gradient methods are typical model-free techniques, and they are well suited to environments with complex or unknown dynamics.
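As a minimal sketch of the model-free idea, assuming a hypothetical 5-state chain (all names and hyperparameters are illustrative), tabular Q-learning keeps only a table of action values and updates it from sampled transitions; no transition model is ever built.

```python
import random

# Hypothetical toy task (not from the article): a 5-state chain where
# action 0 moves left, action 1 moves right, and reaching state 4 pays 1.
GOAL, GAMMA = 4, 0.9

def env_step(state, action):
    nxt = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    return (1.0 if nxt == GOAL else 0.0), nxt, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, epsilon=0.2, max_steps=100, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # Q[s][a]; no model is stored
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            # Epsilon-greedy behaviour with random tie-breaking.
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            r, s2, done = env_step(s, a)
            # Temporal-difference target: bootstrap from max Q unless terminal.
            target = r if done else r + GAMMA * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
            if done:
                break
    return Q

Q = q_learning()
greedy = [0 if Q[s][0] > Q[s][1] else 1 for s in range(GOAL)]
print(greedy)
```

The agent only ever sees `(reward, next_state, done)` tuples returned by the environment; everything it knows about the dynamics is folded implicitly into `Q`.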

Core Differences Between Model-Based and Model-Free RL

Model-Based Reinforcement Learning (RL) relies on an explicit model of the environment's dynamics to predict future states and rewards, which enables planning and usually more sample-efficient learning. Model-Free RL, in contrast, learns policies or value functions directly from interaction without constructing an environment model; it often requires more data but is simpler to implement and is not affected by errors in a learned model. The core differences are whether an environment model is used, how sample-efficient learning is, and how computational cost is traded against adaptability.

Advantages of Model-Based RL Approaches

Model-Based Reinforcement Learning (RL) offers several advantages. The main one is higher sample efficiency: a learned model of the environment lets the agent plan and predict future states, reducing the need for extensive trial-and-error interaction. Because the agent can simulate many scenarios internally, a good model can also improve adaptability and generalization across related tasks. Finally, an explicit model makes it possible to inspect predicted outcomes before acting, which aids interpretability, allows more precise policy adjustments, and supports safer decision-making in complex, dynamic environments. All of these benefits depend on the model being accurate.
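One way to picture simulating multiple scenarios internally is the brute-force lookahead below, a hypothetical sketch that assumes the agent already has an accurate `model` of a toy chain: it scores every action sequence up to a fixed horizon in imagination and executes only the first action of the best one, replanning at the next step. Practical systems replace exhaustive enumeration with sampling methods (random shooting, the cross-entropy method) or tree search such as the Monte Carlo tree search used in AlphaZero.

```python
from itertools import product

# Hypothetical example: a 5-state chain whose dynamics the agent is assumed
# to know (a learned model would be used the same way).
# Action 0 = left, action 1 = right, state 4 = goal.
GOAL, GAMMA = 4, 0.9

def model(state, action):
    nxt = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    return (1.0 if nxt == GOAL else 0.0), nxt

def simulated_return(state, actions):
    """Roll an action sequence through the model and sum discounted rewards."""
    total, discount = 0.0, 1.0
    for a in actions:
        r, state = model(state, a)
        total += discount * r
        discount *= GAMMA
        if state == GOAL:  # episode ends; later actions earn nothing
            break
    return total

def plan(state, horizon=5):
    """Score every action sequence of length `horizon` in imagination and
    return the first action of the best one (receding-horizon control)."""
    best = max(product(range(2), repeat=horizon),
               key=lambda seq: simulated_return(state, seq))
    return best[0]

print([plan(s) for s in range(GOAL)])
```

Exhaustive search costs `2 ** horizon` model rollouts per decision, which is exactly the computational burden the comparison table attributes to model-based methods.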

Strengths and Challenges of Model-Free RL

Model-Free Reinforcement Learning works well in environments with high-dimensional state spaces where explicit modeling is impractical, because agents learn policies directly from interaction without needing the transition dynamics. Its main strengths are simplicity and scalability, which have made it effective in complex tasks such as game playing and robotic control. However, Model-Free RL suffers from sample inefficiency, slow convergence, and a tendency to overfit to its training conditions; these limit its performance when data is scarce and lead to long training times.
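The sketch below shows the policy-gradient side of model-free RL in its simplest form: REINFORCE on a hypothetical two-armed bandit (the bandit, `pull`, and the parameter values are assumptions for illustration). The agent never estimates how the environment works; it only nudges its policy parameters toward actions that returned reward. Each update uses a single sampled reward and no baseline, so in general the gradient estimates are noisy, which is one source of the sample inefficiency described above.

```python
import math
import random

# Hypothetical two-armed bandit (not from the article): arm 1 always pays 1,
# arm 0 always pays 0. The agent only sees sampled rewards.

def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def pull(arm):
    return 1.0 if arm == 1 else 0.0

def reinforce(steps=1000, lr=0.1, seed=0):
    rng = random.Random(seed)
    theta = [0.0, 0.0]  # action preferences (the policy parameters)
    for _ in range(steps):
        probs = softmax(theta)
        arm = 0 if rng.random() < probs[0] else 1  # sample from the policy
        reward = pull(arm)
        # Gradient of log softmax w.r.t. theta: one_hot(arm) - probs.
        for a in range(2):
            grad_log = (1.0 if a == arm else 0.0) - probs[a]
            theta[a] += lr * reward * grad_log
    return softmax(theta)

print(reinforce())
```

Subtracting a baseline (for example a running average of rewards) from `reward` is the usual way to reduce this variance without biasing the gradient.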

Key Applications of Model-Based RL in AI

Model-Based Reinforcement Learning (MBRL) is well suited to robotics, where predictive models of the system dynamics support efficient path planning and real-time adaptation to changing environments. In autonomous driving, MBRL can improve decision-making by simulating future states, which helps with safety and navigation under uncertainty. It has also been applied in healthcare AI, for example to optimize personalized treatment strategies by modeling how patients are likely to respond over time.

Real-World Use Cases for Model-Free RL

Model-Free Reinforcement Learning (RL) is widely used in applications such as robotics, autonomous driving, and game playing, where an accurate model of the environment is hard to build. Its ability to learn in complex, high-dimensional environments makes it a good fit for scenarios with unpredictable dynamics. Model-Free RL algorithms such as Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO) have achieved notable results by optimizing decisions solely from experience data.
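For a flavor of how an algorithm like PPO learns purely from experience data, the core of its policy update is the clipped surrogate objective. The function below is a minimal per-sample sketch in plain Python; a real implementation averages this over a batch, computes `ratio` from log-probabilities under the new and old policies, and differentiates it with an autodiff framework. The default `epsilon=0.2` is a commonly used clip range, assumed here.

```python
def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Per-sample PPO clipped surrogate (to be maximised).

    ratio     = pi_new(a|s) / pi_old(a|s)
    advantage = estimated advantage of taking action a in state s
    """
    # Keep the probability ratio inside [1 - epsilon, 1 + epsilon].
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    # Taking the minimum makes the objective a pessimistic bound, so the
    # policy gains nothing from moving far away from the old policy.
    return min(ratio * advantage, clipped_ratio * advantage)

print(ppo_clipped_objective(1.5, 2.0))   # clipped branch: 1.2 * 2.0
print(ppo_clipped_objective(0.5, -1.0))  # clipped branch: 0.8 * -1.0
```

The clipping is what lets PPO reuse each batch of experience for several gradient steps without the policy drifting too far in a single update.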

Sample Efficiency: Model-Based vs Model-Free RL

Model-Based Reinforcement Learning (RL) typically achieves higher sample efficiency because it uses an explicit environment model to predict future states and rewards, reducing the number of real-world interactions required. Model-Free RL, in contrast, learns only by trial and error from actual experience and usually needs significantly more samples to converge to a good policy. Model-Based RL is therefore preferred when data collection is expensive or time-consuming, provided the extra computation for learning and planning with the model is acceptable.
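Dyna-Q, from the Dyna family listed in the comparison table, shows where the sample savings come from. In this hedged sketch on a hypothetical 5-state chain (all names and hyperparameters are illustrative), every real interaction is reused three ways: it updates `Q` directly, it is stored in a learned model, and it seeds `planning_steps` extra Q-learning updates replayed from that model. Setting `planning_steps=0` reduces the loop to plain Q-learning, so comparing `real_steps` between the two settings is one simple way to observe the difference in sample efficiency.

```python
import random

# Hypothetical 5-state chain (not from the article): action 0 = left,
# action 1 = right; reaching state 4 pays 1 and ends the episode.
GOAL, GAMMA = 4, 0.9

def env_step(state, action):
    nxt = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    return (1.0 if nxt == GOAL else 0.0), nxt, nxt == GOAL

def dyna_q(episodes=50, planning_steps=20, alpha=0.5, epsilon=0.2,
           max_steps=100, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(GOAL + 1)]
    model = {}  # learned model: (s, a) -> (r, s', done), filled from real steps
    real_steps = 0

    def update(s, a, r, s2, done):
        # Same one-step Q-learning update for real and simulated transitions.
        target = r if done else r + GAMMA * max(Q[s2])
        Q[s][a] += alpha * (target - Q[s][a])

    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            if rng.random() < epsilon or Q[s][0] == Q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            r, s2, done = env_step(s, a)      # one real interaction
            real_steps += 1
            update(s, a, r, s2, done)         # direct RL from real experience
            model[(s, a)] = (r, s2, done)     # model learning
            for _ in range(planning_steps):   # planning: replay simulated transitions
                ps, pa = rng.choice(list(model))
                update(ps, pa, *model[(ps, pa)])
            s = s2
            if done:
                break
    return Q, real_steps

Q, real_steps = dyna_q()
print(real_steps, [round(v, 3) for v in Q[0]])
```

The planning loop costs extra computation per real step, which mirrors the trade-off described above: fewer environment interactions in exchange for more work inside the agent.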

Scalability and Complexity: A Comparative Analysis

Model-Based Reinforcement Learning (RL) reduces sample complexity relative to Model-Free RL by using explicit environment models to predict future states, but it scales less easily: learning, maintaining, and updating an accurate model adds computational cost and becomes harder in high-dimensional, dynamic environments. Model-Free RL, in contrast, scales more readily across diverse tasks with simpler architectures, but typically needs larger datasets and longer training times to reach comparable performance.

Future Trends in Reinforcement Learning Methods

Future trends in reinforcement learning emphasize hybrid approaches combining Model-Based RL's sample efficiency with Model-Free RL's robustness in complex environments. Advanced model architectures leveraging deep neural networks and meta-learning are expected to enhance predictive accuracy and policy adaptation in dynamic tasks. Research increasingly prioritizes explainability and safety mechanisms to address deployment challenges in real-world AI applications.

Model-Based RL vs Model-Free RL Infographic

techiny.com