Softmax activation transforms logits into a probability distribution across multiple classes, making it ideal for multi-class classification tasks. Sigmoid activation outputs values between 0 and 1 for each neuron independently, which suits binary classification or multi-label problems. Choosing between softmax and sigmoid depends on whether the model predicts exclusive classes or overlapping labels.

Table of Comparison

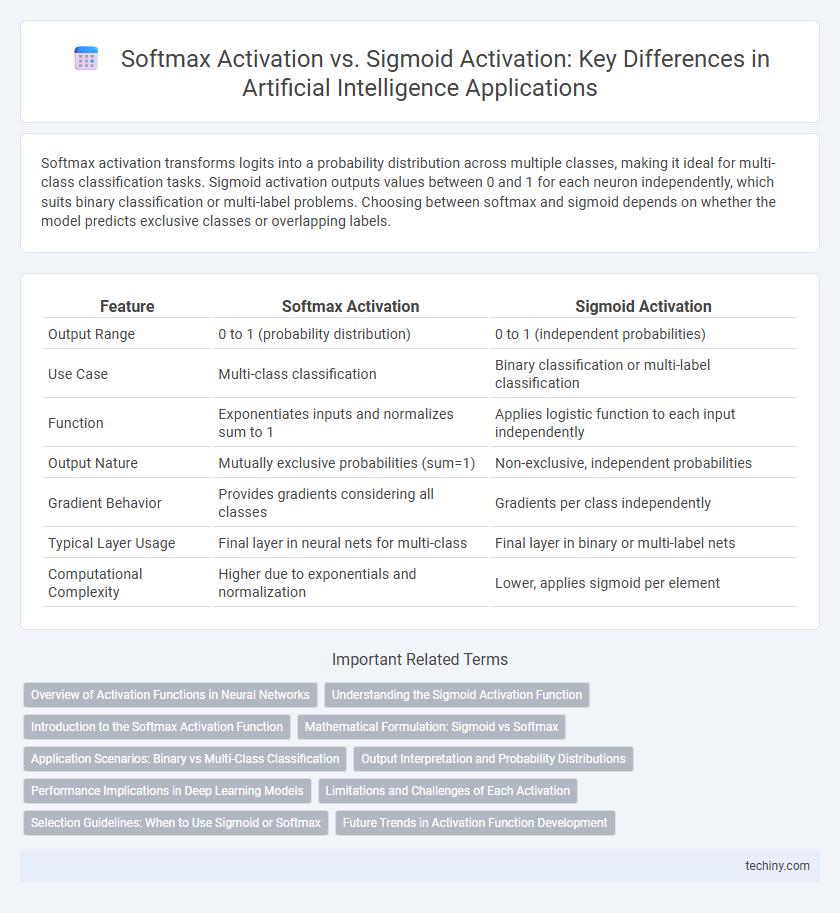

| Feature | Softmax Activation | Sigmoid Activation |
|---|---|---|
| Output Range | 0 to 1 (probability distribution) | 0 to 1 (independent probabilities) |
| Use Case | Multi-class classification | Binary classification or multi-label classification |
| Function | Exponentiates inputs and normalizes sum to 1 | Applies logistic function to each input independently |
| Output Nature | Mutually exclusive probabilities (sum=1) | Non-exclusive, independent probabilities |
| Gradient Behavior | Provides gradients considering all classes | Gradients per class independently |
| Typical Layer Usage | Final layer in neural nets for multi-class | Final layer in binary or multi-label nets |
| Computational Complexity | Higher due to exponentials and normalization | Lower, applies sigmoid per element |

Overview of Activation Functions in Neural Networks

Softmax activation is commonly used in multi-class classification tasks, where it converts logits into a probability distribution over the classes and ensures the outputs sum to one. Sigmoid activation maps each input value to the range between 0 and 1 independently of the others, making it suitable for binary classification and for multi-label problems in which each label gets its own probability. Understanding the distinct roles of softmax and sigmoid functions is crucial for matching the output layer to the task.

Understanding the Sigmoid Activation Function

The Sigmoid activation function maps input values into the range between 0 and 1, making it well suited to binary classification problems where the output represents a probability. It is defined mathematically as \( \sigma(x) = \frac{1}{1 + e^{-x}} \), providing smooth gradients that help neural networks learn through backpropagation. Despite its advantages, the Sigmoid function saturates for inputs of large magnitude, where its gradient approaches zero; this vanishing gradient problem is most severe in deep networks. For outputs over several mutually exclusive classes, Softmax is the usual alternative.
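The saturation behaviour described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names `sigmoid` and `sigmoid_grad` are chosen here for the example, and the derivative uses the standard identity \( \sigma'(x) = \sigma(x)(1 - \sigma(x)) \).

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, branching on the sign of x to avoid overflow in exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x is negative here, so exp(x) cannot overflow
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative of the logistic function: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# The gradient peaks at x = 0 and shrinks quickly as |x| grows (saturation).
for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid={sigmoid(x):.6f}  grad={sigmoid_grad(x):.6f}")
```

The gradient is largest at zero (0.25) and falls toward zero as the input moves away from it in either direction, which is the mechanism behind vanishing gradients when many such factors are multiplied during backpropagation.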

Introduction to the Softmax Activation Function

The Softmax activation function converts logits into probability distributions by exponentiating each input and normalizing the sum to one, making it ideal for multi-class classification tasks. Unlike the Sigmoid activation, which outputs values between 0 and 1 for binary classification, Softmax provides a vector of probabilities representing class membership across multiple classes. This function enhances decision-making in neural networks by enabling clear probabilistic interpretation of output layers.

Mathematical Formulation: Sigmoid vs Softmax

Sigmoid activation function mathematically transforms input \( z \) using the formula \( \sigma(z) = \frac{1}{1 + e^{-z}} \), mapping values to a range between 0 and 1, which is ideal for binary classification tasks. Softmax activation generalizes this by computing \( \text{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{j} e^{z_j}} \) for each component \( z_i \) in the input vector, converting logits into a probability distribution over multiple classes. The key difference lies in Softmax's normalization across all classes, making it suitable for multi-class classification, whereas Sigmoid operates independently on each class score.
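The two formulas can be written directly in Python to check the claim that Softmax generalizes Sigmoid. The sketch below is a plain transcription of the formulas (no numerical safeguards), with example logits picked arbitrarily for illustration. For two classes, the Softmax probability of the first class equals the Sigmoid of the difference between the two logits.

```python
import math


def sigmoid(z: float) -> float:
    """sigma(z) = 1 / (1 + e^(-z)); fine for moderate z."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: list[float]) -> list[float]:
    """Softmax(z_i) = e^(z_i) / sum_j e^(z_j), transcribed directly."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Three-class example: the outputs form a probability distribution.
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))

# Two-class example: softmax([a, b])[0] equals sigmoid(a - b).
a, b = 1.5, -0.5
two_class = softmax([a, b])
print(two_class[0], sigmoid(a - b))
```

The equivalence follows from dividing numerator and denominator of \( e^{a} / (e^{a} + e^{b}) \) by \( e^{a} \), which gives \( 1 / (1 + e^{-(a - b)}) \).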

Application Scenarios: Binary vs Multi-Class Classification

Softmax activation is ideal for multi-class classification problems as it outputs a probability distribution across multiple classes, ensuring the sum of probabilities equals one. Sigmoid activation, on the other hand, suits binary classification, where a single output gives the probability of the positive class, and multi-label classification, where it produces an independent probability for each label without enforcing mutually exclusive outcomes. In scenarios that require choosing exactly one of several classes, such as image recognition with numerous categories, softmax is the natural fit, whereas sigmoid is preferred for tasks like binary sentiment analysis or spam detection.

Output Interpretation and Probability Distributions

Softmax activation outputs a probability distribution across multiple classes, ensuring the sum of all probabilities equals one, making it ideal for multi-class classification problems. Sigmoid activation produces a probability-like output between 0 and 1 for each class independently, suitable for binary classification or multi-label tasks where classes are not mutually exclusive. Softmax's output interpretation allows for clear class prediction by selecting the highest probability, while sigmoid provides independent confidence scores for each class without normalization.
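The difference in interpretation can be illustrated by applying both functions to the same logits. In this sketch the class names, the logits, and the 0.5 decision threshold are all assumptions made for the example; the threshold in particular is a common default, not a requirement.

```python
import math

CLASSES = ["cat", "dog", "bird"]  # hypothetical labels for illustration
THRESHOLD = 0.5                   # assumed cut-off for multi-label decisions


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # shift by the maximum for numerical safety
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, -1.0]

# Softmax: one distribution over all classes, predict the most probable one.
softmax_probs = softmax(logits)
best = max(range(len(softmax_probs)), key=lambda i: softmax_probs[i])
predicted = CLASSES[best]

# Sigmoid: one independent score per class, keep every class above threshold.
sigmoid_probs = [sigmoid(z) for z in logits]
active = [c for c, p in zip(CLASSES, sigmoid_probs) if p >= THRESHOLD]

print("softmax:", softmax_probs, "->", predicted)
print("sigmoid:", sigmoid_probs, "->", active)
```

With these logits Softmax selects a single class, while Sigmoid marks two classes as present; the Sigmoid scores also sum to more than one, which is expected because they are not normalized against each other.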

Performance Implications in Deep Learning Models

The Softmax activation function suits multi-class classification tasks because it converts raw logits into a probability distribution over the classes, which makes the output easy to interpret. Paired with cross-entropy loss, it also yields a simple, well-behaved gradient at the output layer. Sigmoid activation, commonly used for binary classification, outputs values between 0 and 1 but saturates for inputs of large magnitude; when it is used in the hidden layers of deep networks, this causes vanishing gradients that slow learning. For mutually exclusive classes, softmax is the better match because the classes compete for a fixed amount of probability mass, while sigmoid remains effective for independent binary labels.
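The gradient behaviour can be made concrete under one assumption that the text above does not state: the output layer is trained with cross-entropy loss against a one-hot target \( y \). Writing \( p_k = \text{Softmax}(z_k) \) and \( \delta_{ki} \) for the Kronecker delta:

\[
L = -\sum_{k} y_k \log p_k, \qquad
\frac{\partial p_k}{\partial z_i} = p_k(\delta_{ki} - p_i)
\quad\Rightarrow\quad
\frac{\partial L}{\partial z_i} = -\sum_{k} y_k(\delta_{ki} - p_i) = p_i - y_i ,
\]

where the last step uses \( \sum_k y_k = 1 \). The analogous result for a single sigmoid output with binary cross-entropy is \( \partial L / \partial z = \sigma(z) - y \). In both cases the gradient with respect to the logit is just the prediction error, so the saturating factor \( \sigma(z)(1 - \sigma(z)) \) cancels at the output layer; the vanishing gradient concern applies mainly when sigmoid is stacked in hidden layers.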

Limitations and Challenges of Each Activation

Softmax activation can be numerically unstable because of its exponentials: large logits overflow the floating-point range and produce infinite or NaN outputs unless the maximum logit is subtracted before exponentiating. Sigmoid activation faces output saturation, leading to vanishing gradients that hinder deep network training and slow convergence. Each function also fits poorly outside its intended setting. Softmax forces probabilities to sum to one, so it cannot represent several labels being present at once, and Softmax classifiers can be skewed toward majority classes when the training data is imbalanced. Sigmoid outputs are not normalized across classes, so they cannot be read as a distribution over mutually exclusive classes.
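A common remedy for the overflow problem is to subtract the largest logit before exponentiating, which leaves the result unchanged mathematically because the shift cancels in the ratio. The sketch below compares a direct transcription of the formula with the shifted version; the function names and the example logits are assumptions for illustration. In plain Python `math.exp` raises `OverflowError` on overflow, whereas array libraries typically return `inf` and then NaN after the division.

```python
import math


def naive_softmax(logits: list[float]) -> list[float]:
    """Direct transcription of the formula; overflows for large logits."""
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(logits: list[float]) -> list[float]:
    """Shift by the maximum logit so the largest exponent is exp(0) = 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


big_logits = [1000.0, 1001.0, 1002.0]

try:
    naive_softmax(big_logits)
    naive_ok = True
except OverflowError:
    naive_ok = False  # math.exp(1000.0) exceeds the float range

stable = stable_softmax(big_logits)
print("naive succeeded:", naive_ok)
print("stable result:", stable, "sum:", sum(stable))
```

Because only differences between logits matter, the stable result for these inputs matches the Softmax of `[0.0, 1.0, 2.0]`.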

Selection Guidelines: When to Use Sigmoid or Softmax

Use sigmoid activation for binary classification tasks, where a single output unit gives the probability of the positive class, and whenever classes are not mutually exclusive, so that each output is an independent probability. Select softmax activation for multi-class classification problems with mutually exclusive classes, as it normalizes outputs into a probability distribution summing to one, facilitating clear class prediction. In short, consider sigmoid for multi-label classification scenarios and softmax when a single class label must be predicted among several options.
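These guidelines can be condensed into a small decision helper. The function below is purely hypothetical: its name, its parameters, and the string return values are invented for this illustration and do not correspond to any library API.

```python
def choose_output_activation(num_outputs: int, mutually_exclusive: bool) -> str:
    """Hypothetical helper encoding the selection guidelines above.

    num_outputs: number of units in the final layer.
    mutually_exclusive: True if exactly one class can be correct per example.
    """
    if num_outputs < 1:
        raise ValueError("num_outputs must be at least 1")
    if num_outputs == 1:
        # A single unit models one yes/no decision (binary classification).
        return "sigmoid"
    # Several units: softmax if classes compete, sigmoid if labels can co-occur.
    return "softmax" if mutually_exclusive else "sigmoid"


print(choose_output_activation(1, True))    # binary classification
print(choose_output_activation(10, True))   # single-label, multi-class
print(choose_output_activation(10, False))  # multi-label
```

A two-unit softmax and a single sigmoid unit describe the same binary model, so the `num_outputs == 1` branch reflects a convention rather than a mathematical necessity.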

Future Trends in Activation Function Development

Future trends in activation function development emphasize enhancing computational efficiency and mitigating issues like vanishing gradients, with emerging research exploring adaptive activation mechanisms beyond static functions like Softmax and Sigmoid. Neural architectures integrating dynamic activation functions tailored to specific layers or tasks show potential for improved model interpretability and generalization. Innovations in activation functions may also leverage bio-inspired and neuromorphic computing principles to better mimic complex brain processes, driving advances in artificial intelligence capabilities.

Softmax Activation vs Sigmoid Activation Infographic

techiny.com
