Reinforcement learning trains agents through trial and error by maximizing cumulative rewards in dynamic environments. Imitation learning, on the other hand, models agent behavior by mimicking expert demonstrations, bypassing the need for explicit reward signals. Choosing between these approaches depends on data availability, task complexity, and the desired learning efficiency.

Table of Comparison

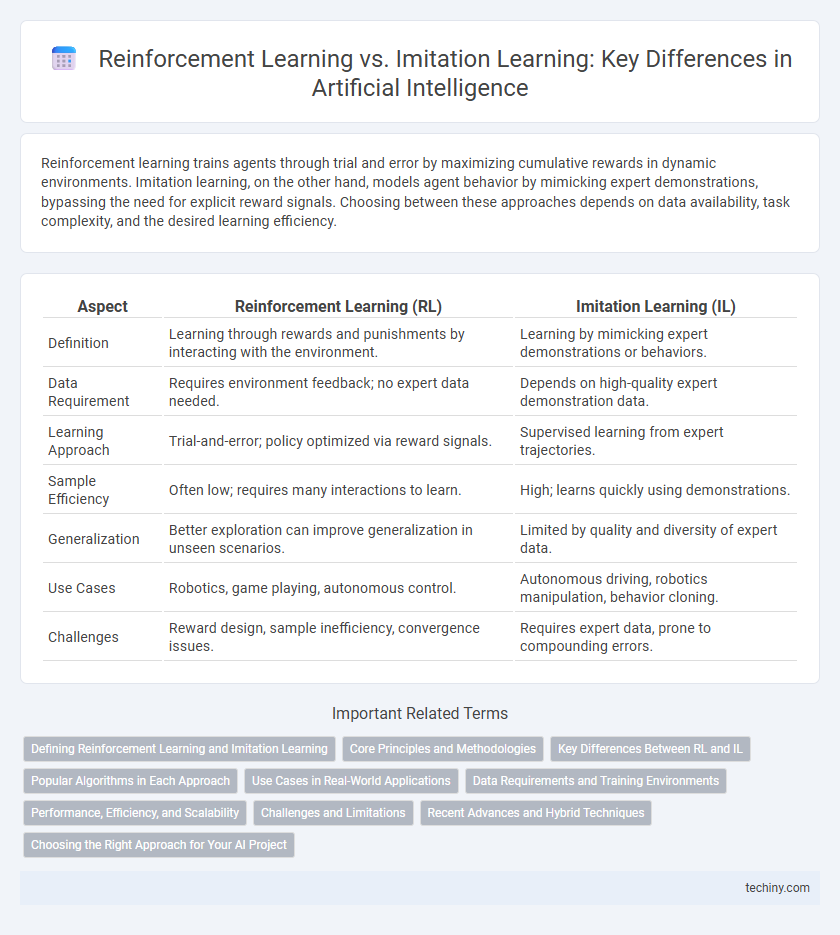

| Aspect | Reinforcement Learning (RL) | Imitation Learning (IL) |
|---|---|---|
| Definition | Learning through rewards and punishments by interacting with the environment. | Learning by mimicking expert demonstrations or behaviors. |
| Data Requirement | Requires environment feedback; no expert data needed. | Depends on high-quality expert demonstration data. |
| Learning Approach | Trial-and-error; policy optimized via reward signals. | Supervised learning from expert trajectories. |
| Sample Efficiency | Often low; requires many interactions to learn. | High; learns quickly using demonstrations. |
| Generalization | Better exploration can improve generalization in unseen scenarios. | Limited by quality and diversity of expert data. |
| Use Cases | Robotics, game playing, autonomous control. | Autonomous driving, robotics manipulation, behavior cloning. |
| Challenges | Reward design, sample inefficiency, convergence issues. | Requires expert data, prone to compounding errors. |

Defining Reinforcement Learning and Imitation Learning

Reinforcement Learning (RL) is a machine learning paradigm where an agent learns optimal behavior by interacting with an environment and receiving feedback in the form of rewards or penalties. Imitation Learning (IL) involves training an agent to mimic expert behavior by learning from demonstrations without requiring explicit reward signals. Both approaches aim to develop intelligent agents, but RL emphasizes trial-and-error exploration while IL relies on replicating observed expert actions.

Core Principles and Methodologies

Reinforcement learning relies on an agent learning optimal behaviors through trial-and-error interactions with an environment, maximizing cumulative rewards based on feedback signals. Imitation learning, by contrast, centers on teaching agents by mimicking expert demonstrations without explicit reward functions, effectively transferring knowledge through observed behavior patterns. Core methodologies in reinforcement learning involve policy optimization and value function approximation, while imitation learning employs techniques such as behavior cloning and inverse reinforcement learning.
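The "cumulative reward" an RL agent maximizes is usually written as a discounted sum of per-step rewards. The sketch below shows one common way to compute it; the discount factor `gamma` and the function name `discounted_return` are illustrative assumptions, not something the text above prescribes.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where the reward at step t is weighted by gamma**t.

    `gamma` is an assumed discount factor; gamma=1.0 gives the plain sum.
    """
    total = 0.0
    # Work backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# A reward of 1.0 that arrives two steps in the future, discounted by 0.5 per step.
print(discounted_return([0.0, 0.0, 1.0], gamma=0.5))  # prints 0.25
```

A smaller `gamma` makes the agent favor immediate rewards, while a value close to 1 makes distant rewards count almost as much as near ones.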

Key Differences Between RL and IL

Reinforcement Learning (RL) learns a policy from a reward signal: the agent acts, observes the outcome, and adjusts its behavior toward higher cumulative reward. Imitation Learning (IL) learns from expert demonstrations instead, so no reward function has to be specified. The key difference is the source of supervision: RL is exploration-driven and reward-based, while IL, in its simplest form (behavior cloning), reduces to supervised learning on expert state-action pairs.
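To make the supervised nature of imitation learning concrete, here is a minimal behavior cloning sketch for discrete states. It simply records which action the expert chose most often in each state. The function name `fit_behavior_cloning` and the traffic-light demonstrations are hypothetical, chosen only for illustration; practical systems usually fit a classifier or neural network instead of a lookup table.

```python
from collections import Counter, defaultdict


def fit_behavior_cloning(demonstrations):
    """Build a state -> action lookup from expert (state, action) pairs."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    # For each state, keep the action the expert chose most often.
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical expert data, including one noisy label for "red_light".
demos = [
    ("red_light", "stop"),
    ("green_light", "go"),
    ("red_light", "stop"),
    ("green_light", "go"),
    ("red_light", "go"),
]
policy = fit_behavior_cloning(demos)

print(policy["red_light"])         # majority vote over three demonstrations
print(policy.get("yellow_light"))  # None: the expert never showed this state
```

No reward appears anywhere in this code, and the policy has no answer for a state that is missing from the demonstrations. That gap is the practical face of the contrast described above.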

Popular Algorithms in Each Approach

Popular reinforcement learning algorithms include Q-Learning, Deep Q-Networks (DQN), and Proximal Policy Optimization (PPO). Q-Learning and DQN learn action values, while PPO optimizes the policy directly; all three improve decision-making by maximizing cumulative reward through trial and error. Imitation learning primarily employs Behavioral Cloning and Generative Adversarial Imitation Learning (GAIL), which use expert demonstrations to train agents without explicit reward signals. The two families differ fundamentally in data requirements and learning paradigm, but both contribute significantly to advances in autonomous systems and robotics.
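As an illustration of the trial-and-error side, the following is a minimal tabular Q-Learning sketch. The five-state chain environment, the hyperparameters, and all function names are assumptions made for this example; DQN and PPO replace the table with neural networks and are not shown.

```python
import random

N_STATES = 5      # hypothetical toy task: states 0..4, state 4 is the goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic chain: reward 1.0 only when the goal state is reached."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit, breaking ties randomly
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-Learning target: reward plus discounted best next-state value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best action per non-terminal state according to the learned table."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


q_table = q_learning()
print(greedy_policy(q_table))
```

The agent is never told that moving right is correct. It discovers this only because the reward at the goal propagates backwards through the table over many episodes.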

Use Cases in Real-World Applications

Reinforcement learning excels in dynamic settings such as autonomous robot navigation and game playing, where agents learn optimal policies through rewards and trial-and-error interaction. Imitation learning is widely applied where human expertise has to be replicated, such as autonomous driving and surgical robotics, by training models on expert demonstrations. Both paradigms enable advanced decision-making systems but differ in data dependency: reinforcement learning relies on exploration, while imitation learning depends on labeled expert behavior.

Data Requirements and Training Environments

Reinforcement learning requires extensive interaction with a training environment to learn optimal policies from trial-and-error feedback, which often demands substantial computational resources and time. Imitation learning relies on datasets of expert demonstrations, which enables faster training by directly mimicking observed behavior, but it may struggle to generalize to unseen scenarios. The choice between these methods depends on data availability and environment complexity: reinforcement learning suits complex, unknown settings, while imitation learning benefits from high-quality expert data.

Performance, Efficiency, and Scalability

Reinforcement Learning (RL) excels in long-term performance by enabling agents to learn optimal policies through trial-and-error interaction with complex environments, often achieving higher final rewards than Imitation Learning (IL). IL is more efficient during training because expert demonstrations reduce the need for extensive exploration, but it scales poorly when expert data is scarce or costly to obtain. In RL, scalability is limited by high computational demands and sample inefficiency. In IL, results improve with the quality and coverage of the demonstrations, but the policy struggles to generalize beyond the behaviors it has seen, which limits overall adaptability.

Challenges and Limitations

Reinforcement learning faces challenges such as sparse reward signals, high sample complexity, and difficulty balancing exploration and exploitation, which often lead to slow convergence and instability. Imitation learning struggles with covariate shift and distribution mismatch, where the learned policy may fail when exposed to states not covered by expert demonstrations. Both methods encounter limitations in scalability and generalization to complex, dynamic environments, restricting their effective deployment in real-world applications.
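The covariate shift problem can be made tangible with a back-of-the-envelope model. Assume, purely for illustration, that a cloned policy deviates from the expert with a fixed, independent probability at every step and never recovers once it has left the states the expert visited. Under those simplifying assumptions the chance of still being on the expert's path shrinks geometrically with the horizon; the function name below is hypothetical.

```python
def prob_still_on_expert_path(per_step_error, horizon):
    """Probability of making no mistake in `horizon` consecutive steps.

    Assumes independent errors and no recovery after the first mistake,
    which is a deliberately pessimistic simplification.
    """
    return (1.0 - per_step_error) ** horizon


for horizon in (10, 100, 1000):
    print(horizon, round(prob_still_on_expert_path(0.01, horizon), 4))
```

Even a 1% per-step error rate leaves only about a 37% chance of staying on the demonstrated path after 100 steps, which is why small imitation errors compound over long tasks.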

Recent Advances and Hybrid Techniques

Recent advances in reinforcement learning (RL) leverage deep neural networks to enhance exploration and decision-making efficiency, enabling agents to surpass earlier methods on established benchmarks in dynamic environments. Imitation learning (IL) has evolved with techniques such as generative adversarial imitation learning (GAIL), which mimics expert behavior more closely by capturing complex data distributions. Hybrid methods that integrate RL and IL capitalize on the strengths of both paradigms, improving sample efficiency and robustness by using expert demonstrations to guide exploration while still allowing autonomous learning and adaptation.
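One simple way to combine the two paradigms is to warm-start a value table from demonstrations and then fine-tune it with ordinary RL. The sketch below is one possible scheme on a hypothetical five-state chain task, not a specific published method; the `bonus` value, the function names, and the environment are all assumptions.

```python
import random

GOAL = 4          # hypothetical chain task: states 0..4, goal at state 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def warm_start(demonstrations, bonus=0.1):
    """Seed a Q-table so demonstrated actions win the initial greedy choice."""
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]
    for state, action in demonstrations:
        q[state][action] = bonus
    return q


def fine_tune(q, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Epsilon-greedy Q-Learning that starts from the seeded table."""
    rng = random.Random(seed)
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]


# Hypothetical expert trajectory: always move right.
expert_demos = [(0, 1), (1, 1), (2, 1), (3, 1)]
seeded = warm_start(expert_demos)
print(greedy_policy(seeded))             # follows the expert before any RL
print(greedy_policy(fine_tune(seeded)))  # policy after reward-driven fine-tuning
```

The demonstrations only bias the starting point. The reward signal still drives the final values, so the agent can correct a poor demonstration during fine-tuning.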

Choosing the Right Approach for Your AI Project

Reinforcement Learning excels in environments where trial-and-error interaction leads to optimized decision-making, making it ideal for dynamic, complex tasks requiring continuous adaptation. Imitation Learning leverages expert demonstrations to train models efficiently, reducing the need for extensive exploration and enabling faster convergence in structured scenarios. Selecting between these methods depends on the availability of expert data, computational resources, and the specific goals of your AI project.

Reinforcement Learning vs Imitation Learning Infographic

techiny.com
