Accuracy measures the overall correctness of a classification model by dividing the number of correct predictions by the total number of predictions, which makes it intuitive but potentially misleading on imbalanced datasets. F1 score is the harmonic mean of precision and recall, so it gives a more robust evaluation when both false positives and false negatives matter and the class of interest is rare. Choosing between accuracy and F1 score depends on whether the problem emphasizes overall correctness or performance on a specific class.

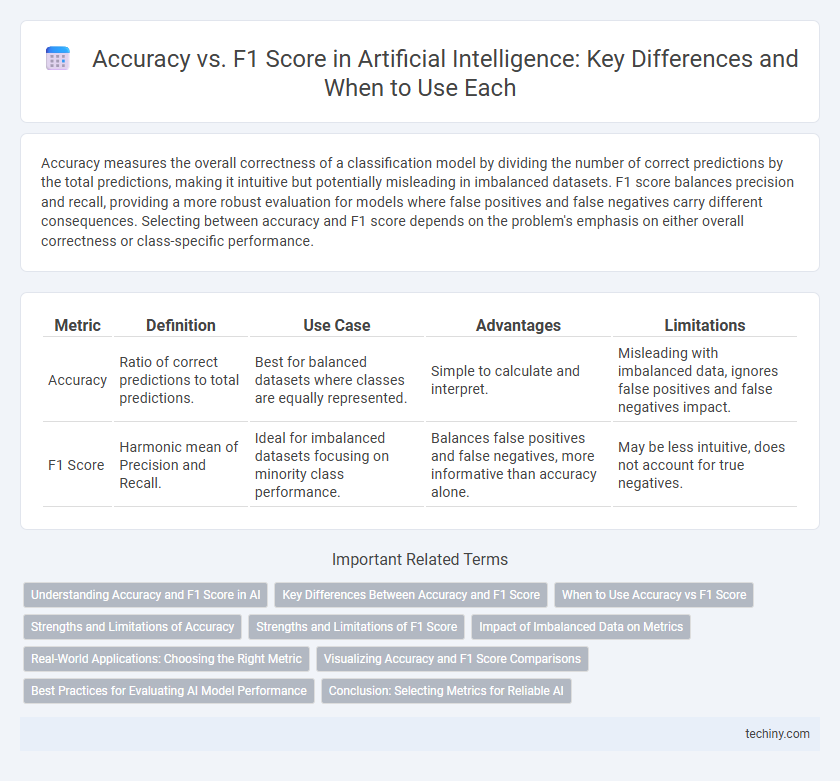

Table of Comparison

| Metric | Definition | Use Case | Advantages | Limitations |
|---|---|---|---|---|
| Accuracy | Ratio of correct predictions to total predictions. | Best for balanced datasets where classes are equally represented. | Simple to calculate and interpret. | Misleading with imbalanced data, ignores false positives and false negatives impact. |
| F1 Score | Harmonic mean of Precision and Recall. | Ideal for imbalanced datasets focusing on minority class performance. | Balances false positives and false negatives, more informative than accuracy alone. | May be less intuitive, does not account for true negatives. |

Understanding Accuracy and F1 Score in AI

Accuracy measures the proportion of correctly predicted instances among all predictions, providing a straightforward metric for overall performance. F1 Score combines precision and recall into a single number, which makes it important for assessing models on imbalanced datasets, where a high accuracy can hide missed positives and false alarms. The choice between the two depends on the specific AI application and on how much false positive and false negative errors matter in that context.
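For reference, the standard definitions behind these descriptions can be written in terms of confusion-matrix counts. The symbols TP, TN, FP and FN (true positives, true negatives, false positives, false negatives) are notation introduced here, not taken from the article.

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}
$$

$$
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$

Note that TN appears in the accuracy formula but not in the F1 formula, which is why F1 does not account for true negatives.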

Key Differences Between Accuracy and F1 Score

Accuracy measures the proportion of correctly predicted instances among all predictions, making it suitable for balanced datasets but less reliable with imbalanced classes. F1 Score, the harmonic mean of precision and recall, provides a more informative metric for imbalanced datasets by balancing false positives and false negatives. Key differences between Accuracy and F1 Score lie in their sensitivity to class imbalance and their ability to capture the trade-off between precision and recall in classification tasks.

When to Use Accuracy vs F1 Score

Accuracy is ideal for balanced datasets where the classes are evenly distributed, providing a straightforward measure of overall correctness. F1 Score excels in situations with imbalanced classes, emphasizing the harmonic mean of precision and recall to better capture the performance on minority classes. Choosing between Accuracy and F1 Score depends on the importance of false positives and false negatives in the specific AI application.

Strengths and Limitations of Accuracy

Accuracy measures the proportion of correctly predicted instances among all predictions, making it intuitive and easy to interpret for balanced datasets. It performs well when classes are evenly distributed but can be misleading in imbalanced datasets, as high accuracy may result from simply predicting the majority class. Accuracy does not account for the trade-offs between precision and recall, limiting its effectiveness in evaluating models where false positives and false negatives carry different consequences.
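The majority-class effect described above can be illustrated with a small sketch. The data is hypothetical (95 negatives, 5 positives), and the helper names `confusion_counts`, `accuracy` and `f1_score` are chosen for this example rather than taken from any library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification task."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    return tp, fp, fn, tn


def accuracy(tp, fp, fn, tn):
    """Correct predictions divided by all predictions."""
    total = tp + fp + fn + tn
    return (tp + tn) / total if total else 0.0


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall, defined as 0.0 when undefined."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical imbalanced dataset: 95 negatives, 5 positives.
y_true = [0] * 95 + [1] * 5
# A "model" that always predicts the majority (negative) class.
y_pred = [0] * 100

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
majority_accuracy = accuracy(tp, fp, fn, tn)
majority_f1 = f1_score(tp, fp, fn)

print(f"accuracy = {majority_accuracy:.2f}")  # accuracy = 0.95
print(f"F1       = {majority_f1:.2f}")        # F1       = 0.00
```

The always-negative model looks strong on accuracy while finding none of the positives, which is exactly the case where accuracy alone misleads.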

Strengths and Limitations of F1 Score

F1 Score excels in evaluating models on imbalanced datasets by balancing precision and recall, providing a more comprehensive measure of performance than accuracy alone. It highlights the trade-off between false positives and false negatives, which is critical in applications like fraud detection and medical diagnosis. However, F1 Score ignores true negatives and weights precision and recall equally, so it can be less informative when the class distribution is balanced or when the costs of the two error types differ significantly.
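To see why the harmonic mean "balances" precision and recall, it may help to compare it with a plain average. The sketch below uses made-up precision and recall values, and the function name `f1_from_precision_recall` is an assumption for illustration.

```python
def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical model: every flagged item is correct (precision 1.0),
# but it finds only 10% of the true positives (recall 0.1).
precision, recall = 1.0, 0.1

arithmetic_mean = (precision + recall) / 2
f1 = f1_from_precision_recall(precision, recall)

print(f"arithmetic mean = {arithmetic_mean:.3f}")  # arithmetic mean = 0.550
print(f"F1              = {f1:.3f}")               # F1              = 0.182
```

The harmonic mean is pulled toward the weaker of the two values, so a model cannot reach a high F1 by excelling at only one of precision or recall.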

Impact of Imbalanced Data on Metrics

Accuracy can be misleading on imbalanced datasets because a model can score highly by favoring the majority class while neglecting the minority class. F1 Score is computed from precision and recall on the positive class, so it offers a more reliable evaluation under class imbalance. This makes F1 Score the preferred choice when minority class detection is critical.
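One way to see the effect of imbalance is to hold a classifier's behavior fixed and vary how rare the positive class is. The sketch below assumes a hypothetical classifier with recall 0.8 and false positive rate 0.1, and computes the expected metrics analytically; these numbers and the function name `expected_metrics` are illustrative assumptions.

```python
def expected_metrics(prevalence, recall=0.8, false_positive_rate=0.1):
    """Expected accuracy and F1 for a fixed classifier at a given positive rate.

    All four quantities below are fractions of the whole dataset.
    """
    tp = recall * prevalence
    fn = (1 - recall) * prevalence
    fp = false_positive_rate * (1 - prevalence)
    tn = (1 - false_positive_rate) * (1 - prevalence)

    acc = tp + tn  # the four fractions sum to 1, so no division is needed
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return acc, f1


for prevalence in (0.5, 0.1, 0.01):
    acc, f1 = expected_metrics(prevalence)
    print(f"prevalence={prevalence:.2f}  accuracy={acc:.3f}  F1={f1:.3f}")
# prevalence=0.50  accuracy=0.850  F1=0.842
# prevalence=0.10  accuracy=0.890  F1=0.593
# prevalence=0.01  accuracy=0.899  F1=0.137
```

Under these assumptions the same classifier gains accuracy as positives become rarer, while its F1 on the positive class drops sharply, because false positives from the large negative class swamp the few true positives.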

Real-World Applications: Choosing the Right Metric

In real-world applications of artificial intelligence, the choice between accuracy and F1 score depends on the problem's class distribution and the cost of false positives versus false negatives. Accuracy is suitable for balanced datasets where overall correctness matters, while the F1 score excels in imbalanced datasets by balancing precision and recall to ensure minority classes are properly evaluated. Selecting the right metric directly impacts model performance evaluation and decision-making in domains like healthcare, fraud detection, and natural language processing.

Visualizing Accuracy and F1 Score Comparisons

Visualizing accuracy and F1 score side by side clarifies model performance by showing the difference between overall correctness and the balance of precision and recall. Accuracy is the ratio of correct predictions to total predictions, while the F1 score is the harmonic mean of precision and recall, which matters most on imbalanced datasets. Graphical representations such as bar charts, confusion matrices, and precision-recall curves allow for intuitive comparison and help in selecting AI models for classification tasks.
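As a minimal, dependency-free sketch of the idea, the snippet below renders a confusion matrix and a bar comparison as plain text. The counts are hypothetical, and `format_confusion_matrix` and `text_bar` are helper names invented for this example; a plotting library would normally be used for real charts.

```python
def format_confusion_matrix(tp, fp, fn, tn):
    """Render a 2x2 confusion matrix as aligned text."""
    rows = [
        ("", "pred +", "pred -"),
        ("actual +", str(tp), str(fn)),
        ("actual -", str(fp), str(tn)),
    ]
    return "\n".join(f"{a:<10}{b:>8}{c:>8}" for a, b, c in rows)


def text_bar(label, value, width=40):
    """Render a value in [0, 1] as a horizontal text bar."""
    filled = round(value * width)
    return f"{label:<10}|{'#' * filled}{'.' * (width - filled)}| {value:.2f}"


# Hypothetical counts for an imbalanced task (5 positives, 95 negatives).
tp, fp, fn, tn = 3, 2, 2, 93

accuracy = (tp + tn) / (tp + fp + fn + tn)
f1 = 2 * tp / (2 * tp + fp + fn)

matrix_text = format_confusion_matrix(tp, fp, fn, tn)
print(matrix_text)
print()
print(text_bar("accuracy", accuracy))
print(text_bar("F1", f1))
```

Placing the two bars next to the confusion matrix makes the gap visible: the large true-negative cell drives accuracy up, while F1 reflects only the cells involving the positive class.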

Best Practices for Evaluating AI Model Performance

Accuracy measures the proportion of correctly predicted instances over the total instances, making it suitable for balanced datasets. F1 Score, the harmonic mean of precision and recall, is preferable for imbalanced datasets, where errors on the minority class barely move accuracy. Best practices recommend using F1 Score for classification tasks with skewed classes and combining multiple metrics to achieve a comprehensive assessment of AI model performance.
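Combining metrics can be as simple as reporting them together from the same predictions. The following is a minimal sketch with hypothetical labels; `evaluation_report` is a name made up for this example. In practice, a library such as scikit-learn provides `accuracy_score`, `f1_score` and `classification_report` in `sklearn.metrics` for the same purpose.

```python
def evaluation_report(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1_denom = 2 * tp + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": 2 * tp / f1_denom if f1_denom else 0.0,
        "positives": tp + fn,  # number of actual positive examples
    }


# Hypothetical labels: 4 positives, 6 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

report = evaluation_report(y_true, y_pred)
for name, value in report.items():
    print(f"{name:<10} {value:.3f}" if isinstance(value, float) else f"{name:<10} {value}")
```

Reading the numbers side by side shows where a model is weak: here accuracy is 0.700, but recall is only 0.500, meaning half of the positives are missed.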

Conclusion: Selecting Metrics for Reliable AI

Selecting metrics such as accuracy or F1 score depends on the specific AI application and its class distribution. Accuracy is suitable for balanced datasets, while F1 score provides a better measure for imbalanced classes by balancing precision and recall. Reliable AI systems prioritize metrics aligned with their operational goals to ensure effective model evaluation and deployment.

Accuracy vs F1 Score Infographic

techiny.com
