Perplexity measures how well a language model predicts a sample of text. It reflects the model's uncertainty, and lower values indicate better performance. Accuracy evaluates the correctness of a model's predictions by comparing them with the actual outcomes, giving a direct measure of how often the model is right. Tracking both metrics helps in building AI systems whose output is both coherent and correct.

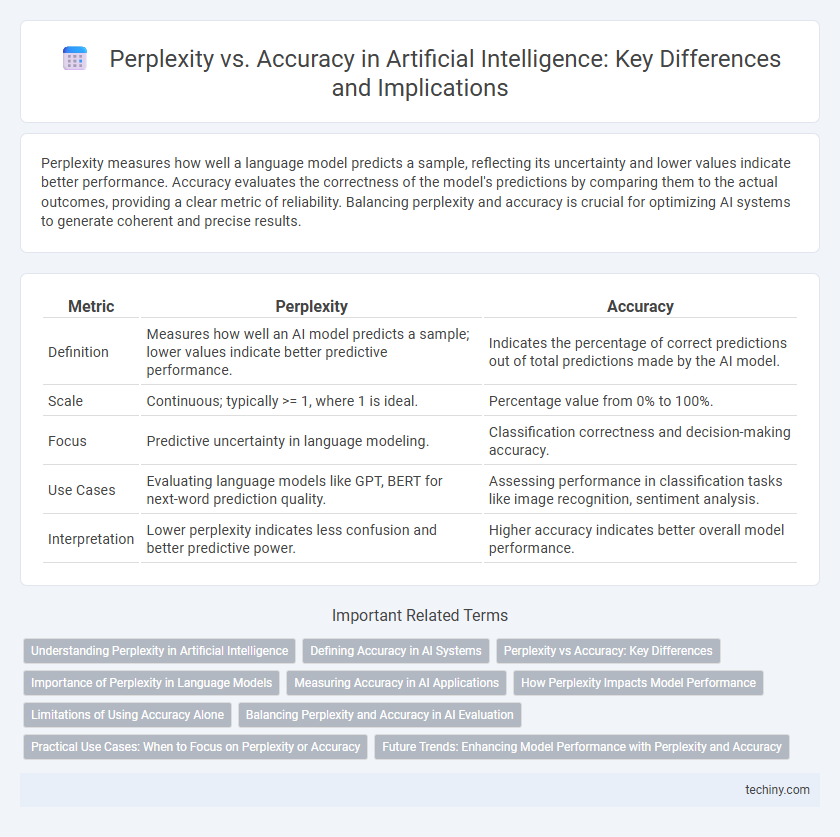

Table of Comparison

| Metric | Perplexity | Accuracy |
|---|---|---|
| Definition | Measures how well a language model predicts a sample; lower values indicate better predictive performance. | The percentage of correct predictions out of all predictions the model makes. |
| Scale | Continuous, always >= 1; 1 (perfect prediction) is the theoretical best. | Percentage from 0% to 100%. |
| Focus | Predictive uncertainty in language modeling. | Correctness of discrete predictions such as class labels. |
| Use Cases | Evaluating autoregressive language models such as GPT on next-word prediction quality. | Assessing classification tasks such as image recognition and sentiment analysis. |
| Interpretation | Lower perplexity indicates less uncertainty and better predictive power. | Higher accuracy indicates that more predictions are correct. |

Understanding Perplexity in Artificial Intelligence

Perplexity measures how well a language model predicts a sample. It is the inverse of the probability the model assigns to the test set, normalized by the number of words N, that is P(w_1, ..., w_N)^(-1/N), which is equivalent to exponentiating the average negative log-probability per word. Lower perplexity on held-out text means the model assigns higher probability to the words that actually occur. Researchers use it to compare models and to monitor generalization: if training perplexity keeps falling while validation perplexity rises, the model is likely overfitting.
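The definition above can be sketched in Python. This is a minimal illustration, assuming the probabilities the model assigned to each actual test token are already available; the function name `perplexity` and the sample numbers are hypothetical. It works in log space, computing exp(-(1/N) * sum(log p_i)), which is mathematically the same as P(w_1, ..., w_N)^(-1/N) but avoids numeric underflow on long texts.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity of a sequence, given the model's probability for each actual token.

    Equivalent to P(w_1..w_N) ** (-1/N), computed in log space
    so long sequences do not underflow to zero.
    """
    if not token_probs:
        raise ValueError("need at least one token")
    # Average negative log-likelihood per token (cross-entropy in nats).
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# Hypothetical probabilities a model assigned to the 4 tokens that actually occurred.
probs = [0.5, 0.25, 0.5, 0.125]
print(round(perplexity(probs), 3))  # prints 3.364
```

Here the four probabilities multiply to 2^-7, so the perplexity is 2^(7/4), roughly 3.36. A model that predicted every token with probability 1 would reach the minimum value of 1.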

Defining Accuracy in AI Systems

Accuracy in AI systems is the proportion of predictions or classifications a model gets right out of all cases assessed, reflecting its overall effectiveness on the task. In binary classification it is (TP + TN) / (TP + TN + FP + FN): true positives plus true negatives divided by the total number of predictions. With more than two classes it is simply the number of exact matches divided by the number of predictions. Unlike perplexity, which measures a language model's uncertainty, accuracy directly quantifies the correctness of outputs in classification and decision-making tasks.
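A minimal Python sketch of both forms of the accuracy formula follows. The function names `accuracy` and `binary_accuracy` and the sentiment labels are hypothetical examples, not taken from any particular library.

```python
from typing import Sequence


def accuracy(y_true: Sequence[object], y_pred: Sequence[object]) -> float:
    """Fraction of predictions that exactly match the true labels (any number of classes)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def binary_accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Binary-classification form: (TP + TN) / (TP + TN + FP + FN)."""
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical sentiment labels for five reviews.
y_true = ["pos", "neg", "pos", "pos", "neg"]
y_pred = ["pos", "neg", "neg", "pos", "neg"]
print(accuracy(y_true, y_pred))                  # 4 of 5 correct -> 0.8
print(binary_accuracy(tp=2, tn=2, fp=0, fn=1))   # same predictions as counts -> 0.8
```

Treating "pos" as the positive class, these predictions give TP = 2, TN = 2, FP = 0 and FN = 1, so both functions agree on 0.8.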

Perplexity vs Accuracy: Key Differences

Perplexity measures a language model's uncertainty when predicting a sample, that is, how much probability it assigns to the words that actually occur, while accuracy is the proportion of predictions that are correct. Lower perplexity signals better performance through less uncertainty, whereas higher accuracy directly reflects how often specific predictions are right. The key difference is that perplexity scores the whole predicted probability distribution, while accuracy only checks whether the single chosen output is correct, so two models can share the same accuracy yet differ in perplexity. Understanding this distinction is crucial for optimizing natural language processing models: perplexity assesses probabilistic prediction quality, and accuracy focuses on discrete outcomes.
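To make the difference concrete, here is a small hypothetical Python example in which two toy models produce next-word distributions over a three-word vocabulary. Both rank the correct word first at every position, so their top-1 accuracy is identical, but they assign it different probabilities. All names and numbers are illustrative assumptions.

```python
import math

# Tokens that actually occur in a tiny hypothetical test sequence.
targets = ["cat", "sat", "mat"]

# One next-word distribution per position for each hypothetical model.
confident = [
    {"cat": 0.9, "sat": 0.05, "mat": 0.05},
    {"cat": 0.05, "sat": 0.9, "mat": 0.05},
    {"cat": 0.05, "sat": 0.05, "mat": 0.9},
]
hesitant = [
    {"cat": 0.4, "sat": 0.3, "mat": 0.3},
    {"cat": 0.3, "sat": 0.4, "mat": 0.3},
    {"cat": 0.3, "sat": 0.3, "mat": 0.4},
]


def top1_accuracy(dists, targets):
    # A prediction is correct when the most probable word is the target.
    hits = sum(max(d, key=d.get) == t for d, t in zip(dists, targets))
    return hits / len(targets)


def perplexity(dists, targets):
    # exp of the mean negative log-probability assigned to the actual words.
    avg_nll = -sum(math.log(d[t]) for d, t in zip(dists, targets)) / len(targets)
    return math.exp(avg_nll)


for name, dists in [("confident", confident), ("hesitant", hesitant)]:
    print(name, top1_accuracy(dists, targets), round(perplexity(dists, targets), 2))
```

Both models score a top-1 accuracy of 1.0, yet the confident model's perplexity is about 1.11 while the hesitant model's is 2.5, which is exactly the information accuracy alone does not capture.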

Importance of Perplexity in Language Models

Perplexity is a critical metric for evaluating language models because it measures how uncertain a model is across word sequences. Lower perplexity means the model assigns, on average, higher probability to the word that actually comes next, which tends to go along with more fluent and coherent generated text. Whereas accuracy scores each prediction as simply right or wrong, perplexity captures the probabilistic nature of language, giving a more nuanced assessment of model performance in natural language processing tasks. A useful intuition is that perplexity acts as an effective branching factor: a perplexity of 10 means the model is, on average, as uncertain as if it were choosing uniformly among 10 words.
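The branching-factor intuition can be checked numerically with a short Python sketch. The helper name and the token count of 20 are hypothetical; the point is that if a model assigns probability 1/k to every actual token, its perplexity comes out to k, up to floating-point rounding.

```python
import math


def perplexity(token_probs):
    # exp of the average negative log-probability of the actual tokens.
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))


# A model that is uniformly unsure among k candidate words at every step
# assigns probability 1/k to whichever word actually appears.
for k in (2, 10, 50_000):
    probs = [1 / k] * 20  # 20 hypothetical tokens
    print(k, round(perplexity(probs), 6))
```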

Measuring Accuracy in AI Applications

Measuring accuracy in AI applications involves evaluating how well a model's predictions match the true outcomes, with accuracy typically expressed as the proportion of correct predictions over total predictions. Perplexity, often used in language models, quantifies how well a probability distribution predicts a sample, where lower perplexity indicates better model performance. Comparing perplexity to accuracy highlights that while accuracy directly measures classification correctness, perplexity provides insight into the model's uncertainty and predictive confidence in natural language processing tasks.

How Perplexity Impacts Model Performance

Perplexity quantifies how well an AI language model predicts a sample, with lower perplexity indicating better predictive performance and better handling of language patterns. High perplexity signals uncertainty in the model's predictions, which often shows up as less accurate and less coherent output in natural language processing tasks. Language models are typically trained to minimize cross-entropy loss, and perplexity is the exponential of that loss, so refining the probability distribution over possible next words lowers perplexity directly. Lower perplexity frequently, but not always, translates into higher accuracy and more contextually relevant results.
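The link between training loss and perplexity can be sketched in Python. This assumes the probabilities of the actual tokens are available and uses hypothetical helper names; it shows that perplexity is the log base raised to the cross-entropy, so the same value results whether the loss is measured in nats (natural log, which deep-learning frameworks typically report) or in bits.

```python
import math


def cross_entropy(token_probs, base=math.e):
    """Average negative log-probability of the actual tokens, in the given log base."""
    return -sum(math.log(p, base) for p in token_probs) / len(token_probs)


def perplexity_from_loss(loss, base=math.e):
    """Perplexity is the log base raised to the power of the cross-entropy."""
    return base ** loss


probs = [0.5, 0.25, 0.125, 0.5]           # hypothetical per-token probabilities
loss_nats = cross_entropy(probs)          # natural-log loss
loss_bits = cross_entropy(probs, base=2)  # same quantity measured in bits
print(perplexity_from_loss(loss_nats), perplexity_from_loss(loss_bits, base=2))
```

Both printed values are about 3.36 (2^1.75), because changing the log base rescales the loss and the exponentiation undoes it. Any step that lowers the loss therefore lowers perplexity by the same monotone relation.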

Limitations of Using Accuracy Alone

Accuracy alone fails to capture the nuances of model performance in artificial intelligence, especially on imbalanced datasets, where a model can score high accuracy simply by favoring the majority class. Perplexity offers a more granular measure of predictive uncertainty, reflecting how well a language model predicts a sample and thus providing deeper insight into its capabilities. Relying solely on accuracy risks overlooking critical errors and model biases that probability-based measures such as perplexity can help surface, so combining metrics gives a more complete evaluation.
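A minimal Python illustration of the imbalanced-data problem, using a hypothetical fraud-detection test set and a degenerate classifier that always predicts the majority class. Recall on the minority class (the fraction of actual fraud cases that were caught) is included only as a contrasting measure.

```python
# Hypothetical imbalanced test set: 95 legitimate transactions, 5 fraudulent.
y_true = ["ok"] * 95 + ["fraud"] * 5

# A degenerate "model" that always predicts the majority class.
y_pred = ["ok"] * len(y_true)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Minority-class recall: fraction of real fraud cases the model flagged.
fraud_total = sum(t == "fraud" for t in y_true)
fraud_caught = sum(t == p == "fraud" for t, p in zip(y_true, y_pred))
fraud_recall = fraud_caught / fraud_total

print(f"accuracy={accuracy:.2f}, fraud recall={fraud_recall:.2f}")
# prints: accuracy=0.95, fraud recall=0.00
```

The classifier reaches 95% accuracy while catching none of the fraud cases, which is the kind of failure a single accuracy number hides.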

Balancing Perplexity and Accuracy in AI Evaluation

Balancing perplexity and accuracy in AI evaluation is important for developing robust language models that generate coherent, contextually relevant text. Perplexity measures the model's uncertainty in predicting the next word, while accuracy reflects whether specific predictions are correct; because the two can diverge, optimizing one does not guarantee the other, so both should be tracked. Effective AI systems aim to lower perplexity to improve fluency without sacrificing accuracy, producing outputs that are both reliable and meaningful.

Practical Use Cases: When to Focus on Perplexity or Accuracy

In practical AI applications, perplexity is a key metric for language models because it measures uncertainty in predicting text sequences, which makes it especially useful during model development and tuning. Accuracy becomes more relevant in tasks that demand specific, discrete answers, such as classification or decision-making systems, where the correctness of each output directly affects the user experience or the outcome. The choice depends on the use case: prioritize perplexity for generative models and language modeling, and accuracy for tasks with well-defined labels, such as image recognition or sentiment analysis.

Future Trends: Enhancing Model Performance with Perplexity and Accuracy

Future work in artificial intelligence aims to improve perplexity and accuracy together, using advanced algorithms and larger, more diverse datasets. Researchers are exploring hybrid evaluation metrics that combine perplexity's measure of uncertainty with accuracy's measure of correctness to build more robust natural language processing models. Advances in unsupervised and reinforcement learning techniques aim to reduce perplexity while also raising accuracy, though the two objectives do not always move together, which is one more reason to report both.

Perplexity vs Accuracy Infographic

techiny.com