Q-Learning and SARSA are reinforcement learning algorithms used to optimize decision-making policies through trial and error in uncertain environments. Q-Learning updates its value estimates based on the maximum future reward, making it an off-policy algorithm focused on learning the optimal policy regardless of the agent's actions. SARSA, as an on-policy algorithm, updates values using the action actually taken by the agent, resulting in more conservative policies that consider the agent's behavior during learning.

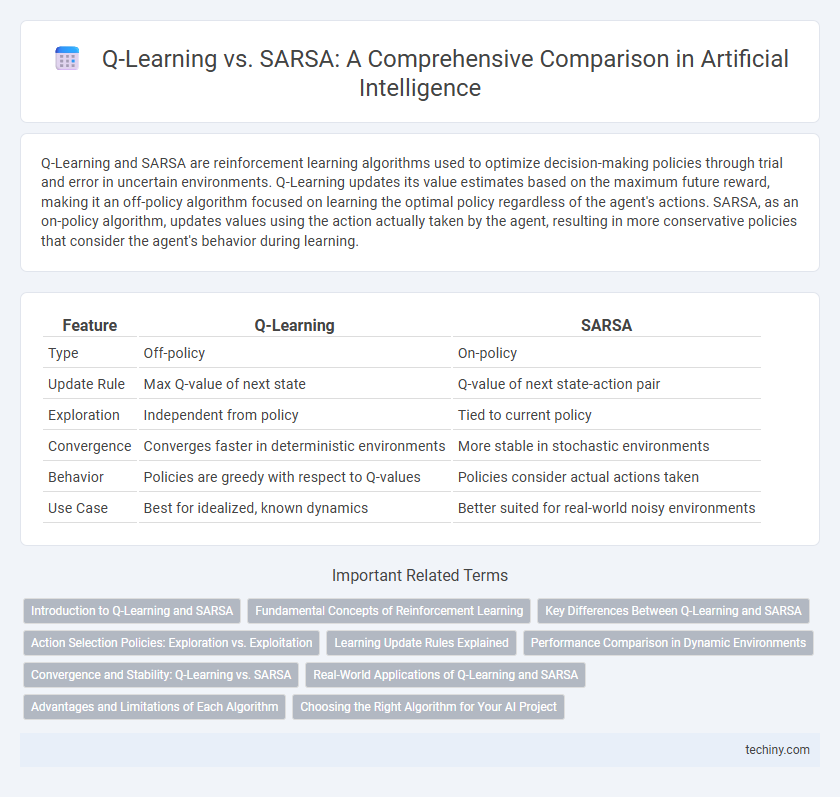

Table of Comparison

| Feature | Q-Learning | SARSA |

|---|---|---|

| Type | Off-policy | On-policy |

| Update Rule | Max Q-value of next state | Q-value of next state-action pair |

| Exploration | Independent from policy | Tied to current policy |

| Convergence | Converges faster in deterministic environments | More stable in stochastic environments |

| Behavior | Policies are greedy with respect to Q-values | Policies consider actual actions taken |

| Use Case | Best for idealized, known dynamics | Better suited for real-world noisy environments |

Introduction to Q-Learning and SARSA

Q-Learning and SARSA are two prominent reinforcement learning algorithms used to find the optimal policy in decision-making tasks. Q-Learning is an off-policy method that updates the action-value function by maximizing the expected future rewards, independent of the agent's current policy. SARSA, an on-policy algorithm, updates the action-value function based on the action actually taken by the agent, considering the current policy's behavior.

Fundamental Concepts of Reinforcement Learning

Q-Learning and SARSA are core algorithms in reinforcement learning, both relying on the Markov Decision Process (MDP) framework to optimize agent behavior. Q-Learning is an off-policy method that updates its Q-values using the maximum possible reward from the next state, promoting exploration of optimal policies. SARSA, on the other hand, is an on-policy algorithm that updates Q-values based on the actual actions taken, integrating the agent's current policy to balance exploration and exploitation.

Key Differences Between Q-Learning and SARSA

Q-Learning employs an off-policy approach, learning the optimal policy independently of the agent's actions by updating values based on the maximum expected future rewards, whereas SARSA uses an on-policy method, updating values based on the agent's actual actions and experiences. The difference in updating rules means Q-Learning tends to converge faster toward the optimal policy but may be less stable in environments with stochastic rewards, while SARSA provides safer, more conservative learning by accounting for the policy being followed. Understanding these distinctions is crucial for selecting the appropriate reinforcement learning algorithm tailored to specific environment dynamics and exploration strategies.

Action Selection Policies: Exploration vs. Exploitation

Q-Learning employs a greedy action selection policy that prioritizes exploitation by choosing the action with the highest estimated future reward while incorporating e-greedy strategies to ensure sufficient exploration. SARSA, on the other hand, follows an on-policy approach where the action selection is based on the current policy, balancing exploration and exploitation by updating estimates according to the actual action taken. This fundamental difference affects how each algorithm navigates the exploration-exploitation trade-off and adapts to dynamic environments in reinforcement learning tasks.

Learning Update Rules Explained

Q-Learning updates its value function by maximizing the expected future rewards using the formula: Q(s,a) - Q(s,a) + a [r + g max_a' Q(s',a') - Q(s,a)], where the next action is chosen greedily to promote optimal policies. SARSA updates differently by using the actual next action taken in the policy, following the rule: Q(s,a) - Q(s,a) + a [r + g Q(s',a') - Q(s,a)], which accounts for the agent's exploratory decisions. These distinctions in update rules impact convergence and policy behavior in reinforcement learning environments.

Performance Comparison in Dynamic Environments

Q-Learning often outperforms SARSA in dynamic environments due to its off-policy nature, enabling it to learn the optimal policy without directly following the current policy's actions. SARSA, being an on-policy algorithm, adapts more cautiously by considering the agent's actual behavior, which can lead to slower convergence but potentially safer exploration in highly uncertain states. Performance in dynamic settings depends on factors like state transition variability and reward structure, with Q-Learning favoring faster adaptability and SARSA providing robustness under evolving environmental conditions.

Convergence and Stability: Q-Learning vs. SARSA

Q-Learning typically converges faster than SARSA due to its off-policy nature, optimizing for the best possible action regardless of current policy, which can sometimes lead to instability in highly dynamic environments. SARSA, as an on-policy algorithm, updates action values based on the current policy's actions, providing greater stability and smoother convergence by accounting for the exploration strategy. Both algorithms rely on learning rates and exploration parameters that critically impact convergence speed and stability in reinforcement learning tasks.

Real-World Applications of Q-Learning and SARSA

Q-Learning excels in environments where exploration is less costly, such as autonomous robotics and personalized recommendation systems, by efficiently learning optimal policies through off-policy updates. SARSA is favored in safety-critical applications like autonomous driving and healthcare decision support, as its on-policy approach accounts for the current policy's behavior, reducing the risk of unsafe actions. Both algorithms enhance decision-making processes in complex, dynamic settings, with Q-Learning providing faster convergence and SARSA offering more cautious policy updates.

Advantages and Limitations of Each Algorithm

Q-Learning offers the advantage of learning optimal policies by estimating the highest future rewards, making it effective in deterministic and stochastic environments; however, it can suffer from overestimations leading to suboptimal policies in some cases. SARSA, on the other hand, updates policies based on the current action's outcome, providing more conservative learning that aligns better with the actual policy execution, but it may converge more slowly and be less efficient in exploring optimal actions. Both algorithms struggle with scalability in large state-action spaces, necessitating function approximation techniques such as deep neural networks for practical applications in complex problems.

Choosing the Right Algorithm for Your AI Project

Selecting between Q-Learning and SARSA hinges on the specific environment dynamics and risk tolerance of your AI project. Q-Learning offers off-policy control that can lead to faster convergence in deterministic settings by learning optimal policies regardless of the agent's actions. SARSA's on-policy approach adapts more cautiously to stochastic environments by learning from the agent's actual behavior, reducing the chance of risky decisions and improving safety in uncertain scenarios.

Q-Learning vs SARSA Infographic

techiny.com

techiny.com