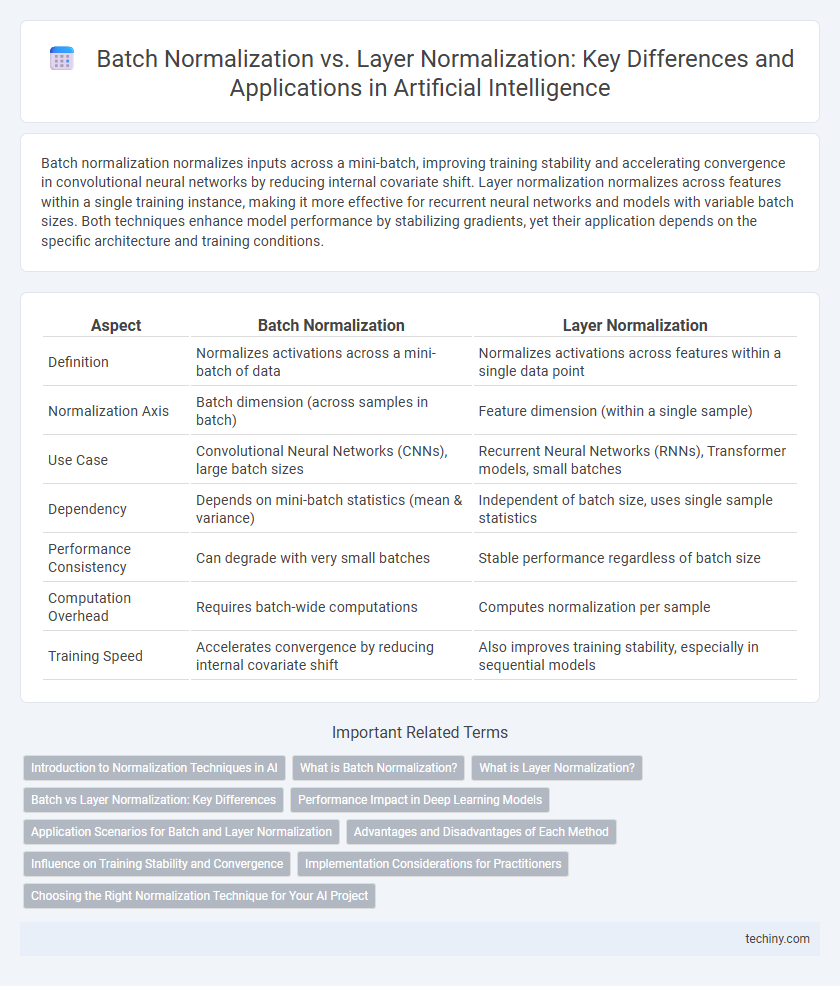

Batch normalization normalizes inputs across a mini-batch, improving training stability and accelerating convergence in convolutional neural networks by reducing internal covariate shift. Layer normalization normalizes across features within a single training instance, making it more effective for recurrent neural networks and models with variable batch sizes. Both techniques enhance model performance by stabilizing gradients, yet their application depends on the specific architecture and training conditions.

Table of Comparison

| Aspect | Batch Normalization | Layer Normalization |

|---|---|---|

| Definition | Normalizes activations across a mini-batch of data | Normalizes activations across features within a single data point |

| Normalization Axis | Batch dimension (across samples in batch) | Feature dimension (within a single sample) |

| Use Case | Convolutional Neural Networks (CNNs), large batch sizes | Recurrent Neural Networks (RNNs), Transformer models, small batches |

| Dependency | Depends on mini-batch statistics (mean & variance) | Independent of batch size, uses single sample statistics |

| Performance Consistency | Can degrade with very small batches | Stable performance regardless of batch size |

| Computation Overhead | Requires batch-wide computations | Computes normalization per sample |

| Training Speed | Accelerates convergence by reducing internal covariate shift | Also improves training stability, especially in sequential models |

Introduction to Normalization Techniques in AI

Batch Normalization and Layer Normalization are key techniques to stabilize and accelerate the training of deep neural networks by normalizing input activations. Batch Normalization normalizes inputs across the batch dimension, making it effective for convolutional neural networks where batch statistics remain consistent. Layer Normalization normalizes across the features within a single data point, proving advantageous in recurrent neural networks and transformers where batch statistics vary significantly.

What is Batch Normalization?

Batch Normalization is a technique used in deep learning to normalize the inputs of each mini-batch, stabilizing and accelerating the training process by reducing internal covariate shift. It works by standardizing the mean and variance of layer inputs across the batch dimension, which helps improve convergence and allows for higher learning rates. Commonly employed in convolutional neural networks (CNNs), Batch Normalization enhances model performance and generalization by maintaining consistent activation distributions during training.

What is Layer Normalization?

Layer Normalization is a technique used in artificial intelligence to normalize the inputs across the features within a single training example, stabilizing the learning process in deep neural networks. Unlike Batch Normalization, which normalizes over a batch of data points, Layer Normalization computes the mean and variance for each individual data sample, making it effective for recurrent neural networks and variable batch sizes. This method reduces internal covariate shift, leading to faster convergence and improved training stability in models such as transformers.

Batch vs Layer Normalization: Key Differences

Batch Normalization normalizes activations across the batch dimension, improving training stability and convergence speed in convolutional neural networks by reducing internal covariate shift. Layer Normalization normalizes across the feature dimension within each individual sample, making it more effective for recurrent neural networks and transformers where batch sizes vary or are small. The key difference lies in Batch Normalization relying on batch statistics, while Layer Normalization uses statistics computed independently for each sample, enabling consistent performance regardless of batch size.

Performance Impact in Deep Learning Models

Batch normalization stabilizes training and accelerates convergence in deep learning models by normalizing layer inputs across a batch, enhancing performance in convolutional neural networks with large mini-batches. Layer normalization normalizes across features within a single sample, improving training stability in recurrent neural networks and transformers, especially when batch sizes are small or variable. Performance impact varies with model architecture and batch size, where batch normalization excels in CNNs with large batches, while layer normalization is preferred for sequence models and transformer-based architectures.

Application Scenarios for Batch and Layer Normalization

Batch Normalization excels in convolutional neural networks and image recognition tasks where large batch sizes enable stable mean and variance estimation, improving training speed and model generalization. Layer Normalization is preferred in recurrent neural networks and natural language processing models, such as transformers, where batch sizes vary or are small, as it normalizes across features for each individual sample, ensuring consistent training dynamics. Selecting the appropriate normalization technique depends on the model architecture and data dependency, with Batch Normalization favoring spatial data and Layer Normalization enhancing sequential data processing.

Advantages and Disadvantages of Each Method

Batch Normalization improves training speed and stability by normalizing activations across a mini-batch, but its performance degrades with small batch sizes or varying batch statistics during inference. Layer Normalization normalizes across the features within a single sample, making it more suitable for recurrent neural networks and small batch scenarios, though it may converge slower than Batch Normalization in convolutional networks. Both methods reduce internal covariate shift, but the choice depends on the architecture and batch size constraints, balancing convergence speed with computational efficiency.

Influence on Training Stability and Convergence

Batch Normalization stabilizes training by normalizing inputs across the mini-batch, reducing internal covariate shift and enabling higher learning rates, which accelerates convergence in deep neural networks. Layer Normalization normalizes across the features within each individual sample, making it more effective in recurrent architectures and stabilizing training where batch sizes are small or variable. Both techniques contribute to improved convergence rates, but Batch Normalization generally offers stronger training stability in convolutional networks, while Layer Normalization excels in sequence models.

Implementation Considerations for Practitioners

Batch Normalization requires maintaining running estimates of batch statistics during training and inference, which can complicate implementation in models with variable batch sizes or sequential data. Layer Normalization, computed independently for each data sample, simplifies deployment in recurrent neural networks and real-time applications by avoiding dependence on batch statistics. Practitioners should consider model architecture and batch size constraints when choosing between these normalization techniques to ensure stability and performance.

Choosing the Right Normalization Technique for Your AI Project

Batch Normalization standardizes inputs across a mini-batch, improving training stability and accelerating convergence, making it ideal for convolutional neural networks with large batch sizes. Layer Normalization normalizes across features within a single data instance, offering consistent performance in recurrent neural networks and transformer architectures where batch sizes vary or are small. Selecting the appropriate normalization technique depends on your model architecture, batch size, and computational constraints for optimal AI project performance.

Batch Normalization vs Layer Normalization Infographic

techiny.com

techiny.com