Dropout and Batch Normalization are essential techniques to improve neural network performance and prevent overfitting. Dropout randomly deactivates neurons during training, forcing the network to learn robust features, while Batch Normalization normalizes layer inputs to stabilize and accelerate training. Combining both methods can enhance generalization and convergence rates in deep learning models.

Table of Comparison

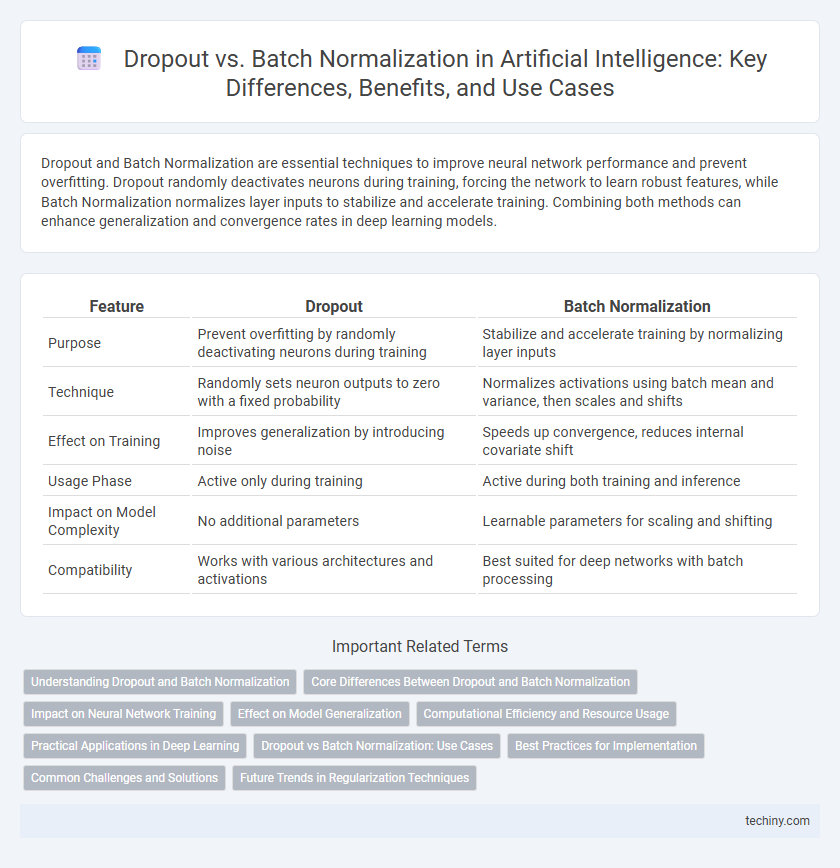

| Feature | Dropout | Batch Normalization |

|---|---|---|

| Purpose | Prevent overfitting by randomly deactivating neurons during training | Stabilize and accelerate training by normalizing layer inputs |

| Technique | Randomly sets neuron outputs to zero with a fixed probability | Normalizes activations using batch mean and variance, then scales and shifts |

| Effect on Training | Improves generalization by introducing noise | Speeds up convergence, reduces internal covariate shift |

| Usage Phase | Active only during training | Active during both training and inference |

| Impact on Model Complexity | No additional parameters | Learnable parameters for scaling and shifting |

| Compatibility | Works with various architectures and activations | Best suited for deep networks with batch processing |

Understanding Dropout and Batch Normalization

Dropout is a regularization technique that randomly deactivates a subset of neurons during training to prevent overfitting and improve model generalization. Batch normalization normalizes the inputs of each layer by adjusting and scaling activations, accelerating training convergence and stabilizing the learning process. Both methods enhance deep neural network performance but serve distinct functions: dropout reduces co-adaptation of neurons, while batch normalization mitigates internal covariate shift.

Core Differences Between Dropout and Batch Normalization

Dropout is a regularization technique that randomly deactivates neurons during training to prevent overfitting, whereas batch normalization normalizes layer inputs to stabilize and accelerate training. Dropout introduces noise to improve model generalization, while batch normalization reduces internal covariate shift by adjusting and scaling activations. Core differences lie in their objectives: dropout focuses on reducing overfitting, and batch normalization aims to improve training efficiency and convergence.

Impact on Neural Network Training

Dropout prevents overfitting by randomly deactivating neurons during training, promoting model generalization and reducing co-adaptation of features. Batch normalization normalizes input layers by adjusting and scaling activations, accelerating convergence and stabilizing gradients across mini-batches. Combining both techniques can improve neural network training by balancing regularization and training speed, enhancing model robustness and performance.

Effect on Model Generalization

Dropout improves model generalization by randomly deactivating neurons during training, which prevents overfitting and promotes robust feature learning. Batch normalization enhances generalization by stabilizing learning dynamics, reducing internal covariate shift, and allowing higher learning rates without destabilizing the model. Both techniques contribute to improved model performance, but dropout primarily targets overfitting, while batch normalization optimizes training efficiency and convergence.

Computational Efficiency and Resource Usage

Dropout reduces overfitting by randomly deactivating neurons during training, which adds minimal computational overhead but can slow convergence. Batch normalization normalizes activations across the mini-batch, requiring additional computations for mean and variance but often accelerates training and improves model generalization. In terms of resource usage, dropout demands less memory as it does not maintain extra parameters, whereas batch normalization uses additional memory for storing batch statistics and learned scaling factors.

Practical Applications in Deep Learning

Dropout reduces overfitting in deep neural networks by randomly deactivating neurons during training, enhancing model generalization in applications like image recognition and natural language processing. Batch normalization stabilizes training by normalizing layer inputs, accelerating convergence and improving performance in large-scale tasks such as speech recognition and autonomous driving. Combining dropout with batch normalization requires careful tuning, as batch normalization already provides regularization by reducing internal covariate shift in architectures like convolutional neural networks.

Dropout vs Batch Normalization: Use Cases

Dropout is primarily used in neural networks to prevent overfitting by randomly deactivating neurons during training, making it effective in smaller datasets and models prone to co-adaptation of neurons. Batch normalization stabilizes and accelerates training by normalizing layer inputs, commonly applied in deep architectures and convolutional networks to improve convergence and generalization. Dropout suits models requiring regularization, while batch normalization is ideal for speeding up training and maintaining model stability across diverse neural network layers.

Best Practices for Implementation

Dropout effectively reduces overfitting by randomly deactivating neurons during training, while Batch Normalization stabilizes learning by normalizing layer inputs, accelerating convergence. Best practices suggest applying Batch Normalization before activation functions and Dropout after, ensuring optimized training dynamics and generalization. Combining Batch Normalization with moderate Dropout rates usually results in improved model robustness and performance across diverse datasets.

Common Challenges and Solutions

Dropout and Batch Normalization both address the challenge of overfitting in deep neural networks by improving model generalization. Dropout randomly deactivates neurons during training to prevent co-adaptation, while Batch Normalization stabilizes learning by normalizing layer inputs, reducing internal covariate shift. Combining these techniques requires careful tuning since Batch Normalization can reduce the need for Dropout, and improper integration may lead to training instability or diminished performance.

Future Trends in Regularization Techniques

Emerging regularization techniques in artificial intelligence integrate adaptive dropout rates with dynamic batch normalization to enhance model generalization and stability. Future trends highlight the fusion of these methods with meta-learning algorithms to automatically optimize regularization parameters based on training data distributions. Advances in neural architecture search increasingly incorporate hybrid regularization strategies, driving improvements in deep learning robustness and efficiency.

Dropout vs Batch normalization Infographic

techiny.com

techiny.com