Model training in artificial intelligence involves feeding large datasets into algorithms to enable machines to learn patterns, whereas model inference applies the trained model to new data for predictions or decision-making. Training requires significant computational resources and time, while inference is optimized for speed and efficiency in real-time applications. Balancing these processes ensures effective deployment of AI systems in various industries.

Table of Comparison

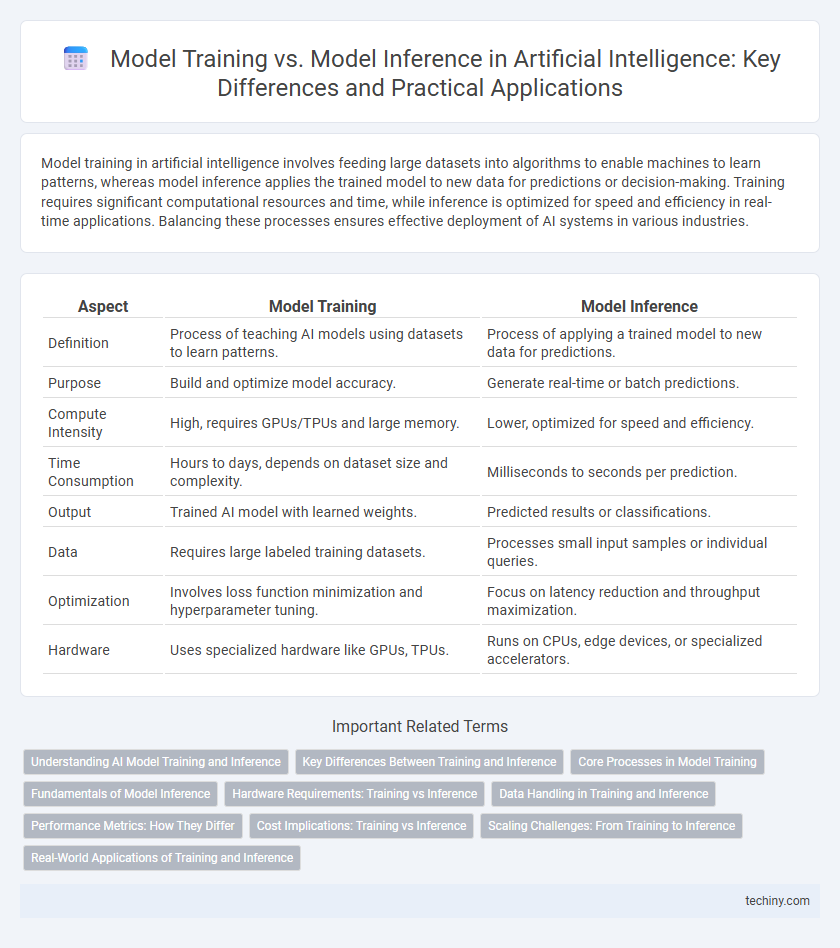

| Aspect | Model Training | Model Inference |

|---|---|---|

| Definition | Process of teaching AI models using datasets to learn patterns. | Process of applying a trained model to new data for predictions. |

| Purpose | Build and optimize model accuracy. | Generate real-time or batch predictions. |

| Compute Intensity | High, requires GPUs/TPUs and large memory. | Lower, optimized for speed and efficiency. |

| Time Consumption | Hours to days, depends on dataset size and complexity. | Milliseconds to seconds per prediction. |

| Output | Trained AI model with learned weights. | Predicted results or classifications. |

| Data | Requires large labeled training datasets. | Processes small input samples or individual queries. |

| Optimization | Involves loss function minimization and hyperparameter tuning. | Focus on latency reduction and throughput maximization. |

| Hardware | Uses specialized hardware like GPUs, TPUs. | Runs on CPUs, edge devices, or specialized accelerators. |

Understanding AI Model Training and Inference

Model training in artificial intelligence involves feeding large datasets into algorithms to enable them to learn patterns and make predictions, requiring significant computational resources and time. Model inference, on the other hand, is the process where a trained AI model applies learned knowledge to new data for real-time predictions or decision-making, emphasizing speed and efficiency. Understanding the distinction between training and inference is crucial for optimizing AI deployment and ensuring scalability across various applications.

Key Differences Between Training and Inference

Model training in artificial intelligence involves processing large datasets to adjust algorithm parameters and optimize accuracy through techniques like backpropagation and gradient descent. Model inference uses the trained model to make predictions or decisions on new, unseen data, focusing on speed and efficiency rather than parameter updates. Key differences include computational resource requirements, where training demands significant GPU power and time, while inference prioritizes low-latency execution suitable for real-time applications.

Core Processes in Model Training

Model training in artificial intelligence involves iterative optimization of model parameters through exposure to labeled datasets, minimizing loss functions to improve predictive accuracy. This process requires significant computational resources for tasks such as gradient calculation, backpropagation, and weight updates within neural networks. Effective training is critical for creating models that generalize well to unseen data during inference, ensuring reliable AI performance.

Fundamentals of Model Inference

Model inference involves using a trained artificial intelligence model to make predictions or decisions based on new data inputs, relying on previously learned patterns and parameters. It requires efficient computation to ensure rapid response times, especially in real-time applications like autonomous driving or voice assistants. The fundamental process converts raw input data through the model's architecture to generate actionable outputs without further altering model parameters.

Hardware Requirements: Training vs Inference

Model training demands high-performance hardware such as GPUs or TPUs with extensive memory and parallel processing capabilities to handle large datasets and complex algorithms. In contrast, model inference requires less computational power, often optimized for CPUs or edge devices to deliver real-time predictions with low latency. Efficient hardware allocation improves overall AI system performance and cost-effectiveness.

Data Handling in Training and Inference

Model training in artificial intelligence requires extensive data preprocessing, augmentation, and batching to optimize learning and improve model accuracy. During inference, data handling is streamlined for rapid input transformation and real-time prediction, emphasizing minimal latency and resource efficiency. Efficient memory management and data pipeline optimization are critical for maintaining performance across both training and inference phases.

Performance Metrics: How They Differ

Model training and model inference differ significantly in performance metrics, with training focusing on accuracy, loss functions, and convergence speed to optimize model parameters. Inference prioritizes latency, throughput, and resource efficiency to ensure real-time or near-real-time predictions. Evaluating these distinct metrics is crucial for balancing robust model development and efficient deployment in AI applications.

Cost Implications: Training vs Inference

Model training in artificial intelligence involves substantial computational resources and energy consumption, leading to higher upfront costs due to the need for powerful GPUs and extended processing time. Inference, on the other hand, typically requires significantly less computational power, resulting in lower operational expenses when deploying models in production environments. Understanding the cost disparity between training and inference is critical for optimizing AI budgets and scaling AI applications efficiently.

Scaling Challenges: From Training to Inference

Scaling challenges in AI manifest differently between model training and inference phases. Training demands extensive computational resources and memory bandwidth to process vast datasets and update billions of parameters efficiently, often relying on distributed systems and specialized hardware like GPUs or TPUs. Inference prioritizes low latency and high throughput to serve real-time predictions, necessitating optimized model compression, quantization techniques, and scalable deployment architectures to handle massive user requests without compromising accuracy.

Real-World Applications of Training and Inference

Model training involves feeding large datasets into algorithms to enable machines to learn patterns, essential for developing accurate predictive models in applications like autonomous driving and medical diagnosis. Model inference applies the trained model to new data in real-time, powering functionalities such as voice recognition in virtual assistants and fraud detection in financial transactions. Balancing computational resources between intensive training phases and efficient inference processes is critical for deploying scalable AI solutions across industries.

Model Training vs Model Inference Infographic

techiny.com

techiny.com