Early stopping prevents overfitting by halting training once the model's performance on a validation set stops improving, so the model is kept near the point where it generalizes best. Dropout, on the other hand, randomly deactivates neurons during training to reduce co-adaptation and improve model robustness. Both techniques improve generalization but work through distinct mechanisms: early stopping monitors training progress, while dropout injects noise into the network.

Table of Comparison

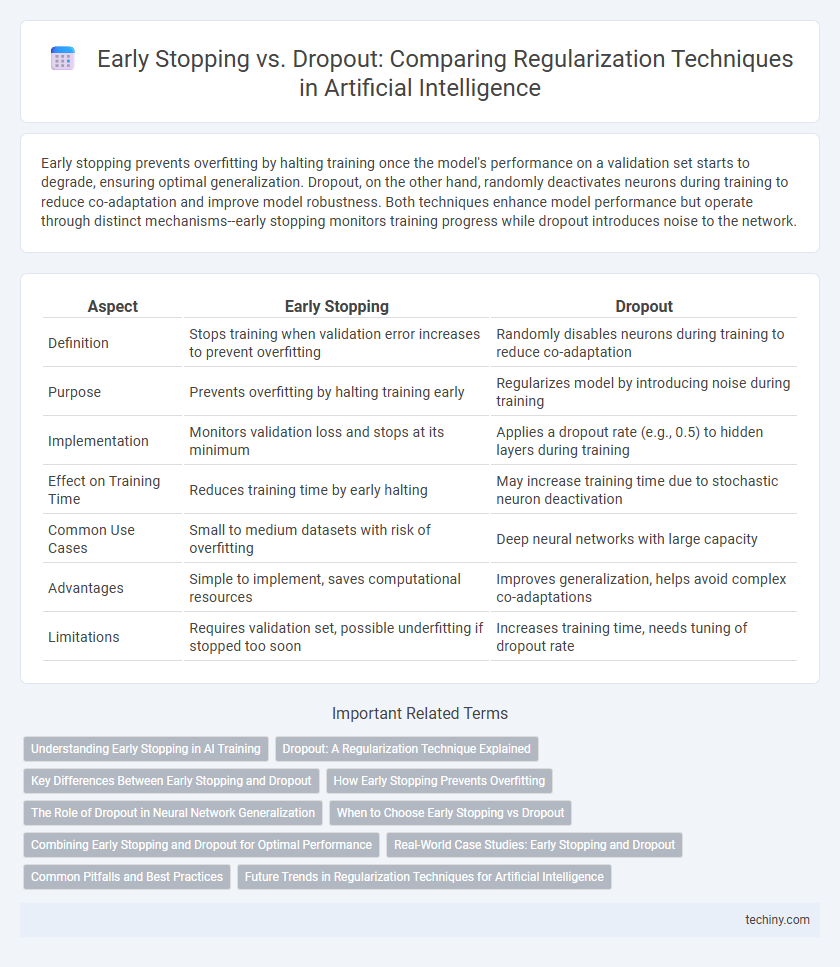

| Aspect | Early Stopping | Dropout |
|---|---|---|
| Definition | Stops training when validation error stops improving, to prevent overfitting | Randomly disables neurons during training to reduce co-adaptation |
| Purpose | Prevents overfitting by halting training early | Regularizes the model by injecting noise during training |
| Implementation | Monitors validation loss each epoch, stops after a set number of epochs without improvement (the patience), and keeps the weights from the minimum | Applies a dropout rate (e.g., 0.5) to hidden layers during training; disabled at inference |
| Effect on Training Time | Reduces training time by halting early | May increase training time, because the noisy updates slow convergence |
| Common Use Cases | Small to medium datasets with a risk of overfitting | Deep neural networks with large capacity |
| Advantages | Simple to implement; saves computational resources | Improves generalization; discourages complex co-adaptations |
| Limitations | Requires a validation set; may underfit if stopped too soon | Increases training time; the dropout rate needs tuning |

Understanding Early Stopping in AI Training

Early stopping is a regularization technique that halts training once the model's performance on a validation set starts to degrade, preventing overfitting. It evaluates the validation loss after each epoch and stops training when no improvement has been seen for a specified number of epochs, called the patience. Implementations usually keep (or restore) the weights from the epoch with the lowest validation loss, so the final model reflects the point of best generalization rather than the later epochs spent fitting noise in the training data.
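The patience logic described above can be sketched in plain Python. This is a minimal illustration, not any framework's API: the class name `EarlyStopper`, its `step` method, and the optional `min_delta` threshold are assumptions made for the example, and the validation losses are made-up numbers. Libraries ship their own versions; Keras, for instance, provides an `EarlyStopping` callback with `patience`, `min_delta` and `restore_best_weights` arguments.

```python
import math


class EarlyStopper:
    """Hypothetical helper: tracks validation loss and signals when to stop.

    patience:  consecutive epochs without improvement to tolerate.
    min_delta: smallest decrease that counts as an improvement (assumed knob).
    """

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = math.inf
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # New best: a real training loop would also checkpoint the weights here.
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Hypothetical validation losses: improving at first, then rising as the model overfits.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]

stopper = EarlyStopper(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        print(f"stopped at epoch {epoch}; best epoch {stopper.best_epoch} "
              f"(val loss {stopper.best_loss:.2f})")
        break
# prints: stopped at epoch 6; best epoch 3 (val loss 0.50)
```

Training stops three epochs after the minimum at epoch 3, and the weights saved at that epoch are the ones that would be kept.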

Dropout: A Regularization Technique Explained

Dropout is a regularization technique that mitigates overfitting in neural networks by randomly deactivating a subset of neurons at each training iteration, which forces the model to learn redundant representations instead of relying on any single unit. This stochastic process reduces co-adaptation of neurons and improves generalization to unseen data. At inference time dropout is switched off and the full network is used, with activations scaled so that their expected magnitude matches training. Dropout rates (the probability of dropping a unit) typically fall between 0.2 and 0.5 and should be tuned: too low a rate gives little regularization, while too high a rate discards so much information that training slows or underfits.
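The masking step can be sketched with NumPy using the common "inverted dropout" formulation, in which kept activations are scaled by 1/(1 - rate) during training so that nothing needs rescaling at inference. The function name `dropout` and its arguments are illustrative assumptions, not a library API, and the input activations are hypothetical.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout (illustrative helper, not a library API).

    During training each unit is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate), so the expected activation is
    unchanged. At inference (training=False) the input is returned as-is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob  # True where the unit is kept this step
    return x * mask / keep_prob


rng = np.random.default_rng(0)
activations = np.ones((4, 8))  # hypothetical hidden-layer outputs

train_out = dropout(activations, rate=0.5, training=True, rng=rng)
eval_out = dropout(activations, rate=0.5, training=False, rng=rng)

print(train_out[0])  # each entry is 0.0 (dropped) or 2.0 (kept, scaled by 1/0.5)
print(eval_out[0])   # all 1.0: dropout is disabled at inference

# Averaged over many units, the training-time output matches the inference output.
print(dropout(np.ones((1000, 1000)), 0.5, True, rng).mean())  # close to 1.0
```

Scaling during training rather than at test time is the convention PyTorch's `nn.Dropout` and Keras's `Dropout` layer follow, which is why the inference path above is simply the identity.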

Key Differences Between Early Stopping and Dropout

Early stopping monitors model performance on a validation set to halt training before overfitting sets in, while dropout randomly deactivates neurons during training to prevent co-adaptation and improve generalization. Early stopping addresses overfitting by controlling how long training runs, whereas dropout acts inside every training iteration. Both methods make models more robust but differ fundamentally in approach: early stopping shortens the training timeline, and dropout trains a different, randomly thinned subnetwork at each step.

How Early Stopping Prevents Overfitting

Early stopping prevents overfitting by monitoring the model's performance on a validation set and halting training once the validation error starts to increase, which signals the onset of overfitting. By stopping before the model adapts to noise in the training data, it keeps the model close to its best generalization point. Unlike dropout, which injects randomness by temporarily deactivating neurons, early stopping controls training duration directly, based on validation metrics.

The Role of Dropout in Neural Network Generalization

Dropout improves neural network generalization by randomly deactivating neurons during training, which forces the model to develop redundant, robust feature representations. This reduces co-adaptation of neurons, and with it overfitting, leading to better performance on unseen data. Because each step trains a different thinned subnetwork, dropout can also be viewed as training a large ensemble of weight-sharing networks, whose average prediction the full network approximates at test time. Unlike early stopping, which only limits how long training runs, dropout changes what each update learns.

When to Choose Early Stopping vs Dropout

Early stopping is a good fit when training time is limited or when a small dataset puts the model at risk of overfitting, since it halts training once validation performance deteriorates. Dropout is preferable for large, high-capacity neural networks, where injecting noise during training prevents co-adaptation of neurons and improves generalization. Early stopping suits workflows that need fast iteration cycles, while dropout provides robust regularization over longer training runs.

Combining Early Stopping and Dropout for Optimal Performance

Combining early stopping and dropout is a common way to improve neural network generalization. Dropout regularizes every training step by randomly deactivating neurons to prevent co-adaptation, while early stopping monitors validation loss and halts training before the model overfits. Because dropout usually slows convergence, the early-stopping patience may need to be larger than it would be for the same network without dropout. Used together, the two often improve accuracy and robustness on unseen data.
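A minimal NumPy sketch of the combination: a one-hidden-layer regression network trained with full-batch gradient descent, inverted dropout on the hidden layer during training only, and an early-stopping check on a validation set after every epoch that checkpoints and finally restores the best weights. The toy sine-wave data, layer size, learning rate, dropout rate and patience are all hypothetical choices for illustration, not tuned or recommended values; a real project would normally use a framework's built-in dropout layer and early-stopping utility.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical toy data: a small noisy training set, so overfitting is possible.
x_train = rng.uniform(-1, 1, size=(40, 1))
y_train = np.sin(3 * x_train) + 0.1 * rng.normal(size=x_train.shape)
x_val = rng.uniform(-1, 1, size=(200, 1))
y_val = np.sin(3 * x_val) + 0.1 * rng.normal(size=x_val.shape)

HIDDEN, RATE, LR, PATIENCE, MAX_EPOCHS = 64, 0.2, 0.01, 50, 5000
params = {
    "W1": rng.normal(0.0, 1.0, size=(1, HIDDEN)),
    "b1": rng.uniform(-1.0, 1.0, size=HIDDEN),
    "W2": rng.normal(0.0, 1.0 / np.sqrt(HIDDEN), size=(HIDDEN, 1)),
    "b2": np.zeros(1),
}


def forward(p, x, training):
    """Return predictions plus the cached values needed for backprop."""
    a1 = np.maximum(0.0, x @ p["W1"] + p["b1"])  # ReLU hidden layer
    if training:
        keep = 1.0 - RATE
        mask = (rng.random(a1.shape) < keep) / keep  # inverted dropout, training only
    else:
        mask = np.ones_like(a1)  # dropout disabled for evaluation
    h = a1 * mask
    return h @ p["W2"] + p["b2"], a1, mask


def mse(pred, y):
    return float(np.mean((pred - y) ** 2))


best_val, best_epoch, best_params, wait, history = np.inf, -1, None, 0, []
for epoch in range(MAX_EPOCHS):
    # 1) One gradient step with dropout active (full-batch gradient descent on MSE).
    pred, a1, mask = forward(params, x_train, training=True)
    g_out = 2.0 * (pred - y_train) / len(x_train)  # dMSE/dpred
    h = a1 * mask
    g_a1 = (g_out @ params["W2"].T) * mask  # back through dropout
    g_z1 = g_a1 * (a1 > 0)                  # back through ReLU
    grads = {
        "W2": h.T @ g_out, "b2": g_out.sum(axis=0),
        "W1": x_train.T @ g_z1, "b1": g_z1.sum(axis=0),
    }
    for name in params:
        params[name] -= LR * grads[name]

    # 2) Early-stopping check on the validation set, with dropout off.
    val_loss = mse(forward(params, x_val, training=False)[0], y_val)
    history.append(val_loss)
    if val_loss < best_val:
        best_val, best_epoch, wait = val_loss, epoch, 0
        best_params = {k: v.copy() for k, v in params.items()}  # checkpoint
    else:
        wait += 1
        if wait >= PATIENCE:
            break

params = best_params  # restore the weights from the best validation epoch
print(f"ran {len(history)} epochs, best epoch {best_epoch}, best val MSE {best_val:.4f}")
```

The validation loss is computed with dropout disabled, so the early-stopping signal reflects the deterministic network that would be deployed rather than the noisy training-time subnetworks.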

Real-World Case Studies: Early Stopping and Dropout

Early stopping and dropout are widely used regularization techniques for preventing overfitting during neural network training. On image recognition benchmarks such as CIFAR-10, early stopping is commonly used to save computation by halting training once validation performance plateaus. Dropout is likewise common in natural language processing models, for example sentiment classifiers trained on the IMDB movie-review dataset, where it helps the model generalize.

Common Pitfalls and Best Practices

Early stopping has two common pitfalls: halting prematurely, which leads to underfitting, and relying on a validation set that may not represent real-world data. For dropout, a poorly chosen rate either regularizes too little (rate too low) or discards too much information (rate too high), which hinders convergence. Best practice is to tune the early-stopping patience carefully and to apply dropout selectively, to the layers where it improves validation performance.
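To see how the patience setting interacts with premature halting, the sketch below replays one hypothetical validation curve (made-up numbers containing a temporary bump) under different patience values. The helper `stopping_epoch` is illustrative, not a library function.

```python
def stopping_epoch(val_losses, patience):
    """Return (epoch at which training halts, best epoch seen) for a given patience."""
    best, best_epoch, wait = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch  # never triggered: every epoch was run


# Hypothetical noisy validation curve: a temporary bump at epochs 3-4 before further gains.
curve = [1.00, 0.80, 0.70, 0.72, 0.71, 0.60, 0.55, 0.54, 0.56, 0.58, 0.61]

for patience in (1, 2, 3):
    print(patience, stopping_epoch(curve, patience))
# 1 (3, 2)
# 2 (4, 2)
# 3 (10, 7)
```

With a patience of 1 or 2, training halts inside the bump and keeps the epoch-2 weights (loss 0.70); a patience of 3 rides out the bump and finds the better minimum at epoch 7 (loss 0.54).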

Future Trends in Regularization Techniques for Artificial Intelligence

Future trends in regularization techniques for artificial intelligence emphasize adaptive methods that combine early stopping and dropout to tune model generalization dynamically. Meta-learning approaches aim to let training systems adjust regularization strength automatically, based on training progress and data complexity. Advances in explainable AI are also driving regularization strategies that improve model interpretability while preventing overfitting.

Early Stopping vs Dropout Infographic

techiny.com
