Strong supervision in artificial intelligence relies on fully labeled datasets, enabling models to learn precise patterns from clear, unambiguous examples. Weak supervision leverages limited or noisy labels, combining diverse imperfect sources to generate training data and improve model performance despite sparse annotations. Balancing the use of strong and weak supervision techniques enhances AI systems by optimizing data efficiency and reducing the cost of manual labeling.

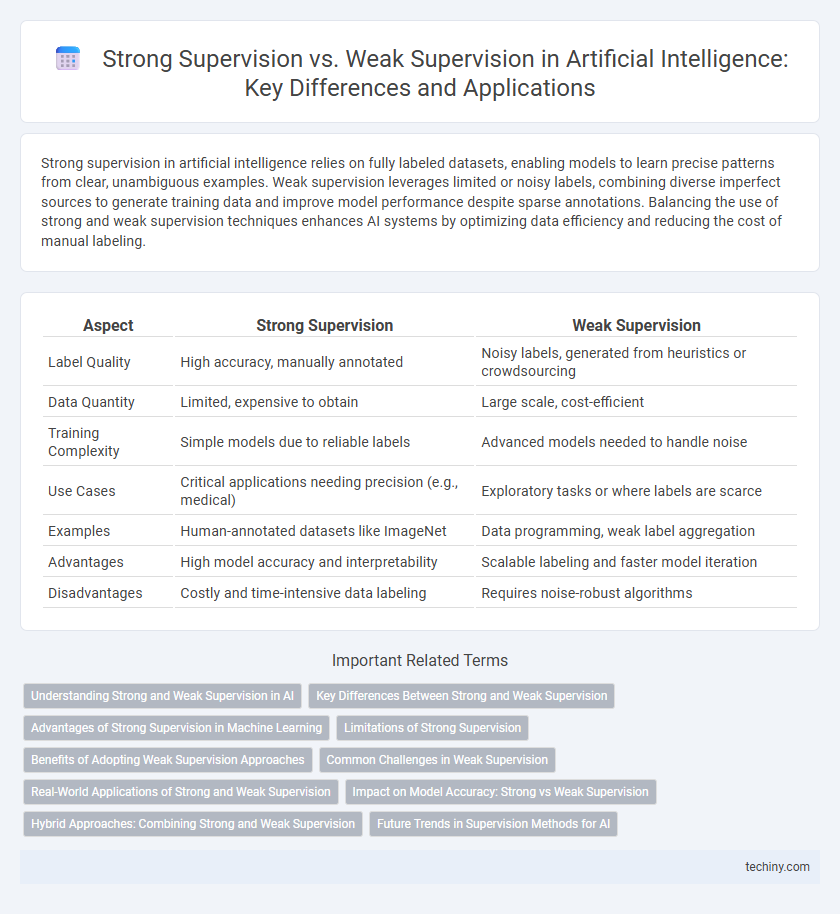

Table of Comparison

| Aspect | Strong Supervision | Weak Supervision |

|---|---|---|

| Label Quality | High accuracy, manually annotated | Noisy labels, generated from heuristics or crowdsourcing |

| Data Quantity | Limited, expensive to obtain | Large scale, cost-efficient |

| Training Complexity | Simple models due to reliable labels | Advanced models needed to handle noise |

| Use Cases | Critical applications needing precision (e.g., medical) | Exploratory tasks or where labels are scarce |

| Examples | Human-annotated datasets like ImageNet | Data programming, weak label aggregation |

| Advantages | High model accuracy and interpretability | Scalable labeling and faster model iteration |

| Disadvantages | Costly and time-intensive data labeling | Requires noise-robust algorithms |

Understanding Strong and Weak Supervision in AI

Strong supervision in AI relies on large datasets with accurately labeled examples, enabling models to learn precise input-output mappings and achieve high predictive performance. Weak supervision uses noisy, limited, or imprecise labels from heuristic rules, crowdsourced annotations, or incomplete data, allowing the training of models even when fully annotated datasets are unavailable. Understanding the trade-offs between strong and weak supervision is crucial for selecting appropriate learning strategies, especially in applications where data labeling is costly or scarce.

Key Differences Between Strong and Weak Supervision

Strong supervision relies on fully labeled datasets where each data point is accurately annotated, enabling precise model training and higher accuracy. Weak supervision uses partially labeled, noisy, or imprecise labels generated from heuristics, rules, or external sources, offering scalability with less manual effort but often resulting in lower model performance. The key differences lie in the quality and completeness of the labeled data, the trade-off between labeling cost and accuracy, and applicability to complex real-world problems.

Advantages of Strong Supervision in Machine Learning

Strong supervision in machine learning leverages accurately labeled data, leading to higher model precision and reliable performance across diverse tasks such as image recognition and natural language processing. This approach facilitates faster convergence during training by providing clear, unambiguous feedback, enhancing the model's ability to generalize effectively. Strongly supervised models demonstrate robustness in complex environments, reducing errors and improving predictive capabilities compared to weakly supervised counterparts.

Limitations of Strong Supervision

Strong supervision in artificial intelligence demands large volumes of accurately labeled data, which is often expensive and time-consuming to obtain. This dependency limits scalability and adaptability across diverse domains where annotated datasets are scarce or unavailable. Consequently, strong supervision struggles with real-world applications requiring rapid learning from limited or noisy data.

Benefits of Adopting Weak Supervision Approaches

Weak supervision significantly reduces the reliance on large labeled datasets by utilizing noisy, imprecise, or incomplete sources, accelerating the training process for artificial intelligence models. This approach enables scalable data annotation through automation or heuristic methods, lowering costs and expanding dataset diversity. Consequently, weak supervision enhances model generalization and adaptability across varied domains where labeled data is scarce or expensive to obtain.

Common Challenges in Weak Supervision

Weak supervision faces key challenges including noisy and imprecise labels that reduce model accuracy and reliability. Incomplete or inconsistent annotation sources often lead to ambiguous training data, complicating the learning process. Addressing these limitations requires advanced techniques like noise-aware algorithms and data programming to enhance label quality and model performance.

Real-World Applications of Strong and Weak Supervision

Strong supervision utilizes fully labeled datasets, enabling AI models to achieve high accuracy in applications such as medical imaging diagnostics and autonomous vehicle navigation. Weak supervision leverages noisy, incomplete, or imprecise labels from diverse sources, effectively scaling training for large-scale natural language processing tasks and sentiment analysis in social media. Real-world deployments often balance these methods to optimize performance while minimizing manual labeling costs.

Impact on Model Accuracy: Strong vs Weak Supervision

Strong supervision provides high-quality, explicitly labeled datasets that significantly enhance model accuracy by allowing precise error correction and feature learning. Weak supervision employs noisy, incomplete, or imprecise labels, resulting in lower accuracy but enabling scalable data annotation across large datasets. The trade-off between strong and weak supervision centers on balancing label quality with data volume to optimize overall model performance.

Hybrid Approaches: Combining Strong and Weak Supervision

Hybrid approaches in artificial intelligence leverage both strong supervision, characterized by high-quality labeled data, and weak supervision, which utilizes noisier or incomplete labels to enhance model training efficiency. By integrating these methods, models achieve improved accuracy and generalization while reducing reliance on costly manual annotation. Techniques such as co-training, multi-view learning, and semi-supervised methods exemplify the practical application of merging strong and weak supervision to optimize performance in complex AI tasks.

Future Trends in Supervision Methods for AI

Future trends in AI supervision methods emphasize hybrid approaches combining strong supervision's precision with weak supervision's scalability. Advances in semi-supervised learning, self-training, and data programming enable models to leverage limited labeled data alongside massive unlabeled datasets efficiently. Enhanced AI explainability and active learning frameworks will further refine supervision quality, driving more robust and adaptable intelligent systems.

Strong Supervision vs Weak Supervision Infographic

techiny.com

techiny.com