Markov Decision Processes (MDPs) assume full observability of the environment's state, enabling precise decision-making based on known probabilities and rewards. In contrast, Partially Observable Markov Decision Processes (POMDPs) address uncertainty by incorporating incomplete or noisy observations, requiring agents to maintain belief states that estimate the underlying true state. This distinction makes POMDPs more suitable for real-world applications where the agent lacks complete information, demanding advanced algorithms to optimize policies under uncertainty.

Table of Comparison

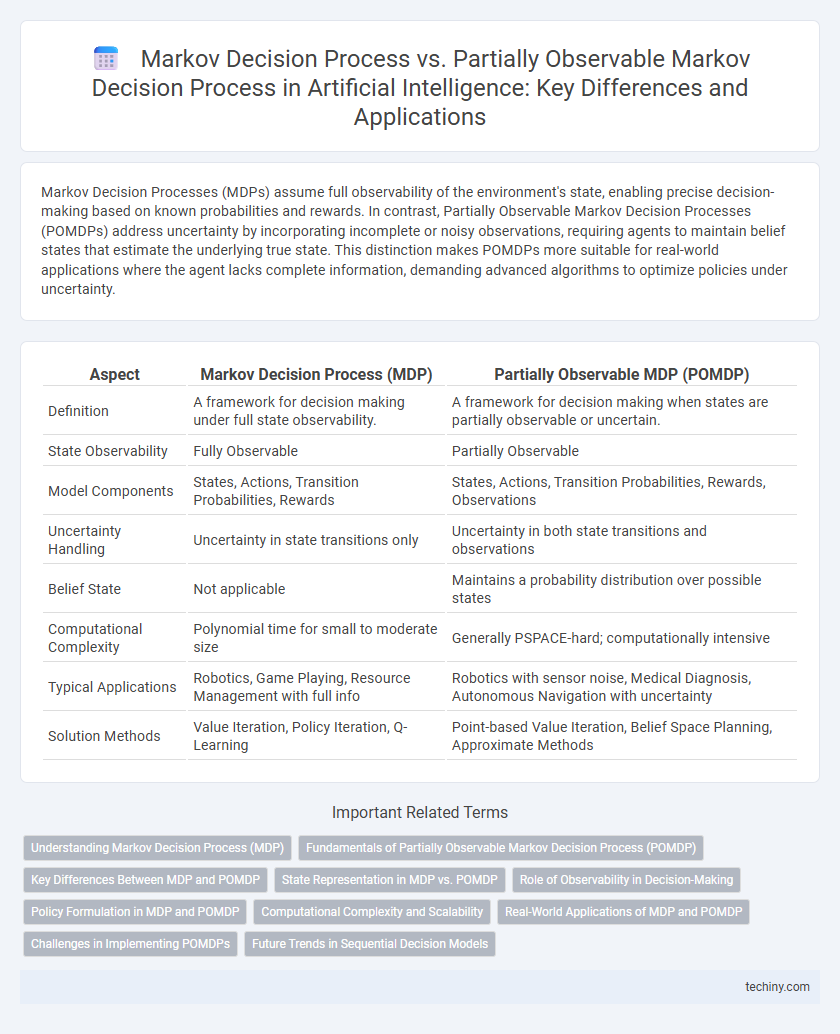

| Aspect | Markov Decision Process (MDP) | Partially Observable MDP (POMDP) |

|---|---|---|

| Definition | A framework for decision making under full state observability. | A framework for decision making when states are partially observable or uncertain. |

| State Observability | Fully Observable | Partially Observable |

| Model Components | States, Actions, Transition Probabilities, Rewards | States, Actions, Transition Probabilities, Rewards, Observations |

| Uncertainty Handling | Uncertainty in state transitions only | Uncertainty in both state transitions and observations |

| Belief State | Not applicable | Maintains a probability distribution over possible states |

| Computational Complexity | Polynomial time for small to moderate size | Generally PSPACE-hard; computationally intensive |

| Typical Applications | Robotics, Game Playing, Resource Management with full info | Robotics with sensor noise, Medical Diagnosis, Autonomous Navigation with uncertainty |

| Solution Methods | Value Iteration, Policy Iteration, Q-Learning | Point-based Value Iteration, Belief Space Planning, Approximate Methods |

Understanding Markov Decision Process (MDP)

Markov Decision Process (MDP) is a mathematical framework used to model decision-making in environments with fully observable states, where an agent chooses actions to maximize long-term rewards. It consists of a set of states, actions, transition probabilities, and reward functions that define the environment's dynamics and outcomes. Compared to Partially Observable Markov Decision Process (POMDP), MDP assumes complete knowledge of the current state, simplifying computation and policy optimization.

Fundamentals of Partially Observable Markov Decision Process (POMDP)

Partially Observable Markov Decision Processes (POMDPs) extend Markov Decision Processes (MDPs) by addressing uncertainty in state information, where the true state is not fully observable. POMDP fundamentals involve maintaining a belief state, a probability distribution over all possible states, to make optimal decisions despite incomplete or noisy observations. This framework enables intelligent agents to plan and act effectively in environments characterized by partial observability and stochastic dynamics.

Key Differences Between MDP and POMDP

Markov Decision Processes (MDPs) assume full observability of the environment's state at each decision step, enabling straightforward state-action optimization. Partially Observable Markov Decision Processes (POMDPs) address uncertainty by modeling scenarios where the agent receives only incomplete or noisy observations, requiring belief state estimation for effective policy development. The key difference lies in the observability assumption: MDPs operate on known states, while POMDPs incorporate partial and uncertain state information, significantly increasing computational complexity and algorithmic requirements.

State Representation in MDP vs. POMDP

Markov Decision Processes (MDPs) represent states with full observability, where the agent has complete knowledge of the environment's current state, enabling precise decision-making. In contrast, Partially Observable Markov Decision Processes (POMDPs) incorporate uncertainty by representing states as probability distributions over possible real states, reflecting partial or noisy observations. This fundamental difference in state representation allows POMDPs to model complex environments with incomplete information, improving decision policies under uncertainty.

Role of Observability in Decision-Making

Markov Decision Process (MDP) assumes full observability of the environment's state, enabling optimal decision-making based on complete information. In contrast, Partially Observable Markov Decision Process (POMDP) addresses scenarios where the agent has limited or noisy observations, requiring the use of belief states to represent uncertainty. The role of observability is crucial, as it directly influences the complexity and strategies of policy formulation in AI decision-making frameworks.

Policy Formulation in MDP and POMDP

Markov Decision Process (MDP) policy formulation involves determining an optimal policy based on fully observable states, leveraging transition probabilities and rewards to maximize expected cumulative returns. In contrast, Partially Observable Markov Decision Process (POMDP) policy formulation requires maintaining a belief state, a probability distribution over possible states, to address uncertainty in state observation while optimizing decision-making. The complexity of POMDP policies arises from balancing exploration and exploitation under incomplete information, typically solved using value iteration or policy search in the belief space.

Computational Complexity and Scalability

Markov Decision Processes (MDPs) typically offer lower computational complexity due to their fully observable state space, enabling scalable solutions with dynamic programming methods like value iteration and policy iteration. Partially Observable Markov Decision Processes (POMDPs) introduce significant computational challenges as the agent must maintain and update a belief state over a continuous probability distribution, leading to exponential growth in complexity with the size of the state and observation spaces. Consequently, scalability in POMDPs is often limited, requiring approximation algorithms and heuristics to manage the intractable computational demands inherent in real-world applications.

Real-World Applications of MDP and POMDP

Markov Decision Processes (MDPs) are widely utilized in robotics and automated control systems where full state observability is achievable, enabling efficient decision-making in known environments such as inventory management and autonomous navigation. Partially Observable Markov Decision Processes (POMDPs) excel in real-world scenarios with incomplete or uncertain information, including medical diagnosis systems, spoken dialogue systems, and intelligent tutoring where the agent must infer the hidden state from noisy observations. Industries like healthcare, finance, and autonomous vehicles increasingly rely on POMDP frameworks to model uncertainty and optimize sequential decisions under partial observability.

Challenges in Implementing POMDPs

Implementing Partially Observable Markov Decision Processes (POMDPs) presents significant challenges due to the complexity of maintaining and updating belief states from incomplete and noisy observations, which exponentially increases computational demands compared to Markov Decision Processes (MDPs). The requirement for sophisticated approximation algorithms to solve high-dimensional belief spaces hinders real-time decision-making in large-scale applications. Scalability and ensuring robust policy performance under uncertainty remain critical obstacles in deploying POMDP frameworks across diverse artificial intelligence domains.

Future Trends in Sequential Decision Models

Future trends in sequential decision models emphasize the integration of Markov Decision Processes (MDPs) and Partially Observable Markov Decision Processes (POMDPs) with deep reinforcement learning to enhance scalability and adaptability in complex environments. Advances in approximate inference and belief state representation are driving improvements in POMDP solutions, enabling better handling of uncertainty and partial observability. The development of hierarchical and meta-learning frameworks promises more efficient decision-making over long horizons in uncertain and dynamic real-world scenarios.

Markov Decision Process vs Partially Observable Markov Decision Process Infographic

techiny.com

techiny.com