Fine-tuning refines a pre-trained AI model on specific datasets to improve performance on targeted tasks, while pre-training involves training a model on large, general datasets to develop broad knowledge and patterns. Pre-training establishes foundational language understanding and representations, making fine-tuning more efficient and effective by adapting these capabilities to specialized applications. This distinction optimizes AI development by balancing broad learning with task-specific customization.

Table of Comparison

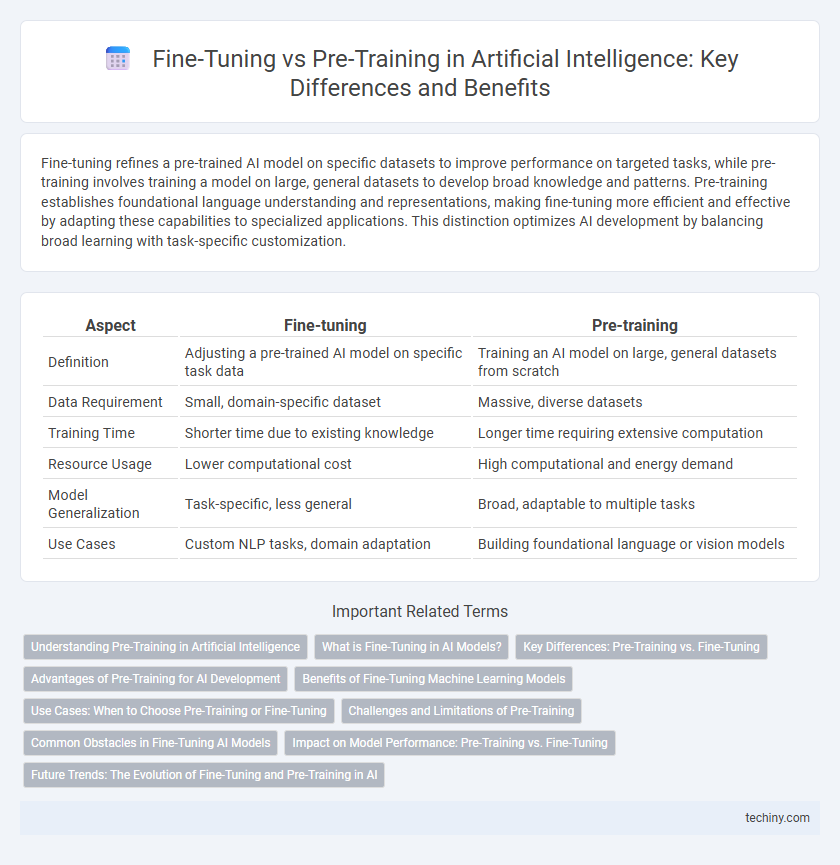

| Aspect | Fine-tuning | Pre-training |

|---|---|---|

| Definition | Adjusting a pre-trained AI model on specific task data | Training an AI model on large, general datasets from scratch |

| Data Requirement | Small, domain-specific dataset | Massive, diverse datasets |

| Training Time | Shorter time due to existing knowledge | Longer time requiring extensive computation |

| Resource Usage | Lower computational cost | High computational and energy demand |

| Model Generalization | Task-specific, less general | Broad, adaptable to multiple tasks |

| Use Cases | Custom NLP tasks, domain adaptation | Building foundational language or vision models |

Understanding Pre-Training in Artificial Intelligence

Pre-training in artificial intelligence involves training a model on a large, diverse dataset to learn general features and representations before applying it to specific tasks. This phase enables the model to acquire foundational knowledge, such as language patterns or image textures, which can be transferred across various applications. Understanding pre-training allows researchers to optimize models for better accuracy and efficiency when fine-tuning on domain-specific datasets.

What is Fine-Tuning in AI Models?

Fine-tuning in AI models involves adjusting a pre-trained neural network on a smaller, domain-specific dataset to improve performance on targeted tasks. This process refines the model's weights based on new data, enabling it to adapt to specialized applications without training from scratch. Fine-tuning enhances accuracy and efficiency by leveraging learned features from large-scale pre-training while addressing unique contextual requirements.

Key Differences: Pre-Training vs. Fine-Tuning

Pre-training involves training an AI model on a large, general dataset to learn broad features and patterns, establishing foundational knowledge. Fine-tuning adjusts this pre-trained model on a smaller, task-specific dataset to optimize performance for particular applications. Key differences include the scope of data used, training duration, and specificity of the resulting model's capabilities.

Advantages of Pre-Training for AI Development

Pre-training in artificial intelligence leverages vast datasets to develop generalized knowledge representations, enabling models to understand diverse linguistic and contextual patterns effectively. This approach significantly reduces the amount of labeled data required for downstream tasks, cutting costs and accelerating development timelines. Pre-trained models enhance performance across various AI applications by providing a robust foundation that fine-tuning can further specialize with minimal effort.

Benefits of Fine-Tuning Machine Learning Models

Fine-tuning machine learning models enhances performance by adapting pre-trained models to specific tasks or domains, significantly improving accuracy and efficiency. It reduces the need for large labeled datasets and computational resources compared to training from scratch. Fine-tuning also enables faster deployment and better generalization in real-world applications by leveraging previous knowledge embedded in the base model.

Use Cases: When to Choose Pre-Training or Fine-Tuning

Pre-training is ideal for developing broad language models capable of understanding diverse contexts, making it suitable for applications requiring general knowledge like chatbots or search engines. Fine-tuning excels in specialized use cases where domain-specific accuracy is critical, such as medical diagnosis or legal document analysis. Selecting between pre-training and fine-tuning depends on the availability of labeled data, computational resources, and the specificity of the task at hand.

Challenges and Limitations of Pre-Training

Pre-training large language models requires vast amounts of computational resources and extensive labeled or unlabeled datasets, which can be cost-prohibitive and environmentally taxing. The static nature of pre-trained models often leads to challenges in adapting to specific downstream tasks without fine-tuning, resulting in suboptimal performance. Moreover, pre-training can embed biases present in the training data, perpetuating fairness and ethical concerns in AI applications.

Common Obstacles in Fine-Tuning AI Models

Fine-tuning AI models often faces common obstacles such as overfitting, which occurs when models adapt too closely to the fine-tuning dataset, reducing generalization to new data. Limited labeled data poses a significant challenge, hindering the model's ability to learn effectively during fine-tuning. Computational resource constraints and the risk of catastrophic forgetting, where the model loses previously learned knowledge, also complicate the fine-tuning process.

Impact on Model Performance: Pre-Training vs. Fine-Tuning

Pre-training establishes foundational knowledge by exposing models to vast datasets, enabling them to learn general language patterns and representations critical for diverse tasks. Fine-tuning refines these pre-trained models on task-specific data, significantly enhancing performance by adapting to specialized contexts and objectives. The combined approach leverages broad knowledge while optimizing accuracy, resulting in superior model effectiveness compared to relying solely on either method.

Future Trends: The Evolution of Fine-Tuning and Pre-Training in AI

Fine-tuning and pre-training are set to evolve with advancements in AI models by incorporating more efficient transfer learning techniques and adaptive algorithms that reduce data and computational resource requirements. Emerging trends highlight the integration of unsupervised and self-supervised learning during pre-training to generate more generalized representations, while fine-tuning becomes increasingly domain-specific and automated through meta-learning frameworks. Research is focusing on scalable multi-task fine-tuning and continual learning approaches to facilitate real-time model adaptation in dynamic environments across diverse applications.

Fine-tuning vs pre-training Infographic

techiny.com

techiny.com