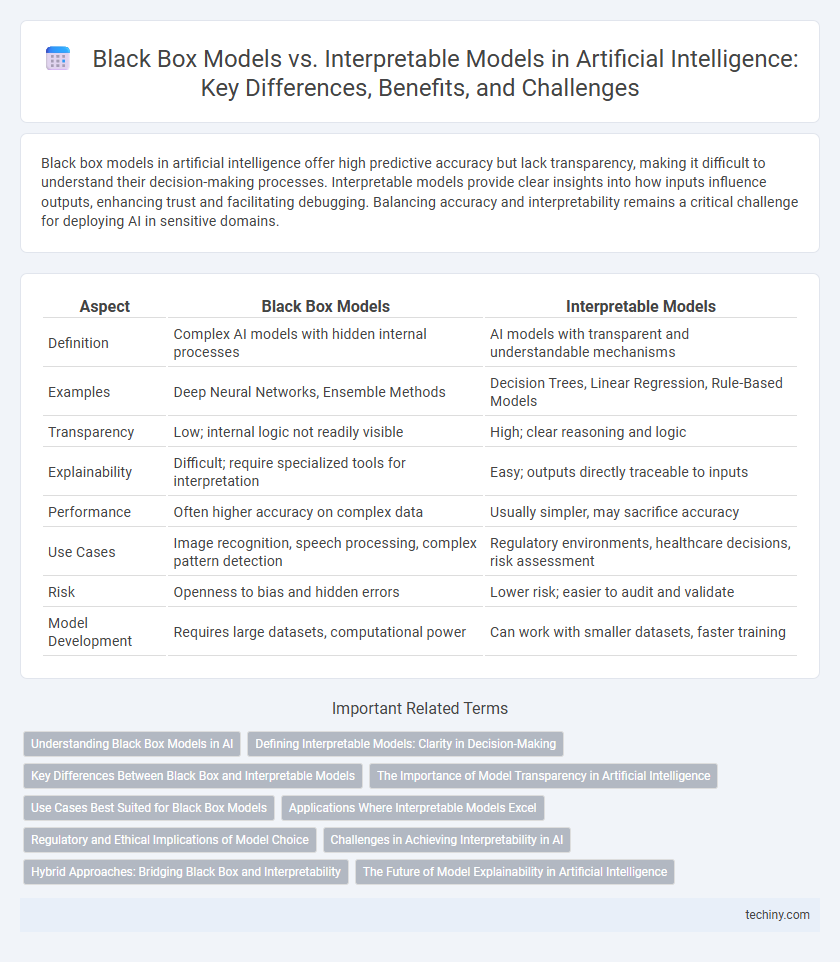

Black box models in artificial intelligence often achieve high predictive accuracy but lack transparency, making their decision-making processes difficult to understand. Interpretable models show clearly how inputs influence outputs, which builds trust and simplifies debugging. Balancing accuracy and interpretability remains a critical challenge when deploying AI in sensitive domains.

Table of Comparison

| Aspect | Black Box Models | Interpretable Models |
|---|---|---|
| Definition | Complex AI models with hidden internal processes | AI models with transparent and understandable mechanisms |
| Examples | Deep Neural Networks, Ensemble Methods | Decision Trees, Linear Regression, Rule-Based Models |
| Transparency | Low; internal logic not readily visible | High; clear reasoning and logic |
| Explainability | Difficult; requires specialized tools for interpretation | Easy; outputs directly traceable to inputs |
| Performance | Often higher accuracy on complex data | Usually simpler; may sacrifice accuracy on complex data |
| Use Cases | Image recognition, speech processing, complex pattern detection | Regulatory environments, healthcare decisions, risk assessment |
| Risk | Higher risk; susceptible to hidden bias and errors | Lower risk; easier to audit and validate |
| Model Development | Requires large datasets and computational power | Can work with smaller datasets; faster training |

Understanding Black Box Models in AI

Black box models in artificial intelligence, such as deep neural networks and ensemble methods, often achieve high accuracy but lack transparency in their decision-making processes, making it challenging to understand how input features influence outputs. Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) interpret these complex models after the fact: LIME approximates the model's local behavior around a single prediction with a simpler surrogate, while SHAP attributes each prediction to its input features using Shapley values. Understanding black box models is crucial for deploying AI in sensitive domains like healthcare and finance, where explainability supports trust, compliance, and ethical decision-making.
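To make the idea of feature attribution concrete, here is a minimal sketch of exact Shapley values computed by brute force for a tiny model. It is not the SHAP library's API, which relies on approximations to scale; the `black_box` function, its three features, and the all-zero baseline are hypothetical and exist only for illustration.

```python
from itertools import permutations

def black_box(x):
    """Hypothetical opaque model: a nonlinear score over three features."""
    income, debt, age = x
    return 0.5 * income - 0.8 * debt + 0.1 * income * (age > 30)

def shapley_values(model, x, baseline):
    """Exact Shapley attribution: average each feature's marginal
    contribution over every feature ordering (only feasible for a
    handful of features, since the cost grows factorially)."""
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)      # start from the reference input
        prev = model(current)
        for i in order:
            current[i] = x[i]         # switch feature i to its real value
            new = model(current)
            phi[i] += new - prev      # marginal contribution of feature i
            prev = new
    return [p / len(orderings) for p in phi]

x = [4.0, 2.0, 45.0]                  # instance to explain (assumed values)
baseline = [0.0, 0.0, 0.0]            # assumed reference input
phi = shapley_values(black_box, x, baseline)
for name, value in zip(["income", "debt", "age"], phi):
    print(f"{name:>6}: {value:+.2f}")
```

The attributions sum to the difference between the model's output on `x` and on the baseline, which is the property that makes Shapley-based explanations additive.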

Defining Interpretable Models: Clarity in Decision-Making

Interpretable models offer transparency by providing clear, understandable reasoning behind their predictions, which is essential for trust and accountability in artificial intelligence applications. These models prioritize simplicity and explainability, often using linear regression, decision trees, or rule-based systems that allow users to trace how inputs are transformed into outputs. Clarity in decision-making reduces the risks associated with black box models, enabling stakeholders to validate, audit, and refine AI behavior effectively.
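As a small illustration of tracing inputs to outputs, the sketch below scores one record with a linear model and reports each feature's additive contribution. The coefficients, feature names, and applicant values are hypothetical, chosen only to show the mechanism.

```python
# Hypothetical coefficients for an illustrative linear risk score.
INTERCEPT = 0.2
WEIGHTS = {"income": -0.03, "debt_ratio": 1.5, "late_payments": 0.25}

def explain_linear(features):
    """Return the prediction and each feature's additive contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    prediction = INTERCEPT + sum(contributions.values())
    return prediction, contributions

applicant = {"income": 5.0, "debt_ratio": 0.4, "late_payments": 2}
score, parts = explain_linear(applicant)

# List contributions from largest to smallest magnitude.
for name, value in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>14}: {value:+.2f}")
print(f"{'score':>14}: {score:+.2f}")
```

Because the prediction is just the intercept plus the listed contributions, an auditor can check every term by hand.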

Key Differences Between Black Box and Interpretable Models

Black box models, such as deep neural networks, provide high predictive accuracy but lack transparency, making it difficult to understand how inputs are transformed into outputs. Interpretable models, like decision trees and linear regression, offer clear and understandable reasoning paths, which makes them easier to validate and trust in critical applications. The key difference lies in the trade-off between model complexity and explainability, with black box models excelling in complex pattern recognition and interpretable models prioritizing transparency and user comprehension.

The Importance of Model Transparency in Artificial Intelligence

Model transparency in artificial intelligence is critical for ensuring trust, accountability, and ethical decision-making, especially when deploying black box models whose internal workings remain obscure. Interpretable models enable stakeholders to understand, validate, and challenge AI-driven outcomes, helping to expose biases and support compliance with regulations such as the GDPR. Transparent AI systems facilitate debugging and improvement by revealing the rationale behind predictions, promoting both reliability and user confidence in applications ranging from healthcare diagnostics to financial forecasting.

Use Cases Best Suited for Black Box Models

Black box models excel in complex pattern recognition tasks such as image and speech recognition, where high accuracy is paramount despite limited interpretability. These models suit applications like fraud detection, medical diagnosis, and autonomous driving, where models trained on vast datasets capture intricate relationships that simple rule-based systems miss. Organizations prioritize black box models when predictive performance outweighs the need for transparent explanations of each decision.

Applications Where Interpretable Models Excel

Interpretable models excel in high-stakes applications such as healthcare diagnostics, financial risk assessment, and legal decision-making where transparency and explainability are crucial. These models provide clear insights into their decision processes, enabling stakeholders to validate predictions and ensure compliance with regulatory standards. Their ability to foster trust and facilitate error analysis makes them indispensable in environments where accountability is paramount.

Regulatory and Ethical Implications of Model Choice

Black box models, often praised for their high accuracy, pose significant regulatory challenges because their lack of transparency makes it difficult to demonstrate compliance with data protection and fairness laws such as the GDPR and the EU AI Act. Interpretable models offer a clearer understanding of decision-making processes, supporting ethical accountability and facilitating audits required by regulatory bodies. Choosing interpretable AI models can enhance trustworthiness and help mitigate risks of bias and discrimination, aligning with emerging legal frameworks and societal expectations for responsible AI deployment.

Challenges in Achieving Interpretability in AI

Achieving interpretability in AI faces significant challenges due to the complexity and opacity of black box models such as deep neural networks and ensemble methods, which often prioritize accuracy over transparency. Interpretable models require clear, human-understandable reasoning paths, but scaling such models to handle high-dimensional data and complex patterns without sacrificing performance remains difficult. Furthermore, balancing model explainability with robustness and generalization across diverse datasets presents ongoing obstacles in developing trustworthy AI systems.

Hybrid Approaches: Bridging Black Box and Interpretability

Hybrid approaches in artificial intelligence combine black box models' high predictive accuracy with interpretable models' transparency, enhancing trust and usability. Techniques such as model distillation, attention mechanisms, and explainable AI frameworks enable the extraction of human-understandable insights from complex algorithms. These methods bridge the gap between performance and interpretability, facilitating better decision-making in critical applications like healthcare and finance.
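One simple, distillation-style way to extract a readable rule from an opaque model is to fit a small surrogate to the black box's own predictions and measure how often the two agree. The sketch below assumes a hypothetical `black_box` classifier over two features and uses a one-split decision stump as the student; real distillation pipelines typically use richer students and held-out data.

```python
import random

def black_box(x):
    """Hypothetical opaque classifier over two features in [0, 1]."""
    return 1 if (x[0] ** 2 + 0.3 * x[1]) > 0.5 else 0

def fit_stump(samples, labels):
    """Find the single feature/threshold rule that best mimics the labels.
    Returns (feature index, threshold, agreement rate on the samples)."""
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            preds = [1 if s[feature] > threshold else 0 for s in samples]
            agreement = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            if best is None or agreement > best[2]:
                best = (feature, threshold, agreement)
    return best

random.seed(0)
samples = [(random.random(), random.random()) for _ in range(300)]
teacher_labels = [black_box(s) for s in samples]   # labels come from the black box

feature, threshold, fidelity = fit_stump(samples, teacher_labels)
print(f"surrogate rule: predict 1 if x[{feature}] > {threshold:.2f} "
      f"(agrees with the black box on {fidelity:.0%} of samples)")
```

The agreement rate (often called fidelity) indicates how far the readable rule can be trusted as a summary of the black box; here it is measured on the same samples used for fitting, so it is an optimistic estimate.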

The Future of Model Explainability in Artificial Intelligence

The future of model explainability in artificial intelligence hinges on advancing interpretable models that enhance transparency without sacrificing performance, enabling stakeholders to trust AI-driven decisions. Emerging techniques in explainable AI (XAI) aim to demystify black box models through methods like SHAP values, LIME, and counterfactual explanations, providing actionable insights into complex neural networks and ensemble models. Regulatory pressures and ethical considerations further drive the adoption of interpretable models, ensuring accountability and fairness in AI applications across healthcare, finance, and autonomous systems.
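A counterfactual explanation answers the question "what is the smallest change that would have altered the outcome?". The following is a minimal sketch that searches along a single feature in fixed steps; the `black_box` loan classifier, its coefficients, and the applicant record are hypothetical, and practical methods search over several features with a distance penalty.

```python
def black_box(applicant):
    """Hypothetical opaque loan classifier: 1 = approve, 0 = reject."""
    score = 0.04 * applicant["income"] - 0.5 * applicant["late_payments"]
    return 1 if score >= 1.0 else 0

def counterfactual(model, instance, feature, step, max_steps=100):
    """Increase one feature in fixed steps until the prediction flips.
    Returns the first modified instance with a different prediction,
    or None if no flip occurs within the search budget."""
    original = model(instance)
    candidate = dict(instance)
    for n in range(1, max_steps + 1):
        candidate[feature] = instance[feature] + n * step
        if model(candidate) != original:
            return candidate
    return None

applicant = {"income": 30, "late_payments": 1}
cf = counterfactual(black_box, applicant, "income", step=1)
if cf is not None:
    print(f"decision flips when income rises from "
          f"{applicant['income']} to {cf['income']}")
```

The result reads as an actionable statement for the affected person, without exposing the model's internals.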

Black Box Models vs Interpretable Models Infographic

techiny.com
