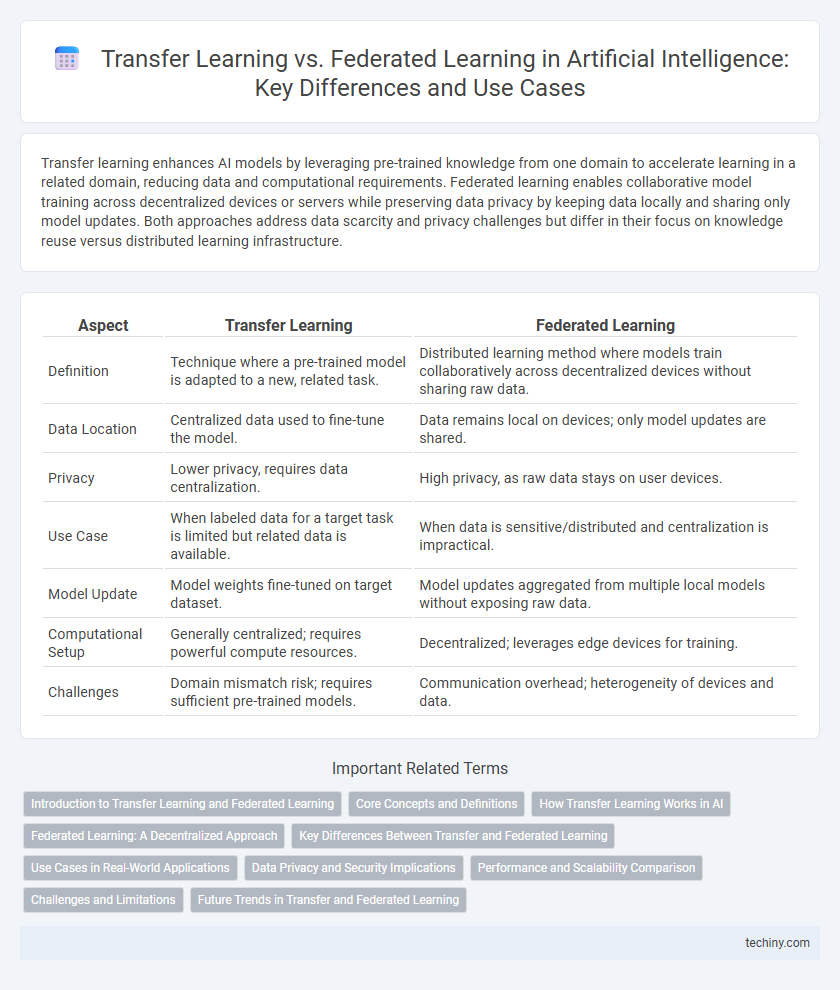

Transfer learning enhances AI models by reusing knowledge pre-trained in one domain to accelerate learning in a related domain, reducing data and computational requirements. Federated learning enables collaborative model training across decentralized devices or servers while preserving data privacy by keeping data local and sharing only model updates. Both approaches address data scarcity and privacy challenges but differ in focus: knowledge reuse versus distributed learning infrastructure.

Table of Comparison

| Aspect | Transfer Learning | Federated Learning |
|---|---|---|
| Definition | Technique where a pre-trained model is adapted to a new, related task. | Distributed learning method where models train collaboratively across decentralized devices without sharing raw data. |
| Data Location | Centralized data used to fine-tune the model. | Data remains local on devices; only model updates are shared. |
| Privacy | Lower privacy, requires data centralization. | High privacy, as raw data stays on user devices. |
| Use Case | When labeled data for a target task is limited but related data is available. | When data is sensitive/distributed and centralization is impractical. |
| Model Update | Model weights fine-tuned on target dataset. | Model updates aggregated from multiple local models without exposing raw data. |
| Computational Setup | Generally centralized; requires powerful compute resources. | Decentralized; leverages edge devices for training. |
| Challenges | Domain mismatch risk; requires sufficient pre-trained models. | Communication overhead; heterogeneity of devices and data. |

Introduction to Transfer Learning and Federated Learning

Transfer learning enables AI models to leverage pre-trained knowledge from one task to improve learning efficiency on a related but distinct task, significantly reducing the need for large labeled datasets. Federated learning allows multiple decentralized devices to collaboratively train a shared model while keeping data localized, enhancing privacy and security. Both techniques address data scarcity and privacy challenges but differ fundamentally in their approach to data utilization and model training distribution.

Core Concepts and Definitions

Transfer Learning involves leveraging knowledge gained from a pre-trained model on one task to improve performance on a related but different task, enabling faster and more efficient model training with limited data. Federated Learning is a decentralized approach where multiple edge devices collaboratively train a shared model while keeping their data localized, enhancing privacy and reducing data transmission. Both methods address different challenges in AI: Transfer Learning accelerates model adaptation, whereas Federated Learning focuses on privacy-preserving, distributed model training.

How Transfer Learning Works in AI

Transfer learning in AI takes a model pre-trained on a large dataset and applies its learned features to a new, related task for which only limited data is available. Because the existing model is fine-tuned rather than trained from scratch, training is faster, needs less compute, and often reaches better performance. Transfer learning is particularly effective in domains where labeled data is scarce but the features learned on the source task carry over to the target task.
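As a rough illustration of fine-tuning rather than training from scratch, the sketch below freezes a stand-in "pre-trained" feature layer and trains only a new classification head on four target examples. The weights in `PRETRAINED_WEIGHTS`, the toy dataset, and all function names are assumptions made for illustration; a real workflow would load an actual pre-trained network instead.

```python
import math

# Hypothetical "pre-trained" layer: these weights stand in for features
# learned on a large source dataset and are never updated below.
PRETRAINED_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]

def extract_features(x):
    """Apply the frozen layer (linear map followed by ReLU)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
            for row in PRETRAINED_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            feats = extract_features(x)
            pred = sigmoid(sum(h * f for h, f in zip(head, feats)) + bias)
            err = pred - y  # gradient of the log loss with respect to the logit
            head = [h - lr * err * f for h, f in zip(head, feats)]
            bias -= lr * err
    return head, bias

def predict(head, bias, x):
    feats = extract_features(x)
    score = sum(h * f for h, f in zip(head, feats)) + bias
    return 1 if sigmoid(score) >= 0.5 else 0

# Small target dataset: label is 1 when the first input exceeds the second.
target_data = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
head, bias = fine_tune_head(target_data)
predictions = [predict(head, bias, x) for x, _ in target_data]
```

Only `head` and `bias` change during training, which is why so few target examples are enough here: the frozen layer already separates the two classes.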

Federated Learning: A Decentralized Approach

Federated Learning enables decentralized model training by allowing multiple devices or servers to collaboratively learn a shared model while keeping the data localized, enhancing privacy and security. Unlike Transfer Learning, which relies on pre-trained models adapted to new tasks, Federated Learning emphasizes data sovereignty and communication efficiency across distributed nodes. This approach is crucial in industries like healthcare and finance, where sensitive data cannot be centralized but collective intelligence is required for robust AI development.
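The idea of sharing model updates instead of raw data can be sketched with a sample-weighted average of client weights, in the style of federated averaging. This is one common aggregation scheme, chosen here as an assumption; the two clients, their data, the linear model, and the function names are all hypothetical.

```python
def local_update(global_weights, local_data, lr=0.05, epochs=5):
    """One client's training pass: fit y = w*x + b on data that stays on the client."""
    w, b = global_weights
    n = len(local_data)
    for _ in range(epochs):
        # Gradients of the mean squared error over the local samples
        grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # Only the updated weights and the sample count are returned, not the data
    return (w, b), n

def federated_average(client_results):
    """Server step: average client weights, weighted by local sample count."""
    total = sum(n for _, n in client_results)
    w = sum(weights[0] * n for weights, n in client_results) / total
    b = sum(weights[1] * n for weights, n in client_results) / total
    return (w, b)

# Hypothetical clients, each holding private samples of y = 2x + 1
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]

global_weights = (0.0, 0.0)
for _ in range(300):  # communication rounds
    results = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(results)
```

After the rounds complete, `global_weights` should be close to `(2.0, 1.0)` even though the server never sees a single `(x, y)` pair.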

Key Differences Between Transfer and Federated Learning

Transfer Learning takes models pre-trained on large datasets and fine-tunes them with smaller, domain-specific data to improve performance on related but different tasks. Federated Learning enables decentralized model training across multiple devices or servers while preserving data privacy, aggregating locally computed updates without sharing raw data. The key difference lies in Transfer Learning's emphasis on knowledge reuse for task adaptation versus Federated Learning's focus on collaborative training under strict privacy constraints.

Use Cases in Real-World Applications

Transfer learning accelerates model development by leveraging pre-trained neural networks for tasks like medical imaging diagnosis and natural language processing, enabling efficient adaptation with limited data. Federated learning enhances data privacy in sectors such as finance and healthcare by training models across decentralized devices without sharing sensitive information. Both techniques optimize AI deployment in real-world applications, balancing data availability and privacy requirements.

Data Privacy and Security Implications

Transfer learning can limit how much sensitive data has to be collected, because a model pre-trained on large datasets typically needs only a small target dataset for fine-tuning; that target data, however, is usually still gathered in one place. Federated learning allows multiple decentralized devices to collaboratively train AI models while keeping raw data local, which avoids centralized data storage and reduces the risk of breaches. Both methods address data privacy concerns, but federated learning offers stronger protection against data leakage because only model updates, sent over encrypted channels, leave each device.

Performance and Scalability Comparison

Transfer learning enhances performance by starting from models pre-trained on large datasets, enabling faster convergence and improved accuracy on related tasks with limited data. Federated learning excels in scalability by distributing training across diverse edge devices while maintaining data privacy, though communication overhead and heterogeneous data can slow convergence. In scenarios requiring rapid model adaptation, transfer learning is preferred, whereas federated learning suits applications demanding decentralized, privacy-preserving collaboration at scale.
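To get a feel for the communication overhead mentioned above, a back-of-the-envelope estimate can help. The sketch assumes every participating client downloads and then uploads the full model each round with no compression; the model size, client count, and round count are made-up figures.

```python
def round_traffic_bytes(num_params, clients_per_round, bytes_per_param=4):
    """Bytes moved in one round, assuming each client downloads and uploads
    the full model once (4 bytes per parameter corresponds to float32)."""
    return 2 * num_params * clients_per_round * bytes_per_param

# Hypothetical setup: 1M-parameter model, 100 clients per round, 50 rounds
per_round = round_traffic_bytes(1_000_000, 100)
total_gb = per_round * 50 / 1e9  # 40.0 GB in total
```

Traffic grows linearly with model size, clients per round, and number of rounds, which is why slow convergence on heterogeneous data directly raises the communication bill.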

Challenges and Limitations

Transfer learning faces challenges such as domain mismatch and limited model generalization when source and target tasks differ significantly, which reduces accuracy on the target task. Federated learning encounters limitations including communication overhead, data heterogeneity, and stringent privacy constraints, which complicate model aggregation and convergence. Both techniques require careful tuning to balance performance, computational cost, and security.

Future Trends in Transfer and Federated Learning

Emerging trends in transfer learning emphasize domain adaptation techniques that enhance model generalization across diverse data distributions, accelerating AI deployment in real-world applications. Federated learning advancements prioritize privacy-preserving algorithms and scalable framework designs to support decentralized AI training on edge devices, crucial for industries like healthcare and finance. Integration of both paradigms is expected to drive collaborative intelligence, enabling models to learn from heterogeneous, distributed data while maintaining robust privacy standards.

Transfer Learning vs Federated Learning Infographic

techiny.com
