One-hot encoding represents categorical variables as binary vectors, which can become sparse and high-dimensional with many categories, leading to inefficiencies in storage and computation. Embedding maps categories to dense, low-dimensional vectors that capture semantic relationships and improve model performance in natural language processing and recommendation systems. Choosing embeddings over one-hot encoding reduces dimensionality and enhances the ability of AI models to learn meaningful patterns from data.

Table of Comparison

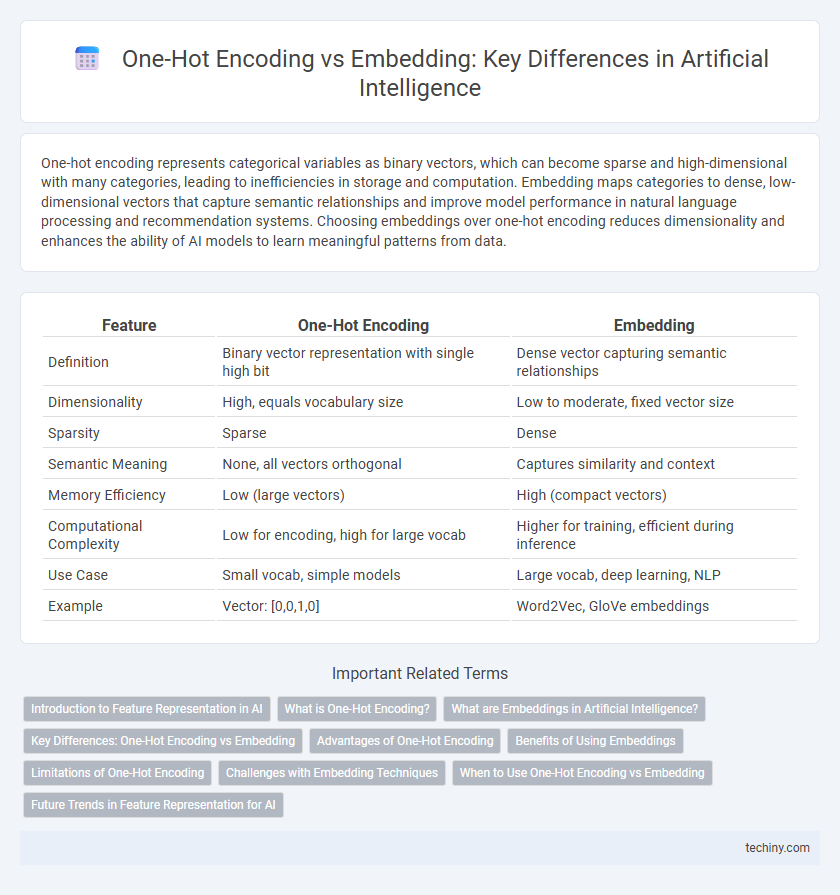

| Feature | One-Hot Encoding | Embedding |

|---|---|---|

| Definition | Binary vector representation with single high bit | Dense vector capturing semantic relationships |

| Dimensionality | High, equals vocabulary size | Low to moderate, fixed vector size |

| Sparsity | Sparse | Dense |

| Semantic Meaning | None, all vectors orthogonal | Captures similarity and context |

| Memory Efficiency | Low (large vectors) | High (compact vectors) |

| Computational Complexity | Low for encoding, high for large vocab | Higher for training, efficient during inference |

| Use Case | Small vocab, simple models | Large vocab, deep learning, NLP |

| Example | Vector: [0,0,1,0] | Word2Vec, GloVe embeddings |

Introduction to Feature Representation in AI

One-hot encoding transforms categorical variables into sparse binary vectors, enabling AI models to interpret discrete data without implying ordinal relationships. Embedding techniques represent features as dense, low-dimensional vectors, capturing semantic similarity and contextual relationships more effectively than one-hot encoding. These feature representation methods significantly influence model performance and scalability in natural language processing and other AI applications.

What is One-Hot Encoding?

One-Hot Encoding is a method used in artificial intelligence to represent categorical data as binary vectors, where each category is transformed into a vector with all zeros except for a single one indicating the presence of that category. This technique enables machine learning algorithms to process categorical variables by converting them into a numerical format without implying any ordinal relationship. One-Hot Encoding is commonly applied in natural language processing and classification tasks for effective handling of nominal data.

What are Embeddings in Artificial Intelligence?

Embeddings in Artificial Intelligence are dense vector representations of categorical data or words, capturing semantic relationships and contextual meanings in a continuous vector space. Unlike one-hot encoding, which creates sparse and high-dimensional binary vectors, embeddings reduce dimensionality and improve the efficiency and accuracy of machine learning models. Commonly used in natural language processing, embeddings enable algorithms to understand and process language data by encoding similarity and relational patterns between entities.

Key Differences: One-Hot Encoding vs Embedding

One-hot encoding represents categorical variables as sparse binary vectors, where each category corresponds to a unique dimension with a value of one, resulting in high-dimensional and memory-intensive data. In contrast, embeddings convert categories into dense, low-dimensional vectors that capture semantic relationships and similarities between categories through continuous-valued features. This allows embeddings to improve model performance and scalability in natural language processing and machine learning tasks by efficiently encoding contextual information.

Advantages of One-Hot Encoding

One-Hot Encoding offers simplicity and ease of implementation by transforming categorical data into a binary vector format that machine learning models can directly interpret. It ensures no loss of information or semantic bias, providing a clear and explicit representation of categories without implying relationships between them. This method excels in scenarios with a limited number of categories, where interpretability and straightforward data preparation are crucial.

Benefits of Using Embeddings

Embeddings capture semantic relationships between data points by mapping categorical variables into continuous vector spaces, enabling models to learn more nuanced patterns compared to one-hot encoding's sparse and high-dimensional vectors. This dense representation reduces memory usage and computational complexity, enhancing model efficiency and scalability in tasks like natural language processing and recommendation systems. Embeddings also facilitate transfer learning, allowing pretrained vectors to improve performance across various AI applications by leveraging contextual knowledge.

Limitations of One-Hot Encoding

One-Hot Encoding results in high-dimensional sparse vectors, leading to increased computational complexity and memory usage in AI models. It fails to capture semantic relationships or contextual similarities between categories, limiting model performance on natural language tasks. This lack of expressiveness often necessitates more advanced embedding techniques for meaningful feature representation.

Challenges with Embedding Techniques

Embedding techniques in artificial intelligence face challenges such as handling out-of-vocabulary words, which can lead to incomplete semantic representations. High computational costs and large memory requirements hinder real-time applications and scalability in complex models. Furthermore, embeddings may struggle with capturing polysemy and contextual nuances, affecting the accuracy of natural language understanding tasks.

When to Use One-Hot Encoding vs Embedding

One-hot encoding is ideal for categorical variables with a small number of unique classes, offering simplicity and interpretable features without introducing dimensionality issues. Embedding is better suited for high-cardinality categorical data or natural language processing tasks, as it captures semantic relationships by mapping categories into dense, continuous vectors. Use embeddings when model performance benefits from learned feature representations, especially in deep learning applications where capturing context and similarity is crucial.

Future Trends in Feature Representation for AI

Future trends in feature representation for AI emphasize a shift from traditional one-hot encoding to advanced embedding techniques that capture semantic relationships and contextual nuances more effectively. Embeddings leverage deep learning to transform categorical data into dense vectors, enabling improved model performance and scalability in complex tasks such as natural language processing and computer vision. Research focuses on dynamic, task-specific embeddings that adapt over time, enhancing the interpretability and efficiency of AI models in real-world applications.

One-Hot Encoding vs Embedding Infographic

techiny.com

techiny.com