Retrieval-Augmented Generation (RAG) enhances traditional generation models by integrating external knowledge sources during the text generation process, improving accuracy and relevance. Unlike traditional generation, which solely relies on pre-trained data, RAG dynamically retrieves pertinent information, enabling more context-aware and factually grounded outputs. This hybrid approach addresses limitations in model knowledge scope, resulting in more reliable and informative AI-generated content.

Table of Comparison

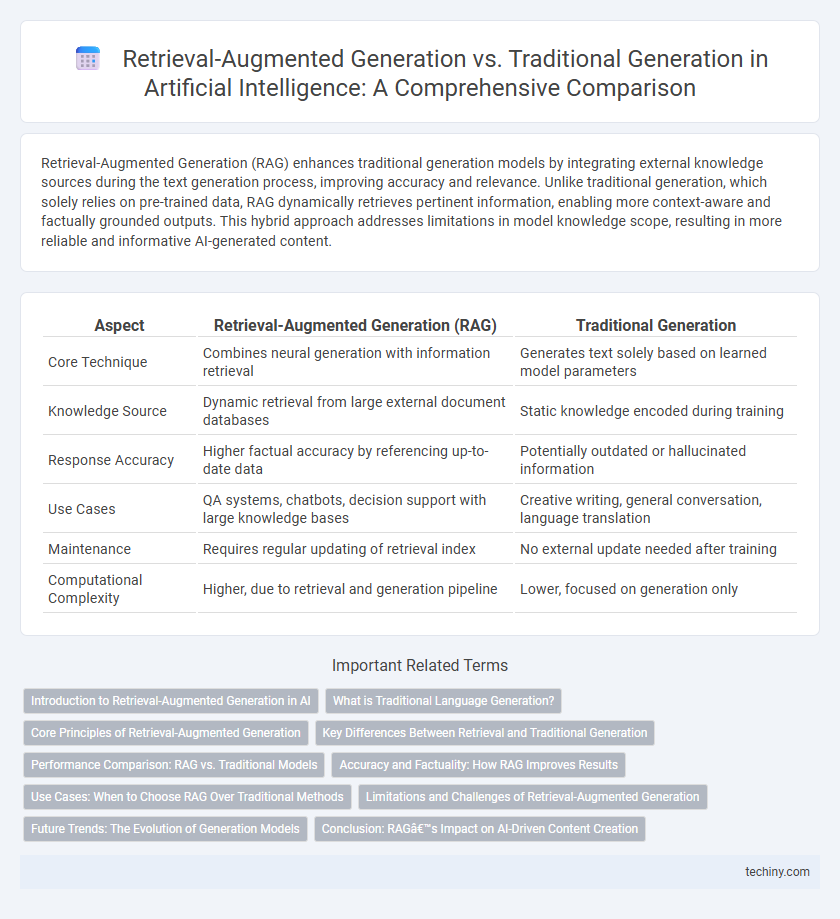

| Aspect | Retrieval-Augmented Generation (RAG) | Traditional Generation |

|---|---|---|

| Core Technique | Combines neural generation with information retrieval | Generates text solely based on learned model parameters |

| Knowledge Source | Dynamic retrieval from large external document databases | Static knowledge encoded during training |

| Response Accuracy | Higher factual accuracy by referencing up-to-date data | Potentially outdated or hallucinated information |

| Use Cases | QA systems, chatbots, decision support with large knowledge bases | Creative writing, general conversation, language translation |

| Maintenance | Requires regular updating of retrieval index | No external update needed after training |

| Computational Complexity | Higher, due to retrieval and generation pipeline | Lower, focused on generation only |

Introduction to Retrieval-Augmented Generation in AI

Retrieval-Augmented Generation (RAG) in AI combines pre-trained language models with external knowledge sources, enabling dynamic information retrieval during text generation. Unlike traditional generation methods relying solely on learned parameters, RAG improves accuracy and relevance by accessing up-to-date data from databases or documents. This hybrid approach enhances context understanding and reduces hallucination in generated outputs.

What is Traditional Language Generation?

Traditional language generation relies on pre-trained models that generate text solely based on learned patterns and probabilities from fixed training data without access to external information sources. These models produce responses by predicting the next word in a sequence using internal knowledge, which can lead to outdated or generic content. Unlike Retrieval-Augmented Generation, traditional generation lacks real-time access to specific, contextual data, limiting its accuracy and relevance in dynamic applications.

Core Principles of Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) integrates external knowledge bases with machine learning models to enhance response accuracy and relevance by dynamically retrieving pertinent data during generation. Unlike Traditional Generation, which relies solely on pre-trained knowledge within the model, RAG employs a dual-stage process combining retrieval and generation components, enabling contextual adaptability and up-to-date information incorporation. This core principle of leveraging real-time access to large-scale databases significantly improves performance in complex query handling and open-domain question answering tasks.

Key Differences Between Retrieval and Traditional Generation

Retrieval-Augmented Generation (RAG) integrates external knowledge sources by retrieving relevant documents to enhance response accuracy, while Traditional Generation relies solely on pre-trained model parameters. RAG dynamically accesses vast, up-to-date information, improving factual correctness and contextual relevance, whereas Traditional Generation may produce outdated or hallucinated content. This fundamental difference enables RAG to combine retrieval mechanisms with generative models, significantly advancing performance in complex question answering and knowledge-intensive tasks.

Performance Comparison: RAG vs. Traditional Models

Retrieval-Augmented Generation (RAG) models outperform traditional generation models by integrating external knowledge sources, resulting in higher accuracy and relevance in responses. RAG leverages large-scale document retrieval alongside neural generation, reducing hallucination rates compared to purely generative approaches. Performance benchmarks consistently show RAG models achieving superior F1 scores and lower latency in complex query tasks than traditional models like GPT and BERT.

Accuracy and Factuality: How RAG Improves Results

Retrieval-Augmented Generation (RAG) significantly enhances accuracy and factuality by integrating external knowledge bases during the response creation process, enabling the model to access up-to-date and verifiable information. Unlike Traditional Generation models that rely solely on pre-trained data, RAG dynamically retrieves relevant documents, reducing hallucinations and improving the precision of generated content. This fusion of retrieval mechanisms with generative capabilities ensures more reliable and contextually accurate outputs in AI-driven language tasks.

Use Cases: When to Choose RAG Over Traditional Methods

Retrieval-Augmented Generation (RAG) excels in scenarios requiring up-to-date information or domain-specific knowledge, such as customer support and real-time data analysis, by integrating external documents into response generation. Traditional generation methods are preferable for creative writing and applications needing controlled, generalized outputs without reliance on external knowledge bases. RAG is especially beneficial when accuracy and context relevance depend on dynamically retrieved content rather than static training data.

Limitations and Challenges of Retrieval-Augmented Generation

Retrieval-Augmented Generation (RAG) faces challenges such as dependency on the quality and relevance of the retrieved documents, which can introduce inaccuracies or outdated information into generated responses. The integration of retrieval mechanisms increases computational complexity and latency compared to traditional generation models that rely solely on learned parameters. Ensuring seamless alignment between retrieved content and generative output remains a significant limitation, often requiring sophisticated fine-tuning and robust natural language understanding capabilities.

Future Trends: The Evolution of Generation Models

Retrieval-Augmented Generation (RAG) models combine large language models with external data sources, enabling more accurate and contextually relevant responses than Traditional Generation models that rely solely on pre-trained knowledge. Future trends indicate a shift toward hybrid architectures integrating retrieval mechanisms and fine-tuned generation, improving real-time adaptability and factual accuracy. Advances in scalable knowledge graphs and transformer-based retrieval techniques will drive the evolution of more efficient, explainable, and dynamic AI generation systems.

Conclusion: RAG’s Impact on AI-Driven Content Creation

Retrieval-Augmented Generation (RAG) significantly improves AI-driven content creation by integrating external knowledge sources, enabling more accurate and contextually relevant outputs than traditional generation methods. RAG models combine pretrained language models with retrieval components, which enhances factual correctness and reduces hallucinations common in standalone generative models. This hybrid approach accelerates content generation while maintaining high-quality information, revolutionizing applications in education, customer support, and creative industries.

Retrieval-Augmented Generation vs Traditional Generation Infographic

techiny.com

techiny.com