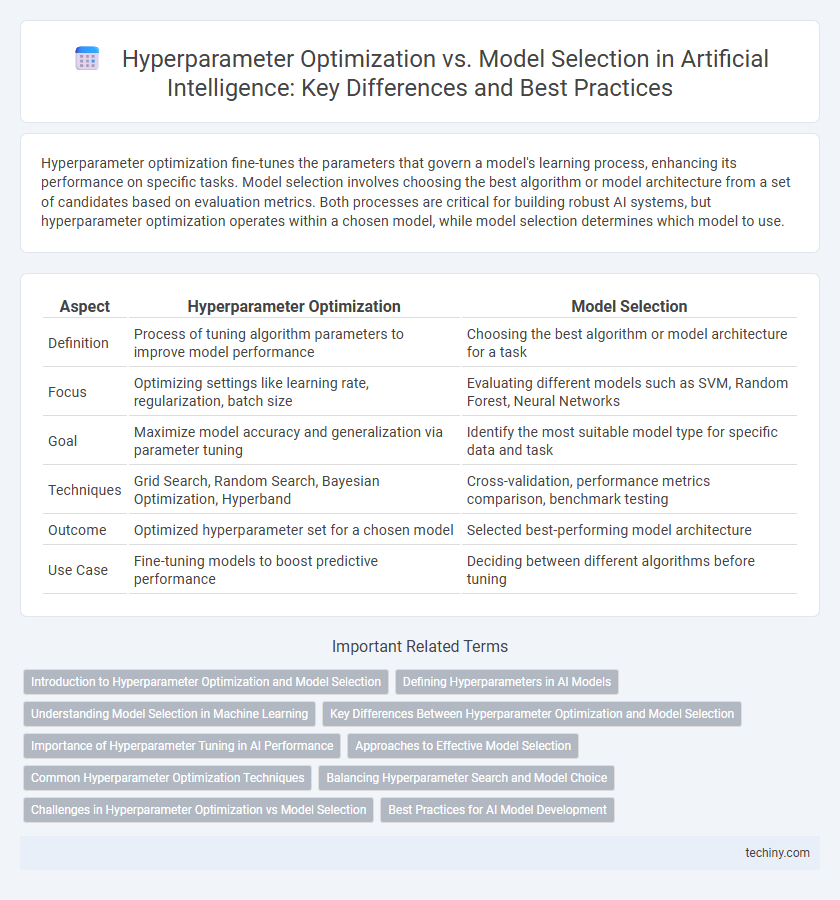

Hyperparameter optimization fine-tunes the parameters that govern a model's learning process, enhancing its performance on specific tasks. Model selection involves choosing the best algorithm or model architecture from a set of candidates based on evaluation metrics. Both processes are critical for building robust AI systems, but hyperparameter optimization operates within a chosen model, while model selection determines which model to use.

Table of Comparison

| Aspect | Hyperparameter Optimization | Model Selection |
|---|---|---|
| Definition | Process of tuning algorithm parameters to improve model performance | Choosing the best algorithm or model architecture for a task |
| Focus | Optimizing settings like learning rate, regularization, batch size | Evaluating different models such as SVM, Random Forest, Neural Networks |
| Goal | Maximize model accuracy and generalization via parameter tuning | Identify the most suitable model type for specific data and task |
| Techniques | Grid Search, Random Search, Bayesian Optimization, Hyperband | Cross-validation, performance metrics comparison, benchmark testing |
| Outcome | Optimized hyperparameter set for a chosen model | Selected best-performing model architecture |
| Use Case | Fine-tuning models to boost predictive performance | Deciding between different algorithms before tuning |

Introduction to Hyperparameter Optimization and Model Selection

Hyperparameter optimization involves systematically tuning parameters that govern the learning process of machine learning models to enhance their predictive performance. Model selection focuses on choosing the best algorithm or model architecture based on performance metrics evaluated on validation data. Both processes are critical for building effective artificial intelligence systems by balancing model complexity, accuracy, and generalization ability.

Defining Hyperparameters in AI Models

Hyperparameters in AI models are predefined settings that govern the training process and influence model performance, such as learning rate, batch size, and number of hidden layers. Unlike model parameters learned during training, hyperparameters must be set prior to the learning phase and are crucial for controlling aspects like convergence speed and generalization capability. Effective hyperparameter optimization systematically searches for the best combination of these values to enhance model accuracy and robustness.
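
The distinction between hyperparameters and learned parameters can be made concrete with a minimal sketch. The function name `train_linear`, the toy data, and the specific values below are illustrative assumptions, not taken from any particular library: `learning_rate` and `epochs` are fixed before training starts, while `w` and `b` are learned from the data.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # model parameters: updated during training
    n = len(xs)
    for _ in range(epochs):  # `epochs` is a hyperparameter
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w  # `learning_rate` is a hyperparameter
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

# A reasonable learning rate converges close to w = 2, b = 1.
w, b = train_linear(xs, ys, learning_rate=0.05, epochs=2000)

# On this data, a learning rate that is too large makes the updates diverge.
w_bad, b_bad = train_linear(xs, ys, learning_rate=0.2, epochs=50)
```

The same data and the same model give very different results depending only on the hyperparameters, which is why they are worth searching over.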

Understanding Model Selection in Machine Learning

Model selection in machine learning involves choosing the most appropriate algorithm and its corresponding hyperparameters to achieve the best predictive performance on unseen data. It requires evaluating different models using techniques like cross-validation to balance bias and variance while avoiding overfitting. Effective model selection ensures that the chosen model generalizes well, optimizing accuracy, robustness, and computational efficiency for the specific task.
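
As a rough illustration of comparing candidate models with cross-validation, the sketch below assumes scikit-learn and its bundled Iris dataset, neither of which the article prescribes. The three candidates and their settings are arbitrary choices for the example.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Candidate model families, each with fixed (untuned) hyperparameters.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=1000),
    "svm_rbf": SVC(kernel="rbf"),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

# Mean 5-fold cross-validated accuracy for each candidate.
scores = {
    name: cross_val_score(model, X, y, cv=5).mean()
    for name, model in candidates.items()
}

# Pick the candidate with the highest mean validation accuracy.
best_name = max(scores, key=scores.get)
```

Because every candidate is scored on the same folds with the same metric, the comparison reflects the models rather than a lucky split.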

Key Differences Between Hyperparameter Optimization and Model Selection

Hyperparameter optimization involves fine-tuning parameters within a chosen machine learning algorithm to enhance its predictive performance, such as learning rate or batch size adjustments. Model selection refers to the process of choosing the best algorithm from a set of candidates, like comparing decision trees, support vector machines, or neural networks based on their accuracy or computational efficiency. The key difference lies in hyperparameter optimization focusing on improving a single model's performance, whereas model selection focuses on identifying the most suitable model architecture for a given task.

Importance of Hyperparameter Tuning in AI Performance

Hyperparameter tuning is crucial for maximizing AI model performance because settings such as the learning rate, regularization strength, and architecture configuration shape how well the model generalizes and whether it overfits. Search techniques such as grid search, random search, and Bayesian optimization can improve predictive accuracy and training efficiency over hand-picked defaults. Model selection complements this process by identifying the algorithm best suited to the task, while tuning hyperparameters within that model helps it reach its potential.

Approaches to Effective Model Selection

Effective model selection in artificial intelligence relies on comparing candidate models under a consistent evaluation protocol, with each candidate tuned by hyperparameter optimization techniques such as grid search, random search, or Bayesian optimization so that the comparison is fair. Cross-validation methods, including k-fold and stratified k-fold, make performance estimates more robust and help detect overfitting by evaluating models on several different data partitions. Automated tools such as AutoML frameworks can accelerate the discovery of model configurations suited to specific AI tasks.
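
To show what stratification means in practice, here is a small standard-library sketch of a stratified k-fold split. The helper `stratified_k_fold` is a hypothetical name, and the round-robin assignment is a simplification: it does not shuffle, and it always starts dealing at the first fold, which a production splitter would handle more carefully.

```python
from collections import defaultdict


def stratified_k_fold(labels, k):
    """Return k lists of indices whose class proportions roughly match `labels`."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal each class's indices round-robin so every fold gets a share.
        for position, index in enumerate(indices):
            folds[position % k].append(index)
    return folds


# Imbalanced toy labels: six of class "a", three of class "b".
labels = ["a"] * 6 + ["b"] * 3
folds = stratified_k_fold(labels, k=3)

# Each fold serves once as the validation set; the rest form the training set.
for validation_indices in folds:
    training_indices = [i for i in range(len(labels)) if i not in validation_indices]
```

With these labels, every fold keeps the 2:1 class ratio of the full dataset, so no fold is evaluated on a single class only.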

Common Hyperparameter Optimization Techniques

Common hyperparameter optimization techniques include grid search, random search, and Bayesian optimization, each aiming to improve model performance by systematically tuning hyperparameters. Grid search exhaustively evaluates every combination of the specified values, ensuring comprehensive coverage of the grid but at a computational cost that grows multiplicatively with each added hyperparameter. Random search samples combinations at random under a fixed budget, which is often more efficient when only a few hyperparameters matter. Bayesian optimization fits a probabilistic surrogate model to past results and uses it to choose promising settings to try next, which typically reduces the number of evaluations needed.
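
The contrast between grid search and random search can be sketched with the standard library alone. The `validation_loss` function below is a made-up stand-in for "train a model, then score it on validation data", and the search ranges are assumptions chosen for illustration. Bayesian optimization is left out because it needs a surrogate model that does not fit in a short sketch.

```python
import itertools
import math
import random


def validation_loss(lr, reg):
    # Hypothetical objective: lowest near lr = 10**-2.7 and reg = 10**-4.
    return (math.log10(lr) + 2.7) ** 2 + 0.1 * (math.log10(reg) + 4.0) ** 2


def grid_search(lr_values, reg_values):
    """Evaluate every combination on the grid and return the best pair."""
    return min(
        itertools.product(lr_values, reg_values),
        key=lambda pair: validation_loss(*pair),
    )


def random_search(n_trials, seed=0):
    """Sample pairs log-uniformly and return the best one found."""
    rng = random.Random(seed)
    trials = [
        (10 ** rng.uniform(-5, 0), 10 ** rng.uniform(-6, -2))
        for _ in range(n_trials)
    ]
    return min(trials, key=lambda pair: validation_loss(*pair))


# Both searches get the same budget of 16 evaluations.
grid_best = grid_search([1e-4, 1e-3, 1e-2, 1e-1], [1e-6, 1e-5, 1e-4, 1e-3])
rand_best = random_search(n_trials=16)
```

Grid search can only return points that lie on the grid, so it misses the optimum between `1e-3` and `1e-2`. Random search may or may not land closer with the same budget, depending on the seed.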

Balancing Hyperparameter Search and Model Choice

Balancing hyperparameter optimization and model selection is critical for maximizing AI performance while controlling computational costs. Efficient search techniques like Bayesian optimization reduce the number of configurations that must be evaluated, whereas model selection prioritizes architectural choices that align with the specific task. Integrating both strategies, by tuning each candidate model and then comparing the tuned candidates, lets predictive accuracy benefit from both well-chosen hyperparameters and a suitable model family.
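
One possible way to combine the two steps is a single cross-validated search whose grid covers both the choice of estimator and that estimator's hyperparameters. The sketch below assumes scikit-learn and the Iris dataset, which the article does not mention. The step names `scale` and `model` and all grid values are arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Hold out a test set that the search never sees.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# The "model" step is a placeholder that the grid swaps out.
pipeline = Pipeline([("scale", StandardScaler()), ("model", SVC())])

# One sub-grid per model family: model selection and tuning in one search.
param_grid = [
    {
        "model": [SVC()],
        "model__C": [0.1, 1.0, 10.0],
        "model__gamma": ["scale", 0.1],
    },
    {
        "model": [RandomForestClassifier(random_state=0)],
        "model__n_estimators": [50, 200],
        "model__max_depth": [None, 3],
    },
]

search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

# best_params_ records both the winning estimator and its hyperparameters.
best_params = search.best_params_
test_accuracy = search.score(X_test, y_test)
```

Reporting accuracy on the held-out split, rather than the best cross-validation score, gives an estimate that the search itself could not tune against.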

Challenges in Hyperparameter Optimization vs Model Selection

Hyperparameter optimization faces challenges such as high computational costs and the curse of dimensionality when tuning numerous parameters across complex search spaces. Model selection struggles with balancing bias-variance trade-offs and ensuring generalizability while avoiding overfitting to validation datasets. Both processes require sound validation strategies and well-chosen evaluation metrics to achieve good performance in machine learning pipelines.
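
The computational cost of an exhaustive grid is simple arithmetic: the number of configurations is the product of the number of values tried for each hyperparameter, and cross-validation multiplies that by the number of folds. The helper names and counts below are illustrative assumptions.

```python
import math


def grid_size(values_per_hyperparameter):
    """Number of configurations in a full grid: product of per-axis counts."""
    return math.prod(values_per_hyperparameter)


def total_fits(values_per_hyperparameter, cv_folds):
    """Model fits needed to cross-validate every grid configuration."""
    return grid_size(values_per_hyperparameter) * cv_folds


# Three hyperparameters with five values each, 5-fold cross-validation.
small = total_fits([5, 5, 5], cv_folds=5)           # 125 configs * 5 = 625 fits

# Doubling the number of hyperparameters to six.
large = total_fits([5, 5, 5, 5, 5, 5], cv_folds=5)  # 15625 configs * 5 = 78125 fits
```

Going from three to six hyperparameters multiplies the work by 125, which is why random or model-guided search is usually preferred for larger search spaces.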

Best Practices for AI Model Development

Hyperparameter optimization involves systematically tuning parameters such as learning rate, batch size, and regularization strength to enhance model performance, whereas model selection focuses on choosing the best algorithm or architecture among candidates like neural networks, decision trees, or support vector machines. Best practices for AI model development recommend combining grid search or Bayesian optimization for hyperparameter tuning with cross-validation to obtain robust evaluations and reduce the risk of overfitting. Automated tools like AutoML platforms can accelerate the iterative process of optimizing hyperparameters and selecting models, leading to efficient and accurate AI solutions.

Hyperparameter Optimization vs Model Selection Infographic

techiny.com
