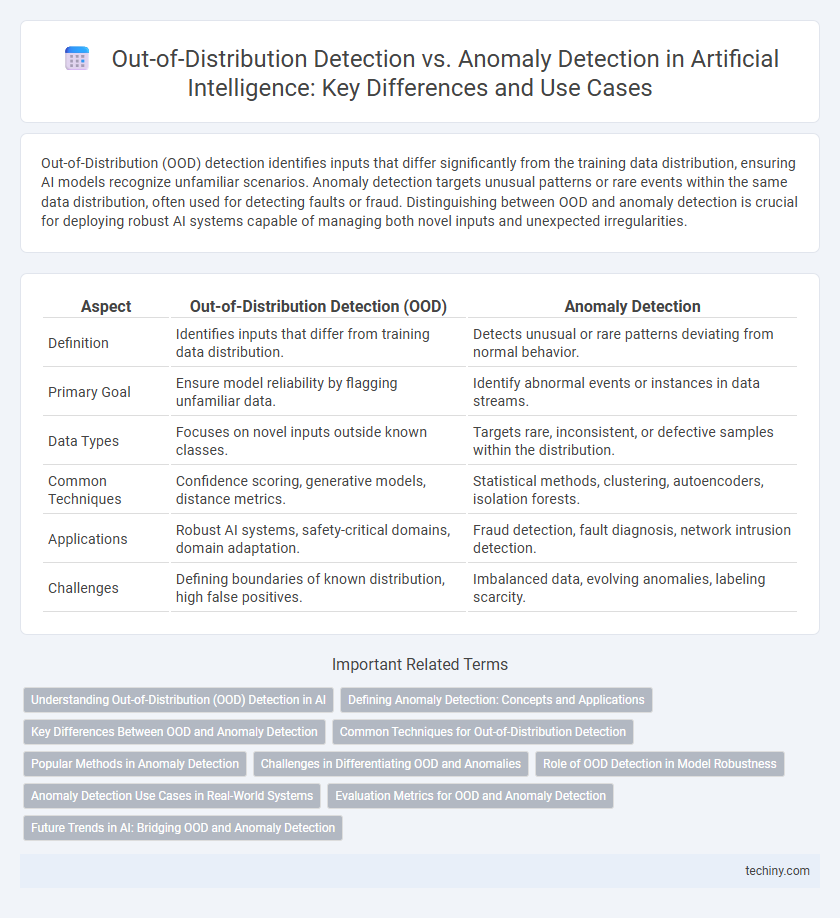

Out-of-Distribution (OOD) detection identifies inputs that differ significantly from the training data distribution, ensuring AI models recognize unfamiliar scenarios. Anomaly detection targets unusual patterns or rare events within the same data distribution, often used for detecting faults or fraud. Distinguishing between OOD and anomaly detection is crucial for deploying robust AI systems capable of managing both novel inputs and unexpected irregularities.

Table of Comparison

| Aspect | Out-of-Distribution Detection (OOD) | Anomaly Detection |

|---|---|---|

| Definition | Identifies inputs that differ from training data distribution. | Detects unusual or rare patterns deviating from normal behavior. |

| Primary Goal | Ensure model reliability by flagging unfamiliar data. | Identify abnormal events or instances in data streams. |

| Data Types | Focuses on novel inputs outside known classes. | Targets rare, inconsistent, or defective samples within the distribution. |

| Common Techniques | Confidence scoring, generative models, distance metrics. | Statistical methods, clustering, autoencoders, isolation forests. |

| Applications | Robust AI systems, safety-critical domains, domain adaptation. | Fraud detection, fault diagnosis, network intrusion detection. |

| Challenges | Defining boundaries of known distribution, high false positives. | Imbalanced data, evolving anomalies, labeling scarcity. |

Understanding Out-of-Distribution (OOD) Detection in AI

Out-of-Distribution (OOD) detection in AI involves identifying inputs that differ significantly from the training data distribution, ensuring model reliability in real-world applications. Unlike anomaly detection, which focuses on unusual or rare patterns within known data distributions, OOD detection specifically targets samples outside the learned domain, preventing erroneous predictions from irrelevant or novel inputs. Effective OOD detection enhances AI robustness by enabling models to recognize and properly handle unfamiliar data encountered during deployment.

Defining Anomaly Detection: Concepts and Applications

Anomaly detection identifies patterns in data that deviate significantly from established norms, signaling potential errors or rare events. It is widely applied in fraud detection, network security, and fault diagnosis to uncover irregularities that may indicate threats or system failures. Advanced machine learning algorithms enable the detection of subtle anomalies in high-dimensional data, improving accuracy and response times in critical applications.

Key Differences Between OOD and Anomaly Detection

Out-of-Distribution (OOD) detection identifies inputs that fall outside the training data distribution, ensuring AI models recognize unfamiliar classes or domains. Anomaly detection, however, focuses on spotting rare or abnormal patterns within the same data distribution, often highlighting errors or fraud. Key differences include OOD's emphasis on generalization to unseen populations versus anomaly detection's focus on deviations within known distributions.

Common Techniques for Out-of-Distribution Detection

Common techniques for out-of-distribution (OOD) detection include confidence-based methods such as maximum softmax probability and temperature-scaled softmax, which evaluate model uncertainty on inputs. Distance-based approaches like Mahalanobis distance leverage feature space metrics to differentiate in-distribution from OOD samples effectively. Generative models, including variational autoencoders and generative adversarial networks, estimate data likelihood or reconstruction error to identify distributional deviations in AI systems.

Popular Methods in Anomaly Detection

Popular methods in anomaly detection leverage statistical techniques, clustering algorithms, and deep learning models to identify deviations from normal patterns in data. Techniques such as Isolation Forest, One-Class SVM, and Autoencoders are widely used due to their effectiveness in detecting rare or unseen anomalies within complex datasets. These approaches are essential for maintaining system robustness by flagging irregularities that standard predictive models may overlook.

Challenges in Differentiating OOD and Anomalies

Distinguishing Out-of-Distribution (OOD) data from anomalies presents significant challenges in Artificial Intelligence due to overlapping characteristics and unclear boundary definitions. OOD detection requires models to identify data not represented in training distributions, while anomaly detection focuses on rare or unexpected variations within known distributions, complicating the separation process. This ambiguity leads to difficulties in model calibration and evaluation metrics, affecting the reliability of AI systems in real-world applications.

Role of OOD Detection in Model Robustness

Out-of-Distribution (OOD) detection enhances model robustness by identifying inputs that differ significantly from the training distribution, preventing erroneous or overconfident predictions. Unlike traditional anomaly detection that targets rare or abnormal patterns within the known distribution, OOD detection focuses on recognizing entirely novel or unseen data inputs. Incorporating OOD detection mechanisms strengthens AI models' reliability and safety, particularly in critical applications like autonomous driving and medical diagnostics.

Anomaly Detection Use Cases in Real-World Systems

Anomaly detection in artificial intelligence plays a critical role in diverse real-world systems such as fraud detection in financial transactions, predictive maintenance in manufacturing, and network security for identifying intrusions. These systems rely on machine learning models to detect patterns that deviate significantly from normal behavior, enabling early warnings and proactive interventions. Unlike out-of-distribution detection, which identifies inputs fundamentally different from the training data, anomaly detection focuses on spotting rare or unexpected events within known data distributions, ensuring robust operational performance.

Evaluation Metrics for OOD and Anomaly Detection

Evaluation metrics for Out-of-Distribution (OOD) detection and anomaly detection include the Area Under the Receiver Operating Characteristic curve (AUROC), Precision-Recall curve (AUPR), and False Positive Rate at 95% True Positive Rate (FPR@95TPR). AUROC measures the model's ability to distinguish between in-distribution and out-of-distribution samples, while AUPR focuses on the performance under class imbalance, which is crucial for rare anomaly detection. FPR@95TPR provides insight into the trade-off between false alarm rates and detection sensitivity, making it essential for real-world deployment scenarios.

Future Trends in AI: Bridging OOD and Anomaly Detection

Future trends in artificial intelligence emphasize the integration of Out-of-Distribution (OOD) detection and anomaly detection to improve model robustness and reliability. Advances in deep learning architectures and unsupervised learning algorithms enable more precise identification of rare or unseen data patterns, reducing false positives and enhancing decision-making. Emerging AI frameworks focus on combining domain adaptation techniques with uncertainty quantification to seamlessly bridge OOD and anomaly detection for real-world applications.

Out-of-Distribution Detection vs Anomaly Detection Infographic

techiny.com

techiny.com