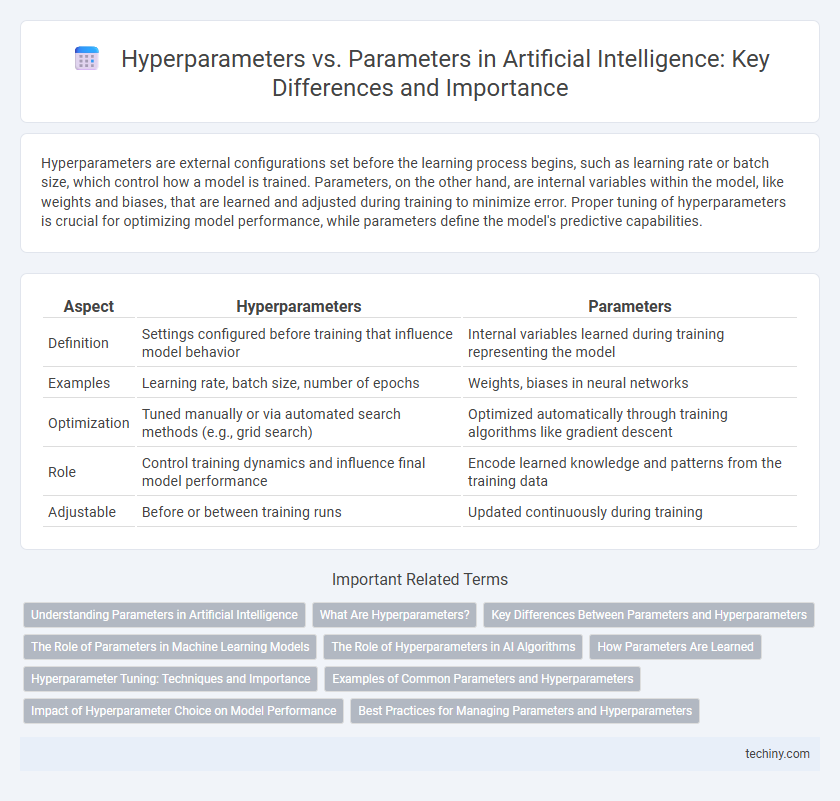

Hyperparameters are external configurations set before the learning process begins, such as learning rate or batch size, which control how a model is trained. Parameters, on the other hand, are internal variables within the model, like weights and biases, that are learned and adjusted during training to minimize error. Proper tuning of hyperparameters is crucial for optimizing model performance, while parameters define the model's predictive capabilities.
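To make the distinction concrete, here is a minimal Python sketch. It assumes a one-variable linear model trained by plain gradient descent on toy data. The learning rate and epoch count are hyperparameters chosen before training starts. The weight `w` and bias `b` are parameters that the training loop learns. The function name `train_linear` and all values are illustrative and are not taken from any library.

```python
# Hyperparameters: chosen by hand before training starts (illustrative values).
LEARNING_RATE = 0.05
EPOCHS = 500

def train_linear(xs, ys, learning_rate=LEARNING_RATE, epochs=EPOCHS):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters: initialised here, then learned from the data
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)**2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Both parameters are updated using gradients computed before either changed
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Changing `LEARNING_RATE` or `EPOCHS` changes *how* training proceeds. The values of `w` and `b` are whatever that process converges to.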

Table of Comparison

| Aspect | Hyperparameters | Parameters |
|---|---|---|
| Definition | Settings configured before training that influence model behavior | Internal variables learned during training that represent the model |
| Examples | Learning rate, batch size, number of epochs | Weights and biases in neural networks |
| Optimization | Tuned manually or via automated search methods (e.g., grid search) | Optimized automatically by training algorithms such as gradient descent |
| Role | Control training dynamics and influence final model performance | Encode knowledge and patterns learned from the training data |
| When changed | Before or between training runs | Updated continuously during training |

Understanding Parameters in Artificial Intelligence

Parameters in artificial intelligence are the internal variables of a model that are learned from training data, such as weights and biases in neural networks. These parameters directly affect the model's ability to make accurate predictions by capturing patterns and relationships within the data. Understanding the distinction between parameters and hyperparameters is crucial, as hyperparameters control the learning process, while parameters define the model's knowledge.
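As a rough illustration of how many parameters a network holds, the sketch below counts the weights and biases in a fully connected network. It assumes every layer is dense and each neuron has one bias. The helper `count_dense_parameters` and the 784-128-10 layout are hypothetical examples. Note that the layer widths themselves are hyperparameters, while the weights and biases they imply are parameters.

```python
def count_dense_parameters(layer_sizes):
    """Count learnable weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input layer first.
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        weights = fan_in * fan_out  # one weight per input-output connection
        biases = fan_out            # one bias per output neuron
        total += weights + biases
    return total

# Hypothetical architecture: 784 inputs (e.g. 28x28 pixels), 128 hidden units, 10 outputs.
print(count_dense_parameters([784, 128, 10]))  # 101770
```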

What Are Hyperparameters?

Hyperparameters in artificial intelligence are configuration settings used to control the learning process of machine learning models, such as learning rate, batch size, and number of epochs. Unlike parameters, which are learned from data during training, hyperparameters are set before training begins and influence model performance and convergence. Optimizing hyperparameters is crucial for enhancing model accuracy and generalization on unseen data.
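One common way to keep hyperparameters separate from learned state is to collect them in an immutable configuration object that is fixed before training begins. The sketch below assumes a Python dataclass with the hypothetical name `TrainingConfig`. It uses illustrative defaults and simple validity checks.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameters fixed before training; frozen so they cannot change mid-run."""
    learning_rate: float = 1e-3
    batch_size: int = 32
    epochs: int = 10

    def __post_init__(self):
        # Basic sanity checks; the allowed ranges are illustrative assumptions.
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")
        if self.batch_size < 1 or self.epochs < 1:
            raise ValueError("batch_size and epochs must be at least 1")

config = TrainingConfig(learning_rate=0.01, batch_size=64)
print(config)
```

Making the object frozen means any attempt to modify a hyperparameter during a run raises an error. This mirrors the idea that hyperparameters change only before or between runs.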

Key Differences Between Parameters and Hyperparameters

Parameters in artificial intelligence are the internal variables of a model, such as weights and biases, that are learned from training data to minimize prediction errors. Hyperparameters are external configurations set before training begins. Some, such as the learning rate, batch size, and number of epochs, control the training process. Others, such as the number of layers, define the model architecture. The key difference is that parameters are optimized during training, whereas hyperparameters must be tuned through techniques like grid search or random search to improve model performance.

The Role of Parameters in Machine Learning Models

Parameters in machine learning models are the internal coefficients or weights learned during training, and they directly determine the model's predictions and performance. Because they are adjusted to fit the training data, they allow the model to capture complex patterns and make accurate predictions. Understanding the distinction between parameters and hyperparameters is crucial: parameters are optimized through training, while hyperparameters are set before training to control the learning process.
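For some models, the parameters can be computed directly from the data rather than found by iterative training. The sketch below uses ordinary least squares for a one-variable linear model. This is a swapped-in closed-form example, and `fit_least_squares` and `predict` are hypothetical helpers. It shows learned coefficients being fitted to data and then used for prediction.

```python
def fit_least_squares(xs, ys):
    """Return (slope, intercept) minimising squared error for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); intercept passes the line through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(params, x):
    """Apply the learned parameters to a new input."""
    slope, intercept = params
    return slope * x + intercept

params = fit_least_squares([1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8])
print(params, predict(params, 5.0))
```

Here the learned parameters come out to a slope of about 1.94 and an intercept of about 0.15. Changing the training data changes these values, while nothing about the fitting procedure itself is tuned.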

The Role of Hyperparameters in AI Algorithms

Hyperparameters control the learning process and model architecture in AI algorithms, significantly influencing model performance and convergence speed. Unlike parameters, which are learned during training, hyperparameters are set before training and include learning rate, batch size, and number of layers. Proper tuning of hyperparameters is crucial for optimizing accuracy and preventing overfitting in machine learning models.
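The learning rate is a clear example of a hyperparameter that shapes convergence. The sketch below assumes the simple objective f(w) = w², whose gradient is 2w. As a result, each gradient descent step multiplies w by (1 - 2 * learning_rate). The function name `gradient_descent_on_square` is illustrative.

```python
def gradient_descent_on_square(learning_rate, steps=50, w0=1.0):
    """Minimise f(w) = w**2 (gradient 2w) and return the final w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w  # equivalent to w *= (1 - 2 * learning_rate)
    return w

for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"learning_rate={lr}: final w = {gradient_descent_on_square(lr):.3g}")
```

With these settings, the four learning rates behave differently:
- 0.01 converges slowly.
- 0.1 converges quickly.
- 0.9 converges while flipping sign each step.
- 1.1 diverges, because |1 - 2 * 1.1| is greater than 1.

The model and data are identical in every run; only the hyperparameter changes.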

How Parameters Are Learned

Parameters in artificial intelligence models are the weights and biases learned during training by minimizing a loss function with optimization algorithms such as gradient descent. Hyperparameters, in contrast, are set before training and control the learning process; examples include the learning rate, batch size, and number of layers. Parameters are adjusted to fit the training data, while hyperparameters govern how efficiently and effectively that learning happens.
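The sketch below makes one learning step explicit. It assumes a one-variable linear model with mean squared error loss; other models and losses follow the same pattern. Here w and b are the learned parameters, and the learning rate η is a hyperparameter fixed before training:

$$
\begin{aligned}
L(w, b) &= \frac{1}{n}\sum_{i=1}^{n}\left(w x_i + b - y_i\right)^2 \\
\frac{\partial L}{\partial w} &= \frac{2}{n}\sum_{i=1}^{n}\left(w x_i + b - y_i\right)x_i,
\qquad
\frac{\partial L}{\partial b} = \frac{2}{n}\sum_{i=1}^{n}\left(w x_i + b - y_i\right) \\
w &\leftarrow w - \eta\,\frac{\partial L}{\partial w},
\qquad
b \leftarrow b - \eta\,\frac{\partial L}{\partial b}
\end{aligned}
$$

Repeating this update over many batches and epochs is what learning the parameters means in practice. η only scales the step size and is not itself adjusted by these gradients.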

Hyperparameter Tuning: Techniques and Importance

Hyperparameter tuning is crucial for optimizing machine learning models, because hyperparameters control the learning process and directly affect performance metrics such as accuracy and loss. Grid search tries every combination from fixed candidate lists. Random search samples configurations from specified ranges. Bayesian optimization uses the results so far to choose promising configurations. All three explore the hyperparameter space to find well-performing configurations, which can improve generalization and reduce overfitting. Good hyperparameter choices can also speed up convergence, which makes tuning a vital step in deploying robust artificial intelligence systems.
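The sketch below compares grid search and random search on a toy problem. It assumes a one-variable linear model trained by gradient descent, with a held-out validation set used to score each configuration. The candidate values, ranges, and helper names (`train_linear`, `mse`, `evaluate`) are illustrative assumptions. Bayesian optimization is omitted because it needs a surrogate model of the validation score and is usually handled by a dedicated library.

```python
import itertools
import random

def train_linear(xs, ys, learning_rate, epochs):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def mse(params, xs, ys):
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data from y = 2x + 1; training and validation points are kept separate.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
val_x, val_y = [1.5, 2.5], [4.0, 6.0]

def evaluate(config):
    """Train with one hyperparameter configuration and score it on validation data."""
    params = train_linear(train_x, train_y, config["learning_rate"], config["epochs"])
    return mse(params, val_x, val_y)

# Grid search: try every combination from fixed candidate lists.
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100, 1000]}
grid_configs = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]
best_grid = min(grid_configs, key=evaluate)

# Random search: sample the same number of configurations from continuous ranges.
rng = random.Random(0)
random_configs = [
    {"learning_rate": 10 ** rng.uniform(-3, -1), "epochs": rng.randint(10, 1000)}
    for _ in range(len(grid_configs))
]
best_random = min(random_configs, key=evaluate)

print("grid search best:", best_grid, evaluate(best_grid))
print("random search best:", best_random, evaluate(best_random))
```

Random search samples the learning rate on a log scale because plausible values span several orders of magnitude. In both methods, each configuration trains its own parameters from scratch, and only the validation score is compared.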

Examples of Common Parameters and Hyperparameters

Parameters in artificial intelligence models include the weights and biases learned during training to minimize error, such as the coefficients in each layer of a neural network. Hyperparameters, on the other hand, are set before training and control the learning process. Examples include the learning rate, batch size, number of epochs, and architectural choices such as the number of hidden layers or neurons per layer. Tuning hyperparameters like the dropout rate or regularization strength can significantly affect model performance and generalization.
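To illustrate regularization strength as a hyperparameter, the sketch below assumes a one-variable ridge regression without an intercept, which has a closed-form solution. Larger values of the hypothetical `l2_strength` argument shrink the learned weight toward zero, even though the data follow y = 2x exactly.

```python
def fit_ridge_1d(xs, ys, l2_strength):
    """Closed-form ridge fit for y = w * x (no intercept).

    Minimises sum((w*x - y)**2) + l2_strength * w**2, whose solution is
    w = sum(x*y) / (sum(x*x) + l2_strength).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + l2_strength)

xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # exactly y = 2x
for l2_strength in (0.0, 1.0, 10.0, 100.0):
    # The hyperparameter is fixed for each fit; the weight w is what gets learned.
    print(l2_strength, round(fit_ridge_1d(xs, ys, l2_strength), 3))
```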

Impact of Hyperparameter Choice on Model Performance

Hyperparameters, unlike model parameters learned during training, directly influence the training process and model architecture, critically affecting model accuracy and generalization. Optimal hyperparameter tuning, such as adjusting learning rate, batch size, and number of layers, can significantly enhance performance metrics like precision, recall, and F1 score. Ineffective hyperparameter choices often lead to overfitting, underfitting, or prolonged training times, underscoring their pivotal role in efficient and robust AI model development.

Best Practices for Managing Parameters and Hyperparameters

Effective management of parameters and hyperparameters in artificial intelligence models requires systematic tuning and validation to improve model performance and prevent overfitting. Parameters are learned during training by optimization algorithms such as gradient descent. Hyperparameters, by contrast, must be chosen carefully using techniques such as grid search, random search, or Bayesian optimization. Cross-validation and monitoring of validation metrics such as accuracy or loss help confirm that the chosen hyperparameters, and the parameters learned under them, generalize beyond the training data.
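A minimal k-fold cross-validation splitter is sketched below, assuming contiguous, unshuffled folds. `k_fold_indices` and `cross_validate` are hypothetical helpers. `score_fn` stands in for whatever train-then-evaluate routine a project uses. Libraries such as scikit-learn provide equivalent functionality (for example `sklearn.model_selection.KFold`).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation (no shuffling)."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

def cross_validate(score_fn, n_samples, k=5):
    """Average score_fn(train_idx, val_idx) over all k folds."""
    scores = [score_fn(tr, va) for tr, va in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

for train_idx, val_idx in k_fold_indices(7, 3):
    print("train:", train_idx, "validate:", val_idx)
```

To compare hyperparameter settings, one would call `cross_validate` once per candidate configuration and keep the configuration with the best average score. Each fold's training run learns its own parameters.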

Hyperparameters vs parameters Infographic

techiny.com