Policy Gradient methods optimize the policy directly by adjusting parameters to maximize expected rewards, enabling effective handling of continuous action spaces and stochastic policies. In contrast, Q-Learning learns the value of action-state pairs, making it suitable for discrete action spaces but often struggling with large or continuous domains. Choosing between Policy Gradient and Q-Learning depends on the complexity of the environment and the nature of the action space.

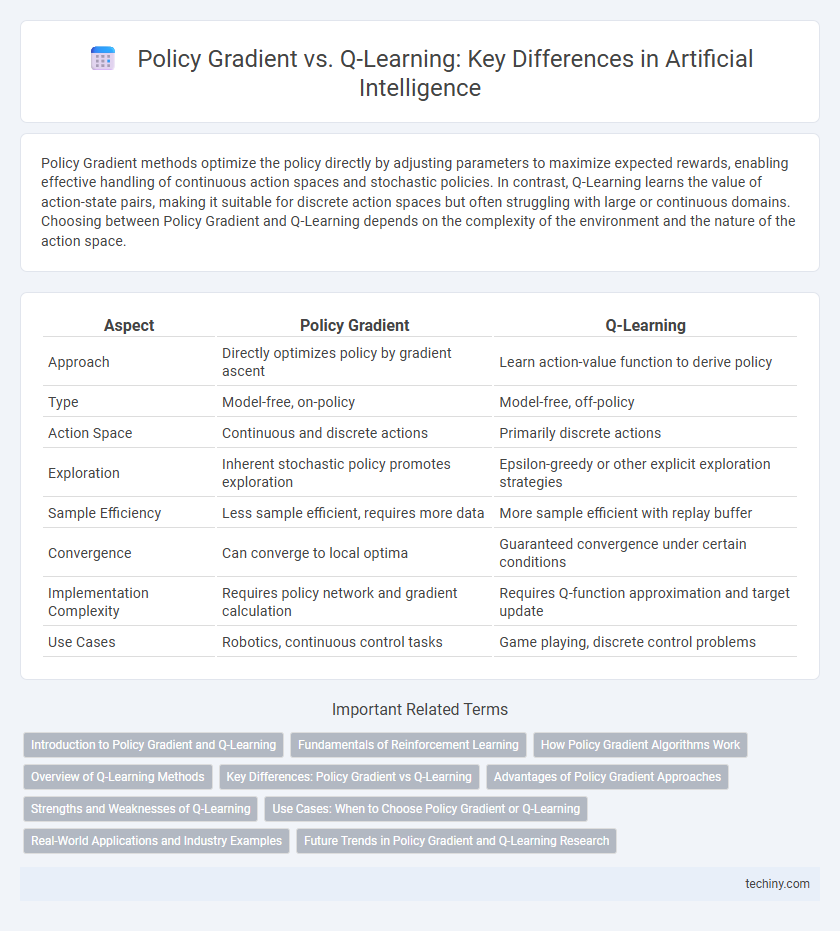

Table of Comparison

| Aspect | Policy Gradient | Q-Learning |

|---|---|---|

| Approach | Directly optimizes policy by gradient ascent | Learn action-value function to derive policy |

| Type | Model-free, on-policy | Model-free, off-policy |

| Action Space | Continuous and discrete actions | Primarily discrete actions |

| Exploration | Inherent stochastic policy promotes exploration | Epsilon-greedy or other explicit exploration strategies |

| Sample Efficiency | Less sample efficient, requires more data | More sample efficient with replay buffer |

| Convergence | Can converge to local optima | Guaranteed convergence under certain conditions |

| Implementation Complexity | Requires policy network and gradient calculation | Requires Q-function approximation and target update |

| Use Cases | Robotics, continuous control tasks | Game playing, discrete control problems |

Introduction to Policy Gradient and Q-Learning

Policy Gradient methods optimize a parameterized policy by directly maximizing expected rewards through gradient ascent, effectively handling continuous and high-dimensional action spaces. Q-Learning estimates the optimal action-value function by iteratively updating Q-values based on the Bellman equation, enabling effective learning in discrete action environments. Both approaches form foundational strategies in reinforcement learning, with Policy Gradient excelling in stochastic policies and Q-Learning excelling in value-based decision-making.

Fundamentals of Reinforcement Learning

Policy Gradient methods directly optimize the policy by adjusting parameters to maximize expected rewards, enabling continuous action spaces and stochastic policies. Q-Learning, a value-based method, estimates the optimal action-value function to inform policy decisions, typically operating in discrete action environments. Both approaches are fundamental to reinforcement learning, addressing the trade-off between exploration and exploitation through different optimization frameworks.

How Policy Gradient Algorithms Work

Policy Gradient algorithms optimize the policy directly by adjusting the parameters to maximize expected rewards using gradient ascent on the objective function. These methods compute the gradient of the expected return with respect to policy parameters, enabling continuous action spaces and stochastic policies. Unlike Q-Learning, which estimates value functions, Policy Gradient algorithms learn optimal policies without requiring a value function approximation.

Overview of Q-Learning Methods

Q-Learning is a model-free reinforcement learning algorithm that estimates the optimal action-value function by iteratively updating Q-values based on observed rewards and estimated future returns. It uses the Bellman equation to update the Q-values, enabling an agent to learn optimal policies without requiring a model of the environment. The algorithm balances exploration and exploitation by employing strategies like epsilon-greedy, making it robust for solving discrete action-space problems.

Key Differences: Policy Gradient vs Q-Learning

Policy Gradient methods optimize the policy directly by maximizing expected rewards through gradient ascent on policy parameters, enabling continuous action spaces and stochastic policies. Q-Learning estimates the optimal action-value function (Q-function) to derive deterministic policies, relying on the Bellman equation for discrete action spaces. Unlike Q-Learning's value-based approach, Policy Gradient's policy-based method offers better performance in high-dimensional or continuous action environments, but often requires more samples to converge.

Advantages of Policy Gradient Approaches

Policy Gradient approaches excel in handling continuous action spaces and stochastic policies, enabling more natural exploration and improved convergence in complex environments. They directly optimize the policy by maximizing expected rewards, which can lead to more stable and efficient learning compared to value-based methods like Q-Learning. This advantage makes Policy Gradient methods especially effective in solving high-dimensional and partially observable reinforcement learning tasks.

Strengths and Weaknesses of Q-Learning

Q-Learning excels in model-free reinforcement learning by directly estimating the optimal action-value function, which enables efficient learning in discrete and low-dimensional state spaces. However, it struggles with scalability and continuous action spaces due to the need for maintaining and updating a Q-table, leading to computational inefficiency and convergence issues. Its exploration strategy can be suboptimal, causing slower adaptation in complex environments compared to policy gradient methods.

Use Cases: When to Choose Policy Gradient or Q-Learning

Policy Gradient algorithms excel in continuous action spaces and high-dimensional environments, making them ideal for robotics control and game-playing applications where actions are not discrete. Q-Learning is more effective in discrete action spaces with simpler state representations, such as grid-world navigation and classic control problems. Choosing between these methods depends on the complexity of the environment and whether the action space is continuous or discrete.

Real-World Applications and Industry Examples

Policy Gradient methods excel in continuous action spaces and have been effectively applied in robotics for dynamic motion control and autonomous vehicle navigation. Q-Learning, with its discrete state-action framework, is widely used in recommendation systems, such as personalized content delivery in streaming platforms and inventory management in retail. Industries like finance leverage both approaches; Policy Gradient optimizes portfolio management via continuous decision-making, while Q-Learning supports credit scoring and fraud detection through discrete classification tasks.

Future Trends in Policy Gradient and Q-Learning Research

Future trends in policy gradient research emphasize improving sample efficiency and stability through advanced algorithms like Proximal Policy Optimization (PPO) and Trust Region Policy Optimization (TRPO). Q-learning advancements focus on leveraging deep neural networks for enhanced function approximation, exemplified by Deep Q-Networks (DQN) and its variants, addressing challenges such as overestimation bias and exploration. Hybrid approaches integrating policy gradient methods with Q-learning aim to balance bias-variance trade-offs, driving the development of more robust and scalable reinforcement learning systems.

Policy Gradient vs Q-Learning Infographic

techiny.com

techiny.com