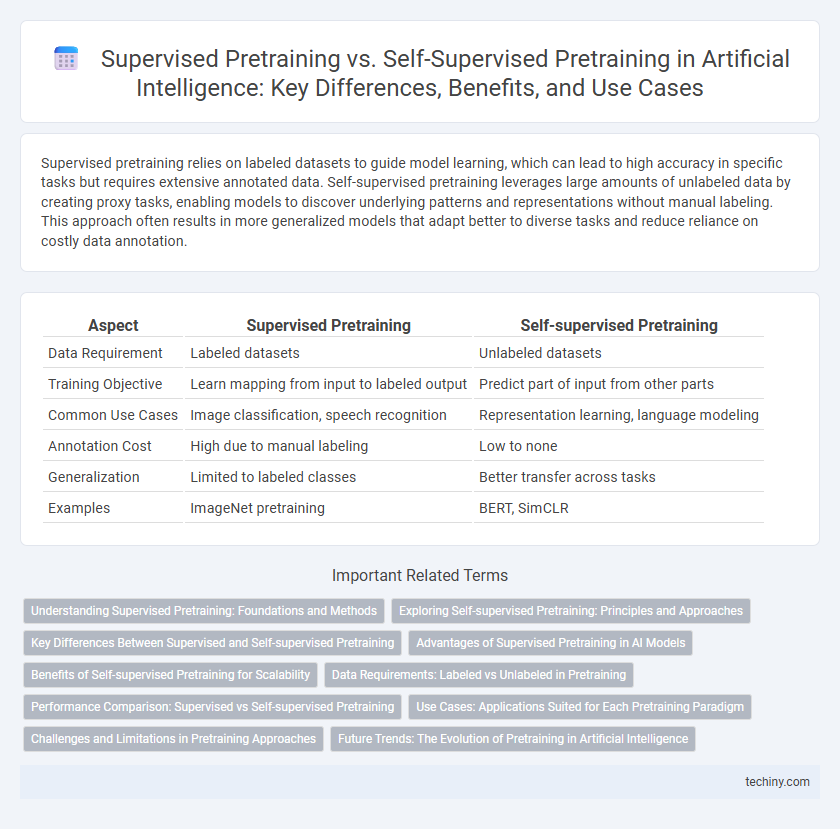

Supervised pretraining relies on labeled datasets to guide model learning, which can lead to high accuracy in specific tasks but requires extensive annotated data. Self-supervised pretraining leverages large amounts of unlabeled data by creating proxy tasks, enabling models to discover underlying patterns and representations without manual labeling. This approach often results in more generalized models that adapt better to diverse tasks and reduce reliance on costly data annotation.

Table of Comparison

| Aspect | Supervised Pretraining | Self-supervised Pretraining |

|---|---|---|

| Data Requirement | Labeled datasets | Unlabeled datasets |

| Training Objective | Learn mapping from input to labeled output | Predict part of input from other parts |

| Common Use Cases | Image classification, speech recognition | Representation learning, language modeling |

| Annotation Cost | High due to manual labeling | Low to none |

| Generalization | Limited to labeled classes | Better transfer across tasks |

| Examples | ImageNet pretraining | BERT, SimCLR |

Understanding Supervised Pretraining: Foundations and Methods

Supervised pretraining relies on labeled datasets to teach models explicit input-output mappings, leveraging annotated examples for tasks like image classification and natural language processing. Techniques such as transfer learning use large labeled corpora to develop foundational representations that improve downstream task performance. This method demands extensive labeled data but provides strong guidance that enhances model accuracy and generalization.

Exploring Self-supervised Pretraining: Principles and Approaches

Self-supervised pretraining leverages vast amounts of unlabeled data by creating proxy tasks that generate labels from the data itself, enabling models to learn meaningful representations without human annotation. Techniques such as contrastive learning, masked language modeling, and predictive coding allow AI systems to capture underlying data patterns, improving performance across diverse downstream tasks. This approach significantly reduces reliance on labeled datasets and enhances generalization by exploiting intrinsic data structures in domains like computer vision and natural language processing.

Key Differences Between Supervised and Self-supervised Pretraining

Supervised pretraining relies on labeled datasets where models learn to map inputs to explicit output labels, enabling precise task-specific feature extraction. Self-supervised pretraining leverages unlabeled data by creating surrogate tasks that generate intrinsic supervisory signals, enhancing model generalization and reducing dependency on costly annotations. Key differences include the requirement of labeled data in supervised approaches versus the autonomous learning mechanisms in self-supervised techniques, impacting scalability and applicability across diverse AI domains.

Advantages of Supervised Pretraining in AI Models

Supervised pretraining in AI models leverages large labeled datasets, enabling precise learning of task-specific features that enhance model accuracy and generalization. This method facilitates faster convergence and improved performance on downstream tasks by providing clear, annotated examples during training. The availability of high-quality labels drives effective feature representation, resulting in robust and reliable AI systems.

Benefits of Self-supervised Pretraining for Scalability

Self-supervised pretraining enables models to leverage vast amounts of unlabeled data, significantly enhancing scalability compared to supervised pretraining, which relies heavily on labeled datasets that are costly and time-consuming to produce. This approach facilitates the creation of more generalizable representations, reducing dependency on domain-specific annotations and enabling efficient adaptation across diverse tasks. Consequently, self-supervised pretraining accelerates the development of robust AI systems at scale by maximizing data utilization and minimizing manual labeling efforts.

Data Requirements: Labeled vs Unlabeled in Pretraining

Supervised pretraining relies on large volumes of labeled datasets, which demand extensive manual annotation to guide model learning effectively. Self-supervised pretraining leverages vast amounts of unlabeled data by generating surrogate tasks, significantly reducing the dependence on costly and time-consuming data labeling processes. This fundamental difference in data requirements impacts scalability and applicability across diverse AI domains, where access to labeled data is limited.

Performance Comparison: Supervised vs Self-supervised Pretraining

Supervised pretraining relies on labeled datasets, often achieving higher accuracy on specific downstream tasks due to explicit guidance during learning. Self-supervised pretraining leverages unlabeled data through proxy tasks, enabling models to learn more generalized representations and improving performance on diverse or less-defined tasks. Recent benchmarks demonstrate self-supervised models closing the performance gap with supervised counterparts, especially in scenarios with limited labeled data and in transfer learning applications.

Use Cases: Applications Suited for Each Pretraining Paradigm

Supervised pretraining excels in applications requiring precise labeled data, such as image classification and natural language processing tasks like sentiment analysis, where explicit annotations guide model learning. Self-supervised pretraining is ideal for scenarios with large unlabeled datasets, including speech recognition, anomaly detection, and language modeling, leveraging innate data structures to learn representations without extensive labeling. Selecting between paradigms depends on data availability and task specificity, with supervised methods providing accuracy for well-defined labels and self-supervised approaches offering scalability and adaptability across diverse domains.

Challenges and Limitations in Pretraining Approaches

Supervised pretraining requires vast labeled datasets, posing challenges in data collection and annotation costs that limit scalability. Self-supervised pretraining mitigates labeling dependency but faces difficulties in designing effective pretext tasks and ensuring representation quality across diverse domains. Both approaches struggle with domain adaptation issues, where pretrained models may underperform on tasks with distributions differing significantly from training data.

Future Trends: The Evolution of Pretraining in Artificial Intelligence

Future trends in Artificial Intelligence highlight a shift from traditional supervised pretraining, which relies heavily on labeled datasets, towards self-supervised pretraining that leverages vast amounts of unlabeled data to improve model generalization and robustness. Emerging techniques in self-supervised learning, such as contrastive learning and masked data modeling, demonstrate significant potential for scaling AI systems more efficiently across diverse applications. The evolution of pretraining methodologies is set to accelerate innovation by reducing dependency on costly annotations and enhancing the ability of AI models to adapt to complex, real-world environments.

Supervised Pretraining vs Self-supervised Pretraining Infographic

techiny.com

techiny.com