Feedforward neural networks process data in a single direction from input to output, enabling efficient pattern recognition and classification without cycles or loops. In contrast, feedback neural networks incorporate cycles by allowing signals to travel backward, which enhances their ability to model temporal sequences and dynamic behaviors. Understanding the distinction between these architectures is crucial for selecting appropriate models in tasks ranging from image recognition to time-series prediction.

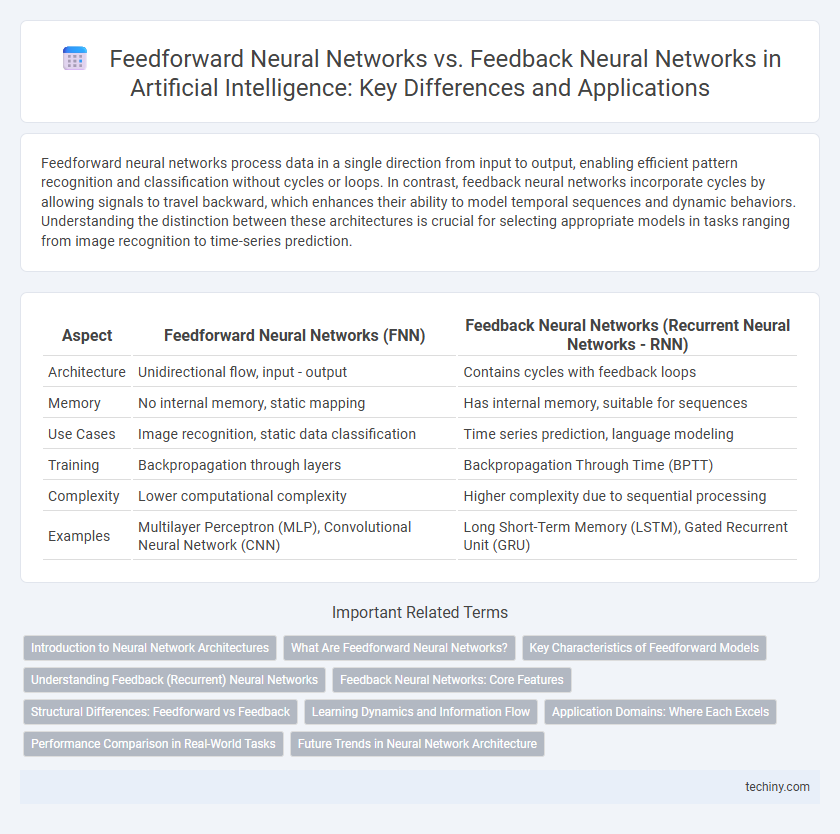

Table of Comparison

| Aspect | Feedforward Neural Networks (FNN) | Feedback Neural Networks (Recurrent Neural Networks, RNN) |
|---|---|---|
| Architecture | Unidirectional flow, input to output | Contains cycles with feedback loops |
| Memory | No internal memory, static mapping | Has internal memory, suitable for sequences |
| Use Cases | Image recognition, static data classification | Time series prediction, language modeling |
| Training | Backpropagation through layers | Backpropagation Through Time (BPTT) |
| Complexity | Lower computational complexity | Higher complexity due to sequential processing |
| Examples | Multilayer Perceptron (MLP), Convolutional Neural Network (CNN) | Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU) |

Introduction to Neural Network Architectures

Feedforward neural networks process data in one direction, from the input layer to the output layer, which keeps computation simple and fast in tasks like image recognition. Feedback neural networks incorporate loops that allow outputs to influence earlier layers, supporting dynamic learning in time-dependent applications such as speech recognition. These two architectures are foundational models that shape what a neural network can do and which applications it suits.

What Are Feedforward Neural Networks?

Feedforward Neural Networks (FNNs) are a type of artificial neural network in which connections between nodes do not form cycles, so information moves in only one direction, from the input layer to the output layer. These networks excel in pattern recognition and classification tasks because of their straightforward architecture and their ability to approximate complex functions. Unlike feedback neural networks, FNNs lack internal memory, which makes them less suited to sequential data or time-dependent tasks.
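
The one-directional flow described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the 2-3-1 layer sizes, the hand-picked weights in `LAYERS`, and the choice of `tanh` for the hidden layer are all assumptions made for the example.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer; weights[j] holds the input weights of output unit j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def feedforward(x, layers):
    """Apply each (weights, biases) pair once, in order; nothing is fed back."""
    activation = x
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:
            # Hidden layers use tanh; the output layer stays linear (an assumption).
            activation = [math.tanh(v) for v in activation]
    return activation

# Hypothetical 2-3-1 network with hand-picked weights, for illustration only.
LAYERS = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

y1 = feedforward([1.0, 2.0], LAYERS)
feedforward([0.0, 0.0], LAYERS)        # an unrelated call in between
y2 = feedforward([1.0, 2.0], LAYERS)   # same input, same output: no state is kept
print(y1, y2)
```

Because the function keeps no state between calls, the output for a given input does not depend on what the network saw earlier, which is the "no internal memory" property in practice.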

Key Characteristics of Feedforward Models

Feedforward neural networks are characterized by unidirectional data flow from input to output layers without cycles, which allows straightforward training through backpropagation. These models excel in tasks involving static pattern recognition, such as image classification and simple function approximation. Their lack of internal memory and feedback loops distinguishes them from recurrent architectures: training is typically simpler, but they cannot natively process temporal sequences.
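
To make the backpropagation step concrete, here is a small sketch for a network with a single hidden unit. The network shape, the squared-error loss, and the constants `W1`, `W2`, `X`, and `TARGET` are assumptions chosen for the example; the finite-difference comparison at the end is only a sanity check on the hand-derived gradients.

```python
import math

def forward(w1, w2, x):
    h = math.tanh(w1 * x)   # hidden activation
    y = w2 * h              # linear output
    return h, y

def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def backprop(w1, w2, x, target):
    """Return (dL/dw1, dL/dw2) by applying the chain rule from output to input."""
    h, y = forward(w1, w2, x)
    dy = y - target              # dL/dy for the squared-error loss
    dw2 = dy * h                 # output-layer gradient
    dh = dy * w2                 # error passed back to the hidden unit
    dz = dh * (1.0 - h * h)      # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    dw1 = dz * x                 # hidden-layer gradient
    return dw1, dw2

def numeric_grad(f, value, eps=1e-6):
    """Central finite difference, used here only to check the analytic gradients."""
    return (f(value + eps) - f(value - eps)) / (2 * eps)

# Hypothetical parameter and data values.
W1, W2, X, TARGET = 0.7, -1.3, 0.5, 0.25
g1, g2 = backprop(W1, W2, X, TARGET)
n1 = numeric_grad(lambda w: loss(w, W2, X, TARGET), W1)
n2 = numeric_grad(lambda w: loss(W1, w, X, TARGET), W2)
print(g1, n1)
print(g2, n2)
```

Each layer is visited exactly once on the way back, so the cost of the backward pass grows with the number of layers rather than with any notion of time.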

Understanding Feedback (Recurrent) Neural Networks

Feedback Neural Networks, also known as Recurrent Neural Networks (RNNs), are designed to process sequential data by maintaining a hidden state that captures information from previous inputs, enabling dynamic temporal behavior. Unlike Feedforward Neural Networks, which operate in a unidirectional flow from input to output, RNNs incorporate cycles allowing feedback loops that model dependencies over time. This architecture makes RNNs particularly effective for tasks like language modeling, time series prediction, and speech recognition where context and order matter.
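
The role of the hidden state can be illustrated with a scalar recurrent unit. This is a minimal sketch: `rnn_step`, `run_rnn`, and the default weights are hypothetical names and values chosen for the example, and real RNNs use weight matrices rather than single numbers.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the input and the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0, h0=0.0):
    """Feed the sequence one element at a time, carrying the hidden state forward."""
    h = h0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# The same values in a different order lead to a different final state.
forward_states = run_rnn([1.0, 0.0, -1.0])
reversed_states = run_rnn([-1.0, 0.0, 1.0])
print(forward_states[-1], reversed_states[-1])
```

The final state differs between the two runs even though both sequences contain the same values, which is the sense in which order and context matter to a recurrent model.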

Feedback Neural Networks: Core Features

Feedback Neural Networks, also known as recurrent neural networks (RNNs), possess core features such as the presence of cycles allowing information to persist across time steps, enabling the modeling of sequential and temporal data. These networks maintain internal states or memory by feeding outputs back into the network, which facilitates context-aware processing essential for tasks like language modeling and time series prediction. The recursive structure in feedback neural networks supports dynamic behavior and learning from past information, distinguishing them from feedforward architectures.

Structural Differences: Feedforward vs Feedback

Feedforward neural networks consist of layers where data moves strictly in one direction, from input to output, without cycles or loops, enabling efficient and straightforward training. Feedback neural networks incorporate recurrent connections, allowing outputs to be fed back into previous layers, which facilitates handling temporal sequences and dynamic states. Structural differences influence their application domains, with feedforward architectures excelling in static pattern recognition and feedback networks in tasks requiring memory and context retention.
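
In equation form, the structural difference comes down to whether a layer's input includes its own earlier output. The notation below is assumed for illustration and is not tied to a specific model: $\phi$ is an elementwise activation, the $W$ terms are weight matrices, $b$ and $c$ are biases, and $h_0$ is an initial state.

```latex
\begin{aligned}
\text{Feedforward:}\quad h &= \phi(W_1 x + b_1), \qquad y = W_2 h + b_2 \\
\text{Feedback:}\quad h_t &= \phi(W_x x_t + W_h h_{t-1} + b), \qquad y_t = W_y h_t + c
\end{aligned}
```

The term $W_h h_{t-1}$ is the feedback loop. Dropping it reduces the second line to the first, applied independently at each time step.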

Learning Dynamics and Information Flow

Feedforward neural networks process data in a unidirectional flow from input to output, facilitating straightforward learning dynamics through layer-by-layer error propagation during backpropagation. Feedback neural networks incorporate recurrent connections, enabling information to circulate and influence subsequent activations, which enhances temporal learning and memory retention in tasks involving sequential data. The iterative information flow in feedback networks supports complex dynamic behaviors and adaptive learning, contrasting with the static processing in feedforward architectures.
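
For the recurrent case, error propagation has to walk back through the time steps, which is what Backpropagation Through Time (BPTT) does. The sketch below uses a scalar recurrent unit with a loss on the final state only; the function names, the loss, and the constants are assumptions for the example, and the finite-difference values serve only as a check.

```python
import math

def forward(w_x, w_h, xs):
    """Unroll h_t = tanh(w_x * x_t + w_h * h_{t-1}) with h_0 = 0; return all states."""
    hs = [0.0]
    for x_t in xs:
        hs.append(math.tanh(w_x * x_t + w_h * hs[-1]))
    return hs

def loss(w_x, w_h, xs, target):
    return 0.5 * (forward(w_x, w_h, xs)[-1] - target) ** 2

def bptt(w_x, w_h, xs, target):
    """Gradients of the final-step loss with respect to the shared weights."""
    hs = forward(w_x, w_h, xs)
    dh = hs[-1] - target                 # dL/dh_T
    dw_x = dw_h = 0.0
    for t in range(len(xs), 0, -1):      # walk backward through time
        dz = dh * (1.0 - hs[t] ** 2)     # through tanh at step t
        dw_x += dz * xs[t - 1]           # the same w_x is reused at every step
        dw_h += dz * hs[t - 1]           # the same w_h is reused at every step
        dh = dz * w_h                    # error sent to the previous state
    return dw_x, dw_h

def numeric_grad(f, value, eps=1e-6):
    """Central finite difference, used here only to check the analytic gradients."""
    return (f(value + eps) - f(value - eps)) / (2 * eps)

# Hypothetical sequence, target, and weights.
XS, TARGET, W_X, W_H = [0.5, -1.0, 0.25], 0.3, 0.9, 0.6
g_x, g_h = bptt(W_X, W_H, XS, TARGET)
n_x = numeric_grad(lambda w: loss(w, W_H, XS, TARGET), W_X)
n_h = numeric_grad(lambda w: loss(W_X, w, XS, TARGET), W_H)
print(g_x, n_x)
print(g_h, n_h)
```

Because the weights are shared across steps, their gradients accumulate over the whole sequence, and the backward loop runs once per time step. That sequential dependency is one reason recurrent training tends to cost more than a single layer-by-layer pass.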

Application Domains: Where Each Excels

Feedforward Neural Networks excel in applications requiring straightforward pattern recognition, such as image classification, static data classification, and medical diagnosis from fixed-size inputs, because they map inputs directly to outputs without cycles. Feedback Neural Networks, also known as Recurrent Neural Networks (RNNs), are well suited to sequential data processing tasks including natural language processing, time series prediction, speech recognition, and speech synthesis because they maintain internal memory states that capture temporal dependencies. The choice between them follows the needs of the domain: feedforward models for static data analysis and feedback models for dynamic, time-dependent data.

Performance Comparison in Real-World Tasks

Feedforward neural networks excel in tasks requiring static pattern recognition and fast inference, such as image classification, because they process data in a single layer-by-layer pass. Feedback neural networks, including recurrent architectures, tend to perform better on dynamic and sequential tasks like natural language processing and time-series prediction because they retain contextual information over time. In fixed-input scenarios, feedforward models often reach strong accuracy at lower computational cost, whereas feedback networks are better suited to temporal dependencies at the price of higher complexity and longer training time.

Future Trends in Neural Network Architecture

Feedforward Neural Networks continue evolving with improvements in depth and activation functions to enhance pattern recognition and computational efficiency. Feedback Neural Networks, including recurrent architectures, are advancing with memory augmentation techniques like attention mechanisms to better handle sequential data and temporal dependencies. Future trends emphasize hybrid models combining feedforward and feedback pathways to leverage the strengths of both for adaptive and context-aware AI applications.

Feedforward Neural Networks vs Feedback Neural Networks Infographic

techiny.com
