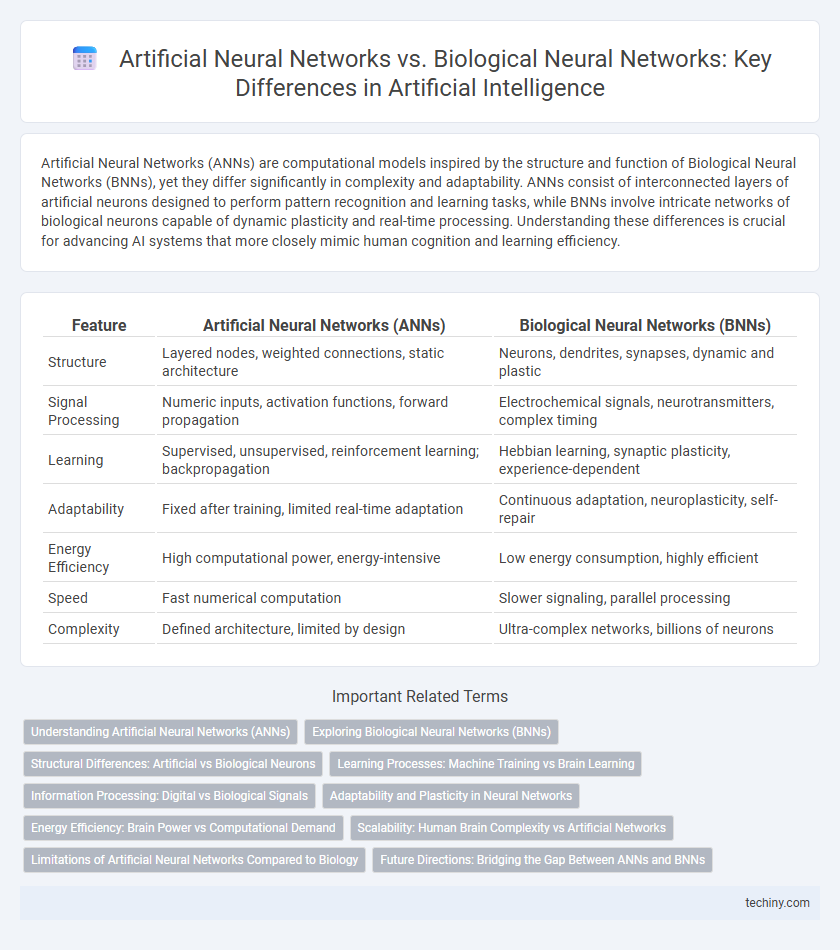

Artificial Neural Networks (ANNs) are computational models inspired by the structure and function of Biological Neural Networks (BNNs), yet they differ significantly in complexity and adaptability. ANNs consist of interconnected layers of artificial neurons designed to perform pattern recognition and learning tasks, while BNNs involve intricate networks of biological neurons capable of dynamic plasticity and real-time processing. Understanding these differences is crucial for advancing AI systems that more closely mimic human cognition and learning efficiency.

Table of Comparison

| Feature | Artificial Neural Networks (ANNs) | Biological Neural Networks (BNNs) |
|---|---|---|
| Structure | Layered nodes, weighted connections, static architecture | Neurons, dendrites, synapses, dynamic and plastic |
| Signal Processing | Numeric inputs, activation functions, forward propagation | Electrochemical signals, neurotransmitters, complex timing |
| Learning | Supervised, unsupervised, reinforcement learning; backpropagation | Hebbian learning, synaptic plasticity, experience-dependent |
| Adaptability | Fixed after training, limited real-time adaptation | Continuous adaptation, neuroplasticity, self-repair |
| Energy Efficiency | High computational power, energy-intensive | Low energy consumption, highly efficient |
| Speed | Fast numerical computation | Slower signaling, parallel processing |
| Complexity | Defined architecture, limited by design | Ultra-complex networks, billions of neurons |

Understanding Artificial Neural Networks (ANNs)

Artificial Neural Networks (ANNs) are computational models inspired by the structure and functioning of Biological Neural Networks but operate through layers of interconnected nodes called neurons. ANNs process input data by adjusting weighted connections during training, enabling pattern recognition, classification, and decision-making tasks in fields like computer vision and natural language processing. Unlike biological networks, ANNs lack the complexity of neurochemical interactions but excel in scalability, reproducibility, and efficiency across diverse artificial intelligence applications.

Exploring Biological Neural Networks (BNNs)

Biological Neural Networks (BNNs) are highly complex structures composed of billions of interconnected neurons, capable of adaptive learning and plasticity that currently surpass Artificial Neural Networks (ANNs) in flexibility and efficiency. BNNs use chemical and electrical signaling to process information, supporting cognitive functions such as memory formation, sensory perception, and motor control. Understanding synaptic mechanisms, neural coding, and network dynamics in BNNs provides critical insights for advancing the design and capabilities of ANNs in artificial intelligence applications.

Structural Differences: Artificial vs Biological Neurons

Artificial neural networks consist of simplified neurons modeled as mathematical functions with inputs, weights, biases, and activation functions, lacking the complex biological structures like dendrites, axons, and synapses found in biological neurons. Biological neurons exhibit highly intricate morphologies with thousands of synaptic connections enabling dynamic chemical signaling and plasticity, features absent in artificial neurons. These structural differences impact processing capabilities, with biological neurons supporting adaptive learning through biochemical processes, while artificial neurons rely on predefined algorithms for weight adjustment.
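The artificial neuron described above (inputs, weights, a bias, and an activation function) can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the choice of the sigmoid activation and the example numbers are assumptions made only for demonstration.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Compute one artificial neuron: weighted sum of inputs plus bias,
    passed through an activation function."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Illustrative values only (not taken from any real model).
output = neuron([0.5, -1.0, 2.0], [0.4, 0.3, -0.2], bias=0.1)
print(f"neuron output: {output:.4f}")
```

Everything a biological neuron does through dendrites, axons, and synapses is collapsed here into a single weighted sum and one function call, which is the simplification the comparison refers to.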

Learning Processes: Machine Training vs Brain Learning

Artificial Neural Networks (ANNs) are trained in supervised or unsupervised settings, typically using backpropagation to compute error gradients and gradient descent to adjust weights iteratively over large datasets, enabling pattern recognition and decision-making. In contrast, biological neural networks in the human brain learn through synaptic plasticity, a dynamic process involving long-term potentiation, neurochemical changes, and experience-driven adaptation. While ANNs require explicit training cycles and predefined architectures, brain learning is continuous, self-organizing, and influenced by emotions and environmental feedback.
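To make the idea of iterative weight adjustment concrete, here is a small sketch of gradient descent on a single sigmoid neuron learning the logical OR function. With only one neuron, backpropagation reduces to a single chain-rule step. The dataset, learning rate, and epoch count are illustrative assumptions, and real networks apply the same principle across many layers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weights, bias):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Toy supervised dataset: logical OR (inputs, target).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5  # assumed value for illustration


def mean_loss():
    """Average cross-entropy loss over the dataset."""
    total = 0.0
    for x, y in data:
        p = predict(x, weights, bias)
        p = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(data)


loss_before = mean_loss()

for epoch in range(2000):
    for x, target in data:
        pred = predict(x, weights, bias)
        # For a sigmoid output with cross-entropy loss, the gradient of the
        # loss with respect to the pre-activation is simply (pred - target).
        error = pred - target
        weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
        bias -= learning_rate * error

loss_after = mean_loss()
predictions = [round(predict(x, weights, bias)) for x, _ in data]
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
print("predictions:", predictions)
```

Note the explicit training loop and the externally supplied target for every example: this is the "explicit training cycle" that brain learning does not require.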

Information Processing: Digital vs Biological Signals

Artificial Neural Networks (ANNs) run on digital hardware, representing inputs, weights, and activations as discrete numeric values (typically floating-point numbers) and combining them through layers of interconnected nodes for precise, repeatable pattern recognition. In contrast, Biological Neural Networks handle analog signals via complex electrochemical processes, allowing adaptive and parallel information processing with inherent noise tolerance. This fundamental difference influences how each system encodes, transmits, and interprets information, impacting learning dynamics and processing speed.
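One way to get a feel for timing-based biological signaling is the leaky integrate-and-fire model, a common textbook simplification of a spiking neuron. It is not a faithful model of real electrochemistry, and it is not something the comparison above prescribes. The sketch below uses assumed, unitless parameters (`tau`, `v_threshold`, and so on) chosen only to show that information is carried by when spikes occur rather than by a single output number.

```python
def simulate_lif(input_current, steps=100, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with constant input.

    Returns the list of time steps at which the neuron spiked.
    All parameters are unitless, illustrative assumptions.
    """
    v = v_rest
    spike_times = []
    for t in range(steps):
        # Euler step: the potential leaks toward rest and is driven by input.
        v += dt * (-(v - v_rest) + input_current) / tau
        if v >= v_threshold:
            spike_times.append(t)  # emit a spike
            v = v_reset            # then reset the membrane potential
    return spike_times


weak = simulate_lif(0.5)    # drive too weak to ever reach threshold
strong = simulate_lif(2.0)  # stronger drive produces regular spikes

print("weak input spikes:", len(weak))
print("strong input spikes:", len(strong), "first at step", strong[0])
```

A standard artificial neuron would map each of these two inputs to one number in a single step, whereas here the stronger input is encoded as a higher spike rate over time.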

Adaptability and Plasticity in Neural Networks

Artificial Neural Networks (ANNs) exhibit adaptability through adjustable weights and learning algorithms such as backpropagation, allowing them to optimize performance on specific tasks. Biological Neural Networks demonstrate superior plasticity, featuring synaptic modifications, neurogenesis, and structural reorganization that enable continuous learning and memory formation. The inherent plasticity of biological systems surpasses current ANN capabilities, inspiring ongoing research in neuromorphic computing and adaptive learning models.
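The Hebbian learning listed in the comparison table is often summarized as "neurons that fire together wire together". A minimal sketch of the basic rule follows, assuming the simplest form (weight change proportional to the product of pre- and post-synaptic activity) and illustrative activity values. Real synaptic plasticity involves far richer mechanisms than this.

```python
def hebbian_update(weights, pre, post, rate=0.1):
    """Basic Hebbian rule: strengthen each connection in proportion to the
    product of pre-synaptic activity and post-synaptic activity."""
    return [w + rate * x * post for w, x in zip(weights, pre)]


weights = [0.0, 0.0, 0.0]
pre_activity = [1.0, 0.0, 1.0]  # inputs 0 and 2 are active, input 1 is silent
post_activity = 1.0             # the receiving neuron fires at the same time

# Repeated co-activation strengthens only the active connections.
for _ in range(5):
    weights = hebbian_update(weights, pre_activity, post_activity)

print("weights after co-activation:", weights)
```

Unlike backpropagation, the update uses only locally available activity and no error signal or target, which is one reason such rules are of interest in neuromorphic computing research.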

Energy Efficiency: Brain Power vs Computational Demand

Large Artificial Neural Networks (ANNs) typically consume far more energy than Biological Neural Networks (BNNs) because of their extensive computational demand and reliance on electronic hardware. The human brain runs on roughly 20 watts of power, using highly efficient synaptic signaling and parallel processing, whereas training and serving large-scale ANNs can draw megawatts of electricity across data-center hardware. Energy efficiency in BNNs remains a critical benchmark for developing next-generation AI architectures aimed at reducing the carbon footprint of machine learning models.
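A quick back-of-the-envelope calculation shows the scale of this gap. The 20-watt figure comes from the text above. The 1-megawatt cluster is a hypothetical round number chosen only to represent the "megawatts" order of magnitude, not a measurement of any real system.

```python
BRAIN_POWER_W = 20.0           # approximate figure quoted in the text
CLUSTER_POWER_W = 1_000_000.0  # hypothetical 1 MW training cluster (assumption)
HOURS = 24.0


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy in kilowatt-hours for a constant power draw."""
    return power_watts * hours / 1000.0


brain_kwh = energy_kwh(BRAIN_POWER_W, HOURS)
cluster_kwh = energy_kwh(CLUSTER_POWER_W, HOURS)
ratio = cluster_kwh / brain_kwh

print(f"brain, one day:   {brain_kwh:.2f} kWh")
print(f"cluster, one day: {cluster_kwh:,.0f} kWh")
print(f"ratio: about {ratio:,.0f}x")
```

Under these assumptions, one day of the hypothetical cluster uses about as much energy as a brain would in roughly 50,000 days.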

Scalability: Human Brain Complexity vs Artificial Networks

The human brain surpasses artificial networks in scale and connectivity, with approximately 86 billion neurons interconnected by trillions of synapses that enable vast parallel processing and adaptability. Artificial neural networks, although inspired by biological neurons, typically consist of millions to billions of trainable parameters organized in layers with fixed connectivity, posing challenges in scaling to the brain's intricate architecture. Advances in neuromorphic computing and deep learning frameworks aim to bridge this gap, enhancing scalability by mimicking biological efficiency and dynamic connectivity.

Limitations of Artificial Neural Networks Compared to Biology

Artificial Neural Networks (ANNs) lack the complex adaptability and plasticity inherent in Biological Neural Networks (BNNs), resulting in limited ability to generalize from sparse data and withstand noisy environments. While BNNs excel at energy-efficient parallel processing and self-repair, ANNs require extensive computational resources and large labeled datasets for training. Moreover, current ANNs fail to replicate the dynamic chemical signaling and multi-scale hierarchical organization present in biological brains, restricting their performance in real-world, context-dependent tasks.

Future Directions: Bridging the Gap Between ANNs and BNNs

Future directions in Artificial Intelligence emphasize enhancing Artificial Neural Networks (ANNs) by incorporating neurobiological principles from Biological Neural Networks (BNNs), aiming to improve learning efficiency, adaptability, and robustness. Advances in neuromorphic computing and bio-inspired algorithms facilitate the simulation of synaptic plasticity and neural dynamics observed in the human brain. Ongoing interdisciplinary research integrates insights from neuroscience, cognitive science, and machine learning to bridge the gap between ANNs and BNNs, enabling more human-like intelligence in AI systems.

Artificial Neural Networks vs Biological Neural Networks Infographic

techiny.com
