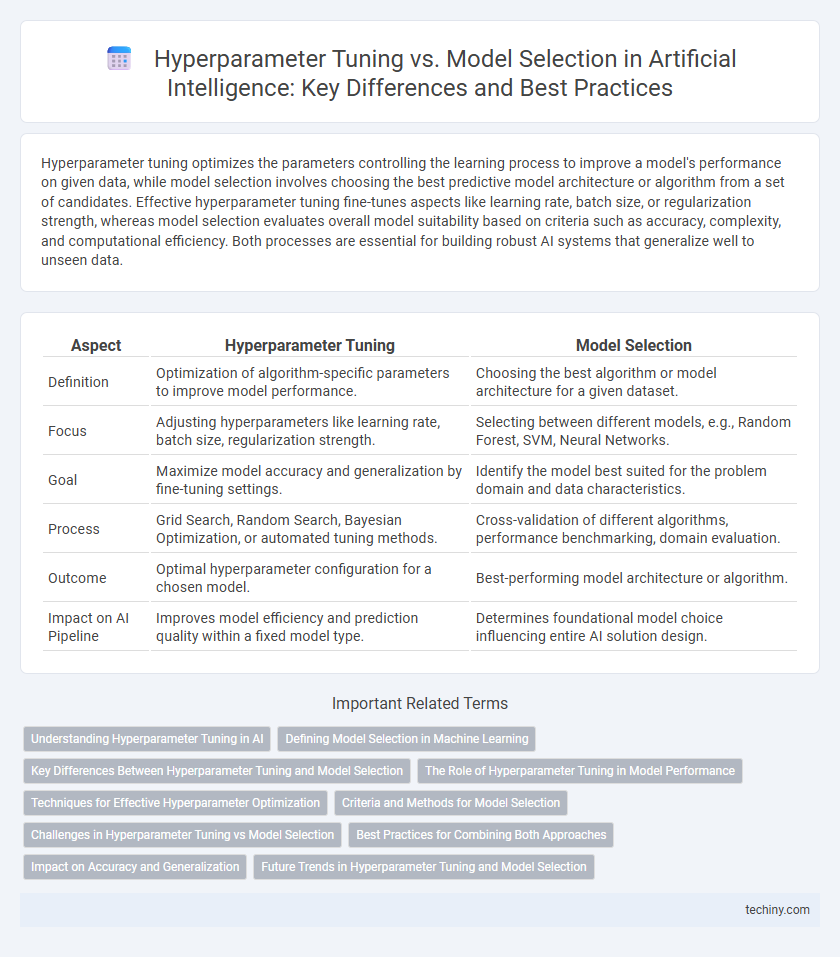

Hyperparameter tuning optimizes the parameters controlling the learning process to improve a model's performance on given data, while model selection involves choosing the best predictive model architecture or algorithm from a set of candidates. Effective hyperparameter tuning fine-tunes aspects like learning rate, batch size, or regularization strength, whereas model selection evaluates overall model suitability based on criteria such as accuracy, complexity, and computational efficiency. Both processes are essential for building robust AI systems that generalize well to unseen data.

Table of Comparison

| Aspect | Hyperparameter Tuning | Model Selection |
|---|---|---|
| Definition | Optimization of algorithm-specific parameters to improve model performance. | Choosing the best algorithm or model architecture for a given dataset. |
| Focus | Adjusting hyperparameters like learning rate, batch size, regularization strength. | Selecting between different models, e.g., Random Forest, SVM, Neural Networks. |
| Goal | Maximize model accuracy and generalization by fine-tuning settings. | Identify the model best suited for the problem domain and data characteristics. |
| Process | Grid Search, Random Search, Bayesian Optimization, or automated tuning methods. | Cross-validation of different algorithms, performance benchmarking, domain evaluation. |
| Outcome | Optimal hyperparameter configuration for a chosen model. | Best-performing model architecture or algorithm. |
| Impact on AI Pipeline | Improves model efficiency and prediction quality within a fixed model type. | Determines foundational model choice influencing entire AI solution design. |

Understanding Hyperparameter Tuning in AI

Hyperparameter tuning in artificial intelligence involves optimizing parameters that govern the learning process, such as learning rate, batch size, and regularization strength, to enhance model performance. Unlike model selection, which focuses on choosing the best algorithm or architecture, hyperparameter tuning fine-tunes a chosen model to achieve higher accuracy and generalization. Effective hyperparameter tuning relies on techniques like grid search, random search, and Bayesian optimization to systematically explore the parameter space and identify optimal configurations.
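As a minimal sketch of how grid search and random search differ, the example below tunes two hyperparameters against a toy objective. The function `validation_loss` is a hypothetical stand-in for "train the model and return its validation loss"; the grid values, bounds, and trial count are illustrative assumptions, not recommendations.

```python
import itertools
import random

def validation_loss(learning_rate, reg_strength):
    """Hypothetical stand-in for training a model and returning validation loss.
    By construction the minimum is at learning_rate=0.1, reg_strength=0.01."""
    return (learning_rate - 0.1) ** 2 + (reg_strength - 0.01) ** 2

def grid_search(grid):
    """Evaluate every combination in the grid; return (best_loss, best_config)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[n] for n in names)):
        config = dict(zip(names, values))
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best

def random_search(bounds, n_trials, seed=0):
    """Sample n_trials configurations log-uniformly; return (best_loss, best_config)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        # Bounds are exponents of 10, so (-3, 0) means the range [1e-3, 1e0].
        config = {name: 10 ** rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best

grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "reg_strength": [0.0001, 0.01, 1.0]}
grid_best = grid_search(grid)

bounds = {"learning_rate": (-3, 0), "reg_strength": (-4, 0)}
random_best = random_search(bounds, n_trials=50)
```

Grid search costs one evaluation per combination (12 here), so its cost multiplies with every added hyperparameter. Random search fixes the budget up front and samples between the grid points, which is often the more practical trade-off when only a few hyperparameters matter.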

Defining Model Selection in Machine Learning

Model selection in machine learning involves choosing the best algorithm or model architecture based on its performance on validation data, aiming to optimize predictive accuracy and generalization. It focuses on comparing different models or configurations to identify which is most suitable for the given task and dataset. Effective model selection guards against overfitting and underfitting by balancing bias and variance, using criteria such as cross-validation scores or information-theoretic metrics that penalize model complexity.
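The text does not say which information-theoretic metrics it has in mind. Assuming the two most commonly used ones, the Akaike and Bayesian information criteria, their standard definitions are:

```latex
\mathrm{AIC} = 2k - 2\ln\hat{L},
\qquad
\mathrm{BIC} = k\ln n - 2\ln\hat{L}
```

Here $k$ is the number of estimated parameters, $n$ is the number of observations, and $\hat{L}$ is the maximized likelihood of the model. Lower values are preferred under both criteria. The first term penalizes complexity and the second rewards fit, and BIC penalizes each extra parameter more heavily than AIC once $\ln n > 2$.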

Key Differences Between Hyperparameter Tuning and Model Selection

Hyperparameter tuning involves optimizing parameters that govern the learning process of a chosen machine learning model, such as learning rate, batch size, or number of iterations. Model selection focuses on choosing the best algorithm or model architecture (for example decision trees, support vector machines, or neural networks) based on performance metrics. While hyperparameter tuning fine-tunes a specific model's performance, model selection determines which model structure best fits the data and problem domain.

The Role of Hyperparameter Tuning in Model Performance

Hyperparameter tuning plays a critical role in optimizing model performance by systematically adjusting parameters such as learning rate, batch size, and regularization strength to improve accuracy and generalization. Effective tuning directly impacts the predictive power and robustness of machine learning models by preventing overfitting and underfitting. In contrast, model selection involves choosing the best algorithm or architecture, but without fine-tuning hyperparameters, even the best models may perform suboptimally.

Techniques for Effective Hyperparameter Optimization

Effective hyperparameter optimization techniques include grid search, random search, and Bayesian optimization, each offering a systematic way to adjust hyperparameters and enhance predictive performance. Advanced methods like Hyperband and genetic algorithms accelerate the search by allocating resources efficiently and exploring complex hyperparameter spaces. Using cross-validation during tuning gives a more robust evaluation of each configuration, reducing the risk of overfitting to a single validation split and improving model generalization in artificial intelligence applications.
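To illustrate the resource-allocation idea, here is a minimal sketch of successive halving, the subroutine Hyperband is built on. It is not a full Hyperband implementation, which runs several such brackets with different starting budgets. The function `partial_train_loss` is a hypothetical stand-in for "train this configuration for a given number of epochs and return the loss", and the candidate learning rates are assumptions chosen for illustration.

```python
def partial_train_loss(config, budget):
    """Hypothetical stand-in: loss after training `config` for `budget` epochs.
    Loss shrinks as budget grows and is lowest for learning rates near 0.1."""
    return abs(config["learning_rate"] - 0.1) + 1.0 / budget

def successive_halving(configs, min_budget=1, eta=2):
    """Each round, keep the best 1/eta of the configs and multiply the budget by eta.
    Returns the winning config and a history of (budget, configs_evaluated)."""
    budget = min_budget
    survivors = list(configs)
    history = []
    while len(survivors) > 1:
        history.append((budget, len(survivors)))
        # Rank all survivors using only the current (cheap) budget.
        ranked = sorted(survivors, key=lambda c: partial_train_loss(c, budget))
        survivors = ranked[:max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0], history

candidates = [{"learning_rate": lr}
              for lr in [0.001, 0.003, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0]]
winner, history = successive_halving(candidates)
```

Weak configurations are discarded after only a short training run, so most of the compute goes to the candidates that still look promising. The approach assumes that early performance is a reasonable predictor of final performance, which does not hold for every model.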

Criteria and Methods for Model Selection

Model selection in artificial intelligence relies on criteria such as cross-validation accuracy, computational efficiency, and model complexity to balance performance and generalization. Common methods include k-fold cross-validation, hold-out validation, and information criteria such as AIC or BIC, which compare candidate models on a common footing. Grid search, random search, and Bayesian optimization are search strategies for hyperparameters rather than model selection methods in themselves; they often run inside the comparison so that each candidate is judged at its tuned settings rather than its defaults. Effective model selection reduces the risk of overfitting and underfitting through careful assessment of validation metrics.
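The sketch below shows cross-validation used as a model selection criterion: two candidate models are scored by 5-fold mean squared error and the lower score wins. The synthetic dataset, the two candidates (`fit_constant`, `fit_linear`), and the helper names are all assumptions made for illustration.

```python
import random

def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def fit_constant(xs, ys):
    """Baseline candidate: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_linear(xs, ys):
    """Ordinary least squares for y = a*x + b with a single feature."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b

def cross_val_mse(fit, xs, ys, k=5):
    """Average held-out mean squared error over k folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train_x = [x for i, x in enumerate(xs) if i not in held]
        train_y = [y for i, y in enumerate(ys) if i not in held]
        model = fit(train_x, train_y)
        mse = sum((model(xs[i]) - ys[i]) ** 2 for i in held_out) / len(held_out)
        scores.append(mse)
    return sum(scores) / len(scores)

# Synthetic data: a noisy linear relationship (inputs are drawn in random order,
# so contiguous folds are not biased by any sorting).
rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 1) for x in xs]

candidates = {"constant": fit_constant, "linear": fit_linear}
cv_scores = {name: cross_val_mse(fit, xs, ys) for name, fit in candidates.items()}
best_model = min(cv_scores, key=cv_scores.get)
```

Every candidate is scored on the same folds, so differences in the scores reflect the models rather than the split. In practice the criterion may also weigh training time or model complexity, as described above.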

Challenges in Hyperparameter Tuning vs Model Selection

Hyperparameter tuning involves optimizing settings such as learning rate and regularization strength, which requires extensive computational resources and careful validation to avoid overfitting to the validation set. Model selection challenges stem from evaluating different algorithms or architectures to identify the best fit for the dataset, often complicated by varying performance metrics and training times. Both processes require balancing bias-variance trade-offs and managing limited data, with hyperparameter tuning demanding fine-grained adjustments whereas model selection relies on broader comparative analyses.

Best Practices for Combining Both Approaches

Effective hyperparameter tuning combined with strategic model selection enhances AI performance by systematically exploring model architectures and optimizing their parameters to balance bias and variance. Employing techniques such as nested cross-validation ensures unbiased evaluation while leveraging automated search methods like Bayesian optimization accelerates hyperparameter discovery. Integrating these best practices results in robust, generalizable models tailored to specific datasets and tasks.
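A minimal sketch of nested cross-validation follows. The inner loop tunes a hyperparameter using only the outer training data, and the outer loop scores the tuned model on data the tuning never saw. The model (one-feature ridge regression), the grid `lam_grid`, the fold counts, and the synthetic data are all assumptions chosen to keep the example dependency-free.

```python
import random

def make_folds(n, k):
    """Assign indices 0..n-1 to k folds round-robin."""
    return [list(range(i, n, k)) for i in range(k)]

def fit_ridge(xs, ys, lam):
    """One-feature ridge regression with an unpenalized intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / (sxx + lam)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept

def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def split(xs, ys, held_out):
    """Return ((train_x, train_y), (test_x, test_y)) for one fold."""
    held = set(held_out)
    train = [(x, y) for i, (x, y) in enumerate(zip(xs, ys)) if i not in held]
    test = [(xs[i], ys[i]) for i in held_out]
    return list(zip(*train)), list(zip(*test))

def cv_score(xs, ys, lam, k):
    """Plain k-fold score for one hyperparameter value."""
    scores = []
    for held_out in make_folds(len(xs), k):
        (tr_x, tr_y), (te_x, te_y) = split(xs, ys, held_out)
        scores.append(mse(fit_ridge(tr_x, tr_y, lam), te_x, te_y))
    return sum(scores) / k

def nested_cv(xs, ys, lam_grid, outer_k=5, inner_k=3):
    outer_scores, chosen = [], []
    for held_out in make_folds(len(xs), outer_k):
        (tr_x, tr_y), (te_x, te_y) = split(xs, ys, held_out)
        # Inner loop: pick lam using only the outer training data.
        best_lam = min(lam_grid, key=lambda lam: cv_score(tr_x, tr_y, lam, inner_k))
        # Outer loop: score the tuned model on the untouched outer fold.
        model = fit_ridge(tr_x, tr_y, best_lam)
        outer_scores.append(mse(model, te_x, te_y))
        chosen.append(best_lam)
    return sum(outer_scores) / outer_k, chosen

rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(60)]
ys = [3.0 * x + rng.gauss(0, 1) for x in xs]
lam_grid = [0.0, 1.0, 100.0]
estimate, chosen_lams = nested_cv(xs, ys, lam_grid)
```

The outer score estimates how the whole procedure (tuning plus fitting) performs on new data, which is why it is less optimistic than reusing the score that selected the hyperparameter. The cost is `outer_k * inner_k * len(lam_grid)` inner fits plus one final fit per outer fold.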

Impact on Accuracy and Generalization

Hyperparameter tuning adjusts settings like learning rate and regularization strength to optimize accuracy on validation data, which improves model performance and can reduce overfitting. Model selection involves choosing the best algorithm or model architecture, which affects generalization through the bias-variance trade-off each choice implies for the dataset at hand. Both processes critically influence the final model's ability to achieve high accuracy while remaining robust on unseen data.

Future Trends in Hyperparameter Tuning and Model Selection

Future trends in hyperparameter tuning emphasize automated and adaptive methods driven by reinforcement learning and Bayesian optimization to efficiently navigate complex search spaces. Model selection is increasingly leveraging meta-learning and neural architecture search to dynamically identify optimal models based on data characteristics and task requirements. Integration of these approaches with scalable cloud platforms and real-time feedback systems promises enhanced accuracy and reduced computational costs in AI workflows.

Hyperparameter tuning vs Model selection Infographic

techiny.com