On-policy learning evaluates and improves the policy that is used to make decisions, ensuring that the agent learns from actions taken according to its current strategy. Off-policy learning allows the agent to learn from data generated by a different policy, enabling the use of past experiences or explorations that differ from the current behavior policy. This distinction affects sample efficiency and stability in reinforcement learning algorithms, influencing their application in dynamic environments.

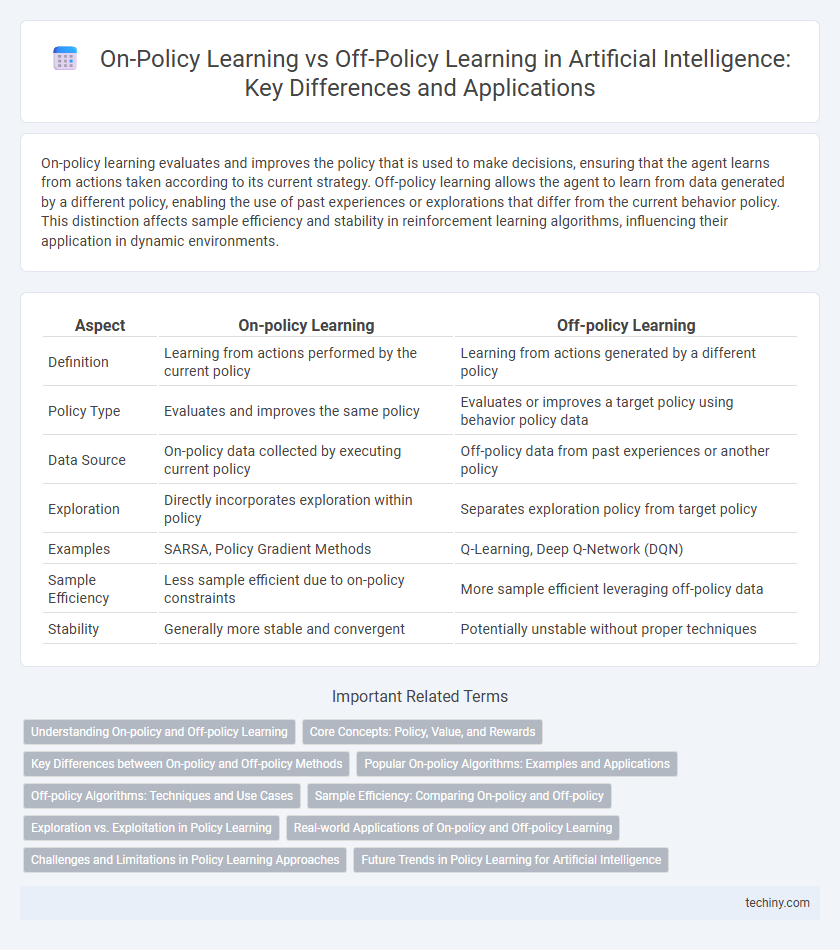

Table of Comparison

| Aspect | On-policy Learning | Off-policy Learning |

|---|---|---|

| Definition | Learning from actions performed by the current policy | Learning from actions generated by a different policy |

| Policy Type | Evaluates and improves the same policy | Evaluates or improves a target policy using behavior policy data |

| Data Source | On-policy data collected by executing current policy | Off-policy data from past experiences or another policy |

| Exploration | Directly incorporates exploration within policy | Separates exploration policy from target policy |

| Examples | SARSA, Policy Gradient Methods | Q-Learning, Deep Q-Network (DQN) |

| Sample Efficiency | Less sample efficient due to on-policy constraints | More sample efficient leveraging off-policy data |

| Stability | Generally more stable and convergent | Potentially unstable without proper techniques |

Understanding On-policy and Off-policy Learning

On-policy learning evaluates and improves the policy that an agent is currently following, using data collected from actions taken by that same policy, enabling real-time adaptation and policy refinement. Off-policy learning, by contrast, learns the value of an optimal policy independently from the agent's current actions, utilizing data from different policies or historical experiences, which enhances sample efficiency and allows for more flexible exploration strategies. Understanding these distinctions is critical for selecting suitable algorithms like SARSA for on-policy and Q-learning for off-policy reinforcement learning tasks.

Core Concepts: Policy, Value, and Rewards

On-policy learning directly evaluates and improves the policy used to make decisions by interacting with the environment, relying on value functions estimated from the same policy's experiences. Off-policy learning separates the behavior policy generating data from the target policy being optimized, which allows learning from past or exploratory actions. Both approaches utilize reward signals to update value estimates, but on-policy methods ensure consistency between policy and data, while off-policy methods enable greater flexibility and data reuse.

Key Differences between On-policy and Off-policy Methods

On-policy learning evaluates and improves the policy that is used to make decisions, ensuring that updates are based on actions actually taken by the current policy, which enhances stability in environments where the policy continuously evolves. Off-policy learning, meanwhile, can learn from data generated by a different policy than the one being improved, allowing for greater sample efficiency and the use of previously collected experiences. Key differences include the reliance on the same behavior policy in on-policy methods versus the flexibility of off-policy methods to leverage diverse data, impacting convergence properties and exploration strategies in reinforcement learning algorithms.

Popular On-policy Algorithms: Examples and Applications

Popular on-policy algorithms such as Proximal Policy Optimization (PPO) and Advantage Actor-Critic (A2C) are widely used in reinforcement learning due to their stable policy updates and sample efficiency. These algorithms excel in applications like robotics control, where adapting actions based on the current policy improves learning accuracy, and game playing environments such as OpenAI Five or AlphaStar. On-policy methods directly optimize the current policy using data collected from interactions with the environment, enabling robust performance in continuous control and decision-making tasks.

Off-policy Algorithms: Techniques and Use Cases

Off-policy learning algorithms, such as Q-learning and Deep Q-Networks (DQN), enable agents to learn optimal policies from data generated by different behaviors or policies, enhancing sample efficiency and flexibility. These techniques are crucial in environments where exploration policies differ from the target policy, allowing reuse of past experiences stored in replay buffers to improve learning stability. Off-policy algorithms find extensive use in robotics, autonomous driving, and recommendation systems, where adapting to dynamic and partially observable environments is essential.

Sample Efficiency: Comparing On-policy and Off-policy

On-policy learning methods update policies based solely on the data generated by the current policy, resulting in lower sample efficiency due to limited reuse of past experiences. Off-policy learning can leverage experiences collected from different policies, significantly improving sample efficiency by maximizing the utility of historical data. Algorithms like Q-learning exemplify off-policy approaches, allowing faster convergence in environments with expensive data collection.

Exploration vs. Exploitation in Policy Learning

On-policy learning balances exploration and exploitation by evaluating and improving the current policy using data gathered from actions taken within that policy, ensuring updates directly reflect the agent's behavior. Off-policy learning separates the behavior policy, which explores the environment, from the target policy being optimized, allowing greater flexibility in learning from diverse experiences and improving exploitation efficiency. This distinction impacts sample efficiency, convergence stability, and the ability to learn optimal strategies in reinforcement learning scenarios.

Real-world Applications of On-policy and Off-policy Learning

On-policy learning algorithms such as SARSA excel in real-world applications requiring continuous policy evaluation and improvement, including online recommendation systems and robotics navigation. Off-policy methods like Q-learning enable efficient learning from past experiences or stored datasets, making them ideal for scenarios like autonomous driving where exploration risks must be minimized. These distinctions allow tailored deployment of reinforcement learning strategies to optimize performance and safety across diverse industries.

Challenges and Limitations in Policy Learning Approaches

On-policy learning faces challenges like high variance and sample inefficiency since it updates the policy using data generated strictly from the current policy, limiting exploration of alternative strategies. Off-policy learning struggles with stability and convergence issues due to the discrepancy between the behavior policy generating data and the target policy being optimized, which can lead to biased value estimates. Both approaches require careful handling of the trade-off between bias and variance to improve learning efficiency and policy performance in complex environments.

Future Trends in Policy Learning for Artificial Intelligence

Future trends in policy learning for artificial intelligence emphasize the integration of hybrid on-policy and off-policy methods to enhance sample efficiency and stability. Advancements in meta-learning and deep reinforcement learning are driving adaptive algorithms that dynamically switch between policy evaluation and improvement phases. Research priorities include scalable algorithms that balance exploration-exploitation trade-offs while leveraging large-scale, real-world data for robust policy generalization.

On-policy Learning vs Off-policy Learning Infographic

techiny.com

techiny.com