Model bias refers to errors introduced by overly simplistic assumptions in the learning algorithm, causing underfitting and poor generalization to new data. Model variance occurs when the algorithm captures noise instead of underlying patterns, leading to overfitting and sensitivity to training data fluctuations. Balancing bias and variance is crucial for developing robust artificial intelligence models that perform accurately across diverse datasets.

Table of Comparison

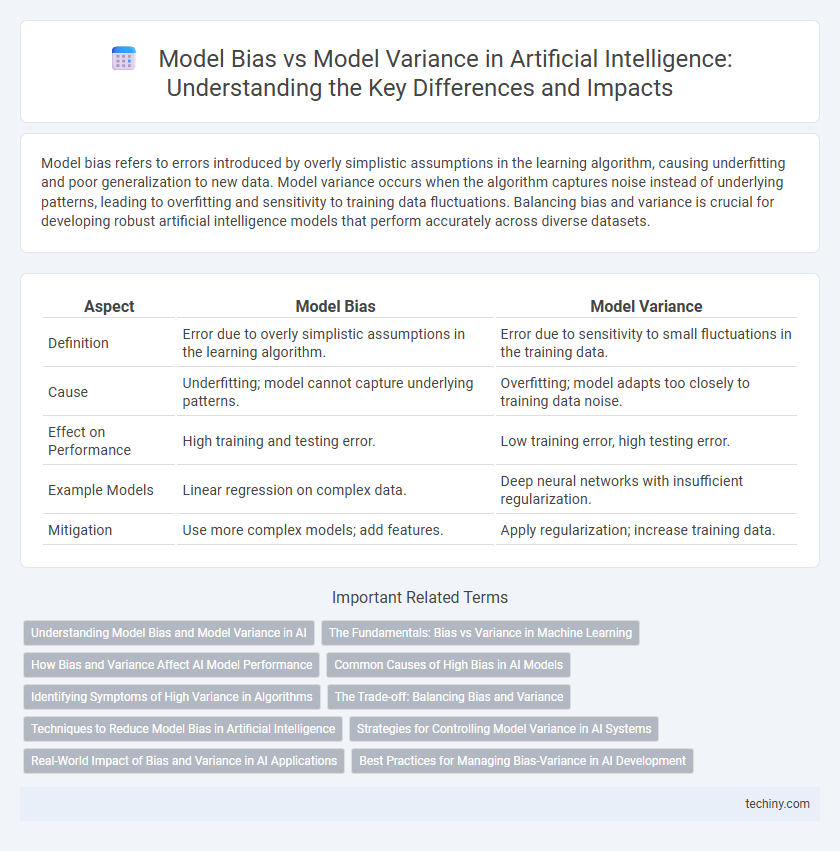

| Aspect | Model Bias | Model Variance |
|---|---|---|
| Definition | Error due to overly simplistic assumptions in the learning algorithm. | Error due to sensitivity to small fluctuations in the training data. |
| Cause | Underfitting; model cannot capture underlying patterns. | Overfitting; model adapts too closely to training data noise. |
| Effect on Performance | High training and testing error. | Low training error, high testing error. |
| Example Models | Linear regression on complex data. | Deep neural networks with insufficient regularization. |
| Mitigation | Use more complex models; add features. | Apply regularization; increase training data. |

Understanding Model Bias and Model Variance in AI

Model bias in AI refers to systematic errors caused by overly simplistic assumptions in the learning algorithm, leading to underfitting: the model fits even its training data poorly and carries that error over to unseen data. Model variance denotes the sensitivity of an AI model to fluctuations in the training dataset, causing overfitting and high variability in predictions. Balancing model bias and variance is critical for optimizing AI model performance and achieving accurate, reliable outcomes on unseen data.

The Fundamentals: Bias vs Variance in Machine Learning

Model bias refers to errors introduced by approximating real-world problems with overly simplistic assumptions, leading to underfitting and poor performance on training and test data. Model variance occurs when a model is too sensitive to fluctuations in the training data, capturing noise instead of the underlying pattern, which results in overfitting and poor generalization to new data. Balancing bias and variance is critical for optimizing machine learning model accuracy and robustness within artificial intelligence systems.
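The relationship between these two error sources is often summarized by the standard decomposition of expected squared error. The notation here is an assumption introduced for illustration: the data are taken to follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the model fitted on a randomly drawn training set, and the expectation is over training sets and noise.

$$
\mathbb{E}\left[\big(y - \hat{f}(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\left[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Under these assumptions, the first term is large for underfit models, the second is large for overfit models, and the third cannot be reduced by any choice of model.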

How Bias and Variance Affect AI Model Performance

Bias in AI models leads to systematic errors and poor generalization by oversimplifying data patterns, causing underfitting. Variance represents sensitivity to training data fluctuations, resulting in overfitting and unstable predictions on unseen data. Balancing bias and variance is crucial for optimizing AI model performance and achieving accurate, reliable results.

Common Causes of High Bias in AI Models

High bias in AI models shows up as underfitting, where models are too simple to capture underlying data patterns and therefore make systematic errors. Common causes include insufficient model complexity, missing or uninformative features, and overly restrictive assumptions such as excessive regularization. The result is poor predictive performance on both training data and new data.

Identifying Symptoms of High Variance in Algorithms

High variance in algorithms often manifests as overfitting, where models perform exceptionally well on training data but poorly on unseen test data. Symptoms include a large gap between training and test error and large fluctuations in predictions when the model is retrained on slightly different training sets, both of which indicate sensitivity to noise. Cross-validation helps detect the problem, and techniques like regularization reduce it, improving generalization and lowering prediction error.
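The train/test gap described above can be reproduced with a small sketch. It assumes a synthetic quadratic target with Gaussian noise and uses a 1-nearest-neighbor predictor as a stand-in for a high-variance model; the function names and data settings are hypothetical choices for illustration, not taken from any library.

```python
import random

random.seed(0)

def true_fn(x):
    # Hypothetical smooth target, chosen only for this illustration.
    return x * x

def make_data(n, noise_sd=0.2):
    xs = [random.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [true_fn(x) + random.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def predict_1nn(train_x, train_y, x):
    # Return the label of the single closest training point,
    # which memorizes the noise in that label.
    i = min(range(len(train_x)), key=lambda j: abs(train_x[j] - x))
    return train_y[i]

def mse(train_x, train_y, xs, ys):
    errs = [(predict_1nn(train_x, train_y, x) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errs) / len(errs)

train_x, train_y = make_data(50)
test_x, test_y = make_data(200)

train_mse = mse(train_x, train_y, train_x, train_y)
test_mse = mse(train_x, train_y, test_x, test_y)
print(f"train MSE = {train_mse:.4f}, test MSE = {test_mse:.4f}")
```

Because each training point is its own nearest neighbor, the training error is zero, while the test error stays well above zero. That pattern is the symptom to look for.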

The Trade-off: Balancing Bias and Variance

Model bias refers to errors due to overly simplistic assumptions in machine learning algorithms, while model variance indicates sensitivity to fluctuations in the training data. Balancing bias and variance is crucial for optimizing predictive performance, as high bias leads to underfitting and high variance causes overfitting. Techniques such as cross-validation, regularization, and ensemble methods help manage this trade-off by improving generalization and minimizing prediction error.
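The trade-off can be made concrete by estimating both quantities empirically. The sketch below assumes a synthetic sine target with Gaussian noise and a k-nearest-neighbor regressor, where a small `k` gives a flexible model and a large `k` a rigid one; all names and settings are hypothetical. It retrains the model on many freshly sampled training sets and measures squared bias and variance of the predictions at fixed query points.

```python
import math
import random

random.seed(1)

NOISE_SD = 0.3
N_TRAIN = 30
N_TRIALS = 200
TEST_XS = [i / 20 + 0.025 for i in range(20)]  # fixed query points in (0, 1)

def true_fn(x):
    # Hypothetical ground truth; known here only because the data are synthetic.
    return math.sin(2 * math.pi * x)

def sample_training_set():
    xs = [random.random() for _ in range(N_TRAIN)]
    ys = [true_fn(x) + random.gauss(0.0, NOISE_SD) for x in xs]
    return xs, ys

def knn_predict(xs, ys, x, k):
    # Average the labels of the k training points closest to x.
    nearest = sorted(range(len(xs)), key=lambda j: abs(xs[j] - x))[:k]
    return sum(ys[j] for j in nearest) / k

def bias2_and_variance(k):
    # preds[t][i] is the prediction at TEST_XS[i] from the t-th training set.
    preds = []
    for _ in range(N_TRIALS):
        xs, ys = sample_training_set()
        preds.append([knn_predict(xs, ys, x, k) for x in TEST_XS])
    bias2 = var = 0.0
    for i, x in enumerate(TEST_XS):
        col = [p[i] for p in preds]
        mean = sum(col) / N_TRIALS
        bias2 += (mean - true_fn(x)) ** 2
        var += sum((v - mean) ** 2 for v in col) / N_TRIALS
    return bias2 / len(TEST_XS), var / len(TEST_XS)

results = {k: bias2_and_variance(k) for k in (1, 5, 30)}
for k, (b2, v) in results.items():
    print(f"k={k:2d}  bias^2={b2:.3f}  variance={v:.3f}")
```

With `k=1` the variance term dominates, and with `k=30` (every training point averaged) the bias term dominates; intermediate values sit between the two extremes.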

Techniques to Reduce Model Bias in Artificial Intelligence

Techniques to reduce model bias in artificial intelligence include selecting more flexible models such as boosted ensembles or deep neural networks, adding informative features, and weakening regularization: L1 and L2 penalties constrain a model, so an overly strong penalty is itself a cause of underfitting. Hyperparameter tuning adjusts model complexity and learning rates so the model can capture data patterns accurately. Cross-validation does not reduce bias by itself, but it shows whether each change lowers validation error rather than merely training error. Adding more training data, by contrast, mainly reduces variance and does little for a model that is too simple.
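As a minimal sketch of bias reduction through a richer model, the example below fits a straight line to synthetic quadratic data twice: once on the raw input `x`, and once on an engineered feature `x*x`. The data-generating process and helper names are assumptions made for illustration.

```python
import random

random.seed(2)

def make_data(n, noise_sd=0.1):
    # Hypothetical data: quadratic signal plus Gaussian noise.
    xs = [random.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x * x + random.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def fit_line(zs, ys):
    # Closed-form ordinary least squares for y = a + b * z.
    n = len(zs)
    mz, my = sum(zs) / n, sum(ys) / n
    b = sum((z - mz) * (y - my) for z, y in zip(zs, ys)) / sum((z - mz) ** 2 for z in zs)
    return my - b * mz, b

def mse(a, b, zs, ys):
    return sum((a + b * z - y) ** 2 for z, y in zip(zs, ys)) / len(zs)

def squared(xs):
    return [x * x for x in xs]

train_x, train_y = make_data(200)
test_x, test_y = make_data(200)

# High-bias model: a line in x cannot represent the curvature.
a1, b1 = fit_line(train_x, train_y)
raw_train = mse(a1, b1, train_x, train_y)
raw_test = mse(a1, b1, test_x, test_y)

# Lower-bias model: the same fitting routine with the added feature x^2.
a2, b2 = fit_line(squared(train_x), train_y)
feat_train = mse(a2, b2, squared(train_x), train_y)
feat_test = mse(a2, b2, squared(test_x), test_y)

print(f"raw feature : train={raw_train:.4f} test={raw_test:.4f}")
print(f"x^2 feature : train={feat_train:.4f} test={feat_test:.4f}")
```

The underfit model shows high error on both splits, and the error drops on both once the model can express the underlying pattern.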

Strategies for Controlling Model Variance in AI Systems

Controlling model variance in AI systems involves regularization methods like L1 and L2 and ensemble learning approaches, particularly bagging, which averages models trained on resampled data; boosting mainly targets bias, though it can lower variance as well. Adjusting model complexity by pruning decision trees or limiting neural network layers helps reduce overfitting, while dropout in deep learning improves generalization. Cross-validation measures how much performance varies across data splits, which guides hyperparameter tuning, and more training data further stabilizes model predictions.
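The effect of L2 regularization on variance can be sketched with a one-parameter model. The example assumes a fixed set of inputs, a true weight of 2.0, and Gaussian label noise (all hypothetical), and compares the spread of the fitted weight across many noisy datasets with and without an L2 penalty `lam`.

```python
import random

random.seed(3)

TRUE_W = 2.0
NOISE_SD = 0.5
XS = [0.1 * i for i in range(1, 11)]  # fixed design: 0.1, 0.2, ..., 1.0

def fit_weight(ys, lam):
    # Closed-form 1-D ridge regression without intercept:
    #   w = sum(x * y) / (sum(x^2) + lam)
    # lam = 0 recovers ordinary least squares.
    sxy = sum(x * y for x, y in zip(XS, ys))
    sxx = sum(x * x for x in XS)
    return sxy / (sxx + lam)

def mean_and_variance(values):
    m = sum(values) / len(values)
    return m, sum((v - m) ** 2 for v in values) / len(values)

ols_ws, ridge_ws = [], []
for _ in range(500):
    # Each trial redraws only the label noise.
    ys = [TRUE_W * x + random.gauss(0.0, NOISE_SD) for x in XS]
    ols_ws.append(fit_weight(ys, lam=0.0))
    ridge_ws.append(fit_weight(ys, lam=5.0))

ols_mean, ols_var = mean_and_variance(ols_ws)
ridge_mean, ridge_var = mean_and_variance(ridge_ws)
print(f"OLS   : mean w = {ols_mean:.3f}, variance = {ols_var:.4f}")
print(f"ridge : mean w = {ridge_mean:.3f}, variance = {ridge_var:.4f}")
```

The penalized estimate fluctuates less from dataset to dataset, but its average is pulled away from the true weight. Regularization lowers variance at the price of added bias, so the penalty strength needs tuning.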

Real-World Impact of Bias and Variance in AI Applications

In statistical terms, high model bias yields systematically inaccurate outcomes because the model oversimplifies data patterns. This is related to, but distinct from, the societal bias behind discrimination in hiring or lending processes, which usually originates in unrepresentative or historically skewed training data; an oversimplified model can make such harms worse by ignoring relevant distinctions between groups. Model variance results in overfitting, where AI systems perform well on training data but fail to generalize, leading to unreliable predictions in dynamic environments like autonomous driving or medical diagnosis. Balancing bias and variance is crucial for developing robust AI applications that ensure fairness, accuracy, and trustworthiness in real-world deployments.

Best Practices for Managing Bias-Variance in AI Development

Effective management of bias and variance in AI development involves implementing techniques such as cross-validation, regularization, and ensemble methods to enhance model generalization. Comparing performance metrics such as mean squared error or F1 score between training and validation data helps identify the problem: poor scores on both suggest underfitting, while strong training scores with much weaker validation scores suggest overfitting, enabling timely adjustments to model complexity. Employing diverse, representative training data alongside continuous feedback loops helps keep models robust and fair.
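Cross-validation as a tool for choosing model complexity can be sketched as follows. The example assumes synthetic sine data and a k-nearest-neighbor regressor whose neighbor count `k` is the complexity setting being tuned; the helper `cross_val_mse` is a hypothetical hand-written function, not a library API.

```python
import math
import random

random.seed(4)

def make_data(n, noise_sd=0.3):
    # Hypothetical data: sine signal plus Gaussian noise.
    xs = [random.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + random.gauss(0.0, noise_sd) for x in xs]
    return xs, ys

def knn_predict(xs, ys, x, k):
    # Average the labels of the k training points closest to x.
    nearest = sorted(range(len(xs)), key=lambda j: abs(xs[j] - x))[:k]
    return sum(ys[j] for j in nearest) / k

def cross_val_mse(xs, ys, k, n_folds=5):
    # The data are already in random order, so fold f holds out
    # indices f, f + n_folds, f + 2 * n_folds, ...
    n = len(xs)
    total = 0.0
    for fold in range(n_folds):
        held_out = set(range(fold, n, n_folds))
        tr_x = [xs[i] for i in range(n) if i not in held_out]
        tr_y = [ys[i] for i in range(n) if i not in held_out]
        total += sum((knn_predict(tr_x, tr_y, xs[i], k) - ys[i]) ** 2 for i in held_out)
    return total / n  # every point is held out exactly once

xs, ys = make_data(60)
# 48 is the size of each training fold, so k=48 averages all training labels.
scores = {k: cross_val_mse(xs, ys, k) for k in (1, 3, 5, 10, 20, 48)}
best_k = min(scores, key=scores.get)

for k, s in scores.items():
    print(f"k={k:2d}  CV MSE={s:.4f}")
print("selected k:", best_k)
```

The held-out error is typically high at both ends of the range, where the model is either too flexible or too rigid, and the selected `k` is the setting with the lowest validation error rather than the lowest training error.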


techiny.com
