Feature engineering involves manually selecting and transforming raw data into meaningful inputs for machine learning models, relying heavily on domain expertise and human intuition. Feature learning, by contrast, allows algorithms to automatically discover the representations needed for feature detection or classification from raw data, often through deep learning techniques. This shift reduces dependency on manual intervention and can improve model performance by uncovering complex patterns that are difficult to specify by hand.

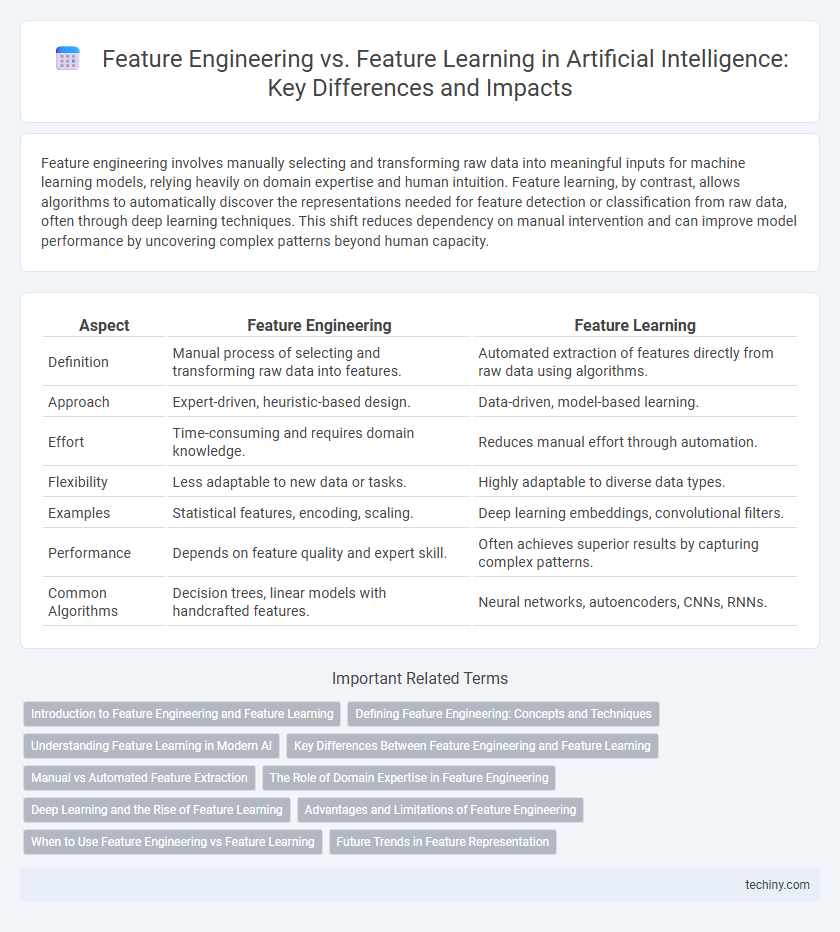

Comparison Table

| Aspect | Feature Engineering | Feature Learning |
|---|---|---|
| Definition | Manual process of selecting and transforming raw data into features. | Automated extraction of features directly from raw data using algorithms. |
| Approach | Expert-driven, heuristic-based design. | Data-driven, model-based learning. |
| Effort | Time-consuming and requires domain knowledge. | Reduces manual effort through automation. |
| Flexibility | Less adaptable to new data or tasks. | Adapts well to diverse data types, given sufficient training data. |
| Examples | Statistical features, encoding, scaling. | Deep learning embeddings, convolutional filters. |
| Performance | Depends on feature quality and expert skill. | Often achieves better results on complex data by capturing patterns that are hard to specify by hand. |
| Common Algorithms | Decision trees, linear models with handcrafted features. | Neural networks, autoencoders, CNNs, RNNs. |

Introduction to Feature Engineering and Feature Learning

Feature engineering involves manually selecting, transforming, and creating input variables to improve machine learning model performance, relying heavily on domain expertise and data preprocessing techniques. Feature learning, often implemented through deep learning models, enables automatic extraction of relevant features directly from raw data, reducing the need for manual intervention. Both approaches remain important in practice, with feature learning increasingly favored for large, complex datasets such as images, audio, and text because it scales and adapts well.

Defining Feature Engineering: Concepts and Techniques

Feature engineering is the manual process of selecting, transforming, and creating input variables to improve the performance of machine learning models, and it relies heavily on domain expertise and data understanding. Common techniques include scaling and normalizing numeric values, encoding categorical variables, and reducing dimensionality with methods such as principal component analysis (PCA). Effective feature engineering improves model accuracy, and often interpretability, by giving algorithms a better representation of the data.
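
As a minimal illustration of two of these techniques, the pure-Python sketch below min-max scales a numeric column into the range [0, 1] and one-hot encodes a categorical column, then joins them into one feature vector per record. The records and the helper names `min_max_scale` and `one_hot` are hypothetical; libraries such as scikit-learn offer equivalent transformers (`MinMaxScaler`, `OneHotEncoder`).

```python
def min_max_scale(values):
    """Rescale numeric values linearly into the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Encode each category as a binary indicator vector over a sorted vocabulary."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    vectors = []
    for c in categories:
        row = [0] * len(vocab)
        row[index[c]] = 1
        vectors.append(row)
    return vocab, vectors


# Hypothetical raw records: floor area in square metres and city name.
sizes = [50, 75, 100]
cities = ["Paris", "Lyon", "Paris"]

scaled = min_max_scale(sizes)        # [0.0, 0.5, 1.0]
vocab, encoded = one_hot(cities)     # ['Lyon', 'Paris'], [[0, 1], [1, 0], [0, 1]]
features = [[s] + e for s, e in zip(scaled, encoded)]
print(features)  # [[0.0, 0, 1], [0.5, 1, 0], [1.0, 0, 1]]
```

Each engineered column reflects a human decision: scaling keeps floor area on the same footing as the indicator columns, and one-hot encoding avoids implying an order between cities.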

Understanding Feature Learning in Modern AI

Feature learning in modern AI enables algorithms to automatically discover the representations needed for feature detection or classification from raw data, significantly reducing the reliance on manual feature engineering. Deep learning models, such as convolutional neural networks and autoencoders, excel at extracting hierarchical features that improve accuracy in complex tasks like image recognition and natural language processing. This automated approach enhances scalability and adaptability, driving advancements in applications ranging from speech synthesis to autonomous systems.
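
To make the idea concrete, here is a small sketch of feature learning with a linear autoencoder: a single hidden unit with tied weights `w` encodes each point as `z = w · x` and decodes it as `z * w`, and gradient descent adjusts `w` to reduce reconstruction error. Nobody specifies which direction in the data matters; in this linear case the weights should settle on the direction of greatest variance. The toy points, learning rate, and step count are assumptions chosen for illustration; practical autoencoders are deeper, nonlinear, and trained with a deep learning framework.

```python
def train_tied_autoencoder(points, lr=0.01, steps=1000):
    """Learn a 1-D code z = w . x whose reconstruction z * w approximates x."""
    n = len(points)
    dim = len(points[0])
    # Centre the data so the learned direction captures variation, not the mean.
    mean = [sum(p[d] for p in points) / n for d in range(dim)]
    data = [[p[d] - mean[d] for d in range(dim)] for p in points]

    w = [1.0] + [0.0] * (dim - 1)  # arbitrary starting weights
    for _ in range(steps):
        grad = [0.0] * dim
        for x in data:
            z = sum(wi * xi for wi, xi in zip(w, x))       # encode
            r = [xi - z * wi for xi, wi in zip(x, w)]      # reconstruction residual
            w_dot_r = sum(wi * ri for wi, ri in zip(w, r))
            # Gradient of ||x - (w . x) w||^2 with respect to w, averaged over points.
            for d in range(dim):
                grad[d] += -2.0 * (x[d] * w_dot_r + z * r[d]) / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Hypothetical 2-D points lying roughly along the diagonal.
points = [(2.0, 2.1), (-1.0, -0.9), (0.5, 0.4), (-1.5, -1.6), (3.0, 2.9), (-3.0, -2.9)]
w = train_tied_autoencoder(points)
print([round(v, 2) for v in w])
```

For these points the learned vector should end up close to the unit diagonal (about 0.71, 0.70): the single feature `z = w · x` that best reconstructs the data, discovered from the data rather than designed.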

Key Differences Between Feature Engineering and Feature Learning

Feature engineering involves manually selecting, modifying, or creating features from raw data based on domain knowledge, while feature learning automates this process through algorithms like deep learning to extract relevant representations. The key difference lies in the level of human intervention, with feature engineering requiring explicit design choices and feature learning enabling models to discover patterns autonomously. Feature learning often leads to improved performance in complex tasks by capturing hierarchical data structures that manual feature engineering might overlook.

Manual vs Automated Feature Extraction

Feature engineering involves manually extracting and selecting relevant features based on domain expertise, which can be time-consuming and requires a deep understanding of the data. Feature learning, often implemented through deep learning models, automates the process by enabling algorithms to discover useful features directly from raw data, improving scalability and adaptability. Automated feature extraction can enhance model performance by capturing complex patterns, and it reduces reliance on handcrafted feature design.
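
A small sketch of the manual side: an engineer who believes that the level, spread, range, and oscillation rate of a signal matter for a task might hand-code exactly those four summaries. The function name and the chosen statistics are illustrative assumptions, not a standard feature set.

```python
import math


def handcrafted_features(signal):
    """Summarise a raw 1-D signal with a few hand-chosen statistics."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((s - mean) ** 2 for s in signal) / n)  # population std
    peak_to_peak = max(signal) - min(signal)
    # Count sign changes between neighbours, a rough proxy for frequency.
    zero_crossings = sum(1 for a, b in zip(signal, signal[1:]) if a * b < 0)
    return {"mean": mean, "std": std,
            "peak_to_peak": peak_to_peak, "zero_crossings": zero_crossings}


signal = [0.5, -0.5, 0.5, -0.5]
print(handcrafted_features(signal))
# {'mean': 0.0, 'std': 0.5, 'peak_to_peak': 1.0, 'zero_crossings': 3}
```

A feature-learning model would instead receive the raw samples and learn its own summaries during training, which avoids guessing the right statistics but needs more data to do so.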

The Role of Domain Expertise in Feature Engineering

Feature engineering relies heavily on domain expertise to manually select, transform, and create relevant features that improve model accuracy and interpretability. Domain knowledge enables the identification of meaningful patterns and relationships within raw data, which automated feature learning methods may overlook. In contrast, feature learning approaches, such as deep learning, reduce the dependency on expert input by automatically discovering features but may lack the contextual precision that domain-driven feature engineering provides.
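
As an example of domain knowledge turned into features, clinicians know that height and weight matter for many health outcomes largely through body-mass index (weight in kilograms divided by height in metres squared) and through the WHO adult BMI categories. The sketch below derives both from hypothetical patient records; the record layout and helper names are assumptions. A generic model given only raw height and weight would have to discover this ratio on its own.

```python
def bmi(weight_kg, height_cm):
    """Body-mass index: weight in kg divided by height in metres squared."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


def bmi_category(value):
    """Map a BMI value to the WHO adult categories."""
    if value < 18.5:
        return "underweight"
    if value < 25.0:
        return "normal"
    if value < 30.0:
        return "overweight"
    return "obese"


# Hypothetical raw records containing only the measured quantities.
records = [
    {"weight_kg": 70, "height_cm": 175},
    {"weight_kg": 95, "height_cm": 175},
]
for r in records:
    value = bmi(r["weight_kg"], r["height_cm"])
    r["bmi"] = round(value, 1)              # engineered numeric feature
    r["bmi_category"] = bmi_category(value)  # engineered categorical feature

print(records)
```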

Deep Learning and the Rise of Feature Learning

Feature engineering involves manually selecting and transforming raw data into meaningful input features for machine learning models, requiring domain expertise and extensive trial-and-error. In contrast, feature learning, particularly through deep learning, automates this process by enabling neural networks to discover hierarchical feature representations directly from raw data, improving model performance and scalability. The rise of deep learning has significantly shifted the emphasis from traditional feature engineering to feature learning, driven by advances in architectures like convolutional neural networks and transformers.
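
The sketch below shows, in miniature, what it means for a convolutional filter to be learned rather than designed: a two-tap 1-D kernel starts at zero and is fitted by gradient descent so that its output matches a target signal. The target is generated by a hidden edge-detecting kernel `[-1, 1]` only so that the result can be checked; the signal, learning rate, and step count are hypothetical choices. Real CNNs learn many such filters across stacked layers with nonlinearities in between.

```python
def conv1d_valid(signal, kernel):
    """Cross-correlate signal with kernel without padding, as CNN layers do."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def learn_kernel(signal, target, size=2, lr=0.1, steps=500):
    """Fit kernel weights by gradient descent on mean squared error."""
    kernel = [0.0] * size
    m = len(target)
    for _ in range(steps):
        pred = conv1d_valid(signal, kernel)
        errors = [p - t for p, t in zip(pred, target)]
        # dMSE/dk_j = mean over positions of 2 * error * input under tap j.
        grad = [sum(2 * e * signal[i + j] for i, e in enumerate(errors)) / m
                for j in range(size)]
        kernel = [w - lr * g for w, g in zip(kernel, grad)]
    return kernel


signal = [0, 0, 1, 1, 1, 0, 0, 2, 2, 0]
# Target produced by a hidden edge detector; the learner never sees its weights.
target = conv1d_valid(signal, [-1.0, 1.0])
learned = learn_kernel(signal, target)
print([round(w, 3) for w in learned])  # [-1.0, 1.0]
```

The optimizer recovers the edge detector from input/output examples alone, which is the same mechanism, at much larger scale, by which early CNN layers come to respond to edges and later layers to combinations of them.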

Advantages and Limitations of Feature Engineering

Feature engineering allows precise control over input variables, enabling domain experts to create highly interpretable and relevant features that enhance model accuracy. However, it requires significant manual effort and domain knowledge, can introduce human bias, and scales poorly to large and complex datasets. Despite these challenges, feature engineering remains valuable in scenarios where domain-specific insights are critical to model performance.

When to Use Feature Engineering vs Feature Learning

Feature engineering works best when domain expertise is available and the dataset is small or moderately sized, because tailored features can improve accuracy and interpretability without requiring large amounts of training data. Feature learning excels with large, complex datasets, where deep learning models automatically extract relevant features, reduce manual intervention, and adapt to intricate patterns. The choice between the two depends on data scale, data complexity, and available expert knowledge, balancing precision against computational cost.

Future Trends in Feature Representation

Future trends in feature representation emphasize the shift from manual feature engineering to automated feature learning using deep learning models. Advances in neural architecture search and self-supervised learning techniques enable models to extract more robust, high-dimensional features directly from raw data. This evolution accelerates adaptation to diverse applications, improving accuracy and reducing reliance on domain expertise in artificial intelligence systems.
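
Self-supervised learning derives its training labels from the raw data itself. One widely used pretext task is masked prediction, popularized by BERT: hide some inputs and train a model to reconstruct them. The sketch below only builds such (input, target) pairs from an unlabeled token list; the `[MASK]` placeholder, the 15% default rate, and the helper name are assumptions, and the model that would learn from these pairs is omitted.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder token


def make_masked_examples(tokens, mask_rate=0.15, seed=0):
    """Hide a random subset of tokens; the hidden originals become the labels."""
    rng = random.Random(seed)  # seeded for reproducible masking
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_rate:
            inputs.append(MASK)
            targets[i] = tok  # label taken from the data itself, no annotation
        else:
            inputs.append(tok)
    return inputs, targets


sentence = "feature learning finds structure in raw data".split()
inputs, targets = make_masked_examples(sentence, mask_rate=0.3, seed=42)
print(inputs)
print(targets)
```

Because every unlabeled sentence yields fresh training pairs, this kind of pretext task lets a model learn representations from far more data than manual labeling would allow.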

Feature Engineering vs Feature Learning Infographic

techiny.com