Softmax and Sigmoid serve distinct purposes in neural networks. Softmax is commonly used for multi-class classification: it converts logits into a probability distribution across the classes. Sigmoid maps each input to a value between 0 and 1, which suits binary classification. Softmax outputs a probability vector that sums to one, giving a single prediction among several mutually exclusive classes, whereas Sigmoid outputs independent probabilities, making it suitable for binary outcomes and for multi-label tasks where several labels can be true at once. Choosing between them depends on whether the output labels are mutually exclusive or independent.

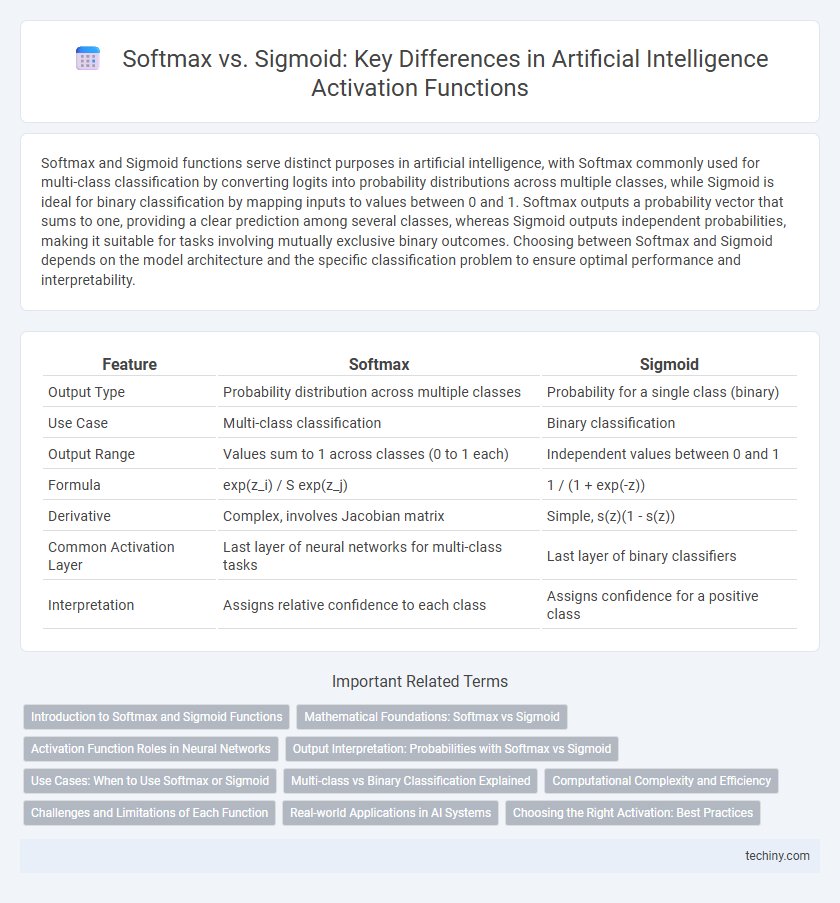

Table of Comparison

| Feature | Softmax | Sigmoid |
|---|---|---|
| Output Type | Probability distribution across multiple classes | Probability for a single class (binary) |
| Use Case | Multi-class classification | Binary classification |
| Output Range | Values sum to 1 across classes (0 to 1 each) | Independent values between 0 and 1 |
| Formula | exp(z_i) / Σ_j exp(z_j) | 1 / (1 + exp(-z)) |
| Derivative | Complex, involves Jacobian matrix | Simple, σ(z)(1 - σ(z)) |
| Common Activation Layer | Last layer of neural networks for multi-class tasks | Last layer of binary classifiers |
| Interpretation | Assigns relative confidence to each class | Assigns confidence for a positive class |

Introduction to Softmax and Sigmoid Functions

Softmax and sigmoid are activation functions used widely in neural networks for classification tasks. The sigmoid function outputs a value between 0 and 1, which can be read as the probability of the positive class in a two-class problem. The softmax function generalizes this idea to multi-class classification by converting a vector of raw scores (logits) into a probability distribution over the classes, so the outputs sum to 1.

Mathematical Foundations: Softmax vs Sigmoid

The softmax function transforms a vector of real numbers into a probability distribution: it applies the exponential function to each input and divides by the sum of the exponentials, so the outputs are positive and sum to one. This makes it the standard choice for multi-class classification. The sigmoid function is the logistic function 1/(1+e^(-x)), which squashes a single real input into the interval (0, 1) and treats each output independently of the others, which suits binary classification. Both functions flatten out for inputs of large magnitude, which affects gradient size and therefore convergence during training.
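The two definitions can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function names and the sample logits are assumptions made for the example, and subtracting the maximum logit is a common numerical-stability trick that the text above does not mention.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), branched to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return e / (1.0 + e)

def softmax(logits):
    """Exponentiate each logit and normalize by the sum of exponentials."""
    m = max(logits)  # shifting by the max leaves the result unchanged but avoids overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # illustrative logits
print(probs, sum(probs))          # the probabilities sum to 1 up to rounding
print(sigmoid(0.0))               # 0.5
```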

Activation Function Roles in Neural Networks

Softmax and Sigmoid activation functions serve distinct roles in neural networks, with Softmax primarily used in multi-class classification tasks by converting logits into probability distributions that sum to one, enabling clear class predictions. Sigmoid, on the other hand, is suitable for binary classification, outputting values between 0 and 1 that represent probabilities of the positive class, facilitating decision thresholds. Both functions critically influence gradient flow during backpropagation, impacting model convergence and predictive performance.
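The effect on gradient flow can be made concrete by writing out the derivatives. The notation here is an assumption for illustration: σ is the sigmoid, p_i is the i-th softmax output for logits z, and δ_ij is the Kronecker delta (1 when i = j, 0 otherwise).

$$
\sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr), \qquad
\frac{\partial p_i}{\partial z_j} = p_i\,(\delta_{ij} - p_j), \quad \text{where } p_i = \frac{e^{z_i}}{\sum_k e^{z_k}}
$$

The sigmoid derivative is a single scalar per output, while the softmax derivative is a full Jacobian matrix because every output depends on every logit. In both cases the entries shrink toward zero as the outputs approach 0 or 1.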

Output Interpretation: Probabilities with Softmax vs Sigmoid

Softmax outputs a probability distribution across multiple classes, ensuring the sum of all probabilities equals one, making it ideal for multi-class classification problems. Sigmoid produces independent probabilities for each class, ranging from 0 to 1, without enforcing a collective sum constraint, suitable for binary classification or multi-label scenarios. Understanding this distinction is crucial for model design, as choosing Softmax allows interpreting outputs as exclusive class probabilities, while Sigmoid treats each output independently.
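A small sketch shows the difference on the same set of scores. The logits are made-up values and the helper names are assumptions for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, -1.0]  # illustrative raw scores for three outputs

softmax_probs = softmax(logits)               # one distribution over three classes
sigmoid_probs = [sigmoid(z) for z in logits]  # three independent probabilities

print(softmax_probs, sum(softmax_probs))  # sums to 1 up to rounding
print(sigmoid_probs, sum(sigmoid_probs))  # no sum constraint; here the total exceeds 1
```

With softmax, raising one logit lowers the probability of every other class. With sigmoid, each output changes only when its own logit changes.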

Use Cases: When to Use Softmax or Sigmoid

Softmax is ideal for multi-class classification problems where the goal is to assign probabilities across multiple mutually exclusive classes, such as image recognition or natural language processing tasks involving multiple categories. Sigmoid functions work best for binary classification problems or multi-label classification, where each label is independent and can be true or false simultaneously, like email spam detection or medical diagnosis. Choosing Softmax or Sigmoid depends on the nature of the output labels--mutually exclusive versus independent--and the required probability distribution over the classes.
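The two cases lead to different decision rules, sketched below. The function names, class names, label names, and the 0.5 threshold are all hypothetical choices for illustration; in practice the threshold is usually tuned per task.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_exclusive(logits, class_names):
    """Mutually exclusive classes: return the single highest-scoring class.

    Softmax preserves the ordering of the logits, so the argmax of the
    logits is also the argmax of the softmax probabilities.
    """
    best = max(range(len(logits)), key=lambda i: logits[i])
    return class_names[best]

def predict_multilabel(logits, label_names, threshold=0.5):
    """Independent labels: keep every label whose sigmoid probability clears the threshold."""
    return [name for z, name in zip(logits, label_names) if sigmoid(z) >= threshold]

print(predict_exclusive([0.2, 3.1, -1.0], ["cat", "dog", "bird"]))          # dog
print(predict_multilabel([1.5, -0.3, 0.8], ["urgent", "spam", "billing"]))  # ['urgent', 'billing']
```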

Multi-class vs Binary Classification Explained

Softmax function is primarily used in multi-class classification tasks as it converts output logits into probabilities distributed across multiple classes, ensuring they sum to one. Sigmoid function, on the other hand, is suited for binary classification by mapping outputs to a probability between 0 and 1 for a single class. Softmax enables mutually exclusive class predictions, while sigmoid supports independent, non-exclusive class predictions in multi-label problems.
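The link between the two settings can be checked numerically: a softmax over exactly two logits gives the same probability as a sigmoid applied to their difference. The sketch below uses arbitrary example logits and assumed helper names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

a, b = 2.0, 0.5  # illustrative logits for class 0 and class 1

p_class0_softmax = softmax([a, b])[0]
p_class0_sigmoid = sigmoid(a - b)  # only the difference of the logits matters

print(p_class0_softmax, p_class0_sigmoid)  # the two values agree up to rounding
```

This is why a binary classifier needs only one sigmoid output rather than a two-way softmax.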

Computational Complexity and Efficiency

Softmax computes one exponential per class and then divides by the sum over all classes, so every output depends on every logit. Sigmoid is element-wise: each output needs a single exponential and no reduction across classes. Both scale linearly with the number of outputs, but the softmax normalization adds a reduction step and couples the outputs, so sigmoid is slightly cheaper for binary or multi-label problems. In large-scale multi-class classification, softmax provides an interpretable probability distribution, but the normalization over a very large number of classes costs memory and compute, which can affect latency in real-time systems.

Challenges and Limitations of Each Function

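Softmax becomes expensive when the number of output classes is very large, because every class contributes an exponential to the normalizing sum. Its gradients also shrink when one logit dominates and the outputs saturate near 0 or 1. Sigmoid saturates for inputs of large magnitude, where its derivative approaches zero; this vanishing gradient hinders training when sigmoid is used in the hidden layers of deep networks. Because sigmoid outputs are not normalized, applying them to a mutually exclusive multi-class problem yields scores that do not sum to 1 and cannot be read as a single distribution over the classes. Neither function makes a model more expressive on its own: the complexity of the decision boundary comes from the layers that produce the logits, so a weak model needs changes there rather than a different output activation.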
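Sigmoid saturation is easy to see by evaluating the derivative σ(z)(1 - σ(z)) at a few points. This is a minimal sketch; the function names and the sample inputs are chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid: s * (1 - s), where s = sigmoid(z)."""
    s = sigmoid(z)
    return s * (1.0 - s)

# The gradient peaks at 0.25 for z = 0 and decays quickly as |z| grows.
for z in [0.0, 2.0, 5.0, 10.0]:
    print(z, sigmoid_grad(z))
```

At z = 10 the gradient is already below 1e-4, so a unit whose input sits that far from zero passes back almost no learning signal.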

Real-world Applications in AI Systems

Softmax suits multi-class classification problems such as image recognition, where normalized probabilities let a system choose among multiple categories. Sigmoid suits binary classification tasks such as spam detection or disease diagnosis, where the output is the probability of one of two possible states. A single architecture can also combine both, using a softmax output where exactly one category applies and sigmoid outputs where labels are independent.

Choosing the Right Activation: Best Practices

Choose Softmax activation for multi-class classification tasks where outputs represent mutually exclusive probabilities, as it normalizes outputs into a probability distribution over classes. Opt for Sigmoid activation in binary classification or multi-label problems, providing independent probabilities for each class without enforcing exclusivity. Evaluate model requirements and output interpretation carefully to select the activation function that aligns with your neural network's learning objectives and performance metrics.

techiny.com