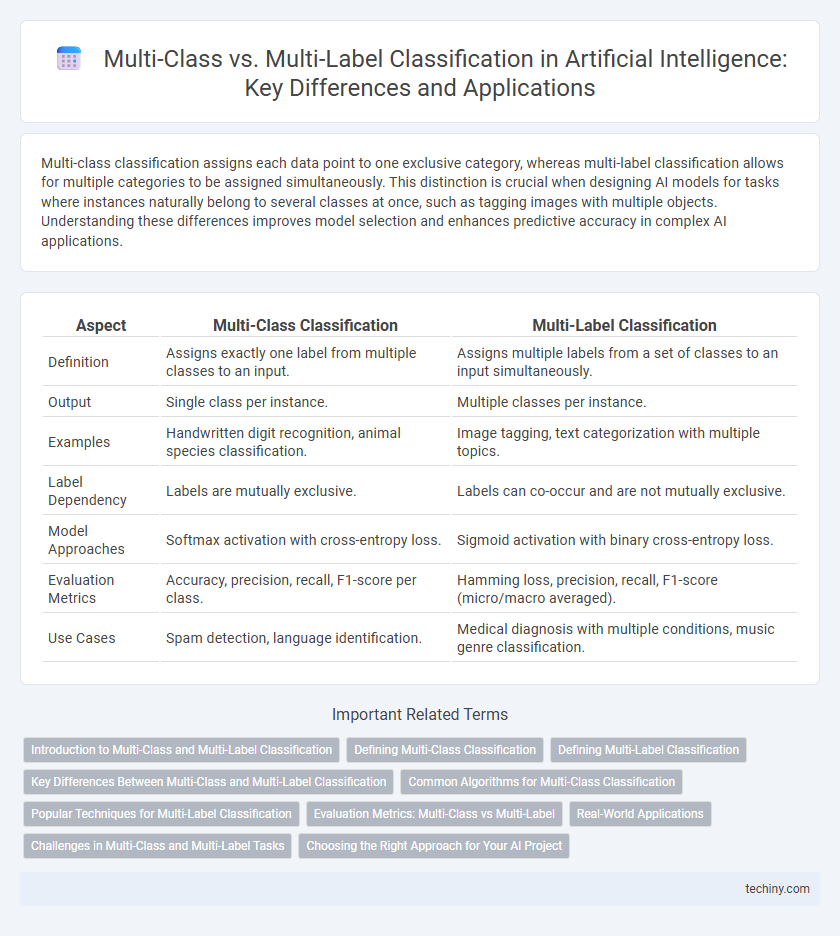

Multi-class classification assigns each data point to exactly one category, whereas multi-label classification allows several categories to be assigned at once. The distinction matters when instances naturally belong to several classes, such as an image that contains multiple objects. Knowing which setting applies guides the choice of output layer, loss function, and evaluation metrics.

Table of Comparison

| Aspect | Multi-Class Classification | Multi-Label Classification |
|---|---|---|
| Definition | Assigns exactly one label from multiple classes to an input. | Assigns multiple labels from a set of classes to an input simultaneously. |
| Output | Single class per instance. | Multiple classes per instance. |
| Examples | Handwritten digit recognition, animal species classification. | Image tagging, text categorization with multiple topics. |
| Label Dependency | Labels are mutually exclusive. | Labels can co-occur and are not mutually exclusive. |
| Model Approaches | Softmax activation with cross-entropy loss. | Sigmoid activation with binary cross-entropy loss. |
| Evaluation Metrics | Accuracy, precision, recall, F1-score per class. | Hamming loss, precision, recall, F1-score (micro/macro averaged). |
| Use Cases | Spam detection, language identification. | Medical diagnosis with multiple conditions, music genre classification. |
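
The "Model Approaches" row can be made concrete with a small sketch. The class names, raw scores, and the 0.5 threshold below are illustrative assumptions, not values from any particular model. Softmax makes the classes compete for a single probability mass, while sigmoid scores each label on its own.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1 (multi-class)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(logits):
    """Score each label independently in the range (0, 1) (multi-label)."""
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

classes = ["cat", "dog", "bird"]  # hypothetical class names
logits = [2.0, 1.0, -1.0]         # hypothetical raw model outputs

# Multi-class: pick the single most probable class.
probs = softmax(logits)
predicted_class = classes[probs.index(max(probs))]

# Multi-label: keep every label whose own probability clears the threshold.
threshold = 0.5  # assumed cut-off; in practice it is often tuned per label
label_probs = sigmoid(logits)
predicted_labels = [c for c, p in zip(classes, label_probs) if p >= threshold]

print(predicted_class)
print(predicted_labels)
```

With the same raw scores, the multi-class decision returns one class, while the multi-label decision can return zero, one, or several labels.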

Introduction to Multi-Class and Multi-Label Classification

Multi-class classification assigns a single label from several possible categories to each input and is common in image recognition and text categorization. Multi-label classification, in contrast, allows each input to carry multiple labels simultaneously, which is essential for applications such as tagging content with multiple attributes or predicting multiple diseases from patient data. Understanding the distinction between the two is crucial for selecting appropriate machine learning models and designing effective algorithms.

Defining Multi-Class Classification

Multi-class classification assigns each data point to exactly one class out of three or more mutually exclusive categories; with only two categories the task reduces to binary classification. Each instance receives a single class label based on its feature patterns, so the model only has to choose the most likely class rather than decide on each class separately. This contrasts with multi-label classification, where instances can belong to multiple classes simultaneously, and makes multi-class classification the natural fit for problems that require distinct, non-overlapping predictions.

Defining Multi-Label Classification

Multi-label classification assigns multiple labels to a single instance, unlike multi-class classification, which assigns only one label per instance. This method is essential in fields like text categorization, image annotation, and bioinformatics, where an input can belong to several categories simultaneously. Common problem transformation methods include binary relevance, classifier chains, and label powerset. Binary relevance treats every label independently, whereas classifier chains and label powerset can capture dependencies between labels, which may improve prediction accuracy when labels tend to co-occur.
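
As a rough illustration of how multi-label targets are usually represented, the sketch below encodes label sets as multi-hot vectors and then applies the label powerset idea, where every distinct label combination becomes one class. The topic names and documents are made up for the example.

```python
label_space = ["politics", "sports", "technology"]  # hypothetical topics

def to_multi_hot(labels, label_space):
    """Encode a set of labels as a 0/1 vector with one position per label."""
    return [1 if name in labels else 0 for name in label_space]

def label_powerset(Y):
    """Map each distinct label combination to one class id (label powerset)."""
    combo_to_class = {}
    class_ids = []
    for row in Y:
        key = tuple(row)
        if key not in combo_to_class:
            combo_to_class[key] = len(combo_to_class)  # next unused class id
        class_ids.append(combo_to_class[key])
    return class_ids, combo_to_class

# Hypothetical documents; an empty set means that no topic applies.
documents = [{"politics", "technology"}, {"sports"}, set(), {"politics", "technology"}]

Y = [to_multi_hot(doc, label_space) for doc in documents]
class_ids, combo_to_class = label_powerset(Y)

print(Y)
print(class_ids)
```

After the powerset transformation, any multi-class learner can be trained on `class_ids`, at the cost of one class per label combination seen in the data.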

Key Differences Between Multi-Class and Multi-Label Classification

Multi-class classification assigns a single label from several possible categories to each input, whereas multi-label classification can assign several labels to the same input. In multi-class problems the classes are mutually exclusive, so each instance belongs to exactly one class, while multi-label problems allow overlapping classes. Plain accuracy is a natural summary for multi-class predictions, but in the multi-label setting a prediction can be partially correct, so metrics such as Hamming loss and micro- or macro-averaged precision, recall, and F1-score are more informative.

Common Algorithms for Multi-Class Classification

Common algorithms for multi-class classification include decision trees, support vector machines (SVM), k-nearest neighbors (KNN), and neural networks, each capable of assigning input data to one of several predefined categories. Decomposition strategies such as one-vs-rest (OvR) and one-vs-one (OvO) extend inherently binary learners such as SVMs to multi-class problems. Ensemble methods such as random forests and gradient boosting often improve accuracy and robustness by combining many decision trees.
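
The two decomposition strategies can be sketched without any library. The binary learner below is a toy centroid-based scorer that stands in for an SVM, and the data points and class names are invented for the example; only the OvR and OvO wiring is the point.

```python
from itertools import combinations

def centroid(points):
    n = len(points)
    return [sum(p[d] for p in points) / n for d in range(len(points[0]))]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train_binary(X, y):
    """Toy binary learner (stand-in for an SVM); a score above 0 means 'positive'."""
    pos = centroid([x for x, t in zip(X, y) if t == 1])
    neg = centroid([x for x, t in zip(X, y) if t == 0])
    return lambda x: sq_dist(x, neg) - sq_dist(x, pos)

def fit_ovr(X, labels):
    """One-vs-rest: one binary model per class (K models)."""
    return {c: train_binary(X, [1 if lab == c else 0 for lab in labels])
            for c in sorted(set(labels))}

def predict_ovr(models, x):
    # Choose the class whose "this class vs. the rest" score is highest.
    return max(models, key=lambda c: models[c](x))

def fit_ovo(X, labels):
    """One-vs-one: one binary model per pair of classes (K*(K-1)/2 models)."""
    models = {}
    for a, b in combinations(sorted(set(labels)), 2):
        pairs = [(x, lab) for x, lab in zip(X, labels) if lab in (a, b)]
        X_ab = [x for x, _ in pairs]
        y_ab = [1 if lab == a else 0 for _, lab in pairs]
        models[(a, b)] = train_binary(X_ab, y_ab)
    return models

def predict_ovo(models, x):
    # Each pairwise model casts one vote; the class with the most votes wins.
    votes = {}
    for (a, b), score in models.items():
        winner = a if score(x) > 0 else b
        votes[winner] = votes.get(winner, 0) + 1
    return max(votes, key=votes.get)

# Hypothetical 2-D data with three well-separated classes.
X = [[0, 0], [1, 0], [0, 1], [10, 0], [9, 0], [10, 1], [0, 10], [1, 10], [0, 9]]
labels = ["A", "A", "A", "B", "B", "B", "C", "C", "C"]

ovr = fit_ovr(X, labels)
ovo = fit_ovo(X, labels)
print(predict_ovr(ovr, [9, 1]), predict_ovo(ovo, [9, 1]))
```

With three classes both strategies happen to train three models. The counts diverge as the number of classes grows, since OvO needs one model per pair of classes.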

Popular Techniques for Multi-Label Classification

Popular techniques for multi-label classification include problem transformation methods like Binary Relevance, which treats each label as an independent binary classification task, and Classifier Chains, which account for label correlations by linking classifiers in a chain so that each one also sees the labels handled earlier in the chain. Algorithm adaptation approaches, such as Multi-Label k-Nearest Neighbors (ML-kNN) and neural network architectures designed for multi-label outputs, handle multiple labels directly within a single model. Ensemble methods, such as ensembles of classifier chains trained with different label orders, combine multiple classifiers to improve prediction accuracy and reduce sensitivity to any single modeling choice.
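
The difference between Binary Relevance and Classifier Chains is easiest to see in the training data each per-label classifier receives. The sketch below only builds those datasets; the function names, feature values, and label matrix are assumptions for illustration, and any binary learner could be trained on the result.

```python
def binary_relevance_datasets(X, Y):
    """One (features, target) pair per label; every classifier sees the same X."""
    n_labels = len(Y[0])
    return [(X, [row[j] for row in Y]) for j in range(n_labels)]

def classifier_chain_datasets(X, Y, order=None):
    """One pair per label; features grow by the labels earlier in the chain."""
    n_labels = len(Y[0])
    order = list(range(n_labels)) if order is None else list(order)
    datasets = []
    for position, j in enumerate(order):
        previous = order[:position]  # labels already handled by the chain
        X_aug = [list(x) + [y_row[k] for k in previous] for x, y_row in zip(X, Y)]
        datasets.append((X_aug, [row[j] for row in Y]))
    return datasets

# Hypothetical data: three instances, two features, three labels.
X = [[0.2, 1.0], [0.9, 0.1], [0.5, 0.5]]
Y = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]

br = binary_relevance_datasets(X, Y)
cc = classifier_chain_datasets(X, Y)

print([len(features[0]) for features, _ in br])  # feature width per label
print([len(features[0]) for features, _ in cc])
```

During training the chain uses the true earlier labels, as above. At prediction time those are unknown, so each classifier is typically fed the predictions of the classifiers before it.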

Evaluation Metrics: Multi-Class vs Multi-Label

Evaluation metrics for multi-class classification commonly include accuracy, precision, recall, and F1-score calculated per class, with overall performance summarized via macro, micro, or weighted averages. Multi-label classification requires specialized metrics such as Hamming loss, subset accuracy, and label-based F1-scores to handle the presence of multiple concurrent labels per instance. Evaluating multi-label models also involves ranking-based metrics like average precision and coverage error, which assess the quality of predicted label rankings and partial label matches.
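
A few of these multi-label metrics are simple enough to write out by hand. The sketch below uses made-up predictions for three instances and three labels; the function names are chosen for this example, and libraries such as scikit-learn offer maintained implementations of the same ideas.

```python
def hamming_loss(Y_true, Y_pred):
    """Fraction of individual label decisions that are wrong."""
    total = sum(len(row) for row in Y_true)
    wrong = sum(t != p for tr, pr in zip(Y_true, Y_pred) for t, p in zip(tr, pr))
    return wrong / total

def subset_accuracy(Y_true, Y_pred):
    """Fraction of instances whose whole label set is predicted exactly."""
    return sum(tr == pr for tr, pr in zip(Y_true, Y_pred)) / len(Y_true)

def label_counts(Y_true, Y_pred, j):
    """True positives, false positives, and false negatives for label j."""
    tp = fp = fn = 0
    for tr, pr in zip(Y_true, Y_pred):
        if pr[j] == 1 and tr[j] == 1:
            tp += 1
        elif pr[j] == 1 and tr[j] == 0:
            fp += 1
        elif pr[j] == 0 and tr[j] == 1:
            fn += 1
    return tp, fp, fn

def f1(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0  # define F1 as 0 when nothing to score

def macro_f1(Y_true, Y_pred):
    """Average of per-label F1 scores; every label counts equally."""
    n_labels = len(Y_true[0])
    return sum(f1(*label_counts(Y_true, Y_pred, j)) for j in range(n_labels)) / n_labels

def micro_f1(Y_true, Y_pred):
    """F1 over counts pooled across labels; frequent labels weigh more."""
    n_labels = len(Y_true[0])
    counts = [label_counts(Y_true, Y_pred, j) for j in range(n_labels)]
    tp, fp, fn = (sum(c[i] for c in counts) for i in range(3))
    return f1(tp, fp, fn)

# Hypothetical ground truth and predictions (rows = instances, columns = labels).
Y_true = [[1, 0, 1], [0, 1, 0], [1, 1, 0]]
Y_pred = [[1, 0, 0], [0, 1, 0], [1, 0, 1]]

print(hamming_loss(Y_true, Y_pred), subset_accuracy(Y_true, Y_pred))
print(macro_f1(Y_true, Y_pred), micro_f1(Y_true, Y_pred))
```

In this example only one of three instances is matched exactly, yet six of the nine individual label decisions are correct, which is why subset accuracy is much stricter than Hamming loss.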

Real-World Applications

Multi-class classification assigns each instance to a single category, making it ideal for applications like image recognition where an object belongs to only one class, such as identifying animal species in wildlife monitoring. Multi-label classification allows multiple categories per instance, critical for real-world tasks like disease diagnosis, where a patient may have several concurrent conditions, or in text categorization, where a document can belong to multiple topics simultaneously. Leveraging these methods enhances AI's capability in complex environments such as autonomous driving, medical imaging, and multimedia content tagging.

Challenges in Multi-Class and Multi-Label Tasks

Multi-class classification challenges include managing class imbalance and the difficulty of distinguishing between numerous, mutually exclusive categories, which can lead to increased model complexity and reduced accuracy. Multi-label classification faces the challenge of capturing label dependencies and handling combinatorial explosion due to the exponential number of possible label sets, requiring more sophisticated architectures and training strategies. Both tasks demand careful feature engineering and evaluation metrics tailored to their distinct output structures to improve prediction performance.
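
The combinatorial explosion is easy to quantify: with L labels, each one is either present or absent, so there are 2^L possible label sets. The short sketch below compares that upper bound with the number of label sets that actually occur in a small, made-up label matrix.

```python
def possible_label_sets(n_labels):
    """Each label is either on or off, so there are 2**n_labels combinations."""
    return 2 ** n_labels

def observed_label_sets(Y):
    """Number of distinct label combinations that appear in the data."""
    return len({tuple(row) for row in Y})

# Hypothetical multi-hot label matrix (rows = instances, columns = labels).
Y = [[1, 0, 1], [0, 1, 0], [1, 0, 1], [1, 1, 0]]

print(possible_label_sets(len(Y[0])), observed_label_sets(Y))
print(possible_label_sets(20))  # 20 labels already allow over a million sets
```

Real datasets usually contain only a small fraction of the possible combinations, which is one reason methods that treat every combination as its own class can struggle with rare or unseen label sets.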

Choosing the Right Approach for Your AI Project

Selecting the appropriate classification technique is crucial to the success of an AI project. Multi-class classification fits problems where every instance belongs to exactly one category, such as image recognition tasks in which each image shows a single class. Multi-label classification suits use cases such as text categorization, where a document can cover several topics at once. Evaluating the problem domain and the data characteristics, in particular whether any instance can legitimately carry more than one label, helps in choosing a method that balances complexity, accuracy, and computational efficiency.
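
One quick data check is to count how many labels each annotated instance carries. The helper below is a hypothetical heuristic, not a rule: it suggests the multi-class setting only when every instance has exactly one label, and it also reports the average number of labels per instance.

```python
def suggest_task(annotations):
    """Suggest a problem type from per-instance label lists (heuristic only)."""
    sizes = [len(set(labels)) for labels in annotations]
    cardinality = sum(sizes) / len(sizes)  # average number of labels per instance
    if all(size == 1 for size in sizes):
        return "multi-class", cardinality
    # Instances with zero or several labels do not fit the one-label assumption.
    return "multi-label", cardinality

# Hypothetical annotation sets.
single = [["cat"], ["dog"], ["bird"]]
mixed = [["cat", "dog"], ["dog"], []]

print(suggest_task(single))
print(suggest_task(mixed))
```

A dataset in which every instance happens to have one label can still describe a multi-label problem if the domain allows several, so the result is best read together with knowledge of how the data was annotated.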

Multi-Class Classification vs Multi-Label Classification Infographic

techiny.com
