Spiking Neural Networks (SNNs) emulate biological neural activity by processing information through discrete spikes, which can improve energy efficiency and temporal precision compared to traditional Feedforward Neural Networks (FNNs) that rely on continuous activations. Because they are event-driven, SNNs suit low-power applications and temporal data processing, whereas FNNs offer simpler and faster training for static pattern recognition tasks. Advances in neuromorphic hardware continue to make SNNs more practical, positioning them as promising candidates for energy-efficient AI systems.

Table of Comparison

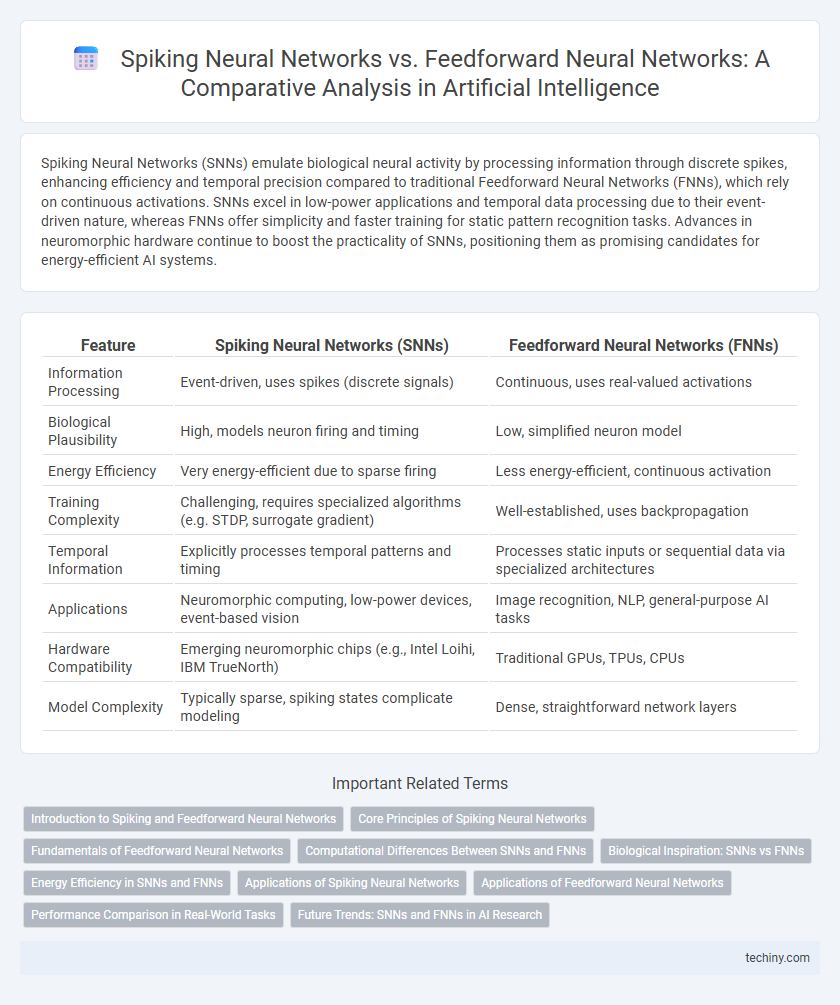

| Feature | Spiking Neural Networks (SNNs) | Feedforward Neural Networks (FNNs) |
|---|---|---|
| Information Processing | Event-driven, uses spikes (discrete signals) | Continuous, uses real-valued activations |
| Biological Plausibility | High, models neuron firing and timing | Low, simplified neuron model |
| Energy Efficiency | Potentially very energy-efficient due to sparse firing, especially on neuromorphic hardware | Less energy-efficient, computes dense activations for every input |
| Training Complexity | Challenging, requires specialized algorithms (e.g., STDP, surrogate gradients) | Well-established, uses backpropagation |
| Temporal Information | Explicitly processes temporal patterns and timing | No built-in notion of time; sequences need windowed inputs or specialized architectures |
| Applications | Neuromorphic computing, low-power devices, event-based vision | Image recognition, NLP, general-purpose AI tasks |
| Hardware Compatibility | Emerging neuromorphic chips (e.g., Intel Loihi, IBM TrueNorth) | Traditional GPUs, TPUs, CPUs |
| Model Complexity | Sparse activity, but stateful spiking dynamics complicate modeling | Dense, straightforward network layers |
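The Training Complexity row mentions surrogate gradients. A spike is produced by a step function, so its exact derivative is zero almost everywhere and plain backpropagation cannot adjust the weights. The sketch below is a minimal illustration, assuming a single neuron with potential `v = w * x`, a threshold of 1.0 and a squared-error loss; it swaps in a smooth "fast sigmoid" derivative during the backward pass only. All function names and the `slope` value are illustrative choices, not the API of any particular SNN library.

```python
def heaviside_spike(v: float, threshold: float = 1.0) -> float:
    """Forward pass: emit a spike (1.0) when the membrane potential reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def exact_spike_grad(v: float, threshold: float = 1.0) -> float:
    """True derivative of the step function: 0 wherever it is defined."""
    return 0.0


def surrogate_spike_grad(v: float, threshold: float = 1.0, slope: float = 10.0) -> float:
    """Fast-sigmoid surrogate: a smooth bump centred on the threshold (hypothetical slope)."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


def train_single_weight(w: float, x: float, target: float, lr: float = 1.0,
                        steps: int = 10, use_surrogate: bool = True) -> float:
    """Gradient descent on loss = (spike - target)**2 for a neuron with v = w * x."""
    for _ in range(steps):
        v = w * x
        s = heaviside_spike(v)
        dloss_ds = 2.0 * (s - target)
        ds_dv = surrogate_spike_grad(v) if use_surrogate else exact_spike_grad(v)
        w -= lr * dloss_ds * ds_dv * x  # chain rule: dL/dw = dL/ds * ds/dv * dv/dw
    return w


w_exact = train_single_weight(0.8, x=1.0, target=1.0, use_surrogate=False)
w_surr = train_single_weight(0.8, x=1.0, target=1.0, use_surrogate=True)
print(w_exact, heaviside_spike(w_exact))  # weight never moves, neuron stays silent
print(w_surr, heaviside_spike(w_surr))    # weight crosses threshold, neuron fires
```

The forward pass still emits binary spikes; only the gradient is approximated, which is why this family of methods lets SNNs reuse backpropagation-style training.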

Introduction to Spiking and Feedforward Neural Networks

Spiking Neural Networks (SNNs) mimic the brain's natural processing by transmitting information via discrete spikes over time, enabling energy-efficient computations and temporal data handling. Feedforward Neural Networks (FNNs) process input data through layers in a unidirectional flow without temporal dynamics, excelling in static pattern recognition tasks. SNNs offer advantages in modeling biological neural activity and temporal sequences, whereas FNNs are typically simpler and faster to train for traditional machine learning applications.

Core Principles of Spiking Neural Networks

Spiking Neural Networks (SNNs) emulate the temporal dynamics of biological neurons through discrete spikes, enabling efficient processing of temporal information and sparse data representation. Unlike Feedforward Neural Networks (FNNs), which rely on continuous activation functions and static input-output mappings, SNNs use event-driven communication: each neuron integrates incoming spikes into a membrane potential and fires when that potential crosses a threshold. Core principles of SNNs include this integrate-and-fire behavior, spike timing-dependent plasticity (STDP), a local learning rule that adjusts a synapse according to the relative timing of pre- and postsynaptic spikes, and intrinsic temporal encoding. Together these support energy efficiency and complex spatiotemporal computation.
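Two of these principles can be sketched in a few lines. This is a minimal sketch, assuming a discrete-time leaky integrate-and-fire (LIF) neuron that resets to zero after each spike, and the common pair-based exponential form of STDP. The decay factor `beta`, the threshold, and the STDP amplitudes and time constants are illustrative values, not parameters given in this article.

```python
import math
from typing import List


def simulate_lif(currents: List[float], beta: float = 0.9, threshold: float = 1.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron; returns the time steps of its spikes."""
    v = 0.0
    spike_times = []
    for t, i_in in enumerate(currents):
        v = beta * v + i_in      # leak a fraction of the potential, then integrate the input
        if v >= threshold:       # fire once the membrane potential crosses threshold
            spike_times.append(t)
            v = 0.0              # reset to rest after the spike
    return spike_times


def stdp_delta_w(t_pre: float, t_post: float, a_plus: float = 0.01, a_minus: float = 0.012,
                 tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP: weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: potentiate (causal pairing)
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: depress (anti-causal pairing)
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


print(simulate_lif([0.3] * 12))   # [3, 7, 11]: regular firing under strong input
print(simulate_lif([0.05] * 50))  # []: leak keeps the potential below threshold (it settles near 0.5)
print(stdp_delta_w(10.0, 15.0), stdp_delta_w(15.0, 10.0))  # small increase, small decrease
```

The leak is what separates an LIF neuron from a plain integrator: a weak input never accumulates enough charge to fire, so output spikes, and the work they trigger downstream, occur only for sufficiently strong or well-timed input.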

Fundamentals of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) consist of neurons organized in layers, where information flows in one direction from input to output without cycles or loops. Each neuron computes a weighted sum of its inputs plus a bias and passes the result through a nonlinear activation function, which lets the network learn complex mappings from data. This simple structure makes FNNs widely used for tasks like image recognition, classification, and regression in artificial intelligence.
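The weighted-sum-plus-activation rule translates directly into code. The sketch below is an illustrative forward pass in plain Python, assuming fully connected layers and hand-picked (not trained) weights that compute XOR; the names `dense`, `forward` and `xor_net` are hypothetical.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Layer = Tuple[List[Vector], Vector, Callable[[float], float]]


def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    return z


def dense(inputs: Vector, weights: List[Vector], biases: Vector,
          activation: Callable[[float], float]) -> Vector:
    """One fully connected layer; weights[j] holds the incoming weights of neuron j."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x: Vector, layers: List[Layer]) -> Vector:
    """Propagate the input through each layer in order, with no cycles."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hand-picked weights that compute XOR with one hidden layer.
xor_net: List[Layer] = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),  # h1 = relu(a + b), h2 = relu(a + b - 1)
    ([[1.0, -2.0]], [0.0], identity),               # y = h1 - 2 * h2
]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((a, b), forward([a, b], xor_net))
```

XOR is a useful check because no single linear layer can compute it; the ReLU in the hidden layer is what makes the mapping possible.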

Computational Differences Between SNNs and FNNs

Spiking Neural Networks (SNNs) differ computationally from Feedforward Neural Networks (FNNs) by processing information as discrete spike events, enabling event-driven, time-dependent computation that closely mimics biological neurons. FNNs rely on continuous-valued activations and dense matrix multiplications, which typically require more arithmetic, and therefore more energy, than the sparse activity of SNNs, where an incoming spike triggers only additions of the corresponding synaptic weights. Temporal coding in SNNs allows asynchronous processing and potential energy savings, making them suitable for neuromorphic hardware and real-time applications where power constraints are critical.
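To make the dense-versus-sparse contrast concrete, here is a minimal sketch, assuming binary spikes within a single time step and a weight matrix indexed as `weights[input][output]`. It compares a frame-based pass, which performs a multiply-accumulate for every weight, with an event-driven pass that only adds the weights of inputs that spiked. Operation counts are a rough proxy and say nothing definitive about energy on any particular chip.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # weights[i][j]: synapse from input i to output j


def dense_pass(x: List[float], weights: Matrix) -> Tuple[List[float], int]:
    """Frame-based pass: one multiply-accumulate (MAC) per weight, whatever the input."""
    out = [0.0] * len(weights[0])
    macs = 0
    for i, xi in enumerate(x):
        for j, w in enumerate(weights[i]):
            out[j] += w * xi
            macs += 1
    return out, macs


def event_driven_pass(spikes: List[int], weights: Matrix) -> Tuple[List[float], int]:
    """Event-driven pass: only inputs that spiked trigger work, and each costs an add."""
    out = [0.0] * len(weights[0])
    adds = 0
    for i, s in enumerate(spikes):
        if s:  # silent inputs are skipped entirely
            for j, w in enumerate(weights[i]):
                out[j] += w
                adds += 1
    return out, adds


weights = [[0.5, -0.2, 0.1],
           [0.3, 0.3, 0.3],
           [-0.4, 0.2, 0.0],
           [0.1, 0.6, -0.5]]
spikes = [1, 0, 0, 1]  # 2 of 4 inputs active in this time step

dense_out, macs = dense_pass([float(s) for s in spikes], weights)
event_out, adds = event_driven_pass(spikes, weights)
print(dense_out == event_out, macs, adds)  # same result, 12 MACs vs 6 adds
```

An SNN usually runs for several time steps per input, so the saving holds only while the total number of spikes across all steps stays well below the dense operation count.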

Biological Inspiration: SNNs vs FNNs

Spiking Neural Networks (SNNs) closely mimic biological neurons by transmitting information through discrete spikes, enabling temporal coding and more energy-efficient processing compared to Feedforward Neural Networks (FNNs), which use continuous activation functions and static signal propagation. SNNs' dynamic temporal behavior aligns with the brain's natural communication patterns, allowing for richer representation of time-dependent data. In contrast, FNNs lack this temporal dimension, relying on layer-by-layer processing that limits biological plausibility and temporal information handling.
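Temporal coding can be illustrated by contrasting two common ways of turning an analog value into spikes. In this sketch, assuming intensities in [0, 1], a fixed window of `num_steps`, and a linear latency mapping (all illustrative choices), rate coding conveys the value through how many spikes occur, while latency coding conveys it through when a single spike occurs. The function names are hypothetical.

```python
from typing import List


def rate_encode(value: float, num_steps: int) -> List[int]:
    """Rate coding: the value in [0, 1] sets the fraction of time steps that carry a spike."""
    spikes, acc = [], 0.0
    for _ in range(num_steps):
        acc += value               # accumulate intensity
        if acc >= 1.0:             # emit a spike each time a full unit has built up
            spikes.append(1)
            acc -= 1.0
        else:
            spikes.append(0)
    return spikes


def latency_encode(value: float, num_steps: int) -> List[int]:
    """Latency (time-to-first-spike) coding: stronger inputs spike earlier, at most once."""
    value = min(max(value, 0.0), 1.0)  # clamp to the assumed [0, 1] range
    spikes = [0] * num_steps
    if value > 0.0:
        t = round((1.0 - value) * (num_steps - 1))  # value 1.0 -> step 0
        spikes[t] = 1
    return spikes


print(rate_encode(0.25, 8))     # [0, 0, 0, 1, 0, 0, 0, 1]
print(latency_encode(0.25, 8))  # [0, 0, 0, 0, 0, 1, 0, 0]
print(latency_encode(0.9, 8))   # spikes earlier than the 0.25 input
```

The latency code carries the same value with a single spike, which hints at why timing-based codes are attractive for both biological realism and sparsity.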

Energy Efficiency in SNNs and FNNs

Spiking Neural Networks (SNNs) can be considerably more energy-efficient than traditional Feedforward Neural Networks (FNNs) because of their event-driven processing: neurons consume energy mainly when spike events occur. FNNs, by contrast, perform dense matrix multiplications on every input regardless of its content, whereas SNNs leverage sparse temporal coding to skip redundant computations. The savings depend on keeping spike activity sparse and the number of simulation time steps small, which makes SNNs particularly advantageous in low-power embedded systems and on neuromorphic hardware designed to mimic brain-like operation.
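A back-of-the-envelope model makes the dependence on sparsity and time steps explicit. This sketch assumes one fully connected layer, a uniform per-step spike rate, and hypothetical per-operation energies (`E_MAC_PJ`, `E_ADD_PJ`) chosen only for illustration, not measured on any device. Setting the two costs equal gives the break-even rate $r^* = E_{\text{MAC}} / (E_{\text{ADD}} \cdot T)$.

```python
# Hypothetical per-operation energies in picojoules, chosen only for illustration.
E_MAC_PJ = 4.0  # one multiply-accumulate in a dense layer
E_ADD_PJ = 1.0  # one accumulate triggered by an incoming spike


def fnn_layer_energy(n_in: int, n_out: int) -> float:
    """Dense layer: every input-output pair costs one MAC per inference."""
    return n_in * n_out * E_MAC_PJ


def snn_layer_energy(n_in: int, n_out: int, spike_rate: float, timesteps: int) -> float:
    """Spiking layer: each input spike costs one add per fan-out synapse."""
    expected_spikes = n_in * spike_rate * timesteps
    return expected_spikes * n_out * E_ADD_PJ


def break_even_rate(timesteps: int) -> float:
    """Per-step spike rate above which the spiking layer costs more than the dense one."""
    return E_MAC_PJ / (E_ADD_PJ * timesteps)


fnn = fnn_layer_energy(1000, 100)
snn = snn_layer_energy(1000, 100, spike_rate=0.05, timesteps=10)
print(f"FNN {fnn:.0f} pJ, SNN {snn:.0f} pJ, break-even rate {break_even_rate(10):.2f}")
```

The model deliberately ignores memory access and per-step neuron state updates, which often dominate on real hardware; it only shows why longer simulations and denser firing erode the advantage.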

Applications of Spiking Neural Networks

Spiking Neural Networks (SNNs) excel at energy-efficient processing of real-time sensory data, making them well suited to robotics, neuromorphic computing, and brain-machine interfaces. Their event-driven architecture enables low-latency pattern recognition in auditory and visual systems and can give them an edge over traditional Feedforward Neural Networks in dynamic environments. SNNs' capacity for temporal coding also benefits applications in speech recognition, autonomous vehicles, and wearable health monitoring devices.
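Event-based sensors report changes rather than full frames, which is what makes them a natural input for SNNs. The sketch below shows that idea as simple delta modulation, assuming a 1-D sampled signal and a fixed change threshold; it is an illustration of the principle, not a model of any specific sensor's circuitry, and `delta_encode` is a hypothetical name.

```python
from typing import List, Tuple


def delta_encode(samples: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Emit (time, polarity) events: +1 (ON) when the signal rises by `threshold`
    since the last event, -1 (OFF) when it falls by `threshold`."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events = []
    reference = samples[0]  # level at which the last event fired
    for t in range(1, len(samples)):
        x = samples[t]
        while x - reference >= threshold:  # large rises produce several ON events
            events.append((t, +1))
            reference += threshold
        while reference - x >= threshold:  # large falls produce several OFF events
            events.append((t, -1))
            reference -= threshold
    return events


signal = [0.0, 0.1, 0.75, 0.75, 0.75, 0.25]
print(delta_encode(signal, threshold=0.25))
# [(2, 1), (2, 1), (2, 1), (5, -1), (5, -1)]: the flat stretch produces no events
```

A static scene or steady signal generates no events at all, so downstream spiking neurons stay idle until something changes.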

Applications of Feedforward Neural Networks

Feedforward Neural Networks (FNNs) are extensively utilized in image recognition, natural language processing, and speech recognition due to their ability to approximate complex functions and handle large datasets efficiently. Their applications include facial recognition systems, text classification, and automated translation, where rapid and accurate predictions are crucial. FNNs excel in supervised learning tasks by propagating inputs through multiple layers to produce deterministic outputs, enabling effective classification and regression models in various AI domains.
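The supervised-learning loop behind these applications can be sketched with the smallest possible feedforward network: a single sigmoid neuron (logistic regression) trained by full-batch gradient descent on the logical AND function. The dataset, learning rate and epoch count are illustrative assumptions; deeper FNNs apply the same idea layer by layer through backpropagation.

```python
import math
from typing import List, Tuple

Sample = Tuple[Tuple[float, float], int]

AND_DATA: List[Sample] = [((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((1.0, 1.0), 1)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Sample], lr: float = 1.0, epochs: int = 5000) -> Tuple[List[float], float]:
    """Full-batch gradient descent on binary cross-entropy for one sigmoid neuron."""
    w, b = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for (x1, x2), y in data:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)  # forward pass
            err = p - y                             # dLoss/dz for sigmoid + cross-entropy
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
        b -= lr * grad_b / n
    return w, b


def predict(w: List[float], b: float, x: Tuple[float, float]) -> int:
    return 1 if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else 0


w, b = train(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])  # [0, 0, 0, 1]
```

The output for a given input is deterministic once training ends, which matches the classification and regression use cases described above.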

Performance Comparison in Real-World Tasks

Spiking Neural Networks (SNNs) can deliver better energy efficiency in real-world tasks such as sensory processing and event-based data analysis, using temporal spike patterns for sparse information coding. Feedforward Neural Networks (FNNs) excel in static-data applications, with faster inference and generally higher accuracy on benchmarks such as image classification, as well as on regression problems. While SNNs offer advantages in low-power embedded systems, FNNs remain dominant in traditional AI workloads thanks to mature training algorithms and hardware support.
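One reason FNNs often win on inference speed for static tasks is that a rate-coded SNN needs many time steps to represent an activation precisely. This sketch assumes the rate-coding view used in ANN-to-SNN conversion: an integrate-and-fire neuron with reset-by-subtraction, driven by a constant input, fires at a rate that approximates a ReLU clipped at the threshold, with an error below `threshold / num_steps`. Other coding schemes behave differently, and the function names are hypothetical.

```python
def if_rate(a: float, num_steps: int, threshold: float = 1.0) -> float:
    """Firing rate (spikes per step, scaled by threshold) of an integrate-and-fire
    neuron with reset-by-subtraction, driven by a constant input a."""
    v, spikes = 0.0, 0
    for _ in range(num_steps):
        v += a              # integrate the input (no leak)
        if v >= threshold:
            spikes += 1
            v -= threshold  # reset by subtraction keeps the residual charge
    return spikes * threshold / num_steps


def clipped_relu(a: float, threshold: float = 1.0) -> float:
    return min(max(a, 0.0), threshold)


for steps in (4, 16, 64, 256):
    err = abs(if_rate(0.3, steps) - clipped_relu(0.3))
    print(f"T={steps:4d}  |rate - ReLU| = {err:.4f}")
```

Each extra digit of precision costs roughly ten times more time steps, so accuracy and latency trade off directly in this scheme, whereas an FNN computes the activation in one pass.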

Future Trends: SNNs and FNNs in AI Research

Spiking Neural Networks (SNNs) are gaining momentum in AI research due to their energy-efficient, brain-inspired, event-driven processing, which contrasts with the dense computation of traditional Feedforward Neural Networks (FNNs). Future trends highlight the integration of neuromorphic hardware with SNNs to enhance real-time learning and low-power applications, while FNNs continue to advance with deeper architectures and improved training algorithms. Hybrid approaches that combine SNNs and FNNs aim to leverage the strengths of both models for more adaptive, scalable, and efficient artificial intelligence systems.

Spiking Neural Networks vs Feedforward Neural Networks Infographic

techiny.com