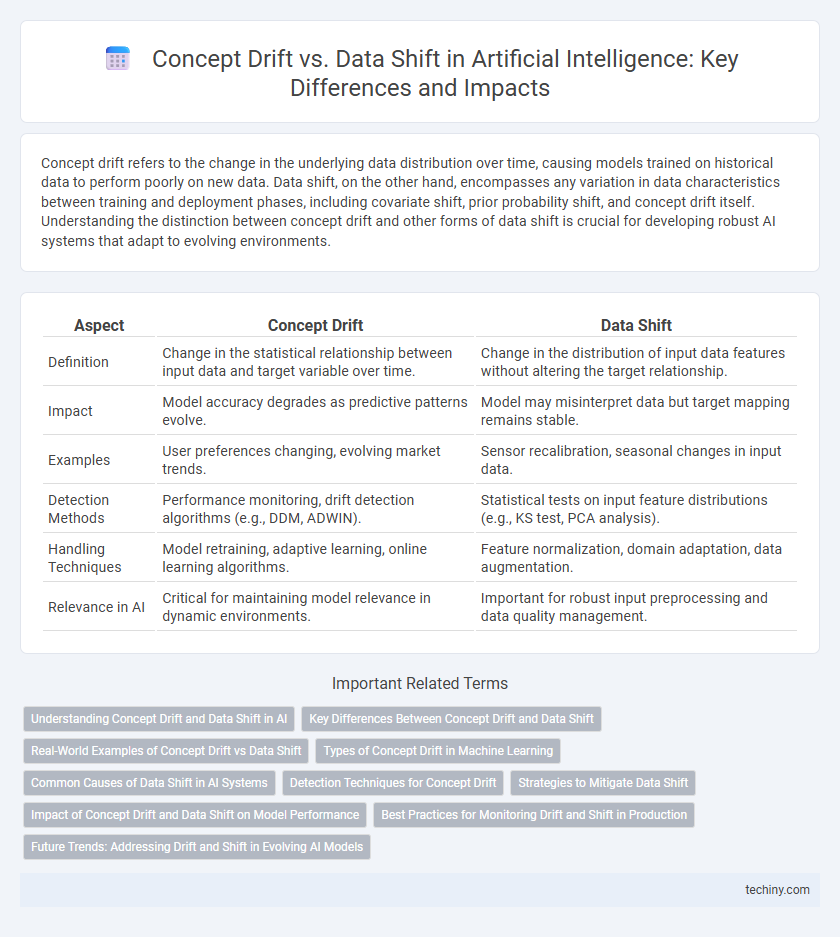

Concept drift refers to the change in the underlying data distribution over time, causing models trained on historical data to perform poorly on new data. Data shift, on the other hand, encompasses any variation in data characteristics between training and deployment phases, including covariate shift, prior probability shift, and concept drift itself. Understanding the distinction between concept drift and other forms of data shift is crucial for developing robust AI systems that adapt to evolving environments.

Table of Comparison

| Aspect | Concept Drift | Data Shift |

|---|---|---|

| Definition | Change in the statistical relationship between input data and target variable over time. | Change in the distribution of input data features without altering the target relationship. |

| Impact | Model accuracy degrades as predictive patterns evolve. | Model may misinterpret data but target mapping remains stable. |

| Examples | User preferences changing, evolving market trends. | Sensor recalibration, seasonal changes in input data. |

| Detection Methods | Performance monitoring, drift detection algorithms (e.g., DDM, ADWIN). | Statistical tests on input feature distributions (e.g., KS test, PCA analysis). |

| Handling Techniques | Model retraining, adaptive learning, online learning algorithms. | Feature normalization, domain adaptation, data augmentation. |

| Relevance in AI | Critical for maintaining model relevance in dynamic environments. | Important for robust input preprocessing and data quality management. |

Understanding Concept Drift and Data Shift in AI

Concept drift in AI refers to the change in the underlying data distribution that affects model performance over time, requiring continuous model adaptation. Data shift encompasses any variation in data distribution, including covariate shift, prior probability shift, and concept drift, impacting predictive accuracy. Effective AI systems implement monitoring and retraining strategies to detect and address both concept drift and data shifts, ensuring robust and reliable model outcomes.

Key Differences Between Concept Drift and Data Shift

Concept drift refers to changes in the underlying relationship between input data and target variables, causing predictive models to become less accurate over time. Data shift involves variations in the input data distribution without altering the target relationship, affecting model performance by introducing unfamiliar data patterns. Understanding these distinctions is crucial for developing adaptive AI systems that maintain robustness in dynamic environments.

Real-World Examples of Concept Drift vs Data Shift

Concept drift occurs when the underlying data distribution changes over time, such as evolving customer preferences in e-commerce recommendation systems, leading to decreased model accuracy. Data shift refers to discrepancies between training and deployment data, exemplified by medical imaging models trained on high-quality hospital scans but tested on lower-quality images from rural clinics. Real-world AI applications in finance, healthcare, and autonomous driving continuously face these challenges, requiring dynamic adaptation through retraining or online learning techniques to maintain performance.

Types of Concept Drift in Machine Learning

Concept drift in machine learning occurs when the statistical properties of the target variable change over time, affecting model accuracy. Types of concept drift include sudden drift, where changes happen abruptly; gradual drift, involving slow transitions between concepts; incremental drift, characterized by continuous small changes; and recurring drift, where previous concepts reappear periodically. Detecting and adapting to these drifts is essential for maintaining robust AI model performance in dynamic environments.

Common Causes of Data Shift in AI Systems

Data shift in AI systems commonly arises from changes in data collection methods, environmental conditions, or user behavior patterns, causing model performance degradation. Variations in sensor calibration, sampling bias, and evolving user preferences frequently contribute to discrepancies between training and operational data distributions. Identifying and addressing these shifts is crucial for maintaining model accuracy and robustness over time.

Detection Techniques for Concept Drift

Detection techniques for concept drift in artificial intelligence include statistical tests such as the Kolmogorov-Smirnov test and the Page-Hinkley test, which monitor changes in data distributions over time. Machine learning-based detectors, like drift detection methods (DDM) and adaptive windowing (ADWIN), dynamically adjust learning models to identify shifts in predictive performance. Ensemble approaches combine multiple detectors to improve sensitivity and robustness in recognizing concept drift compared to simple data shift detection.

Strategies to Mitigate Data Shift

Strategies to mitigate data shift include continuous monitoring of data distributions and implementing adaptive learning models that update in real-time. Employing techniques such as transfer learning, data augmentation, and domain adaptation helps maintain model accuracy despite evolving data patterns. Robust retraining pipelines and feedback loops enable timely adjustments to prevent performance degradation caused by shifts in input data.

Impact of Concept Drift and Data Shift on Model Performance

Concept drift and data shift significantly affect artificial intelligence model performance by causing degradation in prediction accuracy over time. Concept drift occurs when the relationship between input features and the target variable changes, leading to outdated model assumptions, while data shift involves changes in the input data distribution without altering the target concept. Addressing these issues through continuous model monitoring, retraining, and adaptive algorithms is crucial for maintaining reliable AI system outputs in dynamic environments.

Best Practices for Monitoring Drift and Shift in Production

Effective monitoring of concept drift and data shift in production requires continuous evaluation of model inputs and outputs using statistical tests such as Kullback-Leibler divergence and Population Stability Index (PSI). Employing automated drift detection algorithms like ADWIN or DDM alongside real-time data pipelines ensures timely identification of distributional changes. Integrating robust alerting systems and retraining protocols maintains model performance and reliability in dynamic environments.

Future Trends: Addressing Drift and Shift in Evolving AI Models

Future trends in addressing concept drift and data shift in evolving AI models emphasize adaptive learning algorithms that continuously update model parameters in real-time. Advanced techniques such as meta-learning and ensemble methods enhance robustness by detecting and accommodating changes in data distributions across various domains. Integration of automated monitoring systems with explainable AI further ensures transparency and resilience against performance degradation in dynamic environments.

Concept drift vs data shift Infographic

techiny.com

techiny.com