Balancing exploration and exploitation is a central problem in artificial intelligence. Exploration means trying new strategies or gathering new knowledge, while exploitation uses existing information to maximize performance. Effective AI systems alternate between the two as they learn and make decisions, and getting the balance right improves adaptability and long-term success in dynamic environments.

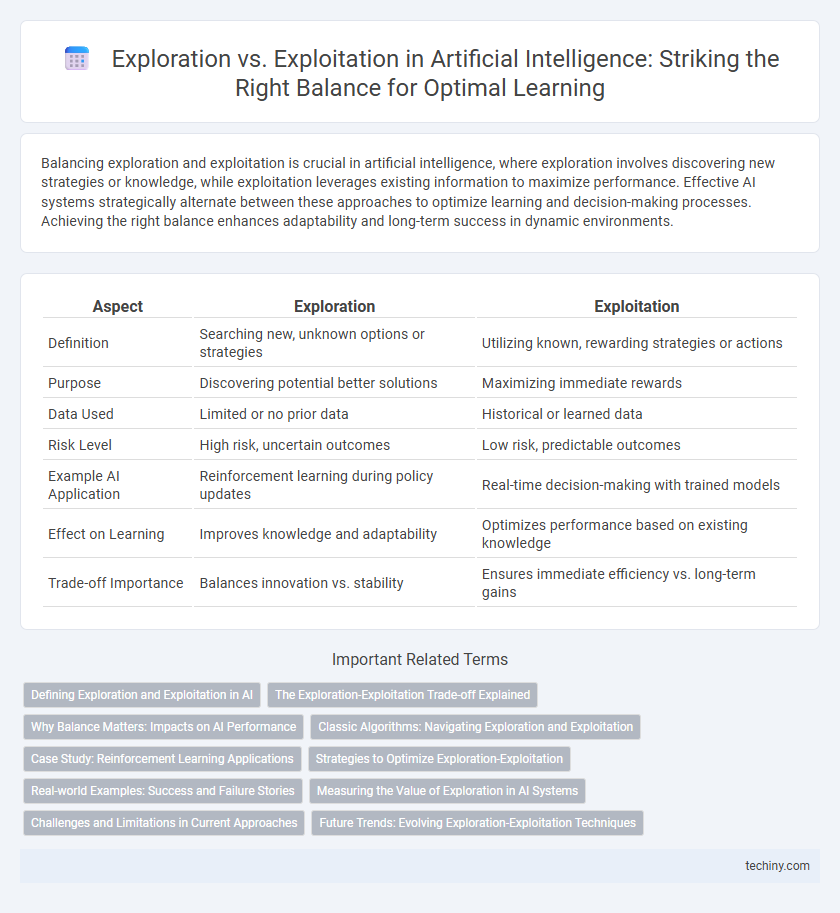

Table of Comparison

| Aspect | Exploration | Exploitation |
|---|---|---|
| Definition | Searching new, unknown options or strategies | Utilizing known, rewarding strategies or actions |
| Purpose | Discovering potential better solutions | Maximizing immediate rewards |
| Data Used | Limited or no prior data | Historical or learned data |
| Risk Level | High risk, uncertain outcomes | Low risk, predictable outcomes |
| Example AI Application | Reinforcement learning during policy updates | Real-time decision-making with trained models |
| Effect on Learning | Improves knowledge and adaptability | Optimizes performance based on existing knowledge |
| Trade-off Importance | Balances innovation vs. stability | Ensures immediate efficiency vs. long-term gains |

Defining Exploration and Exploitation in AI

Exploration in artificial intelligence refers to the process where algorithms seek out new information or strategies to improve decision-making in uncertain environments. Exploitation involves leveraging known data and learned policies to maximize immediate performance or rewards. Balancing exploration and exploitation is crucial for optimizing AI models, especially in reinforcement learning contexts where discovering novel solutions must be weighed against utilizing established knowledge.

The Exploration-Exploitation Trade-off Explained

The exploration-exploitation trade-off in artificial intelligence is the tension between exploring new actions that might yield better rewards and exploiting known actions that already yield high returns. Effective algorithms adjust this balance dynamically over time, which prevents premature convergence on suboptimal strategies. Understanding and managing the trade-off is central to long-term success in reinforcement learning, multi-armed bandits, and adaptive systems.

Why Balance Matters: Impacts on AI Performance

Balancing exploration and exploitation is crucial in artificial intelligence to optimize learning efficiency and decision-making accuracy. Overemphasizing exploration can lead to excessive resource consumption without guaranteed improvement, while excessive exploitation risks premature convergence on suboptimal solutions. Achieving an optimal balance enhances AI performance by enabling comprehensive knowledge acquisition and effective utilization of learned information.
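To make the cost of either extreme concrete, the following is a minimal simulation sketch, not taken from the original article. It assumes a hypothetical two-armed Bernoulli bandit with made-up payout rates, and the names `run_bandit`, `true_means`, and `epsilon` are illustrative. It compares a purely exploiting agent, a purely exploring agent, and one that mixes the two.

```python
import random

def run_bandit(epsilon, true_means, steps=5000, seed=0):
    """Play a Bernoulli bandit with a fixed exploration rate; return mean reward."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms          # pulls per arm
    estimates = [0.0] * n_arms     # running average reward per arm
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: pick any arm uniformly
        else:
            # exploit: best current estimate (ties resolve to the lowest index)
            arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # incremental mean
        total += reward
    return total / steps

# Hypothetical payout rates: arm 1 is better, but the agent does not know that.
means = [0.2, 0.8]
greedy = run_bandit(0.0, means)       # never explores, so it never tries arm 1
random_only = run_bandit(1.0, means)  # never exploits what it has learned
balanced = run_bandit(0.1, means)     # mostly exploits, occasionally explores
print(f"greedy={greedy:.2f} random={random_only:.2f} balanced={balanced:.2f}")
```

In this setup the purely greedy agent stays on the first arm it tries and never discovers the better one, which is premature convergence in miniature. The purely random agent keeps spending pulls on the worse arm. The mixed agent should end up with the highest average reward of the three.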

Classic Algorithms: Navigating Exploration and Exploitation

Classic methods in artificial intelligence, such as multi-armed bandit algorithms and Q-learning, balance exploration and exploitation by systematically trying uncertain actions while using learned knowledge to maximize rewards. The ε-greedy algorithm is a fundamental method in which the agent explores a random action with probability ε and exploits the best-known action otherwise. Strategies like Upper Confidence Bound (UCB) adjust exploration dynamically based on confidence intervals: each action's estimated value receives a bonus that shrinks as the action is tried more often, so under-sampled actions with high potential payoffs are prioritized.
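The two selection rules described above can be sketched as small functions. This is an illustrative sketch rather than a reference implementation: the function names and argument layout are assumptions, and the UCB variant shown is the common UCB1 form, which adds `sqrt(2 ln t / n)` to each arm's estimated value.

```python
import math
import random

def epsilon_greedy(estimates, epsilon, rng=random):
    """With probability epsilon pick a random arm, otherwise the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))
    return max(range(len(estimates)), key=lambda a: estimates[a])

def ucb1(estimates, counts, t):
    """Pick the arm maximizing estimate + sqrt(2 ln t / n); untried arms go first."""
    for arm, n in enumerate(counts):
        if n == 0:
            return arm  # every arm must be tried once before the bound is defined
    return max(
        range(len(estimates)),
        key=lambda a: estimates[a] + math.sqrt(2.0 * math.log(t) / counts[a]),
    )

# Arm 1 has the lower estimate but has been tried only twice, so its
# confidence bonus is much larger and UCB1 selects it.
print(ucb1(estimates=[0.6, 0.5], counts=[100, 2], t=102))  # -> 1
```

The design difference is visible in the signatures: ε-greedy needs only the value estimates and explores blindly, while UCB1 also needs the pull counts so it can direct exploration toward the arms it knows least about.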

Case Study: Reinforcement Learning Applications

Reinforcement learning balances exploration and exploitation to optimize decision-making in complex environments like robotics and game playing. AlphaGo is a well-known case: it combined Monte Carlo tree search with policy and value networks, using the search to explore candidate moves while the networks steered it toward patterns already known to win. This balance is crucial for training intelligent agents that adapt dynamically while maximizing long-term rewards.

Strategies to Optimize Exploration-Exploitation

Balancing exploration and exploitation in artificial intelligence involves implementing adaptive algorithms such as epsilon-greedy, Upper Confidence Bound (UCB), and Thompson Sampling to optimize decision-making under uncertainty. Techniques like dynamic adjustment of exploration rates based on real-time feedback and contextual multi-armed bandit models enhance learning efficiency by prioritizing high-reward actions while still discovering new opportunities. Leveraging reinforcement learning frameworks allows systems to strategically navigate the trade-off, maximizing cumulative rewards in complex environments.
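Two of the techniques named above lend themselves to a short sketch: a decaying exploration rate and Thompson Sampling. The code below is a minimal illustration under stated assumptions, not a production recipe. It assumes Bernoulli (success or failure) rewards with a uniform Beta(1, 1) prior, and the names `decayed_epsilon`, `thompson_select`, and `run_thompson`, along with the default decay constants, are hypothetical.

```python
import random

def decayed_epsilon(step, start=1.0, end=0.05, decay=0.995):
    """Exponentially shrink the exploration rate toward a floor of `end`."""
    return max(end, start * decay ** step)

def thompson_select(successes, failures, rng=random):
    """Sample each arm's Beta(1 + s, 1 + f) posterior and play the largest draw."""
    draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
    return max(range(len(draws)), key=lambda a: draws[a])

def run_thompson(true_means, steps=3000, seed=0):
    """Simulate Thompson Sampling on a Bernoulli bandit; return pulls per arm."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    successes = [0] * n_arms
    failures = [0] * n_arms
    for _ in range(steps):
        arm = thompson_select(successes, failures, rng)
        if rng.random() < true_means[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

# Hypothetical payout rates; most pulls should concentrate on the better arm.
pulls = run_thompson([0.2, 0.8])
print(pulls, decayed_epsilon(0), decayed_epsilon(10_000))
```

Thompson Sampling needs no explicit exploration rate: arms with few observations have wide posteriors, so they occasionally produce a large draw and get tried. The decay schedule is the simpler alternative when an ε-greedy agent is already in place.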

Real-world Examples: Success and Failure Stories

Real-world cases show both sides of the trade-off between innovation and efficiency. Google DeepMind's exploration of new reinforcement learning algorithms led to breakthroughs such as AlphaGo. In contrast, Uber's early over-exploitation of its ride-sharing algorithms, without adequate exploration of their ethical implications, was followed by public backlash and regulatory challenges. Both cases suggest that sustainable success depends on adapting between exploring novel strategies and exploiting proven models.

Measuring the Value of Exploration in AI Systems

Measuring the value of exploration in AI systems involves quantifying the long-term benefits of discovering new strategies or information that improve decision-making under uncertainty. Metrics such as expected cumulative reward, information gain, and reduction in model uncertainty are critical indicators that balance exploration against exploitation. Effective evaluation frameworks integrate these measures to optimize learning efficiency and maximize overall system performance in dynamic environments.
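Two of these metrics are easy to compute in a simulated setting. The sketch below is illustrative only. It measures cumulative reward indirectly as cumulative regret, the expected reward lost relative to always playing the best arm, which requires the true arm means and is therefore only available in simulation. It treats information gain as the drop in Shannon entropy between two discrete beliefs, which is one common definition among several. All function names are assumptions.

```python
import math

def cumulative_regret(true_means, chosen_arms):
    """Running total of expected reward lost versus always playing the best arm."""
    best = max(true_means)
    regret, total = [], 0.0
    for arm in chosen_arms:
        total += best - true_means[arm]
        regret.append(total)
    return regret

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def information_gain(prior, posterior):
    """Reduction in uncertainty (bits) after an observation updates the belief."""
    return entropy(prior) - entropy(posterior)

# Each pull of arm 0 costs 0.6 in expectation; pulls of arm 1 cost nothing.
print([round(r, 2) for r in cumulative_regret([0.2, 0.8], [0, 1, 0, 1])])  # [0.6, 0.6, 1.2, 1.2]
# Going from "either arm could be best" to certainty is worth one bit.
print(information_gain([0.5, 0.5], [1.0, 0.0]))  # 1.0
```

A regret curve that flattens over time indicates that exploration has paid off and the agent is now mostly exploiting the best option. A curve that keeps growing linearly indicates either too much exploration or convergence on the wrong action.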

Challenges and Limitations in Current Approaches

Current approaches to the exploration vs exploitation dilemma in artificial intelligence face challenges such as balancing short-term gains with long-term learning efficiency, often leading to suboptimal decision-making in dynamic environments. Limitations include the inability of many algorithms to adaptively shift between exploration and exploitation phases, resulting in excessive exploration or premature convergence. Moreover, computational complexity and scalability issues further hinder effective implementation in real-time, high-dimensional applications.

Future Trends: Evolving Exploration-Exploitation Techniques

Emerging exploration-exploitation techniques in artificial intelligence increasingly leverage adaptive algorithms that balance uncertainty and reward optimization through dynamic parameter tuning and meta-learning frameworks. Reinforcement learning frameworks integrate deep neural networks to enhance exploration efficiency in high-dimensional spaces while maintaining exploitation of known strategies for maximal performance. Future trends also point to hybrid models incorporating probabilistic inference and curiosity-driven mechanisms to foster more robust and scalable decision-making in complex, uncertain environments.
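As a rough illustration of the curiosity-driven idea, the sketch below uses a count-based novelty bonus. It is a deliberately simple stand-in, not the learned curiosity models that deep reinforcement learning systems use. The class name `CountBonus`, the `beta` scale, and the state label are all hypothetical. The bonus is added to the environment's reward and shrinks as a state is revisited.

```python
import math
from collections import defaultdict

class CountBonus:
    """Intrinsic reward that shrinks with visits: beta / sqrt(N(state))."""

    def __init__(self, beta=1.0):
        self.beta = beta
        self.counts = defaultdict(int)  # visit count per (hashable) state

    def __call__(self, state):
        self.counts[state] += 1
        return self.beta / math.sqrt(self.counts[state])

bonus = CountBonus(beta=0.5)
extrinsic_reward = 0.0                                # reward from the environment
shaped_reward = extrinsic_reward + bonus("room_A")    # first visit earns the full bonus
print(shaped_reward)  # 0.5
```

Because the bonus decays toward zero, the agent is drawn to unfamiliar states early on and falls back to the environment's own reward once everything has been visited often. Exact counts stop being practical in high-dimensional spaces, which is where learned novelty estimates come in.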

techiny.com
