Explainable AI offers transparency by providing clear insights into how decisions are made, enhancing trust and accountability in applications like healthcare and finance. Black Box AI, on the other hand, operates with complex models whose internal workings are opaque, making it difficult to interpret results or identify errors. The trade-off between explainability and performance often guides the choice depending on the criticality of understanding the AI's reasoning.

Table of Comparison

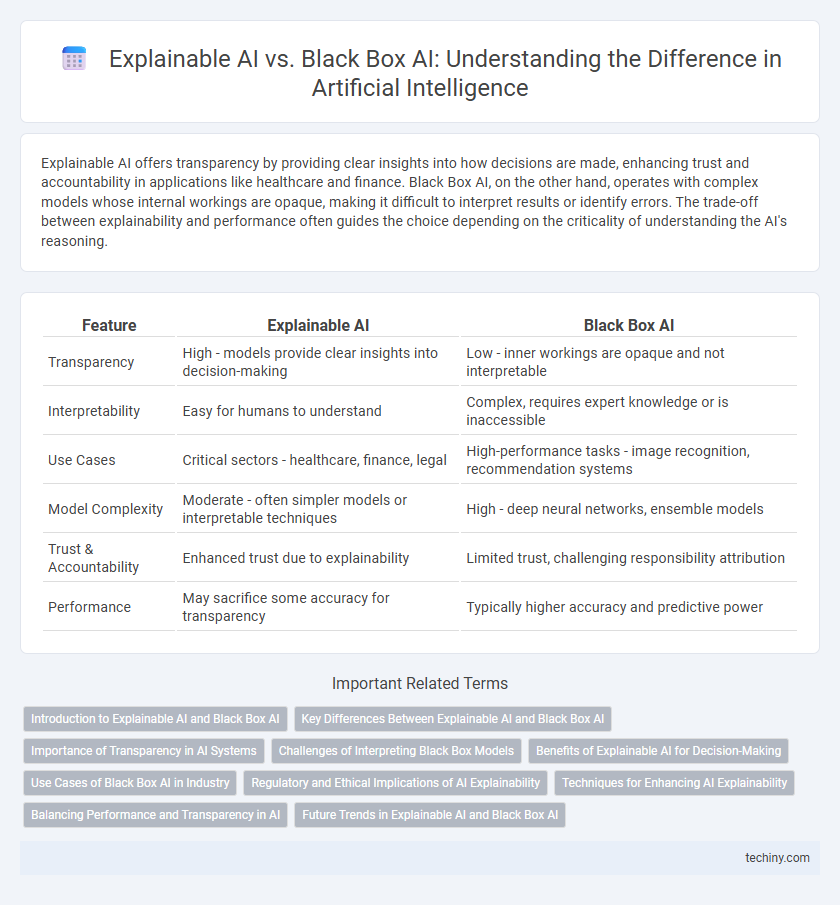

| Feature | Explainable AI | Black Box AI |
|---|---|---|
| Transparency | High - models provide clear insights into decision-making | Low - inner workings are opaque and not interpretable |
| Interpretability | Easy for humans to understand | Complex, requires expert knowledge or is inaccessible |
| Use Cases | Critical sectors - healthcare, finance, legal | High-performance tasks - image recognition, recommendation systems |
| Model Complexity | Moderate - often simpler models or interpretable techniques | High - deep neural networks, ensemble models |
| Trust & Accountability | Enhanced trust due to explainability | Limited trust, challenging responsibility attribution |
| Performance | May sacrifice some accuracy for transparency | Typically higher accuracy and predictive power |

Introduction to Explainable AI and Black Box AI

Explainable AI (XAI) refers to artificial intelligence models designed to provide transparent, interpretable, and understandable outputs that allow users to comprehend the decision-making process. Black Box AI involves complex models like deep neural networks where the internal workings are hidden, making it difficult to explain how specific decisions or predictions are made. Understanding the trade-offs between transparency and performance is crucial for applications in critical domains such as healthcare, finance, and autonomous systems.

Key Differences Between Explainable AI and Black Box AI

Explainable AI (XAI) offers transparency by providing clear, interpretable insights into how models make decisions, while Black Box AI operates with opaque algorithms that obscure decision-making processes. XAI enhances trust and accountability through human-understandable explanations, critical in regulated industries like healthcare and finance. In contrast, Black Box AI often delivers higher accuracy but sacrifices interpretability, limiting its deployment where explanation or auditability is required.

Importance of Transparency in AI Systems

Explainable AI provides clear insights into decision-making processes, enabling users to understand, trust, and validate AI outputs. Black Box AI systems operate without revealing internal mechanisms, increasing risks of biased or erroneous decisions that are difficult to detect and correct. Transparency in AI systems is crucial for ethical compliance, regulatory adherence, and fostering human-AI collaboration by ensuring accountability and mitigating potential harms.

Challenges of Interpreting Black Box Models

Interpreting black box AI models presents significant challenges due to their complex and opaque internal structures, which hinder transparency and accountability. The lack of interpretability limits users' ability to understand decision-making processes, increasing risks of bias, errors, and reduced trust in critical applications such as healthcare and finance. Efforts to develop post-hoc explainability techniques and inherently interpretable models aim to address these challenges but often face trade-offs between accuracy and explainability.
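One simple post-hoc technique of this kind is permutation importance: shuffle one input feature at a time and measure how much the model's agreement with the reference labels drops. The sketch below is a minimal illustration, not a production implementation. The `black_box` function, its weights, and the synthetic data are all hypothetical stand-ins for a model that can only be queried, never inspected.

```python
import random

def black_box(row):
    # Hypothetical opaque model: the explainer only calls it, never reads it.
    x0, x1, x2 = row
    return 1 if (3.0 * x0 + 0.5 * x1 * x1 + 0.0 * x2) > 2.0 else 0

def accuracy(model, rows, labels):
    hits = sum(1 for r, y in zip(rows, labels) if model(r) == y)
    return hits / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Return the accuracy drop caused by shuffling each feature column."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    drops = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild every row with feature j replaced by a shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(base - accuracy(model, shuffled, labels))
    return drops

# Synthetic data (assumption): labels are the model's own outputs,
# so the unshuffled accuracy is 1.0 by construction.
data_rng = random.Random(42)
rows = [tuple(data_rng.uniform(-2, 2) for _ in range(3)) for _ in range(500)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, 3)
for j, drop in enumerate(importances):
    print(f"feature {j}: accuracy drop {drop:.3f}")
```

The third feature has zero weight in this toy model, so shuffling it changes nothing, while the first feature dominates the decision. The scores say which inputs matter, not how the model combines them, which is one reason post-hoc explanations remain only a partial view.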

Benefits of Explainable AI for Decision-Making

Explainable AI enhances decision-making by providing transparent reasoning behind predictions, enabling stakeholders to trust and validate outcomes confidently. By revealing the underlying factors influencing AI decisions, it makes biases and errors easier to detect and correct, leading to more ethical and accountable processes. Organizations benefit from improved regulatory compliance and clearer communication with users, fostering better adoption and collaboration.
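To make "revealing the underlying factors" concrete, here is a minimal sketch of an inherently interpretable linear scoring model. Everything in it is an illustrative assumption: the feature names, the weights, and the applicant are invented for the example and do not come from any real system.

```python
# Hypothetical weights for a toy loan-scoring model (assumption, not real data).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0

def explain(applicant):
    """Return the score and the contribution of each feature to it."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return score, contributions

applicant = {"income_k": 50, "debt_ratio": 0.5, "years_employed": 5}
score, contributions = explain(applicant)

# Print contributions from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {value:+.2f}")
print(f"{'bias':>15}: {BIAS:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```

Because the score is a plain sum, each line of output is itself the explanation: a reviewer can see that income raises the score by roughly 2 points and the debt ratio lowers it by roughly 1.5, and can challenge either weight directly.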

Use Cases of Black Box AI in Industry

Black Box AI is widely utilized in industries such as finance for fraud detection, healthcare for diagnostic imaging, and automotive for autonomous driving systems due to its ability to process complex data efficiently. Despite its lack of interpretability, Black Box AI models like deep neural networks deliver high accuracy in predicting outcomes and optimizing decision-making processes. These use cases highlight the trade-off between model explainability and performance in critical industrial applications.

Regulatory and Ethical Implications of AI Explainability

Explainable AI enhances transparency by providing interpretable models that help organizations meet the requirements of regulatory frameworks such as the EU's GDPR and of proposed legislation such as the U.S. Algorithmic Accountability Act, supporting compliance and accountability. This transparency mitigates ethical risks related to bias, discrimination, and unfair decision-making by enabling stakeholders to understand and challenge AI outputs. In contrast, black box AI's opacity raises significant legal and ethical concerns, including difficulties in auditing, explaining decisions, and meeting emerging standards for responsible AI deployment.

Techniques for Enhancing AI Explainability

Techniques for enhancing AI explainability include post-hoc, model-agnostic methods such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), which attribute an individual prediction of a black box model to its input features. Intrinsic explainability leverages inherently transparent models like decision trees and linear regression, enabling users to understand the decision-making process directly. Rule extraction and visualization tools further improve comprehension by translating complex AI behaviors into human-readable formats, facilitating trust and accountability in AI systems.
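The idea behind SHAP can be illustrated by computing exact Shapley values by brute force for a tiny model. This is a sketch of the underlying game-theoretic formula only; it does not use or reproduce the `shap` library's API, and it is feasible only for a handful of features because it enumerates every feature subset. The `black_box` function and the all-zero baseline are assumptions chosen for illustration.

```python
from itertools import combinations
from math import factorial

def black_box(x):
    # Hypothetical model with one additive term and one interaction term.
    return 2.0 * x[0] + x[1] * x[2]

def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) - model(baseline)."""
    n = len(instance)

    def value(subset):
        # Features in `subset` take the instance's value, the rest the baseline's.
        mixed = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(black_box, instance, baseline)
print(phi)
```

For this input the attributions come out at roughly 2, 3, and 3: the additive term is credited entirely to the first feature, and the interaction `x[1] * x[2]` is split evenly between the two features that produce it. The attributions sum to the difference between the model's output on the instance and on the baseline, which is the property that makes Shapley-based explanations additive.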

Balancing Performance and Transparency in AI

Explainable AI (XAI) enhances transparency by providing clear insights into model decision-making processes, enabling trust and accountability in applications such as healthcare and finance. Black Box AI models often achieve superior performance with complex architectures like deep neural networks but lack interpretability, posing risks in critical decision environments. Balancing performance and transparency requires developing hybrid approaches that integrate explainability techniques without significantly sacrificing model accuracy.
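One way to quantify this balance is a global surrogate: fit a simple, readable model to the black box's own predictions and measure how often the two agree (often called fidelity). The sketch below uses a single-threshold rule as the surrogate. The `black_box` function, the synthetic data, and the choice of a one-rule surrogate are all assumptions made for illustration, not a recommended architecture.

```python
import random

def black_box(row):
    # Hypothetical opaque model with a nonlinear decision boundary.
    x0, x1 = row
    return 1 if (x0 + 0.3 * x1 * x1) > 0.5 else 0

def fit_stump(rows, targets):
    """Find the rule 'feature j > t' that best reproduces the targets."""
    best = (0.0, 0, 0.0)  # (agreement, feature index, threshold)
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            agree = sum(
                1 for r, y in zip(rows, targets) if (1 if r[j] > t else 0) == y
            ) / len(rows)
            if agree > best[0]:
                best = (agree, j, t)
    return best

data_rng = random.Random(7)
rows = [tuple(data_rng.uniform(-2, 2) for _ in range(2)) for _ in range(300)]
predictions = [black_box(r) for r in rows]  # the surrogate imitates these

fidelity, feature, threshold = fit_stump(rows, predictions)
print(f"surrogate rule: predict 1 if feature {feature} > {threshold:.2f}")
print(f"fidelity to black box: {fidelity:.2%}")
```

The gap between the fidelity and 100% is the behaviour the one-line rule cannot express. A high fidelity suggests the readable rule may be an acceptable replacement or audit aid; a low one signals that the black box relies on structure the simple model misses.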

Future Trends in Explainable AI and Black Box AI

Future trends in Explainable AI (XAI) emphasize enhancing transparency and interpretability to build user trust and meet regulatory standards, with techniques like model-agnostic explanations and causal inference gaining prominence. Black Box AI continues to advance in complexity and performance through deep learning, but its opaque decision-making highlights the critical need for hybrid models that balance accuracy with explainability. Research is increasingly focused on integrating XAI methods directly within black box architectures to foster accountable AI systems in high-stakes domains such as healthcare and finance.

Explainable AI vs Black Box AI Infographic

techiny.com