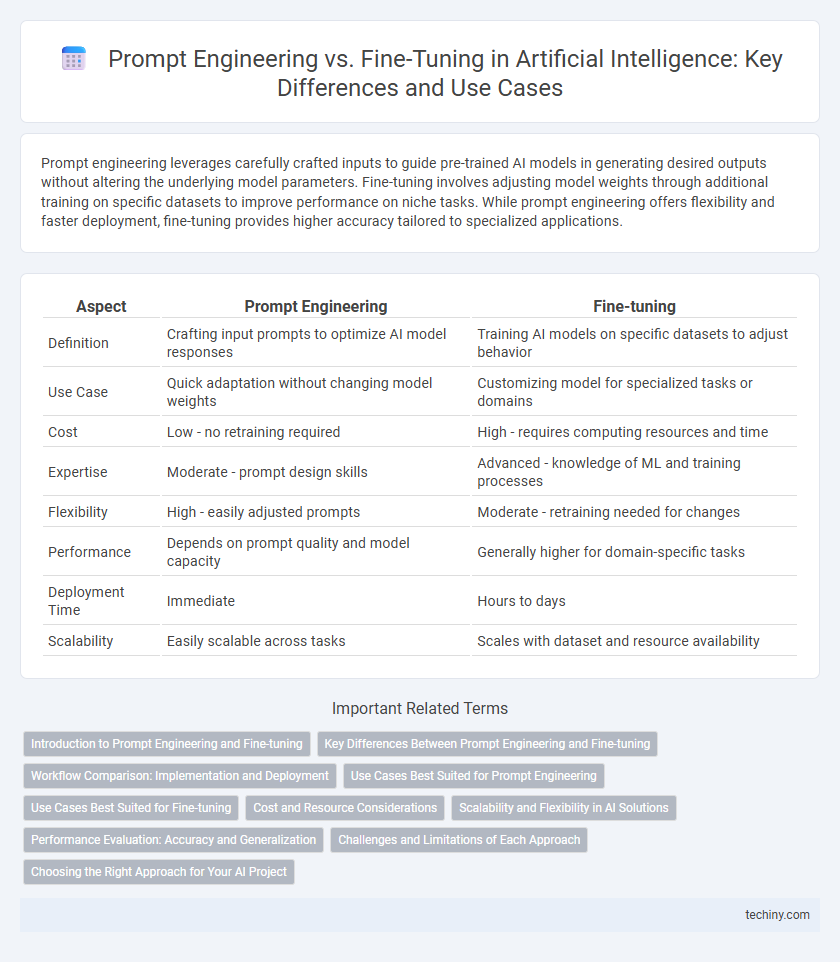

Prompt engineering uses carefully crafted inputs to guide pre-trained AI models toward desired outputs without altering the underlying model parameters. Fine-tuning adjusts model weights through additional training on specific datasets to improve performance on niche tasks. Prompt engineering offers flexibility and faster deployment, while fine-tuning often yields higher accuracy on specialized applications.

Table of Comparison

| Aspect | Prompt Engineering | Fine-tuning |
|---|---|---|
| Definition | Crafting input prompts to optimize AI model responses | Training AI models on specific datasets to adjust behavior |
| Use Case | Quick adaptation without changing model weights | Customizing a model for specialized tasks or domains |
| Cost | Low (no retraining required) | High (requires computing resources and time) |
| Expertise | Moderate (prompt design skills) | Advanced (knowledge of ML and training processes) |
| Flexibility | High (prompts are easily adjusted) | Moderate (retraining needed for changes) |
| Performance | Depends on prompt quality and model capacity | Generally higher for domain-specific tasks |
| Deployment Time | Immediate | Hours to days |
| Scalability | Easily scalable across tasks | Scales with dataset and resource availability |

Introduction to Prompt Engineering and Fine-tuning

Prompt engineering involves designing and refining input prompts to guide AI language models in generating desired outputs without altering the model's underlying parameters. Fine-tuning modifies the pre-trained model's weights by training it on domain-specific data, enhancing performance for specialized tasks. While prompt engineering offers flexibility and quick adaptation, fine-tuning provides deeper customization by embedding task-specific knowledge into the model itself.

Key Differences Between Prompt Engineering and Fine-tuning

Prompt engineering involves crafting precise input queries to guide pre-trained AI models without altering their internal parameters, enabling flexibility and rapid iteration. Fine-tuning modifies the model's weights through additional training on specific datasets, tailoring the model's behavior more deeply but requiring significant computational resources and time. The key differences are the scope of change (prompt engineering adjusts the inputs, while fine-tuning adjusts the model itself) and the associated cost and complexity: prompt engineering is lightweight, whereas fine-tuning demands compute-intensive optimization.
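To make the "inputs versus weights" distinction concrete, here is a deliberately tiny analogy in Python. A one-variable linear model stands in for a pre-trained network (an assumption made purely for illustration; real language models are vastly larger and are prompted with text, not numbers). The hypothetical `prompted_predict` function only reshapes the input and leaves the weights frozen, while `fine_tune` runs gradient descent from the pre-trained weights on task data and returns a new set of weights. All names and numbers are illustrative.

```python
from dataclasses import dataclass
from typing import Callable

Pair = tuple[float, float]


@dataclass(frozen=True)
class LinearModel:
    """Toy stand-in for a pre-trained model: y = w * x + b."""
    w: float
    b: float

    def predict(self, x: float) -> float:
        return self.w * x + self.b


def mse(model: LinearModel, data: list[Pair]) -> float:
    """Mean squared error of the model on (x, y) pairs."""
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)


def prompted_predict(model: LinearModel, x: float,
                     prompt: Callable[[float], float]) -> float:
    """Prompt-engineering analogue: reshape the input, keep the weights frozen."""
    return model.predict(prompt(x))


def fine_tune(model: LinearModel, data: list[Pair],
              lr: float = 0.05, epochs: int = 500) -> LinearModel:
    """Fine-tuning analogue: gradient descent on MSE starting from the
    pre-trained weights; returns a new model and leaves `model` untouched."""
    w, b, n = model.w, model.b, len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return LinearModel(w, b)


base = LinearModel(w=2.0, b=0.0)                               # "pre-trained" weights
domain = [(x, 3 * x + 1) for x in (0.0, 0.5, 1.0, 1.5, 2.0)]   # target task: y = 3x + 1

tuned = fine_tune(base, domain)
print(f"fine-tuned weights: w={tuned.w:.3f}, b={tuned.b:.3f}")
print("prompted output at x=1:", prompted_predict(base, 1.0, lambda x: 1.5 * x + 0.5))
print("base model after both:", base)
```

In this toy both routes can reach the target behavior, but only fine-tuning produces new weights; a hand-written prompt cannot always compensate for what the weights lack, which is why fine-tuning is described as the deeper form of customization.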

Workflow Comparison: Implementation and Deployment

Prompt engineering involves crafting specific input queries to guide pre-trained AI models without altering their underlying parameters, enabling rapid deployment with minimal resource investment. Fine-tuning requires further training of AI models on specialized datasets, which yields tailored performance improvements but demands significant computational power and longer implementation timelines. Deploying fine-tuned models often involves storing and versioning the updated weights, whereas prompt-engineering workflows plug directly into existing model APIs, allowing quicker iteration and easier scaling.
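The difference in deployment artifacts can be sketched as a small configuration record. This assumes a generic request payload with `model` and `prompt` fields; the `Deployment` class, the model identifiers, and the payload shape are hypothetical and do not follow any particular vendor's API. A prompt-engineering release changes only the versioned template, whereas a fine-tuning release introduces a new model identifier that points at separately stored weights.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    """Hypothetical deployment record; field names are illustrative only."""
    model_id: str          # base model ID, or the ID of a stored fine-tuned checkpoint
    prompt_template: str   # versioned together with the application code
    template_version: str


def build_request(dep: Deployment, user_input: str) -> dict:
    """Assemble a generic request payload (not any specific vendor's schema)."""
    return {
        "model": dep.model_id,
        "prompt": dep.prompt_template.format(input=user_input),
        "metadata": {"template_version": dep.template_version},
    }


# Prompt-engineering release: same base model, new template version.
prompted = Deployment(
    model_id="base-model",
    prompt_template="You are a support agent. Answer briefly.\nQuestion: {input}\nAnswer:",
    template_version="v2",
)

# Fine-tuning release: a new checkpoint ID whose weights must be stored and tracked.
fine_tuned = Deployment(
    model_id="base-model-ft-support-001",
    prompt_template="{input}",
    template_version="v1",
)

for dep in (prompted, fine_tuned):
    print(build_request(dep, "How do I reset my password?"))
```

Rolling back the prompt-engineered variant means reverting a template string; rolling back the fine-tuned variant means pointing `model_id` at an older checkpoint that must still be available.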

Use Cases Best Suited for Prompt Engineering

Prompt engineering is best suited for use cases requiring rapid iteration and adaptation without extensive retraining, such as conversational agents, content generation, and exploratory data analysis. It enables leveraging large pre-trained models for diverse tasks by designing effective input prompts, minimizing time and computational resources. This approach excels in scenarios needing flexibility and quick deployment across multiple domains without altering the underlying model weights.
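A common prompt-engineering pattern for tasks like classification or content generation is few-shot prompting: a short instruction, a handful of worked examples, then the new input. The sketch below only assembles the prompt string; the `Input:`/`Output:` layout and the helper name `build_few_shot_prompt` are illustrative choices rather than a required format, and the call that sends the prompt to a model is omitted because it depends on the provider.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Combine an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output shape.
        parts.append(f"Input: {text}\nOutput: {label}\n")
    # The final, unanswered slot is what the model is asked to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("The battery lasts all day.", "positive"),
     ("It stopped working after a week.", "negative")],
    "Setup took two minutes and it just works.",
)
print(prompt)
```

Adapting this to a new task means editing the instruction and examples, with no retraining involved.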

Use Cases Best Suited for Fine-tuning

Fine-tuning is best suited for domain-specific applications requiring tailored model behavior, such as medical diagnosis, legal document analysis, and personalized recommendation systems. It enables models to learn nuanced patterns from specialized datasets, improving accuracy and relevance beyond general-purpose capabilities. Industries with unique linguistic or contextual needs benefit from fine-tuning to enhance performance and reliability in critical decision-making tasks.
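Fine-tuning starts with a task-specific dataset. Several hosted fine-tuning services (OpenAI's, for example) accept chat-style training data as JSON Lines, one object per line containing a `messages` list of `role`/`content` pairs. The sketch below assumes that layout; the legal-clause examples are made up, and a real platform's documentation should be checked for its exact schema and size requirements.

```python
import json


def to_chat_record(system: str, user: str, assistant: str) -> dict:
    """One supervised example in a chat-style `messages` layout."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
        {"role": "assistant", "content": assistant},  # the target the model learns
    ]}


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


# Made-up examples for a legal-document task (governing-law extraction).
SYSTEM = "Identify the governing law named in the contract excerpt."
pairs = [
    ("This Agreement shall be governed by the laws of the State of New York.", "New York"),
    ("The parties agree that the laws of England and Wales apply.", "England and Wales"),
]
dataset = to_jsonl([to_chat_record(SYSTEM, text, answer) for text, answer in pairs])
print(dataset)
```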

Cost and Resource Considerations

Prompt engineering reduces costs by leveraging pre-trained models without requiring extensive computational resources, making it ideal for quick iterations and low-budget applications. Fine-tuning involves retraining models on specific datasets, demanding significant computing power and storage, which increases expenses and time investment. Organizations must balance these trade-offs based on project scope, budget constraints, and resource availability to optimize AI deployment.
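The cost trade-off can be framed as a break-even calculation. The sketch assumes that a fine-tuned model is billed at a higher per-token rate but needs a much shorter prompt, because few-shot examples no longer have to be sent with every request, while the prompt-engineered setup pays for those extra tokens on every call. Every price and token count below is hypothetical, and `Decimal` is used so that the currency arithmetic stays exact.

```python
from decimal import Decimal, ROUND_CEILING
from typing import Optional


def cost_per_request(prompt_tokens: int, output_tokens: int,
                     in_price_per_1k: Decimal, out_price_per_1k: Decimal) -> Decimal:
    """Cost of one call given token counts and per-1,000-token prices."""
    return (prompt_tokens * in_price_per_1k + output_tokens * out_price_per_1k) / 1000


def break_even_requests(training_cost: Decimal, prompted_cost: Decimal,
                        tuned_cost: Decimal) -> Optional[int]:
    """Number of requests after which the one-off fine-tuning cost is recovered,
    or None if the fine-tuned model is not cheaper per request."""
    saving = prompted_cost - tuned_cost
    if saving <= 0:
        return None
    return int((training_cost / saving).to_integral_value(rounding=ROUND_CEILING))


# Hypothetical numbers: a long few-shot prompt on a cheaper base model versus
# a short prompt on a fine-tuned model billed at double the per-token rate.
prompted = cost_per_request(1500, 200, Decimal("0.001"), Decimal("0.002"))
tuned = cost_per_request(300, 200, Decimal("0.002"), Decimal("0.004"))
print("per request:", prompted, "vs", tuned)
print("break-even after", break_even_requests(Decimal("50"), prompted, tuned), "requests")  # → 100000
```

Below the break-even volume the one-off training cost dominates; above it, the shorter fine-tuned prompts pay for themselves. Storage and retraining costs would be added to `training_cost` in a fuller estimate.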

Scalability and Flexibility in AI Solutions

Prompt engineering offers scalability by enabling quick adaptation of large language models to diverse tasks without retraining, making it highly flexible for evolving AI solutions. Fine-tuning requires extensive computational resources and time, which limits scalability but provides tailored model adjustments for specific applications. Organizations tend to prioritize prompt engineering when they need rapid deployment and cost efficiency, while fine-tuning remains the better fit for specialized performance in domain-specific use cases.

Performance Evaluation: Accuracy and Generalization

Prompt engineering uses carefully crafted input prompts to guide pre-trained AI models, enabling quicker adaptation and cost efficiency without modifying the model's underlying parameters. Fine-tuning retrains the entire model or selected layers on specialized datasets, often resulting in higher accuracy but requiring more computational resources and carrying a risk of overfitting. Evaluations of accuracy and generalization typically show that prompt engineering preserves broad applicability, while fine-tuning achieves superior task-specific precision at the cost of potentially reduced generalization.
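Accuracy and generalization can be measured side by side by scoring a model on an in-domain test set and on a broader out-of-domain set, then reporting the gap. The sketch below is a minimal harness under that assumption; the function names are hypothetical, and the label lists are placeholder values for illustration, not measured results for any model.

```python
from typing import Sequence


def accuracy(preds: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of predictions that match the reference labels."""
    if not labels or len(preds) != len(labels):
        raise ValueError("need equal-length, non-empty prediction and label lists")
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def generalization_report(in_preds: Sequence[str], in_labels: Sequence[str],
                          out_preds: Sequence[str], out_labels: Sequence[str]) -> dict:
    """In-domain accuracy, out-of-domain accuracy, and the gap between them.
    A large positive gap suggests specialization at the expense of breadth."""
    acc_in = accuracy(in_preds, in_labels)
    acc_out = accuracy(out_preds, out_labels)
    return {"in_domain": acc_in, "out_of_domain": acc_out, "gap": acc_in - acc_out}


# Placeholder predictions for illustration only, not measured results.
report = generalization_report(
    in_preds=["pos", "neg", "pos", "pos"], in_labels=["pos", "neg", "neg", "pos"],
    out_preds=["neg", "neg", "pos", "pos"], out_labels=["pos", "neg", "neg", "pos"],
)
print(report)
```

Running the same harness on a prompt-engineered baseline and on a fine-tuned model lets the two be compared on both axes rather than on task accuracy alone.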

Challenges and Limitations of Each Approach

Prompt engineering struggles to elicit accurate responses consistently when prompts are ambiguous or poorly constructed, and it often requires extensive trial and error and domain expertise. Fine-tuning is limited by the need for large, high-quality labeled datasets and significant computational resources, and it risks overfitting or catastrophic forgetting, either of which can degrade the model's generalization. Both approaches can also struggle to adapt across very different tasks, which makes it important to balance careful input design against deeper model customization.
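One widely used guard against the overfitting risk in fine-tuning is early stopping: track validation loss after each training epoch and keep the checkpoint with the lowest loss once it has failed to improve for a set number of epochs (the "patience"). The function below is a minimal sketch of that rule; its name and the loss values are hypothetical, and training frameworks ship their own, more configurable versions.

```python
from typing import Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 2,
                         min_delta: float = 0.0) -> int:
    """Index of the epoch whose checkpoint to keep: the lowest validation loss
    seen before `patience` consecutive epochs failed to improve on it by more
    than `min_delta`."""
    if not val_losses:
        raise ValueError("no validation losses recorded")
    best_epoch, best_loss, stale = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, stale = epoch, loss, 0   # new best: reset counter
        else:
            stale += 1                                      # no improvement this epoch
            if stale >= patience:
                break                                       # stop training here
    return best_epoch


# Hypothetical curve: validation loss falls, then rises as the model overfits.
losses = [0.92, 0.71, 0.63, 0.60, 0.62, 0.66, 0.71]
print("keep checkpoint from epoch", early_stopping_epoch(losses))  # → 3
```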

Choosing the Right Approach for Your AI Project

Prompt engineering optimizes AI model responses by crafting effective input queries, ideal for projects requiring flexibility and rapid iteration without retraining. Fine-tuning adjusts pre-trained models with domain-specific data to achieve higher accuracy and tailored performance, essential for specialized or complex tasks. Selecting between prompt engineering and fine-tuning depends on project goals, data availability, computational resources, and the desired level of customization in AI behavior.
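The selection factors listed above can be encoded as a rough rule of thumb. The `ProjectProfile` fields, the `recommend` function, and the 500-example threshold are assumptions chosen for illustration, not established cut-offs; treat the function as a checklist in code rather than a definitive policy.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    """Hypothetical project description mirroring the factors listed above."""
    labeled_examples: int         # task-specific examples available for training
    has_training_compute: bool    # GPUs, or budget for a hosted tuning service
    needs_rapid_iteration: bool   # requirements still changing frequently
    needs_domain_behavior: bool   # specialized vocabulary or format the base model misses


def recommend(p: ProjectProfile, min_examples: int = 500) -> str:
    """Rough rule of thumb; `min_examples` is an assumed threshold, not a standard."""
    can_fine_tune = p.has_training_compute and p.labeled_examples >= min_examples
    if p.needs_domain_behavior and can_fine_tune and not p.needs_rapid_iteration:
        return "fine-tuning"
    # Otherwise prompt engineering is the cheaper, faster default.
    return "prompt engineering"


print(recommend(ProjectProfile(5000, True, False, True)))   # → fine-tuning
print(recommend(ProjectProfile(5000, True, True, True)))    # → prompt engineering
```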

Prompt Engineering vs Fine-tuning Infographic

techiny.com