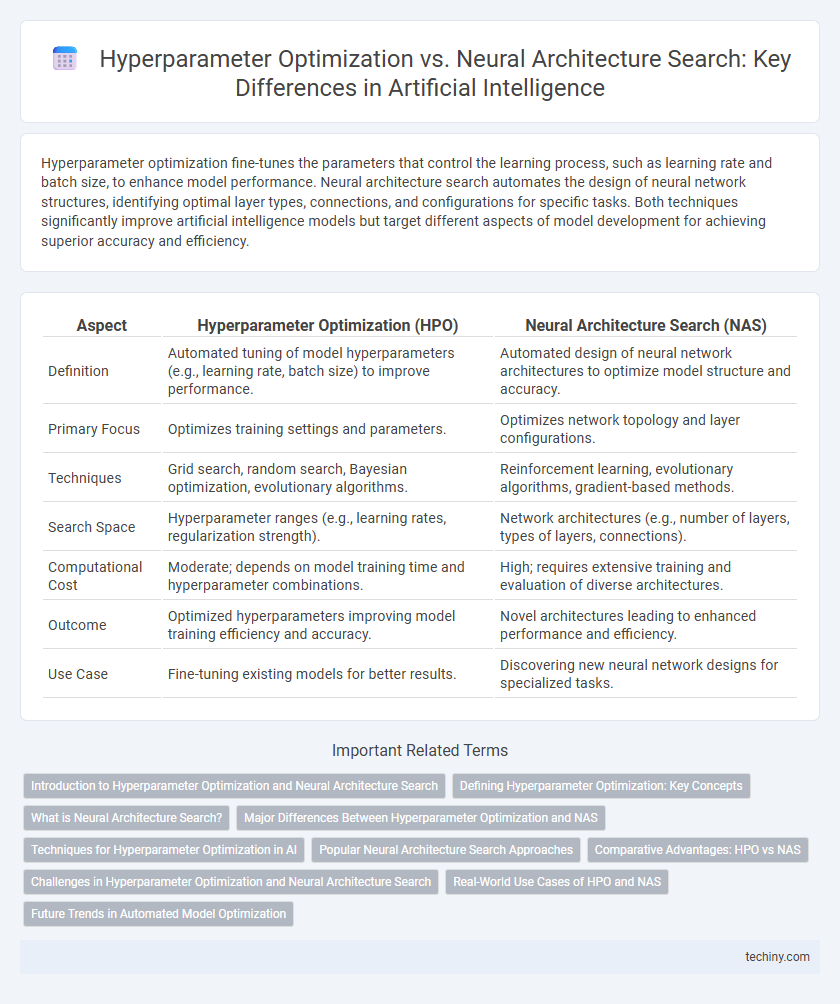

Hyperparameter optimization fine-tunes the settings that control the learning process, such as learning rate and batch size, to enhance model performance. Neural architecture search automates the design of neural network structures, identifying suitable layer types, connections, and configurations for specific tasks. Both techniques can improve the accuracy and efficiency of artificial intelligence models, but they target different parts of model development: the first tunes how a given model is trained, the second decides what the model looks like.

Table of Comparison

| Aspect | Hyperparameter Optimization (HPO) | Neural Architecture Search (NAS) |
|---|---|---|
| Definition | Automated tuning of model hyperparameters (e.g., learning rate, batch size) to improve performance. | Automated design of neural network architectures to optimize model structure and accuracy. |
| Primary Focus | Optimizes training settings and parameters. | Optimizes network topology and layer configurations. |
| Techniques | Grid search, random search, Bayesian optimization, evolutionary algorithms. | Reinforcement learning, evolutionary algorithms, gradient-based methods. |
| Search Space | Hyperparameter ranges (e.g., learning rates, regularization strength). | Network architectures (e.g., number of layers, types of layers, connections). |
| Computational Cost | Moderate; depends on model training time and hyperparameter combinations. | High; requires extensive training and evaluation of diverse architectures. |
| Outcome | Optimized hyperparameters improving model training efficiency and accuracy. | Novel architectures leading to enhanced performance and efficiency. |
| Use Case | Fine-tuning existing models for better results. | Discovering new neural network designs for specialized tasks. |

Introduction to Hyperparameter Optimization and Neural Architecture Search

Hyperparameter optimization involves systematically tuning parameters such as learning rate, batch size, and regularization factors to improve AI model performance. Neural architecture search focuses on automating the design of neural network structures, exploring various layer types, depths, and connectivity patterns to optimize accuracy and efficiency. Both techniques aim to enhance machine learning outcomes, with hyperparameter optimization fine-tuning training settings and neural architecture search discovering optimal network designs.

Defining Hyperparameter Optimization: Key Concepts

Hyperparameter optimization systematically tunes settings such as learning rate, batch size, and regularization strength to improve a model's performance and generalization. Methods like grid search, random search, and Bayesian optimization are used to navigate the hyperparameter space efficiently. Unlike Neural Architecture Search, which searches over the structure of the network itself, hyperparameter tuning assumes the architecture is fixed and focuses on the settings that govern how it is trained.

What is Neural Architecture Search?

Neural Architecture Search (NAS) is an advanced technique in artificial intelligence designed to automate the process of designing neural network architectures. It uses algorithms, often based on reinforcement learning or evolutionary strategies, to discover the optimal model structure that maximizes performance on specific tasks. NAS significantly reduces human effort and expertise required in model design, accelerating the development of highly efficient deep learning models.

Major Differences Between Hyperparameter Optimization and NAS

Hyperparameter Optimization (HPO) primarily focuses on tuning algorithm-specific parameters such as learning rate, batch size, and regularization strength to enhance model performance. Neural Architecture Search (NAS) automates the design of the neural network's structure, including the number of layers, type of layers, and connectivity patterns. HPO explores a predefined search space of numerical or categorical parameters, whereas NAS searches a combinatorial space of network topologies, which is typically far larger and more computationally intensive to explore. In short, HPO tunes the hyperparameters of a fixed model configuration, while NAS discovers architectures from scratch or from a base model space.
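To make the difference in search-space size concrete, here is a minimal sketch that counts configurations in two toy spaces. Every name and value in it (`hpo_space`, `nas_layer_ops`, `nas_layer_widths`, the specific ranges) is a hypothetical choice for illustration, not taken from any particular framework.

```python
import math

# Hypothetical HPO search space: the model is fixed, only training settings vary.
hpo_space = {
    "learning_rate": [1e-4, 1e-3, 1e-2],
    "batch_size": [32, 64, 128],
    "weight_decay": [0.0, 1e-4],
}

# Hypothetical NAS search space: every layer independently picks an operation and a width.
nas_layer_ops = ["conv3x3", "conv5x5", "max_pool", "skip"]
nas_layer_widths = [16, 32, 64]


def hpo_space_size(space):
    """Number of distinct configurations in a discrete hyperparameter grid."""
    return math.prod(len(values) for values in space.values())


def nas_space_size(depth):
    """Number of distinct architectures for a chain of `depth` layers."""
    choices_per_layer = len(nas_layer_ops) * len(nas_layer_widths)
    return choices_per_layer ** depth


hpo_count = hpo_space_size(hpo_space)
nas_counts = {depth: nas_space_size(depth) for depth in (2, 4, 8)}

print("HPO configurations:", hpo_count)
for depth, count in nas_counts.items():
    print(f"NAS architectures at depth {depth}:", count)
```

Even in this simplified chain-structured space, the number of candidate architectures grows exponentially with depth, which is one reason NAS usually costs more than tuning a handful of hyperparameters.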

Techniques for Hyperparameter Optimization in AI

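Techniques for hyperparameter optimization in AI include grid search, random search, Bayesian optimization, and gradient-based optimization methods. Grid search evaluates every combination in a predefined grid, so its cost grows exponentially with the number of hyperparameters. Random search samples configurations at random, which is often more efficient in high-dimensional spaces where only a few hyperparameters matter. Bayesian optimization fits a probabilistic model to the results of earlier trials and uses it to choose promising settings, balancing exploration and exploitation so that good configurations are typically found in fewer trials. Gradient-based methods apply when the validation objective is differentiable with respect to continuous hyperparameters, which can then be updated by gradient descent.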
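The contrast between grid search and random search can be sketched in a few lines. The `validation_loss` function below is a hypothetical stand-in for "train the model and measure validation loss"; its shape and its minimum are assumptions chosen so the example runs instantly, and the value ranges are illustrative only.

```python
import itertools
import math
import random


def validation_loss(learning_rate, batch_size):
    """Hypothetical stand-in for training a model and returning its validation loss.

    By construction the minimum is at learning_rate=1e-3, batch_size=64.
    """
    lr_term = (math.log10(learning_rate) + 3) ** 2
    bs_term = (math.log2(batch_size) - 6) ** 2 / 10
    return lr_term + bs_term


def grid_search(lr_values, bs_values):
    """Evaluate every combination in the grid and return the best one."""
    candidates = itertools.product(lr_values, bs_values)
    best = min(candidates, key=lambda cfg: validation_loss(*cfg))
    return best, validation_loss(*best)


def random_search(n_trials, seed=0):
    """Sample configurations at random and keep the best one seen."""
    rng = random.Random(seed)
    best_cfg, best_loss = None, float("inf")
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-5, -1)            # log-uniform learning rate
        bs = rng.choice([16, 32, 64, 128, 256])   # categorical batch size
        loss = validation_loss(lr, bs)
        if loss < best_loss:
            best_cfg, best_loss = (lr, bs), loss
    return best_cfg, best_loss


grid_best, grid_loss = grid_search([1e-4, 1e-3, 1e-2], [32, 64, 128])
rand_best, rand_loss = random_search(n_trials=9)

print("grid search best:", grid_best, grid_loss)
print("random search best:", rand_best, rand_loss)
```

Both searches use nine evaluations here. The grid can only ever test three distinct learning rates, while random search tries nine different ones, which is the usual argument for random sampling when some hyperparameters matter much more than others.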

Popular Neural Architecture Search Approaches

Popular Neural Architecture Search (NAS) approaches include reinforcement learning, evolutionary algorithms, and gradient-based methods like DARTS. Reinforcement learning methods treat the search as a sequential decision process: a controller proposes architectures and is rewarded according to their validation performance. Evolutionary algorithms evolve a population of models through mutation and selection. Gradient-based techniques such as DARTS relax the discrete architecture choices into continuous parameters and optimize them with gradient descent, which substantially reduces computational cost compared with training every candidate architecture separately.
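As an illustration of the evolutionary approach, the following sketch evolves a small population of chain-structured architectures through mutation and selection. It is a toy under stated assumptions: `proxy_score` is a hypothetical stand-in for training each candidate and measuring validation accuracy, and the operation list `OPS`, the depth limit, and the tournament size are arbitrary choices for the example.

```python
import random

OPS = ["conv3x3", "conv5x5", "max_pool", "skip"]  # hypothetical layer choices
OP_REWARD = {"conv3x3": 1.0, "conv5x5": 0.8, "max_pool": 0.3, "skip": 0.1}
MAX_DEPTH = 12


def proxy_score(arch):
    """Hypothetical stand-in for validation accuracy after training `arch`.

    Rewards each layer by type and subtracts a depth penalty as a crude cost term.
    """
    return sum(OP_REWARD[op] for op in arch) - 0.05 * len(arch) ** 2


def mutate(arch, rng):
    """Return a copy of `arch` with one layer swapped, added, or removed."""
    child = list(arch)
    move = rng.choice(["swap", "add", "remove"])
    if move == "swap":
        child[rng.randrange(len(child))] = rng.choice(OPS)
    elif move == "add" and len(child) < MAX_DEPTH:
        child.insert(rng.randrange(len(child) + 1), rng.choice(OPS))
    elif move == "remove" and len(child) > 1:
        child.pop(rng.randrange(len(child)))
    return child


def evolve(generations=50, population_size=10, seed=0):
    """Evolve architectures: tournament selection, mutation, drop the worst."""
    rng = random.Random(seed)
    population = [[rng.choice(OPS) for _ in range(3)] for _ in range(population_size)]
    for _ in range(generations):
        parent = max(rng.sample(population, 3), key=proxy_score)  # tournament
        population.append(mutate(parent, rng))
        population.remove(min(population, key=proxy_score))       # keep size fixed
    return max(population, key=proxy_score)


best_arch = evolve()
print(best_arch, proxy_score(best_arch))
```

In a real search, each call to the scoring function would involve training a network, which is where most of the computational cost of NAS comes from.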

Comparative Advantages: HPO vs NAS

Hyperparameter Optimization (HPO) excels at fine-tuning training settings to improve performance at relatively low computational cost, making it suitable for scenarios where the model architecture is predefined. Neural Architecture Search (NAS) automates the discovery of neural network structures, enabling highly specialized architectures that can outperform manually crafted models, but at a higher computational expense. HPO provides efficiency and simplicity in enhancing existing models, while NAS delivers adaptability and innovation in architecture design. The key consideration when choosing between them is the trade-off between resource consumption and potential accuracy gains.

Challenges in Hyperparameter Optimization and Neural Architecture Search

Challenges in Hyperparameter Optimization include high-dimensional search spaces and computational expense due to numerous trials required for tuning parameters such as learning rate, batch size, and regularization strength. Neural Architecture Search faces difficulties in balancing search space complexity and efficiency, with issues like extensive computational resources needed and potential overfitting to specific tasks. Both methods struggle with scalability and generalization across diverse datasets and model configurations.

Real-World Use Cases of HPO and NAS

Hyperparameter Optimization (HPO) significantly improves machine learning model performance by fine-tuning parameters such as learning rate and batch size, essential in industries like finance for fraud detection and healthcare for predictive diagnostics. Neural Architecture Search (NAS) automates the design of neural networks, enabling efficient model deployment in resource-constrained environments, evident in autonomous driving systems and mobile device applications. Both techniques drive innovation by balancing computational cost and model accuracy, enhancing real-world AI applications across diverse sectors.

Future Trends in Automated Model Optimization

Future trends in automated model optimization emphasize the integration of hyperparameter optimization (HPO) and neural architecture search (NAS) techniques to enhance model performance and efficiency. Advances in reinforcement learning and meta-learning are driving more adaptive and resource-efficient search algorithms, reducing computational costs and enabling real-time model tuning. The convergence of HPO and NAS frameworks aims to automate the end-to-end model design process, paving the way for more robust, scalable, and application-specific AI solutions.

Hyperparameter Optimization vs Neural Architecture Search Infographic

techiny.com
