Batch processing in artificial intelligence handles large volumes of data in groups, which improves efficiency for tasks such as model training and data analysis. Online processing, by contrast, handles data in real time as it arrives, allowing AI systems to respond immediately to new inputs, which is crucial for applications such as autonomous driving and fraud detection. The choice between batch and online processing depends on the specific AI use case, weighing the need for speed against computational resources and data volume.

Table of Comparison

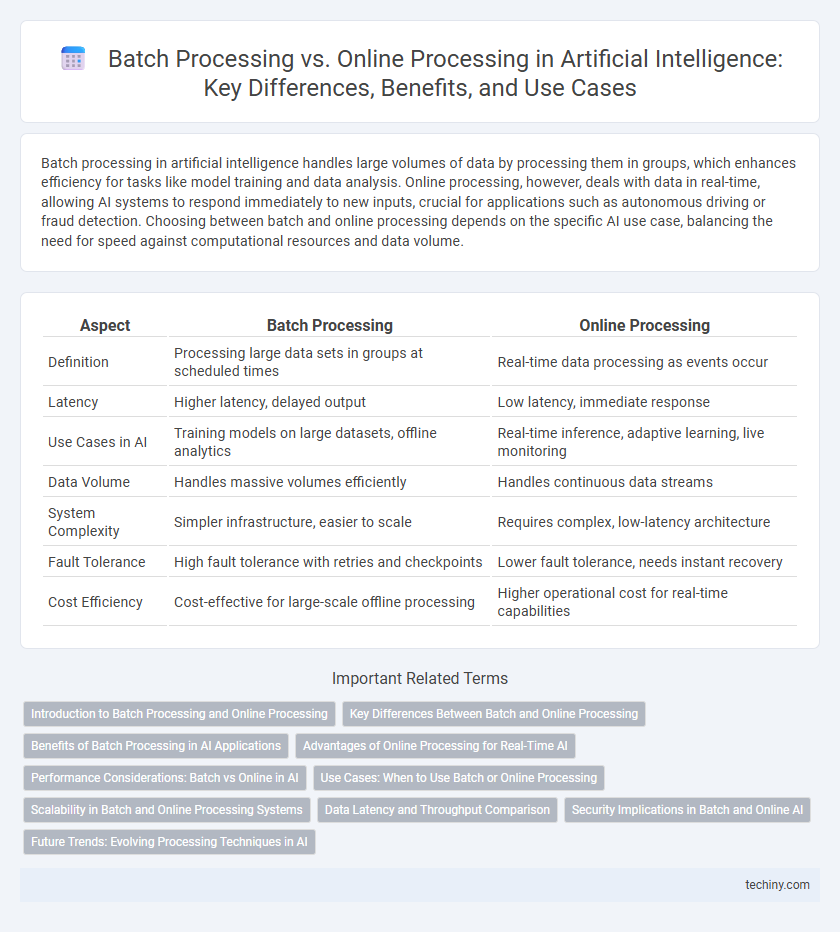

| Aspect | Batch Processing | Online Processing |
|---|---|---|
| Definition | Processing large data sets in groups at scheduled times | Real-time data processing as events occur |
| Latency | Higher latency, delayed output | Low latency, immediate response |
| Use Cases in AI | Training models on large datasets, offline analytics | Real-time inference, adaptive learning, live monitoring |
| Data Volume | Handles massive volumes efficiently | Handles continuous data streams |
| System Complexity | Simpler infrastructure, easier to scale | Requires complex, low-latency architecture |
| Fault Tolerance | High fault tolerance with retries and checkpoints | Lower fault tolerance, needs instant recovery |
| Cost Efficiency | Cost-effective for large-scale offline processing | Higher operational cost for real-time capabilities |

Introduction to Batch Processing and Online Processing

Batch processing in artificial intelligence processes large volumes of data together, enabling efficient handling of tasks such as data aggregation and offline model training. Online processing, conversely, handles data in real time or near real time, supporting immediate decision-making and adaptive AI systems. The choice between the two affects system latency, resource allocation, and scalability in AI applications.
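To make the distinction concrete, here is a minimal Python sketch (the function and class names are illustrative, not from any library) that computes the same average two ways: once over a fully collected dataset, as a batch job would, and incrementally as each value arrives, as an online system would.

```python
from typing import List


def batch_mean(values: List[float]) -> float:
    """Batch style: wait until the whole dataset is collected, then compute once."""
    return sum(values) / len(values)


class OnlineMean:
    """Online style: update the estimate as each value arrives, using O(1) memory."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, x: float) -> float:
        self.count += 1
        # Incremental mean: move the old mean toward x by 1/count of the gap.
        self.mean += (x - self.mean) / self.count
        return self.mean


readings = [4.0, 8.0, 6.0, 2.0]
print(batch_mean(readings))  # 5.0

online = OnlineMean()
for r in readings:
    print(online.update(r))  # prints 4.0, 6.0, 6.0, 5.0 on successive lines
```

Both approaches reach the same final answer; the online version simply has a usable estimate after every input and never needs to store the full dataset.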

Key Differences Between Batch and Online Processing

Batch processing handles large volumes of data collected over time, executing tasks in scheduled groups to optimize resource usage and system efficiency. Online processing handles data in real time, accepting input, processing transactions, and returning feedback immediately, which is crucial for applications that require continuous user interaction. The key difference is latency: batch processing incurs higher delays because records wait for the next scheduled run, while online processing offers low latency by handling each record as it arrives.
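The latency difference can be illustrated with a small simulation. This sketch assumes a hypothetical schedule (records arriving once per second, a batch run starting at t = 4 s and lasting 0.5 s) and an online system that is never backlogged; real systems add queueing and network delays on top.

```python
from typing import List


def batch_latencies(arrivals: List[float], run_at: float, batch_duration: float) -> List[float]:
    """Batch: every record waits for the scheduled run and completes when the run finishes."""
    if any(t > run_at for t in arrivals):
        raise ValueError("this sketch assumes all records arrive before the run starts")
    finish = run_at + batch_duration
    return [finish - t for t in arrivals]


def online_latencies(arrivals: List[float], per_record_cost: float) -> List[float]:
    """Online: each record is processed on arrival (assumes the system is never backlogged)."""
    return [per_record_cost for _ in arrivals]


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


# Hypothetical timeline in seconds.
arrivals = [0.0, 1.0, 2.0, 3.0]
print(mean(batch_latencies(arrivals, run_at=4.0, batch_duration=0.5)))  # 3.0
print(mean(online_latencies(arrivals, per_record_cost=0.125)))          # 0.125
```

Note that in the batch case the earliest record waits longest; its delay is set by the schedule, not by how much work it needs.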

Benefits of Batch Processing in AI Applications

Batch processing in AI applications handles large datasets efficiently, reducing per-record overhead and making better use of computing resources. Processing data in bulk allows models to be trained and analyses to be run over the complete dataset, which can improve accuracy and consistency. This approach supports demanding AI tasks such as retraining deep learning models and large-scale data cleansing, improving system performance and scalability.
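As an illustration of bulk training, the following sketch fits a one-feature linear regression with full-batch gradient descent: every update uses the gradient computed over the entire dataset. The toy data, learning rate, and epoch count are assumptions chosen for the example.

```python
from typing import List, Tuple


def train_full_batch(xs: List[float], ys: List[float],
                     lr: float = 0.05, epochs: int = 500) -> Tuple[float, float]:
    """Fit y = w*x + b by gradient descent on mean squared error over ALL examples."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # One pass over the full dataset per update: the defining trait of batch training.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = train_full_batch(xs, ys)
print(round(w, 3), round(b, 3))  # approximately 2.0 1.0
```

Because each step sees every example, the updates are smooth and reproducible, at the cost of needing the whole dataset available before training starts.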

Advantages of Online Processing for Real-Time AI

Online processing enables real-time AI systems to analyze data and make decisions instantly, improving responsiveness and user experience. It supports continuous input streams, allowing AI models to adapt quickly to new information and changing conditions, which is critical for applications such as autonomous vehicles and fraud detection. Because each input is handled as it arrives rather than waiting for a scheduled run, latency is far lower than with batch processing, providing the timely insights that dynamic environments require.
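Online learning can be sketched with stochastic gradient descent that updates a model after every single example. In this hypothetical setup, the relationship in the data changes partway through the stream (from y = 2x + 1 to y = -x + 3), and the model follows the change without being retrained from scratch. The class name, learning rate, and data are assumptions for illustration.

```python
class OnlineLinearModel:
    """Single-feature linear model updated one example at a time with SGD."""

    def __init__(self, lr: float = 0.1) -> None:
        self.w = 0.0
        self.b = 0.0
        self.lr = lr

    def predict(self, x: float) -> float:
        return self.w * x + self.b

    def update(self, x: float, y: float) -> None:
        # Gradient of the squared error for this single example only.
        err = self.predict(x) - y
        self.w -= self.lr * 2 * err * x
        self.b -= self.lr * 2 * err


model = OnlineLinearModel(lr=0.1)
stream_xs = [0.0, 0.5, 1.0] * 200

for x in stream_xs:
    model.update(x, 2 * x + 1)   # phase 1: data follows y = 2x + 1
print(round(model.w, 2), round(model.b, 2))  # close to 2.0 1.0

for x in stream_xs:
    model.update(x, -x + 3)      # phase 2: conditions change, data follows y = -x + 3
print(round(model.w, 2), round(model.b, 2))  # close to -1.0 3.0
```

A larger learning rate adapts faster to change but reacts more strongly to noise, so real systems tune this trade-off.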

Performance Considerations: Batch vs Online in AI

Batch processing in AI handles large volumes of data efficiently by processing tasks collectively, amortizing fixed overhead across many records and enabling complex model training with optimized resource allocation. Online processing offers real-time data analysis and rapid model updates, which are critical for applications that require immediate responsiveness and adaptive learning, although it typically incurs higher computational cost per record because fixed overhead is paid on every transaction. Performance therefore hinges on balancing throughput against latency: batch processing suits high-volume, non-urgent tasks, while online processing fits dynamic environments that need continuous data ingestion and instant decision-making.
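The throughput-versus-latency balance can be made concrete with a simple cost model, assuming each processing call pays a fixed overhead (for example, loading a model or a network round trip) plus a per-record cost. The function name and numbers below are hypothetical; an online system corresponds to a batch size of 1.

```python
from typing import Tuple


def batch_profile(batch_size: int, overhead_ms: float, per_record_ms: float) -> Tuple[float, float]:
    """Return (time to complete one batch in ms, records processed per second).

    Cost model: each batch pays the fixed overhead once, plus a per-record cost.
    """
    batch_time_ms = overhead_ms + batch_size * per_record_ms
    throughput_per_s = batch_size / batch_time_ms * 1000.0
    return batch_time_ms, throughput_per_s


# Hypothetical costs: 10 ms fixed overhead per call, 1 ms of work per record.
for size in (1, 10, 100, 1000):
    t, thr = batch_profile(size, overhead_ms=10.0, per_record_ms=1.0)
    print(f"batch={size:>4}  completion={t:7.1f} ms  throughput={thr:6.1f} records/s")
```

With these numbers, throughput climbs from about 91 to about 990 records per second as the batch grows from 1 to 1,000 records, while the time to finish a batch grows from 11 ms to just over one second.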

Use Cases: When to Use Batch or Online Processing

Batch processing excels in scenarios involving large volumes of data that require periodic analysis, such as monthly financial reporting or training machine learning models on extensive datasets. Online processing suits real-time applications such as fraud detection, recommendation systems, and dynamic pricing, where immediate data updates and low-latency responses are critical. Choosing between batch and online processing depends on factors such as data size, processing speed requirements, and the need for up-to-date insights in AI-driven decision-making.
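One way to frame the choice is to compare how stale results may acceptably be against the worst-case delay of a batch schedule. The helper below is a hypothetical sketch, not a standard rule; real decisions also weigh cost, data size, and infrastructure.

```python
def choose_processing_mode(max_staleness_s: float,
                           batch_interval_s: float,
                           batch_runtime_s: float) -> str:
    """Hypothetical rule of thumb: use batch only if its worst-case delay is acceptable."""
    # Worst case: a record arrives just after a run's cutoff, waits a full interval
    # for the next run to start, then waits for that run to finish.
    worst_case_batch_delay = batch_interval_s + batch_runtime_s
    return "batch" if worst_case_batch_delay <= max_staleness_s else "online"


# Monthly reporting that tolerates a week of delay, with a nightly 2-hour job.
print(choose_processing_mode(7 * 86400, batch_interval_s=86400, batch_runtime_s=7200))  # batch

# Fraud scoring that must respond within 0.2 s cannot wait for an hourly job.
print(choose_processing_mode(0.2, batch_interval_s=3600, batch_runtime_s=60))  # online
```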

Scalability in Batch and Online Processing Systems

Batch processing systems scale well because scheduled jobs can be split into partitions and run in parallel across many machines, maximizing resource utilization without real-time constraints. Online processing systems require scalable architectures designed to manage many continuous, simultaneous user interactions while maintaining low latency and high availability. Cloud infrastructure and distributed computing frameworks improve scalability in both batch and online AI environments, enabling efficient resource allocation and dynamic workload management.
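Batch jobs typically scale by splitting the data into partitions, processing each partition independently, and combining the partial results. This sketch shows that partition-and-reduce pattern with Python's standard `concurrent.futures` module; the helper names are hypothetical and the per-partition work is a placeholder. Because threads in CPython share the global interpreter lock, CPU-bound work would in practice use `ProcessPoolExecutor` or a distributed framework.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def partition(data: List[int], n_parts: int) -> List[List[int]]:
    """Split data into at most n_parts contiguous chunks of near-equal size."""
    size = max(1, (len(data) + n_parts - 1) // n_parts)  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_partition(chunk: List[int]) -> int:
    # Placeholder for real per-partition work (feature extraction, scoring, etc.).
    return sum(x * x for x in chunk)


def run_batch_job(data: List[int], workers: int = 4) -> int:
    parts = partition(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_partition, parts))  # map step, one task per partition
    return sum(partials)  # reduce step


print(run_batch_job(list(range(1, 101))))  # 338350 (sum of squares of 1..100)
```

Because partitions do not depend on each other, adding workers or machines increases capacity without changing the job's logic.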

Data Latency and Throughput Comparison

Batch processing in artificial intelligence processes large volumes of data at once, yielding higher throughput but greater data latency, because analysis waits until the batch runs. Online processing, by contrast, processes data continuously in real time, minimizing latency while typically achieving lower throughput than batch methods. The choice depends on whether an application prioritizes immediate insights or processing efficiency at high volume.

Security Implications in Batch and Online AI

Batch processing in AI handles large volumes of data at once, which can delay the detection of security breaches but allows comprehensive integrity checks and tightly controlled access. Online processing demands real-time analysis and immediate threat response; its always-on, network-facing endpoints widen the attack surface, so it requires strong encryption in transit and continuous monitoring to prevent data interception. Balancing latency against security controls is critical: batch systems benefit from periodic audits, while online systems rely on adaptive defenses against evolving threats.
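As one example of the integrity checks mentioned above, a batch job can verify a checksum over the entire dataset before running, while an online system can authenticate each message as it arrives. This sketch uses Python's standard `hashlib` and `hmac` modules; the function names, key, and data are hypothetical. HMAC provides integrity and authenticity, not confidentiality; protecting data from interception additionally requires encryption in transit, such as TLS.

```python
import hashlib
import hmac


def verify_batch_file(payload: bytes, expected_sha256_hex: str) -> bool:
    """Batch: one integrity check over the whole dataset before the job runs."""
    return hmac.compare_digest(hashlib.sha256(payload).hexdigest(), expected_sha256_hex)


def sign_message(key: bytes, message: bytes) -> str:
    """Compute an HMAC-SHA256 tag for a single message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_message(key: bytes, message: bytes, tag_hex: str) -> bool:
    """Online: each event is authenticated individually on arrival (constant-time compare)."""
    return hmac.compare_digest(sign_message(key, message), tag_hex)


dataset = b"id,amount\n1,20.00\n2,35.50\n"
digest = hashlib.sha256(dataset).hexdigest()
print(verify_batch_file(dataset, digest))                 # True
print(verify_batch_file(dataset + b"3,9999\n", digest))   # False: tampered dataset

key = b"example-shared-secret"  # hypothetical; load real keys from a secrets store
tag = sign_message(key, b'{"txn": 42}')
print(verify_message(key, b'{"txn": 42}', tag))  # True
print(verify_message(key, b'{"txn": 43}', tag))  # False: altered message
```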

Future Trends: Evolving Processing Techniques in AI

Future trends in artificial intelligence point toward hybrid processing techniques that combine the efficiency of batch processing with the responsiveness of online processing. Advances in edge computing and real-time analytics allow AI systems to train on massive datasets in batch mode while adapting to new inputs through online processing. These methods can improve model accuracy, reduce latency, and support continuous learning in dynamic AI applications.
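A common hybrid technique is micro-batching: records are buffered briefly and processed in small groups, flushing either when the buffer fills or when the oldest record has waited too long. The class below is a minimal single-threaded sketch with hypothetical names; the clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable, List, Optional


class MicroBatcher:
    """Buffers incoming records and flushes when the buffer is full or too old."""

    def __init__(self, max_size: int, max_wait_s: float,
                 handle_batch: Callable[[List[object]], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_size = max_size
        self.max_wait_s = max_wait_s
        self.handle_batch = handle_batch
        self.clock = clock
        self.buffer: List[object] = []
        self.first_arrival: Optional[float] = None

    def add(self, record: object) -> None:
        if not self.buffer:
            self.first_arrival = self.clock()  # start the wait timer with the first record
        self.buffer.append(record)
        if len(self.buffer) >= self.max_size:
            self.flush()   # size limit reached: process now for efficiency
        else:
            self.poll()    # otherwise check the time limit

    def poll(self) -> None:
        """Call periodically (e.g., from a timer) so partial batches still get flushed."""
        if self.buffer and self.clock() - self.first_arrival >= self.max_wait_s:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            batch, self.buffer = self.buffer, []
            self.first_arrival = None
            self.handle_batch(batch)


now = [0.0]  # fake clock for a deterministic demo
flushed: List[List[object]] = []
batcher = MicroBatcher(max_size=3, max_wait_s=0.5,
                       handle_batch=flushed.append, clock=lambda: now[0])
for r in ["a", "b", "c", "d"]:
    batcher.add(r)   # "a", "b", "c" flush together when the buffer fills
now[0] = 0.6
batcher.poll()       # "d" flushes once it has waited 0.5 s
print(flushed)       # [['a', 'b', 'c'], ['d']]
```

Here `max_size` controls how much per-call overhead is amortized, and `max_wait_s` caps the extra latency any record can experience.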

Batch Processing vs Online Processing Infographic

techiny.com
