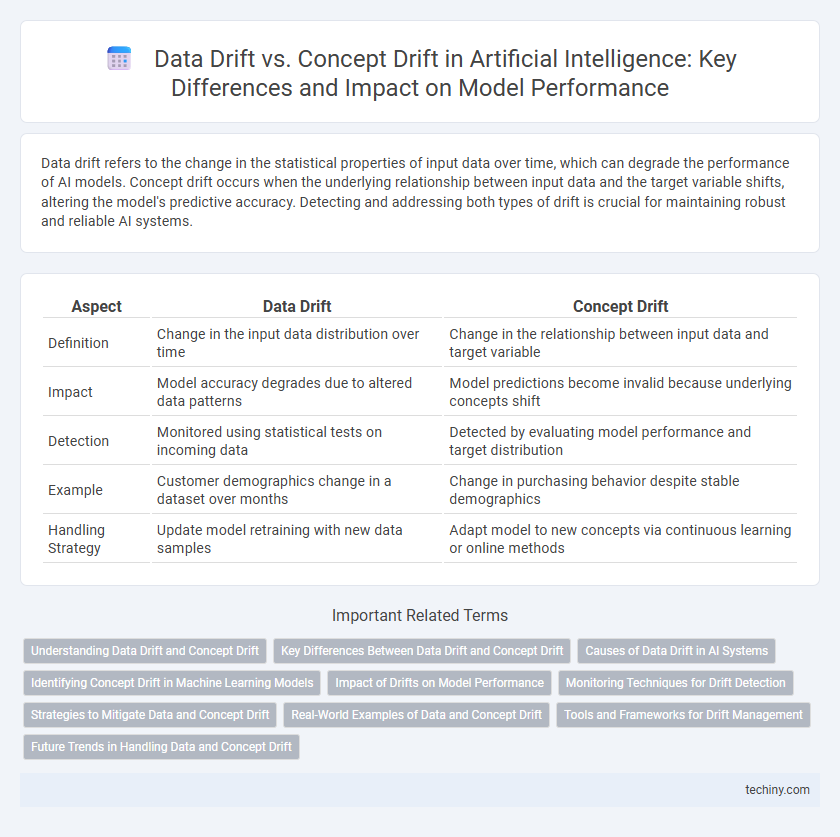

Data drift refers to the change in the statistical properties of input data over time, which can degrade the performance of AI models. Concept drift occurs when the underlying relationship between input data and the target variable shifts, altering the model's predictive accuracy. Detecting and addressing both types of drift is crucial for maintaining robust and reliable AI systems.

Table of Comparison

| Aspect | Data Drift | Concept Drift |

|---|---|---|

| Definition | Change in the input data distribution over time | Change in the relationship between input data and target variable |

| Impact | Model accuracy degrades due to altered data patterns | Model predictions become invalid because underlying concepts shift |

| Detection | Monitored using statistical tests on incoming data | Detected by evaluating model performance and target distribution |

| Example | Customer demographics change in a dataset over months | Change in purchasing behavior despite stable demographics |

| Handling Strategy | Update model retraining with new data samples | Adapt model to new concepts via continuous learning or online methods |

Understanding Data Drift and Concept Drift

Data drift occurs when the statistical properties of input data change over time, leading to degraded model performance, while concept drift refers to changes in the underlying relationship between input features and target variables. Understanding data drift involves monitoring shifts in feature distributions, whereas understanding concept drift requires detecting alterations in the predictive patterns or data labels. Effective handling of both drifts is crucial for maintaining the accuracy and reliability of AI models in dynamic environments.

Key Differences Between Data Drift and Concept Drift

Data drift refers to changes in the input data distribution over time, impacting model performance by altering feature values without changing the underlying relationship with the target variable. Concept drift involves shifts in the actual relationship between input features and the target variable, where the predictive patterns or labels evolve, causing model inaccuracies despite stable input data. Understanding these key differences is essential for implementing effective monitoring strategies in machine learning systems to maintain accuracy and reliability.

Causes of Data Drift in AI Systems

Data drift in AI systems occurs when the statistical properties of input data change over time, leading to degraded model performance. Common causes include evolving user behavior, changes in data collection methods, and external factors like market trends or sensor malfunctions. Monitoring data distributions and implementing adaptive algorithms are crucial to detect and mitigate data drift effects.

Identifying Concept Drift in Machine Learning Models

Concept drift in machine learning models occurs when the underlying data distribution changes, affecting the relationship between input features and target variables, leading to degraded model performance. Identifying concept drift involves monitoring performance metrics such as accuracy, precision, or recall over time and employing statistical tests like the Kolmogorov-Smirnov test or the Page-Hinkley test to detect significant deviations. Implementing real-time drift detection methods and retraining models on updated, relevant data ensures adaptability and sustained accuracy in dynamic environments.

Impact of Drifts on Model Performance

Data drift causes a gradual decline in model accuracy due to shifts in input data distribution, leading to outdated feature representations. Concept drift directly affects the relationship between features and target variables, resulting in erroneous predictions when the underlying data generating process changes. Both drifts degrade model performance over time, necessitating continuous monitoring and retraining to maintain predictive reliability in AI systems.

Monitoring Techniques for Drift Detection

Monitoring techniques for data drift and concept drift rely heavily on statistical tests and machine learning algorithms to identify shifts in data distributions or target concepts over time. Popular methods include the Kolmogorov-Smirnov test and Population Stability Index (PSI) for detecting changes in feature distributions, while concept drift is often monitored using performance metrics like accuracy or F1-score deterioration and adaptive windowing algorithms such as ADWIN. Implementing real-time dashboards and automated alerts further enhances the ability to promptly respond to drift and maintain model reliability.

Strategies to Mitigate Data and Concept Drift

Effective strategies to mitigate data and concept drift include continuous model monitoring and regular retraining using updated datasets to maintain accuracy. Implementing adaptive algorithms that dynamically adjust to changes in data distribution and leveraging feature engineering techniques can help detect shifts early. Deploying ensemble methods and maintaining a robust feedback loop with real-time data also enhance resilience against drift, ensuring sustained AI model performance.

Real-World Examples of Data and Concept Drift

Data drift occurs when the statistical properties of input data change over time, such as seasonal fluctuations in e-commerce customer behavior impacting recommendation systems. Concept drift involves changes in the underlying relationship between input data and target variables, exemplified by credit scoring models becoming less accurate as fraud patterns evolve. Autonomous vehicles illustrate both drifts as sensor data distribution shifts with weather conditions (data drift) and driving behavior adapts to new traffic laws (concept drift).

Tools and Frameworks for Drift Management

Effective drift management in Artificial Intelligence leverages specialized tools and frameworks such as Alibi Detect, Evidently AI, and IBM Watson OpenScale. These platforms offer real-time monitoring and automatic alerts for data drift by analyzing feature distributions, while concept drift detection employs adaptive models and retraining pipelines found in frameworks like River and TensorFlow Extended (TFX). Integration of these tools within AI workflows ensures robust maintenance of model accuracy and operational resilience against evolving data patterns.

Future Trends in Handling Data and Concept Drift

Emerging techniques in handling data drift and concept drift emphasize adaptive machine learning models leveraging real-time monitoring and automated retraining to maintain predictive accuracy. Advances in explainable AI and federated learning facilitate transparent detection and decentralized adaptation to shifting data distributions without compromising privacy. Future trends prioritize seamless integration of drift detection with continuous deployment pipelines, enabling proactive responses to evolving patterns in dynamic environments.

Data Drift vs Concept Drift Infographic

techiny.com

techiny.com