AI explainability focuses on providing human-understandable justifications for algorithmic decisions, enabling users to grasp how specific outputs are generated. In contrast, AI transparency emphasizes openness about the underlying data, models, and processes, fostering trust through visibility into the system's inner workings. Balancing explainability and transparency is crucial for developing accountable and ethical AI systems that users can both understand and trust.

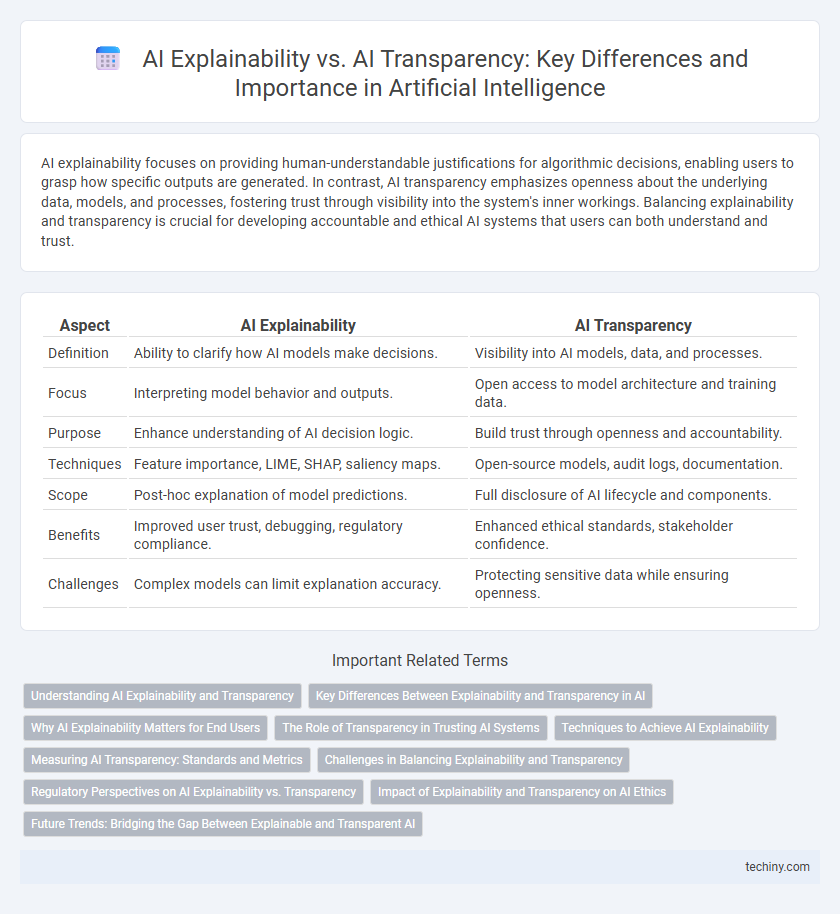

Table of Comparison

| Aspect | AI Explainability | AI Transparency |
|---|---|---|
| Definition | Ability to clarify how an AI model reaches its decisions. | Visibility into AI models, data, and development processes. |
| Focus | Interpreting model behavior and individual outputs. | Disclosure of model architecture, training data, and processes. |
| Purpose | Enhance understanding of AI decision logic. | Build trust through openness and accountability. |
| Techniques | Feature importance, LIME, SHAP, saliency maps, counterfactual explanations. | Open-source models, audit logs, documentation (e.g., model cards). |
| Scope | Intrinsically interpretable models or post-hoc explanations of predictions. | Disclosure across the AI lifecycle and its components. |
| Benefits | Improved user trust, easier debugging, support for regulatory compliance. | Stronger ethical standards, stakeholder confidence. |
| Challenges | Simplified explanations of complex models may not faithfully reflect them. | Protecting sensitive or proprietary information while remaining open. |

Understanding AI Explainability and Transparency

AI explainability refers to the ability to interpret and understand the decision-making process of an artificial intelligence model, allowing stakeholders to see how inputs are transformed into outputs. AI transparency involves openness about the model's architecture, data sources, training methods, and known limitations, enabling trust and accountability. Both matter for ethical AI deployment: together they help make models interpretable, reliable, and auditable.

Key Differences Between Explainability and Transparency in AI

Explainability in AI refers to a model's ability to provide understandable reasons for its decisions, focusing on the interpretability of individual outputs. Transparency involves openness about the AI system's architecture, data sources, and algorithms, enabling stakeholders to examine its internal workings. The key difference is that explainability targets the reasoning behind specific outcomes, while transparency emphasizes visibility into the model's design and operational framework. For example, a loan applicant might be told that a high debt-to-income ratio drove a denial (explainability), while an auditor might review the model's training data and documentation (transparency).
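
To make the distinction concrete, here is a minimal Python sketch built around a hypothetical linear credit-scoring model. The `LinearScorer` class, its feature names, and its `card` fields are illustrative assumptions: `explain` answers an explainability question (why did this applicant get this score?), while `card` holds transparency disclosures that apply to the system as a whole.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LinearScorer:
    """Toy linear scoring model (hypothetical, for illustration only)."""

    weights: dict[str, float]
    bias: float
    # Transparency: facts disclosed about the system as a whole, not about any one decision.
    card: dict[str, str] = field(default_factory=dict)

    def score(self, x: dict[str, float]) -> float:
        return self.bias + sum(w * x[name] for name, w in self.weights.items())

    def explain(self, x: dict[str, float]) -> dict[str, float]:
        # Explainability: how much each feature pushed *this* score up or down.
        return {name: w * x[name] for name, w in self.weights.items()}


model = LinearScorer(
    weights={"income": 0.5, "debt_ratio": -2.0},
    bias=1.0,
    card={  # hypothetical disclosure fields
        "training_data": "historical loan applications (illustrative)",
        "intended_use": "pre-screening, with human review",
        "known_limitations": "not validated for small-business loans",
    },
)
applicant = {"income": 4.0, "debt_ratio": 1.5}
print(model.score(applicant))    # 0.0
print(model.explain(applicant))  # {'income': 2.0, 'debt_ratio': -3.0}
print(model.card["intended_use"])
```

For a linear model, weight times feature value decomposes the score exactly relative to the bias; nonlinear models need approximation methods such as SHAP to produce comparable per-feature attributions.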

Why AI Explainability Matters for End Users

AI explainability matters for end users because it clarifies how algorithms arrive at specific outcomes, fostering trust and enabling informed decisions. Transparency alone reveals the system's structure; explainability translates complex model behavior into insights users can understand, which they need in order to validate results and spot potential biases. Clear explanations strengthen user confidence in AI applications in healthcare, finance, and law, where accountability and ethical considerations are paramount.
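
End users often find "what would change the outcome" more useful than a list of weights. The sketch below computes single-feature counterfactual explanations for a hypothetical linear approval model (`WEIGHTS`, `BIAS`, and `THRESHOLD` are assumptions, not values from any real system). It holds every other feature fixed and ignores whether the suggested change is realistic; practical counterfactual methods search over several features at once and add plausibility constraints.

```python
WEIGHTS = {"income": 0.5, "debt_ratio": -2.0}  # hypothetical linear model
BIAS = -1.0
THRESHOLD = 0.0  # approve when score >= THRESHOLD


def score(x):
    return BIAS + sum(w * x[k] for k, w in WEIGHTS.items())


def counterfactual(x, feature):
    """Value `feature` would need (all else fixed) for the score to reach THRESHOLD."""
    w = WEIGHTS[feature]
    if w == 0:
        return None  # changing this feature cannot move the score
    return x[feature] + (THRESHOLD - score(x)) / w


def explain_for_user(x):
    """Plain-language 'what would change the outcome' statements for a denied applicant."""
    if score(x) >= THRESHOLD:
        return []  # already approved: nothing to explain
    reasons = []
    for feature, w in WEIGHTS.items():
        target = counterfactual(x, feature)
        if target is None:
            continue
        # Positive weight: the feature must rise; negative weight: it must fall.
        direction = "at least" if w > 0 else "at most"
        reasons.append(f"{feature} of {direction} {target:g} (currently {x[feature]:g})")
    return reasons


applicant = {"income": 4.0, "debt_ratio": 1.5}
for line in explain_for_user(applicant):
    print("Approval would require", line)
```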

The Role of Transparency in Trusting AI Systems

AI transparency involves making the design, data, and decision processes of AI systems open to inspection, which strongly influences how much trust users place in these technologies. Transparent AI systems disclose their data inputs, algorithms, and decision logic, enabling stakeholders to verify system reliability and fairness. This openness reduces uncertainty and strengthens confidence in AI applications in critical domains such as healthcare, finance, and autonomous systems.
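
Audit logs are one concrete transparency mechanism. The following sketch assumes a hypothetical record schema (`model_version`, `inputs`, `output`, `decision_path`) and chains each entry to the previous one with a SHA-256 hash, so that later edits to or reordering of past decisions become detectable. The class and field names are illustrative, not a standard format.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes identically.
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained decision log (hypothetical design)."""

    def __init__(self):
        self.entries = []

    def append(self, model_version, inputs, output, decision_path):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "decision_path": decision_path,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = _digest(entry)  # covers every field above, including prev_hash
        self.entries.append(entry)
        return entry

    def verify(self):
        """True if every entry still matches its hash and links to the entry before it."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("credit-model-v3", {"income": 4.0, "debt_ratio": 1.5}, 0.0,
           ["debt_ratio > 1.0", "income < 5.0", "refer to human reviewer"])
log.append("credit-model-v3", {"income": 9.0, "debt_ratio": 0.4}, 1.0,
           ["debt_ratio <= 1.0", "approve"])
print(log.verify())
log.entries[0]["output"] = 1.0  # simulate tampering with a past decision
print(log.verify())
```

The chain alone does not reveal entries deleted from the end of the log; in practice the latest hash would also be stored somewhere the log's operator cannot modify.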

Techniques to Achieve AI Explainability

Techniques to achieve AI explainability include model-agnostic methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which provide post-hoc interpretations of complex models. Interpretable models like decision trees and linear regression offer intrinsic explainability by enabling straightforward understanding of decision pathways. Visualization tools, feature importance analysis, and counterfactual explanations also play critical roles in clarifying AI decision-making processes while enhancing user trust and regulatory compliance.
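
SHAP builds on Shapley values from cooperative game theory. The sketch below computes them exactly by enumerating every coalition of features, with "absent" features replaced by a single baseline value. It is a simplified, brute-force illustration of the underlying quantity, not the `shap` library's API or its estimators; the two-feature `model` is hypothetical and includes an interaction term so the split of credit is visible.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley value of each feature of x for model f.

    A feature absent from a coalition is replaced by its baseline value. Each feature
    needs 2^n model calls, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi


def model(z):
    # Hypothetical model with an interaction term between features 0 and 1.
    return 2 * z[0] + z[0] * z[1]


x, baseline = [1.0, 3.0], [0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
print(sum(phi), model(x) - model(baseline))  # efficiency: the two numbers match
```

Here feature 0 receives 2 from its own term plus half of the interaction (1.5), and feature 1 receives the other half, so the attributions sum to the change in output relative to the baseline.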

Measuring AI Transparency: Standards and Metrics

Measuring AI transparency relies on emerging standards and scoring frameworks rather than a single agreed metric. Indices such as Stanford's Foundation Model Transparency Index score developers on whether they disclose specific items (for example data sources, compute, evaluations, and usage policies), and IEEE 7001-2021, the Standard for Transparency of Autonomous Systems, defines measurable, testable levels of transparency for different stakeholders. Documentation practices such as model cards record decision-making processes and data provenance, enabling stakeholders to assess model behavior and trustworthiness. Explainability outputs, such as feature importance scores from model-agnostic tools like LIME and SHAP, and evidence of compliance with regulations such as the EU's GDPR can support these assessments, although they measure interpretability or legal conformance rather than disclosure itself.
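
Disclosure-based indices generally come down to checking whether specific items have been made public. A minimal sketch of that scoring approach follows; the indicator names and the three-stage grouping are hypothetical placeholders, not the criteria of any published index or standard, and every indicator is weighted equally by assumption.

```python
from __future__ import annotations

# Hypothetical disclosure indicators grouped by lifecycle stage; real indices define
# their own (often much longer) lists with precise criteria for each item.
INDICATORS: dict[str, list[str]] = {
    "upstream": ["data_sources_listed", "data_licenses_stated", "compute_disclosed"],
    "model": ["architecture_described", "evaluations_published", "known_risks_documented"],
    "downstream": ["intended_uses_stated", "usage_policy_published", "incident_channel_provided"],
}


def transparency_scores(disclosed: set[str]) -> dict[str, float]:
    """Fraction of indicators satisfied per stage, plus an unweighted overall score."""
    scores = {
        stage: sum(item in disclosed for item in items) / len(items)
        for stage, items in INDICATORS.items()
    }
    every_item = [item for items in INDICATORS.values() for item in items]
    scores["overall"] = sum(item in disclosed for item in every_item) / len(every_item)
    return scores


disclosed = {
    "data_sources_listed",
    "architecture_described", "evaluations_published",
    "intended_uses_stated", "usage_policy_published", "incident_channel_provided",
}
for stage, score in transparency_scores(disclosed).items():
    print(f"{stage:>10}: {score:.0%}")
```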

Challenges in Balancing Explainability and Transparency

Balancing AI explainability and transparency poses significant challenges because advanced machine learning models often operate as black boxes that are difficult to interpret. Explainability requires simplifying model decisions into human-understandable terms, and a simplified explanation may not faithfully reflect what a complex model actually computes. Transparency requires revealing the underlying algorithms and data, which can expose proprietary information or increase vulnerability to security risks. Achieving a workable balance depends on continued advances in interpretable AI techniques and on regulatory frameworks that address both ethical concerns and technical feasibility.
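
One way to see the explanation-accuracy trade-off is to approximate a black-box model with a deliberately simple surrogate and measure its fidelity, the fraction of inputs on which the two agree. In this sketch the `black_box` function and the single-threshold surrogate are illustrative assumptions; fidelity is measured on the same grid used to fit the surrogate, whereas a real evaluation would use held-out data.

```python
from itertools import product


def black_box(x):
    # Stand-in for an opaque model: approves when the *product* of two features is high,
    # an interaction that no single-feature rule can reproduce exactly.
    return int(x[0] * x[1] > 0.25)


def fit_stump(points, labels):
    """Return (feature, threshold, fidelity) for the rule 'predict 1 if x[feature] > threshold'
    that agrees most often with the given labels."""
    best = (0, float("inf"), -1.0)
    for feature in range(len(points[0])):
        for threshold in sorted({p[feature] for p in points}):
            preds = [int(p[feature] > threshold) for p in points]
            fidelity = sum(pred == y for pred, y in zip(preds, labels)) / len(labels)
            if fidelity > best[2]:
                best = (feature, threshold, fidelity)
    return best


grid = [i / 10 for i in range(11)]
points = list(product(grid, repeat=2))
labels = [black_box(p) for p in points]  # the surrogate learns from the black box's outputs
feature, threshold, fidelity = fit_stump(points, labels)
print(f"explanation: predict 1 if x[{feature}] > {threshold}; fidelity = {fidelity:.0%}")
```

Because the black box depends on the product of both features, the one-rule explanation cannot reach 100% fidelity: the simplicity that makes it readable is exactly what makes it incomplete.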

Regulatory Perspectives on AI Explainability vs. Transparency

Regulatory perspectives on AI explainability emphasize clear, understandable decision logic to ensure accountability and compliance with data protection laws such as the GDPR, which, for solely automated decisions covered by Article 22, requires giving individuals meaningful information about the logic involved (Articles 13 to 15); how far this amounts to a full "right to explanation" remains debated. In contrast, AI transparency focuses on broader disclosure of AI system architectures, data sources, and development processes to foster trust and facilitate audits by regulatory bodies. Balancing explainability and transparency is critical for regulators aiming to mitigate risks related to bias, discrimination, and privacy while promoting ethical AI deployment.

Impact of Explainability and Transparency on AI Ethics

AI explainability enhances user trust by clarifying how algorithms make decisions, enabling better accountability and ethical oversight. AI transparency involves revealing model structures and data sources, which helps auditors detect biases and supports compliance with ethical standards. Together, explainability and transparency reduce the risk of unfair outcomes and promote responsible AI deployment aligned with ethical principles.
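
Transparency about data and predictions is what makes bias checks possible in the first place. As one example, the sketch below computes the demographic parity difference, the largest gap in positive-prediction rates between groups, on a small hypothetical audit sample. Demographic parity is only one of several fairness criteria, and a nonzero gap flags a disparity to investigate rather than proving the model is unfair.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (e.g. approvals) within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: model decisions plus a disclosed group attribute.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(predictions, groups))
print(demographic_parity_difference(predictions, groups))
```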

Future Trends: Bridging the Gap Between Explainable and Transparent AI

Future trends in AI aim to bridge the gap between explainability and transparency by developing methods that provide clear, interpretable insights while preserving visibility into decision-making processes. One research direction is hybrid (neuro-symbolic) models that combine symbolic reasoning with deep learning, aiming to offer both human-understandable explanations and greater access to underlying data flows. Growing regulatory demands and ethical considerations are pushing toward AI systems that balance user trust and performance through integrated approaches to transparency and explainability.

AI Explainability vs AI Transparency Infographic

techiny.com
