Centralized learning aggregates all data into a single system, enabling comprehensive model training but raising concerns about data privacy and security. Federated learning trains models locally on decentralized devices, keeping data at its source and sharing only model updates, which enhances privacy and avoids transmitting raw data. Balancing model accuracy with privacy preservation is essential when choosing between centralized and federated learning approaches.

Table of Comparison

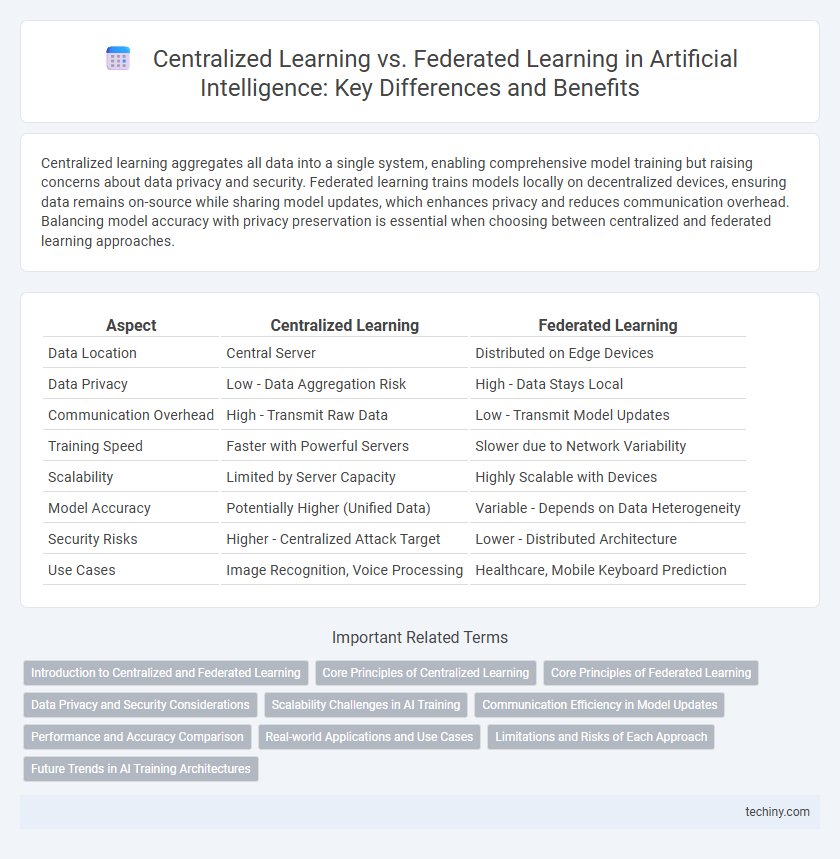

| Aspect | Centralized Learning | Federated Learning |
|---|---|---|
| Data Location | Central Server | Distributed on Edge Devices |
| Data Privacy | Low - Data Aggregation Risk | High - Data Stays Local |
| Communication Overhead | High - Transmit Raw Data | Low - Transmit Model Updates |
| Training Speed | Faster with Powerful Servers | Slower due to Network Variability |
| Scalability | Limited by Server Capacity | Highly Scalable with Devices |
| Model Accuracy | Potentially Higher (Unified Data) | Variable - Depends on Data Heterogeneity |
| Security Risks | Higher - Centralized Attack Target | Lower - Distributed Architecture |
| Use Cases | Image Recognition, Voice Processing | Healthcare, Mobile Keyboard Prediction |

Introduction to Centralized and Federated Learning

Centralized learning involves aggregating all training data into a single server where machine learning models are trained, offering high accuracy but posing privacy risks and communication bottlenecks. Federated learning distributes model training across multiple edge devices, enabling local data processing that preserves data privacy and reduces bandwidth usage. This decentralized approach allows collaborative model improvement while maintaining user data confidentiality.

Core Principles of Centralized Learning

Centralized learning involves aggregating data from multiple sources into a single, centralized server where machine learning models are trained with full access to the entire dataset, enabling comprehensive pattern recognition and feature extraction. This approach relies on high-quality data aggregation and centralized computational resources to optimize model accuracy and performance. Core principles include data centralization, powerful computational infrastructure, and straightforward model updates through global dataset availability.

Core Principles of Federated Learning

Federated learning emphasizes decentralized data processing by training machine learning models across multiple devices or servers while keeping data localized. Core principles include data privacy, as raw data never leaves local devices, and model aggregation, where only model updates are sent to a central server that combines them to refine the global model. This approach mitigates data security risks and avoids transferring raw data, unlike traditional centralized learning methods.
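The aggregation step described above can be sketched in a few lines. The text does not name a specific aggregation rule, so this example assumes sample-count-weighted averaging in the style of federated averaging (FedAvg); the function name, the client vectors, and the sample counts are all hypothetical.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighting each by its local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, w in enumerate(weights):
            # Clients with more local data contribute proportionally more.
            averaged[i] += w * size / total
    return averaged


# Three hypothetical clients holding different amounts of local data.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 30, 60]
global_weights = federated_average(updates, sizes)
```

With these made-up numbers the global vector works out to roughly `[4.0, 5.0]`, pulled toward the third client because it holds most of the data. Only the weight vectors and counts reach the server; the raw samples never appear in the computation.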

Data Privacy and Security Considerations

Centralized learning aggregates all data in a single location, which increases the risk of data breaches and unauthorized access and raises significant privacy concerns. Federated learning processes data locally on edge devices and transmits only model updates, minimizing exposure of sensitive data. Encryption techniques and secure aggregation protocols further strengthen federated learning's ability to protect user data from potential threats during model training.
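One way to picture secure aggregation is pairwise masking: each pair of clients agrees on a random mask that one adds and the other subtracts, so the server sees only masked updates yet still recovers the correct sum. The sketch below is a toy illustration under strong assumptions, not a real protocol: a single seeded generator stands in for per-pair key agreement, no client drops out, and all names are hypothetical.

```python
import random
from typing import List


def mask_updates(updates: List[List[float]], seed: int = 0) -> List[List[float]]:
    """Add pairwise-cancelling random masks to each client's update.

    For each pair (i, j) with i < j, client i adds a shared mask and
    client j subtracts it, so the masks vanish once all updates are summed.
    """
    rng = random.Random(seed)  # assumption: stands in for per-pair shared secrets
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            mask = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
            for k in range(dim):
                masked[i][k] += mask[k]
                masked[j][k] -= mask[k]
    return masked


def sum_vectors(vectors: List[List[float]]) -> List[float]:
    """Sum a list of equal-length vectors coordinate by coordinate."""
    return [sum(column) for column in zip(*vectors)]


updates = [[0.5, -1.0], [1.5, 2.0], [-0.25, 0.75]]
masked = mask_updates(updates)
true_sum = sum_vectors(updates)
masked_sum = sum_vectors(masked)  # matches true_sum up to float rounding
```

Each individual masked vector looks like noise to the server, while the sum matches the unmasked sum up to floating-point rounding. Production protocols typically work over integers with modular arithmetic and handle dropped clients, which this sketch leaves out.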

Scalability Challenges in AI Training

Centralized learning faces significant scalability challenges due to its reliance on a single server that collects and processes vast amounts of data, leading to bottlenecks in communication and computation. Federated learning addresses these issues by distributing model training across multiple decentralized devices, reducing data transfer loads and enabling parallel processing. However, federated learning struggles with heterogeneity in device capabilities and data distribution, complicating synchronization and model convergence at scale.

Communication Efficiency in Model Updates

Centralized learning requires every device to upload its raw data to a central server, which can create significant communication overhead and network congestion when datasets are large. Federated learning instead transmits only model updates or gradients, optionally encrypted, and typically relies on periodic aggregation rounds rather than continuous data transfer. Techniques like model compression, update sparsification, and asynchronous communication further improve communication efficiency in federated learning systems.
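Update sparsification, one of the techniques named above, can be illustrated with a top-k filter: the client sends only the k largest-magnitude entries of its update as index/value pairs, and the server fills the rest with zeros. This is a minimal sketch; the function names and the example vector are hypothetical, and real systems often also accumulate the dropped residual locally.

```python
from typing import Dict, List


def sparsify_top_k(update: List[float], k: int) -> Dict[int, float]:
    """Keep only the k entries with the largest magnitude, as index -> value."""
    if k < 0:
        raise ValueError("k must be non-negative")
    by_magnitude = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in sorted(by_magnitude[:k])}


def densify(sparse: Dict[int, float], length: int) -> List[float]:
    """Rebuild a dense vector on the server, treating missing entries as zero."""
    dense = [0.0] * length
    for index, value in sparse.items():
        dense[index] = value
    return dense


update = [0.01, -0.9, 0.05, 0.4, -0.02, 0.0]
sparse = sparsify_top_k(update, k=2)      # {1: -0.9, 3: 0.4}
restored = densify(sparse, len(update))   # [0.0, -0.9, 0.0, 0.4, 0.0, 0.0]
```

Here two of six values are transmitted. The saving grows with model size, at the cost of discarding small coordinates in each round.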

Performance and Accuracy Comparison

Centralized learning typically achieves higher accuracy due to the availability of complete datasets during model training, enabling extensive pattern recognition and optimization. Federated learning, while preserving data privacy and reducing communication overhead by training models locally on decentralized data, may experience slightly lower performance because of data heterogeneity and limited access to global information. Performance trade-offs between centralized and federated learning depend on factors such as data distribution, network latency, and aggregation methods, with federated learning showing promising results in scenarios prioritizing privacy and scalability.

Real-world Applications and Use Cases

Centralized learning is widely used in applications where data can be aggregated securely, such as image recognition in autonomous vehicles and recommendation systems for e-commerce platforms. Federated learning enables privacy-preserving AI by allowing edge devices like smartphones and IoT sensors to collaboratively train models without sharing raw data, proving essential in healthcare diagnostics and personalized finance. Industries leverage federated learning for regulatory compliance while maintaining model accuracy, particularly in sectors like banking, telecommunications, and smart cities.

Limitations and Risks of Each Approach

Centralized learning faces risks related to data privacy breaches and single points of failure because it aggregates sensitive information in one location, increasing vulnerability to cyberattacks. Federated learning mitigates these risks by keeping data decentralized but struggles with challenges like heterogeneous data distribution, the communication cost of repeated update rounds, and potential model poisoning attacks that can degrade overall performance. Both approaches require careful consideration of security protocols and system robustness to manage their inherent limitations effectively.
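To see why model poisoning matters, consider what a single malicious update does to a plain average. The sketch below uses made-up numbers and compares the mean with a coordinate-wise median. The median is a swapped-in illustration of a more robust reducer, not something the text prescribes, and it is not a complete defense.

```python
from statistics import mean, median
from typing import Callable, List, Sequence


def aggregate(
    updates: List[List[float]],
    reducer: Callable[[Sequence[float]], float],
) -> List[float]:
    """Combine client updates coordinate by coordinate with the given reducer."""
    return [reducer(column) for column in zip(*updates)]


honest = [[0.9, 1.1], [1.0, 1.0], [1.1, 0.9], [1.0, 1.0]]
poisoned = honest + [[100.0, -100.0]]  # one hypothetical malicious client

mean_clean = aggregate(honest, mean)          # close to [1.0, 1.0]
mean_poisoned = aggregate(poisoned, mean)     # dragged far from the honest values
median_poisoned = aggregate(poisoned, median) # stays at the honest consensus
```

With four honest clients near `[1.0, 1.0]`, one extreme update moves the mean to about `[20.8, -19.2]`, while the coordinate-wise median remains `[1.0, 1.0]`.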

Future Trends in AI Training Architectures

Centralized learning faces challenges in data privacy and scalability, driving interest towards federated learning, which allows decentralized model training across distributed devices. Future AI training architectures will increasingly leverage hybrid models that combine centralized data orchestration with federated updates to optimize performance while ensuring privacy. Innovations in secure multi-party computation and differential privacy will enhance federated learning's adoption across industries such as healthcare, finance, and IoT.
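Differential privacy in a federated setting is often described as two steps applied to each client update before it is shared: clip the update to a maximum L2 norm, then add Gaussian noise scaled to that norm. The sketch below shows only this mechanism under assumed parameter names (`max_norm`, `noise_multiplier`); it does not compute a privacy budget and should not be read as a calibrated implementation.

```python
import math
import random
from typing import List


def clip_by_l2_norm(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm == 0.0 or norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def privatize(
    update: List[float],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> List[float]:
    """Clip the update, then add Gaussian noise with std = noise_multiplier * max_norm."""
    clipped = clip_by_l2_norm(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


rng = random.Random(42)  # seeded only to make the illustration repeatable
private_update = privatize([3.0, 4.0], max_norm=1.0, noise_multiplier=0.5, rng=rng)
```

Clipping bounds how much any single client can influence the aggregate, and the noise masks individual contributions. Larger `noise_multiplier` values give stronger privacy at the cost of accuracy, which mirrors the accuracy-versus-privacy trade-off discussed throughout.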

Centralized Learning vs Federated Learning Infographic

techiny.com
