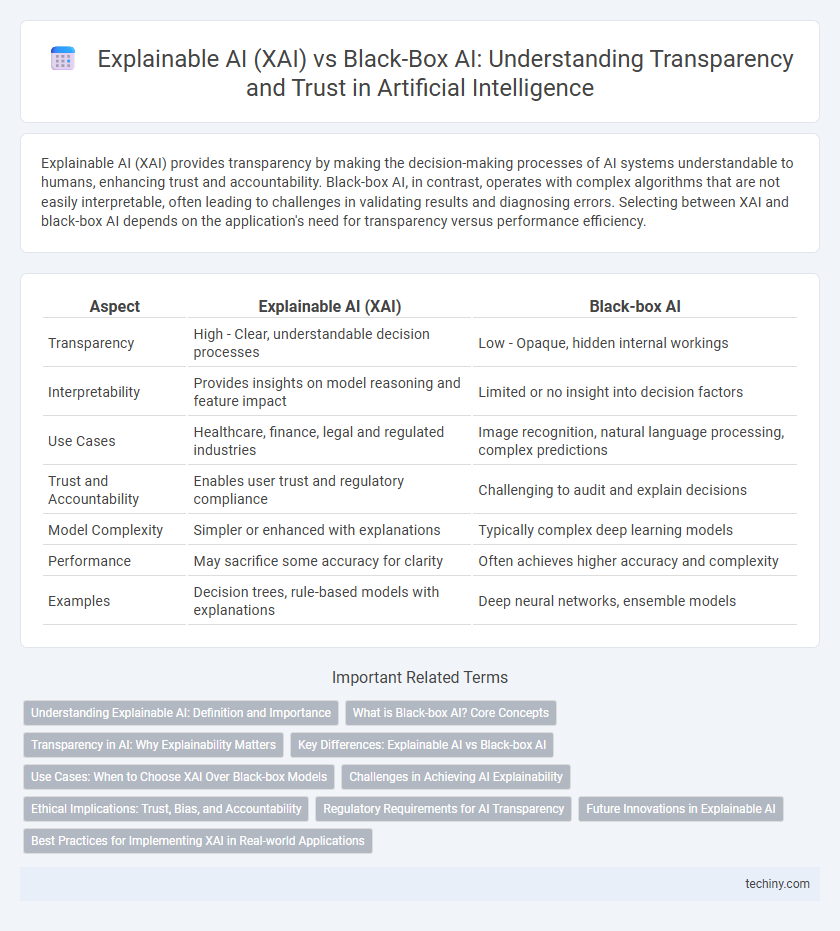

Explainable AI (XAI) provides transparency by making the decision-making processes of AI systems understandable to humans, enhancing trust and accountability. Black-box AI, in contrast, relies on complex models that are not easily interpretable, which makes it harder to validate results and diagnose errors. Choosing between XAI and black-box AI depends on how the application weighs transparency against raw predictive performance.

Table of Comparison

| Aspect | Explainable AI (XAI) | Black-box AI |
|---|---|---|
| Transparency | High: clear, understandable decision processes | Low: opaque, hidden internal workings |
| Interpretability | Provides insights on model reasoning and feature impact | Limited or no insight into decision factors |
| Use Cases | Healthcare, finance, legal and regulated industries | Image recognition, natural language processing, complex predictions |
| Trust and Accountability | Enables user trust and regulatory compliance | Challenging to audit and explain decisions |
| Model Complexity | Simpler models, or complex models augmented with explanations | Typically complex deep learning models |
| Performance | May sacrifice some accuracy for clarity | Often achieves higher accuracy on complex tasks |
| Examples | Decision trees, rule-based models with explanations | Deep neural networks, ensemble models |

Understanding Explainable AI: Definition and Importance

Explainable AI (XAI) refers to machine learning models designed to provide transparent, interpretable, and understandable outputs, enhancing trust and accountability in AI-driven decisions. Unlike black-box AI systems, whose internal processes remain hidden, XAI enables stakeholders to comprehend how inputs influence outputs, which is crucial in high-stakes domains like healthcare, finance, and autonomous systems. This transparency helps expose biases, facilitates regulatory compliance, and supports ethical AI deployment by allowing AI behavior to be validated and audited.

What is Black-box AI? Core Concepts

Black-box AI refers to machine learning models whose internal decision-making processes are not transparent or interpretable by humans, often seen in deep neural networks and complex ensemble methods. These models prioritize predictive accuracy over interpretability, making it difficult to understand how inputs are transformed into outputs. Core concepts include opacity, non-linearity, and the challenge of extracting meaningful explanations from high-dimensional feature interactions.
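One common way to probe an opaque model from the outside is permutation importance: shuffle one input feature at a time and measure how much the model's error grows. The sketch below is a minimal illustration, not a production method. `opaque_model` is a hypothetical stand-in for a black-box model, and the data is synthetic.

```python
import random


def opaque_model(row):
    """Stand-in for a black-box model: callers see only inputs and outputs.

    The formula is hypothetical; the third feature is deliberately ignored.
    """
    return 3.0 * row[0] + 0.5 * row[1] ** 2


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, seed=0):
    """Return, per feature, the rise in error after shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled_rows = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(model, shuffled_rows, targets) - baseline)
    return importances


# Synthetic data: the targets are the model's own outputs, so baseline error is zero.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [opaque_model(r) for r in rows]

scores = permutation_importance(opaque_model, rows, targets, n_features=3)
print([round(s, 3) for s in scores])
```

A feature the model never uses gets an importance of zero, while features it relies on heavily produce a large jump in error. The result says which inputs matter, not how the model combines them, so it is only a partial explanation.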

Transparency in AI: Why Explainability Matters

Explainable AI (XAI) provides transparency by revealing the decision-making processes behind AI models, enabling users to understand, trust, and verify outcomes. In contrast, black-box AI models offer little insight into their logic, which limits accountability and increases risk in critical applications like healthcare or finance. Transparency in AI is crucial for ethical compliance, enhancing user confidence, and facilitating regulatory approval by making AI behavior interpretable and auditable.

Key Differences: Explainable AI vs Black-box AI

Explainable AI (XAI) provides transparent, interpretable models that allow users to understand how decisions are made, enhancing trust and accountability in AI systems. Black-box AI relies on complex algorithms like deep neural networks, where internal processes are opaque, making it difficult to explain outputs or identify biases. The key difference lies in XAI's emphasis on interpretability and clarity versus the high complexity and lack of transparency inherent in black-box models.
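To make the contrast concrete, here is a minimal sketch of a transparent model: a rule-based scorer that returns the reasons behind each decision along with the decision itself. The rules, point values, threshold, and field names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str                        # human-readable reason shown to the user
    applies: Callable[[dict], bool]  # predicate over an applicant record
    points: int                      # contribution to the score when the rule fires


# Hypothetical rules and threshold, for illustration only.
RULES = [
    Rule("income at least 40,000", lambda a: a["income"] >= 40_000, 2),
    Rule("no missed payments in the last year", lambda a: a["missed_payments"] == 0, 2),
    Rule("debt ratio above 0.5", lambda a: a["debt_ratio"] > 0.5, -3),
]
APPROVAL_THRESHOLD = 3


def decide(applicant: dict) -> tuple[bool, int, list[str]]:
    """Return (approved, score, reasons) so each decision carries its own explanation."""
    score = 0
    reasons = []
    for rule in RULES:
        if rule.applies(applicant):
            score += rule.points
            reasons.append(f"{rule.points:+d}: {rule.name}")
    return score >= APPROVAL_THRESHOLD, score, reasons


approved, score, reasons = decide(
    {"income": 52_000, "missed_payments": 0, "debt_ratio": 0.6}
)
print(approved, score)  # False 1
for reason in reasons:
    print(reason)
```

Every point in the score can be traced to a named rule, which is exactly what a deep neural network cannot offer without additional explanation tooling. The cost is that a handful of hand-written rules will usually capture less of the data's structure than a learned model.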

Use Cases: When to Choose XAI Over Black-box Models

Explainable AI (XAI) is crucial in high-stakes industries like healthcare, finance, and legal systems, where transparency and trust are mandatory for compliance and risk mitigation. Black-box AI models, while powerful in pattern recognition and predictive accuracy, are preferred in applications with lower regulatory scrutiny or where rapid, large-scale data processing is vital, such as recommendation engines and high-volume fraud screening (although fraud decisions that affect customers may still need to be explained). Choosing XAI over black-box models depends on the need for interpretability, ethical considerations, and the impact of AI decisions on human lives and regulatory policies.

Challenges in Achieving AI Explainability

Challenges in achieving AI explainability stem primarily from the inherent complexity of black-box models such as deep neural networks, which obscure decision-making behind many non-transparent layers. The trade-off between model accuracy and interpretability makes it hard to provide clear, actionable insights without compromising performance. Additionally, the lack of standardized metrics for evaluating explainability complicates the comparison and validation of XAI techniques across diverse applications.

Ethical Implications: Trust, Bias, and Accountability

Explainable AI (XAI) enhances trust by providing transparent decision-making processes, enabling users to understand and verify outcomes. Black-box AI often obscures the rationale behind predictions, raising concerns about bias and ethical accountability in high-stakes applications. Ensuring clear explanations supports ethical AI deployment, fostering responsibility and mitigating hidden biases inherent in opaque models.

Regulatory Requirements for AI Transparency

Explainable AI (XAI) helps organizations meet regulatory requirements such as the EU's GDPR and the AI Act, which impose transparency and accountability obligations on automated decision-making. In contrast, black-box AI models, despite their high accuracy, often struggle to meet these transparency standards due to their opaque internal mechanisms. Regulatory frameworks increasingly require AI systems to offer interpretable outputs to ensure ethical compliance and enhance user trust.

Future Innovations in Explainable AI

Future innovations in Explainable AI (XAI) focus on advancing transparency and interpretability through the development of hybrid models that combine symbolic AI with deep learning. Enhanced techniques like counterfactual explanations, causal reasoning, and interactive visualization tools aim to provide end-users and regulators with clearer insights into AI decision-making processes. Research in XAI prioritizes integrating ethical considerations and real-time explainability into autonomous systems to increase trust and accountability across industries.
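A counterfactual explanation answers the question "what would have to change for the decision to come out differently?" The sketch below shows the idea for the simplest possible case, a linear scoring model where the answer has a closed form. The weights, bias, and feature names are hypothetical. Real counterfactual methods also have to handle non-linear models and check that the suggested change is plausible.

```python
# Hypothetical linear credit model; weights, bias, and feature names are illustrative.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0


def score(applicant: dict) -> float:
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approve(applicant: dict) -> bool:
    return score(applicant) >= 0.0


def single_feature_counterfactuals(applicant: dict) -> dict:
    """For a rejected applicant, give the value each feature would need, with all
    other features unchanged, to bring the score up to the decision boundary."""
    gap = -score(applicant)  # how far the score must rise to reach zero
    if gap <= 0:
        return {}  # already approved, nothing to change
    return {
        name: applicant[name] + gap / weight
        for name, weight in WEIGHTS.items()
        if weight != 0  # a zero-weight feature can never change the outcome
    }


applicant = {"income_k": 30, "debt_ratio": 0.4, "years_employed": 2}
print(approve(applicant))
for name, needed in single_feature_counterfactuals(applicant).items():
    print(f"{name}: {applicant[name]} -> about {needed:.2f}")
```

For this applicant the output reads as three alternative routes to approval: a higher income, a lower debt ratio, or a longer employment history. That kind of statement is often more useful to an end-user than a list of feature weights.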

Best Practices for Implementing XAI in Real-world Applications

Implementing Explainable AI (XAI) in real-world applications requires prioritizing transparency by using interpretable models like decision trees or rule-based systems alongside advanced visualization tools for user understanding. Best practices involve continuous monitoring and validation of AI outputs to ensure accountability and mitigate biases, enhancing trustworthiness in critical sectors such as healthcare and finance. Emphasizing collaboration between AI developers, domain experts, and end-users ensures explanations are both technically accurate and contextually meaningful.
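As one example of continuous monitoring, the sketch below computes approval rates per group from a decision log and raises a flag when the gap between groups exceeds a threshold. The log entries, group labels, and threshold are hypothetical, and the approval-rate gap is only one of several fairness measures a team might track.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical decision log and alert threshold, for illustration only.
log = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
ALERT_THRESHOLD = 0.2

gap = parity_gap(log)
print(approval_rates(log))  # {'A': 0.75, 'B': 0.25}
if gap > ALERT_THRESHOLD:
    print(f"Approval-rate gap of {gap:.2f} exceeds {ALERT_THRESHOLD}; review needed")
```

A flagged gap is a prompt for investigation by developers and domain experts, not proof of unfair treatment. The groups may differ in ways the decision legitimately depends on.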

Explainable AI (XAI) vs Black-box AI Infographic

techiny.com
