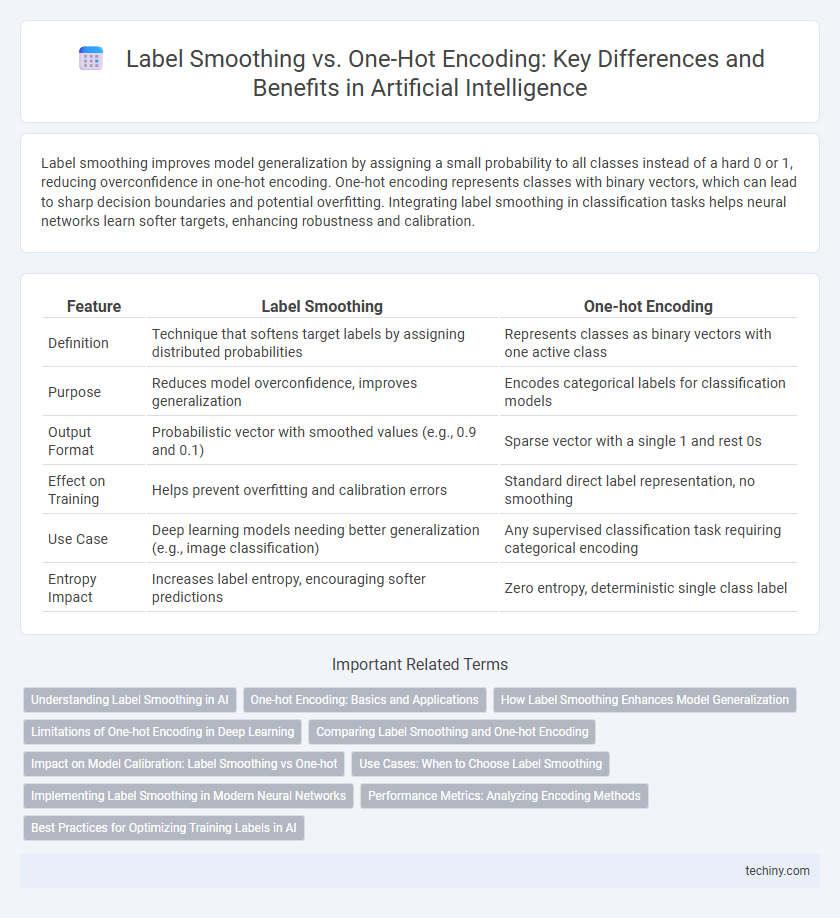

Label smoothing improves model generalization by assigning a small probability to all classes instead of a hard 0 or 1, reducing overconfidence in one-hot encoding. One-hot encoding represents classes with binary vectors, which can lead to sharp decision boundaries and potential overfitting. Integrating label smoothing in classification tasks helps neural networks learn softer targets, enhancing robustness and calibration.

Table of Comparison

| Feature | Label Smoothing | One-hot Encoding |

|---|---|---|

| Definition | Technique that softens target labels by assigning distributed probabilities | Represents classes as binary vectors with one active class |

| Purpose | Reduces model overconfidence, improves generalization | Encodes categorical labels for classification models |

| Output Format | Probabilistic vector with smoothed values (e.g., 0.9 and 0.1) | Sparse vector with a single 1 and rest 0s |

| Effect on Training | Helps prevent overfitting and calibration errors | Standard direct label representation, no smoothing |

| Use Case | Deep learning models needing better generalization (e.g., image classification) | Any supervised classification task requiring categorical encoding |

| Entropy Impact | Increases label entropy, encouraging softer predictions | Zero entropy, deterministic single class label |

Understanding Label Smoothing in AI

Label smoothing in AI is a technique used to improve model generalization by softening the target labels, assigning a small probability to incorrect classes instead of a hard one-hot encoding. This approach reduces overconfidence in neural networks, leading to better calibration and robustness during training. Implementing label smoothing helps prevent the network from becoming overly confident, which can improve performance on unseen data and mitigate issues like overfitting.

One-hot Encoding: Basics and Applications

One-hot encoding represents categorical variables as binary vectors where only one element is set to 1, ensuring a clear and unique class distinction. This method is fundamental in machine learning algorithms such as neural networks and decision trees, enabling efficient classification and prediction tasks. One-hot encoding is widely applied in natural language processing, image recognition, and recommendation systems to handle categorical data without implying ordinal relationships.

How Label Smoothing Enhances Model Generalization

Label smoothing improves model generalization by preventing overconfidence in neural network predictions, thereby reducing the risk of overfitting associated with one-hot encoding. By assigning a small probability to all classes instead of a hard 0 or 1 label, it encourages the model to be less certain and more adaptable to unseen data. This technique promotes better calibration of predicted probabilities, enhancing robustness and overall performance on diverse datasets.

Limitations of One-hot Encoding in Deep Learning

One-hot encoding in deep learning often leads to high-dimensional sparse vectors, which increase computational complexity and memory usage, limiting model scalability. This representation assumes mutual exclusivity among classes, failing to capture label similarities or uncertainties, reducing model generalization in ambiguous scenarios. Label smoothing addresses these limitations by distributing some probability mass to non-target classes, improving calibration and robustness against overfitting and noisy labels.

Comparing Label Smoothing and One-hot Encoding

Label smoothing enhances model generalization by assigning a small probability to incorrect classes, reducing overconfidence in predictions compared to one-hot encoding which represents labels with a single fixed 1 for the true class and 0 for others. One-hot encoding creates sparse and deterministic targets, often leading to higher variance during training, while label smoothing introduces soft targets that improve calibration and robustness in neural networks. Research shows label smoothing decreases overfitting and improves accuracy on image classification benchmarks over traditional one-hot encoding schemes.

Impact on Model Calibration: Label Smoothing vs One-hot

Label smoothing improves model calibration by preventing overconfident predictions, distributing some probability mass to non-target classes, which leads to better uncertainty estimation compared to one-hot encoding. One-hot encoding assigns a probability of 1 to the true class and 0 to others, often causing sharp and overconfident outputs that reduce calibration quality. Empirical studies demonstrate that models trained with label smoothing achieve lower expected calibration error (ECE) and enhanced generalization in classification tasks.

Use Cases: When to Choose Label Smoothing

Label smoothing is ideal for tasks involving noisy labels or when preventing overconfidence in deep learning models such as image classification and natural language processing. It enhances generalization by softening the target distribution, reducing the risk of overfitting compared to strict one-hot encoding. Use label smoothing especially in scenarios requiring better calibration and robustness against mislabeled data.

Implementing Label Smoothing in Modern Neural Networks

Implementing label smoothing in modern neural networks enhances model generalization by distributing some probability mass from the true label to other classes, mitigating overconfidence during training. This technique typically adjusts the target label vector from a strict one-hot encoding, such as converting a label with a 1.0 probability at the true class to a smoothed vector with 0.9 for the true class and 0.1 distributed among other classes. Incorporating label smoothing in architectures like convolutional neural networks (CNNs) and transformers helps reduce overfitting and improves calibration, especially in classification tasks with large output spaces.

Performance Metrics: Analyzing Encoding Methods

Label smoothing enhances model performance by reducing overconfidence, leading to improved generalization and higher accuracy on validation sets compared to one-hot encoding. One-hot encoding creates sharp target distributions that can cause models to become overly confident, often resulting in lower robustness measured by metrics such as precision, recall, and F1-score. Empirical evaluations demonstrate that label smoothing yields better calibration and reduced overfitting, reflected in improved AUC-ROC and log-loss values across diverse AI classification tasks.

Best Practices for Optimizing Training Labels in AI

Label smoothing enhances model generalization by softening the target labels, preventing overconfidence and improving robustness against noisy data. One-hot encoding provides precise target representation but can lead to overfitting and reduced model calibration. Best practices recommend combining label smoothing with one-hot encoding to balance strict target alignment and improved generalization during AI model training.

Label Smoothing vs One-hot Encoding Infographic

techiny.com

techiny.com